Assignment requirements: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/2822

1. Download a long Chinese novel.

2. Read the text to be analyzed from the file.

f = open("红楼梦.txt", "r", encoding='gb18030')

novel = f.read()

f.close()

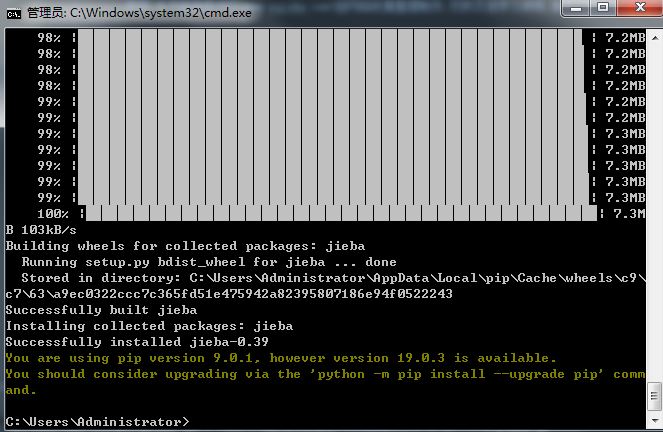

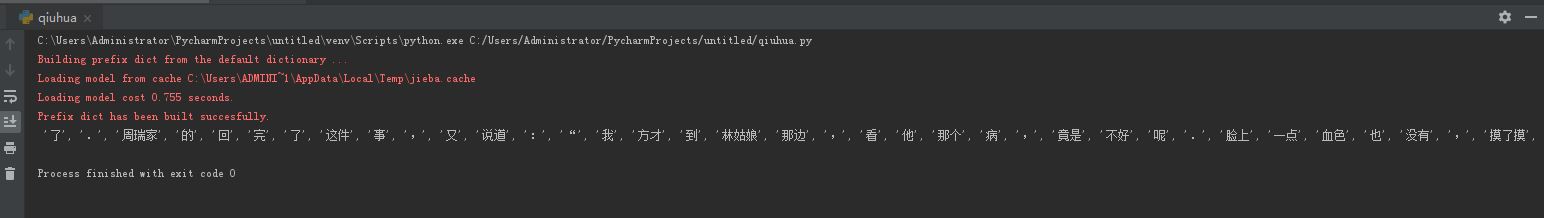
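The same read can also be written with a `with` block, which closes the file even if reading fails. The following is only a minimal sketch: the helper name `read_text` and the list of fallback encodings are assumptions made for illustration and are not part of the assignment.

```python
def read_text(path, encodings=("utf-8", "gb18030")):
    """Return the file's text, trying each encoding in turn (hypothetical helper)."""
    last_error = None
    for enc in encodings:
        try:
            # The with block closes the file even when decoding raises
            with open(path, "r", encoding=enc) as f:
                return f.read()
        except UnicodeDecodeError as err:
            last_error = err  # remember the failure and try the next encoding
    raise last_error
```

With this helper, `novel = read_text("红楼梦.txt")` would replace the three lines above, provided the file is in one of the listed encodings.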

3. Install jieba and use it for Chinese word segmentation.

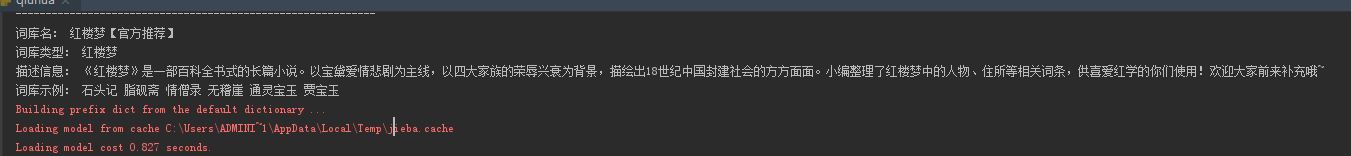

4. Update the dictionary with vocabulary specific to the text being analyzed.

jieba.add_word('林姑娘')

jieba.load_userdict(r'红楼梦词库.txt')

5. Generate the word frequency counts.

wordsCount = {}

for i in set(tokens):  # count each distinct token once

    wordsCount[i] = tokens.count(i)
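Calling `tokens.count(i)` inside the loop rescans the whole list on each iteration, which gets slow on a full novel. `collections.Counter` from the standard library builds the same mapping in a single pass. A sketch, with a made-up token list standing in for the real `tokens`:

```python
from collections import Counter

tokens = ["宝玉", "黛玉", "宝玉", "贾母", "宝玉", "黛玉"]  # illustrative data only
wordsCount = Counter(tokens)      # one pass over the list
top = wordsCount.most_common(2)   # (word, count) pairs, highest count first
print(top)                        # [('宝玉', 3), ('黛玉', 2)]
```

`Counter` is a `dict` subclass, so `wordsCount.items()` still works in the sorting step.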

6. Sort by frequency.

top = list(wordsCount.items())

top.sort(key=lambda x: x[1], reverse=True)

7. Exclude function words: pronouns, articles, conjunctions, and other stop words.

f = open("stop_chinese.txt", "r", encoding='utf-8')

stops = f.read().split()

f.close()

tokens = [token for token in jieba.lcut(novel) if token not in stops]
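Membership tests against a list are linear, so converting the stop words to a `set` helps on long texts. The sketch below also drops whitespace and single-character tokens; that extra filter is an assumption added here, not something the assignment requires. The helper name `filter_tokens` and the sample data are illustrative.

```python
def filter_tokens(words, stop_words):
    """Keep words that are not stop words, not blank, and longer than one character."""
    stop_set = set(stop_words)  # constant-time membership tests
    return [w for w in words
            if w not in stop_set and w.strip() and len(w) > 1]

words = ["宝玉", "的", "了", "黛玉", "\n", "说", "宝玉"]  # illustrative tokens
print(filter_tokens(words, ["的", "了"]))  # ['宝玉', '黛玉', '宝玉']
```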

8. Output the 20 most frequent words and save the result to a file.

import pandas as pd

top.sort(key=lambda x: x[1], reverse=True)

pd.DataFrame(data=top[0:20]).to_csv('top_chinese20.csv', encoding='utf-8')
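If pandas is not available, the standard-library `csv` module can write a similar table. This is a swapped-in alternative, not the method used above; the helper name `save_top` and the header names `word` and `count` are assumptions for illustration.

```python
import csv

def save_top(pairs, path, n=20):
    """Write the n most frequent (word, count) pairs to a CSV file with a header row."""
    ranked = sorted(pairs, key=lambda x: x[1], reverse=True)[:n]
    # newline="" lets the csv module control line endings itself
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["word", "count"])
        writer.writerows(ranked)
    return ranked
```

For example, `save_top(wordsCount.items(), 'top_chinese20.csv')` would write the header plus up to 20 rows. Unlike the pandas call, this version writes no index column.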

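9. Generate the word cloud.

import jieba

import matplotlib.pyplot as plt

from wordcloud import WordCloud

txt = open('top_chinese20.csv','r',encoding='utf-8').read()

wordlist = jieba.lcut(txt)

wl_split = ' '.join(wordlist)  # join with spaces so the segmented words stay separate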

backgroud_Image = plt.imread('background.jpg')

mymc = WordCloud(background_color='white', mask=backgroud_Image, margin=2, max_words=20, max_font_size=150, random_state=30).generate(wl_split)

plt.imshow(mymc)

plt.axis("off")

plt.show()

mymc.to_file(r'WordCloud.png')