Web Crawlers

1. Concept:

A web crawler is a program or script that automatically fetches information from the World Wide Web according to a set of rules.
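The "rules" usually decide three things: which links to follow, which pages to skip, and when to stop. Below is a minimal breadth-first sketch. It assumes three example rules: stay on the start page's host, never fetch the same URL twice, and stop after `maxPages` pages. The `CrawlSketch` class and its `getLinks` delegate are hypothetical names. `getLinks` stands in for "download this page and return its links", so the traversal can be read and tested without network access.

```csharp
using System;
using System.Collections.Generic;

public static class CrawlSketch
{
    // Breadth-first crawl starting at startUrl.
    // getLinks is a placeholder for "download the page and return the absolute links on it".
    public static List<Uri> Crawl(Uri startUrl, Func<Uri, IEnumerable<Uri>> getLinks, int maxPages)
    {
        var visited = new HashSet<Uri>();   // rule: never fetch the same URL twice
        var frontier = new Queue<Uri>();    // URLs waiting to be fetched, in discovery order
        var crawled = new List<Uri>();      // pages actually fetched, in fetch order

        frontier.Enqueue(startUrl);
        visited.Add(startUrl);

        // rule: stop once maxPages pages have been fetched
        while (frontier.Count > 0 && crawled.Count < maxPages)
        {
            Uri current = frontier.Dequeue();
            crawled.Add(current);

            foreach (Uri link in getLinks(current))
            {
                // rule: only follow links on the same host as the start page
                if (!string.Equals(link.Host, startUrl.Host, StringComparison.OrdinalIgnoreCase))
                    continue;
                // HashSet.Add returns false when the URL has already been seen
                if (visited.Add(link))
                    frontier.Enqueue(link);
            }
        }
        return crawled;
    }
}
```

In a real crawler, `getLinks` would download the page and collect its `href` attributes as absolute `Uri`s. The queue gives breadth-first order. Swapping it for a stack would give depth-first order.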

2. Examples:

I: Fetching page content with a crawler

Namespace to import:

using System.Net;

Code:

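```csharp
private void button1_Click(object sender, EventArgs e)
{
    string url = this.txtUrl.Text.Trim();
    if (url.Length == 0)
    {
        MessageBox.Show("The URL cannot be empty!");
        return; // stop here instead of trying to download an empty URL
    }

    // WebClient still works in .NET Framework WinForms apps (it is marked obsolete from .NET 6)
    using (WebClient wc = new WebClient())
    {
        // Encoding used to decode the downloaded text
        wc.Encoding = Encoding.Default;
        // Download the whole page as a string
        this.txtContent.Text = wc.DownloadString(url);
    }
}
```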
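`Encoding.Default` behaves differently across runtimes. On .NET Framework it is the system ANSI code page (for example GBK on Chinese Windows). On .NET Core and .NET 5+ it is always UTF-8. So a page can come out garbled depending on the runtime.

Below is a hedged sketch of the same download using `HttpClient`. It reads the raw bytes and decodes them with the charset the server declares in its `Content-Type` header, falling back to UTF-8 when the header has none or names an unknown charset. It also validates the URL up front. The class and method names (`PageDownloader`, `TryGetHttpUri`, `ResolveEncoding`, `DownloadStringAsync`) are illustrative, not from the original.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

public static class PageDownloader
{
    // One shared HttpClient; creating one per request can exhaust sockets
    private static readonly HttpClient Client = new HttpClient();

    // Accept only absolute http/https URLs (rejects "", "www.example.com", "ftp://...")
    public static bool TryGetHttpUri(string text, out Uri uri)
    {
        return Uri.TryCreate(text?.Trim(), UriKind.Absolute, out uri)
               && (uri.Scheme == Uri.UriSchemeHttp || uri.Scheme == Uri.UriSchemeHttps);
    }

    // Map a charset name such as "utf-8" to an Encoding, or return the fallback
    public static Encoding ResolveEncoding(string charset, Encoding fallback)
    {
        if (string.IsNullOrWhiteSpace(charset)) return fallback;
        try
        {
            return Encoding.GetEncoding(charset.Trim('"', ' '));
        }
        catch (ArgumentException) // unknown or unsupported charset name
        {
            return fallback;
        }
    }

    // Download a page and decode it with the charset from the Content-Type header
    public static async Task<string> DownloadStringAsync(Uri uri)
    {
        using (HttpResponseMessage response = await Client.GetAsync(uri))
        {
            response.EnsureSuccessStatusCode();
            byte[] body = await response.Content.ReadAsByteArrayAsync();
            string charset = response.Content.Headers.ContentType?.CharSet;
            return ResolveEncoding(charset, Encoding.UTF8).GetString(body);
        }
    }
}
```

From an `async void` click handler this would be awaited, e.g. `txtContent.Text = await PageDownloader.DownloadStringAsync(uri);`. On .NET Core, GBK/GB2312 pages additionally need `Encoding.RegisterProvider(CodePagesEncodingProvider.Instance)` before `Encoding.GetEncoding` can resolve those names.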

II: Extracting specific information with a crawler

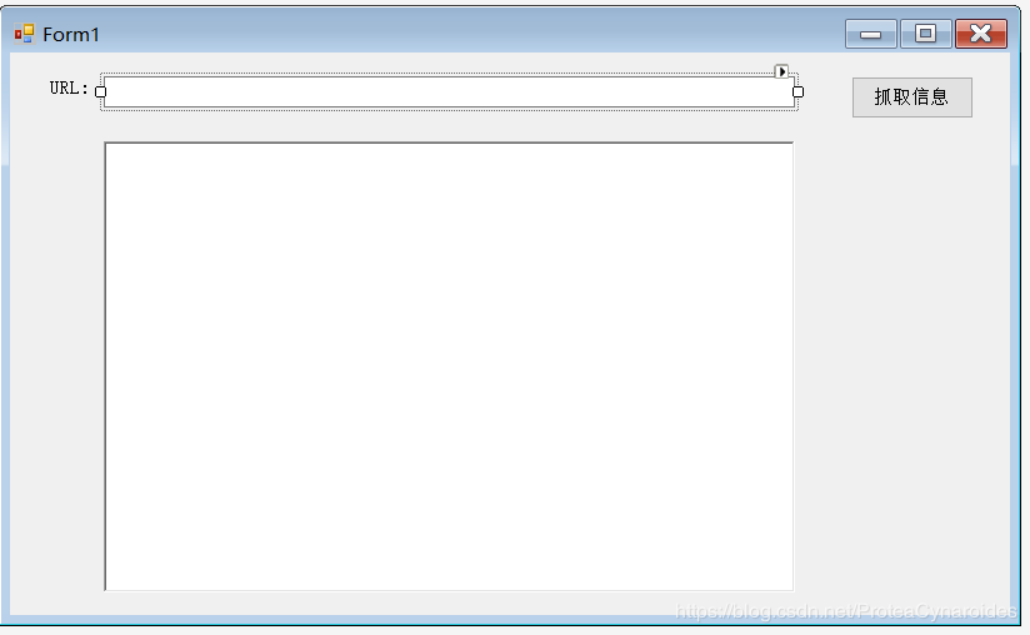

UI:

Namespaces to import (HtmlAgilityPack comes from the HtmlAgilityPack NuGet package):

using System.Net.Http;

using HtmlAgilityPack;

Code:

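```csharp
// One HttpClient shared by all clicks; creating one per request can exhaust sockets
private static readonly HttpClient httpClient = new HttpClient();

// Fetch the page and extract the wanted content
private async void button1_Click(object sender, EventArgs e)
{
    string url = this.txtUrl.Text.Trim();
    // Send the GET request and wait for the whole HTML page;
    // await (instead of .Result) keeps the UI thread responsive while waiting
    HttpResponseMessage http = await httpClient.GetAsync(url);
    // If the request succeeded
    if (http.IsSuccessStatusCode)
    {
        // Parse the HTML with the third-party HtmlAgilityPack component
        var doc = new HtmlAgilityPack.HtmlDocument();
        // Turn the HTML string into a DOM object
        doc.LoadHtml(await http.Content.ReadAsStringAsync());
        // Take DocumentNode after LoadHtml so it refers to the parsed page
        HtmlNode node = doc.DocumentNode;
        // Find the list inside the info block; ".//" searches only below the div, "//" would search the whole page
        HtmlNode list = node.SelectSingleNode("//div[@class='info-cont']")
                            ?.SelectSingleNode(".//ul[@class='info-list']");
        this.txtContent.Text = list == null
            ? "No matching content found"                            // SelectSingleNode returns null on no match
            : list.InnerText.Replace("\r\n ", "").Replace(" ", "");  // strip line breaks and spaces
    }
    else
    {
        this.txtContent.Text = "Nothing was fetched";
    }
}
```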
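Two details in this handler are easy to get wrong:

- An XPath starting with `//` searches the whole document, even when `SelectNodes` is called on a child node. `.//` limits the search to that node's descendants.
- `InnerText` keeps the page's line breaks and indentation and may still contain HTML entities such as `&amp;` or `&nbsp;`.

HtmlAgilityPack evaluates XPath through .NET's standard XPath 1.0 engine. So the first point can be shown with the BCL's `System.Xml`, which is used here only so the sketch runs without the NuGet package. `ExtractSketch` and `CleanText` are hypothetical names. `CleanText` decodes entities with `WebUtility.HtmlDecode` and collapses whitespace, as an alternative to chained `Replace` calls.

```csharp
using System.Net;
using System.Text.RegularExpressions;
using System.Xml;

public static class ExtractSketch
{
    // Number of nodes an XPath finds when evaluated from the given context node
    public static int CountFrom(XmlNode context, string xpath)
    {
        XmlNodeList found = context.SelectNodes(xpath);
        return found == null ? 0 : found.Count;
    }

    // Decode entities (&amp;, &nbsp;, ...) then collapse every whitespace run into one space
    public static string CleanText(string raw)
    {
        if (raw == null) return "";
        string decoded = WebUtility.HtmlDecode(raw);
        // In .NET regex, \s also matches U+00A0, the character &nbsp; decodes to
        return Regex.Replace(decoded, @"\s+", " ").Trim();
    }
}
```

Applied to the handler above, the second lookup would use `.//ul[@class='info-list']` from the selected div. `CleanText(list.InnerText)` would keep single spaces between words instead of deleting every space.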

Result:

III: Crawling paginated listings

Code:

```csharp
private void btnCrawl_Click(object sender, EventArgs e)
{
    List<film> ls = new List<film>();
    string url = this.txtUrl.Text.Trim();
    // HtmlWeb downloads a page from the web and parses it into an HtmlDocument
    HtmlAgilityPack.HtmlWeb htmlWeb = new HtmlAgilityPack.HtmlWeb();
    HtmlAgilityPack.HtmlDocument document = htmlWeb.Load(url);

    // Pager link that shows the total number of pages
    HtmlNode pagenode = document.DocumentNode.SelectSingleNode("//html/body/div[3]/div[3]/a[7]");
    int pageCount;
    if (pagenode == null || !int.TryParse(pagenode.InnerText.Trim(), out pageCount))
        pageCount = 1;                 // pager not found: crawl the first page only
    if (pageCount > 2) pageCount = 2;  // crawling every page is slow, so cap it at 2 pages

    // Loop over the list pages
    for (int i = 1; i <= pageCount; i++)
    {
        if (i != 1) // page 1 is already loaded; fetch the next one
        {
            url = "http://www.360kan.com/dianshi/list.php?year=all&area=all&act=all&cat=all&pageno=" + i;
            document = htmlWeb.Load(url);
        }
        // Each <li> in the list is one TV series
        HtmlNodeCollection htmlNodes = document.DocumentNode.SelectNodes("//html//body//div[3]//div[2]//div//div[2]//ul//li");
        if (htmlNodes == null) continue; // SelectNodes returns null, not an empty collection, on no match

        foreach (var nd in htmlNodes)
        {
            try
            {
                // Detail-page link of this series; Uri copes with a leading "/" or an absolute href
                string aurl = nd.SelectSingleNode("a").Attributes["href"].Value;
                string detailUrl = new Uri(new Uri("http://www.360kan.com/"), aurl).AbsoluteUri;
                // Load the detail page into its own document so the list page is not overwritten
                HtmlAgilityPack.HtmlDocument detail = htmlWeb.Load(detailUrl);
                HtmlNode node = detail.DocumentNode.SelectSingleNode("//html//body//div[3]//div//div//div");

                film ns = new film();
                ns.picture = node.SelectSingleNode("div[1]//div//a//img").Attributes["src"].Value;    // poster image
                ns.tvName = node.SelectSingleNode("div[2]//div[1]//div[1]//div[1]//h1").InnerText;   // title
                ns.setNumber = node.SelectSingleNode("div[2]//div[1]//div[1]//div[1]//p").InnerText; // episode count
                ns.tvType = JoinText(node.SelectNodes("div[2]//div[1]//div[2]//div[1]//p[1]//a"));   // genres (several)
                ns.years = node.SelectSingleNode("div[2]//div[1]//div[2]//div[1]//p[2]").InnerText;  // year
                ns.region = node.SelectSingleNode("div[2]//div[1]//div[2]//div[1]//p[3]").InnerText; // region
                ns.director = JoinText(node.SelectNodes("div[2]//div[1]/div[2]//div[1]//p[5]//a"));  // directors
                ns.performer = JoinText(node.SelectNodes("div[2]//div[1]/div[2]//div[1]//p[6]//a")); // cast
                ns.miaoshu = node.SelectSingleNode("div[2]//div[1]//div[2]//div[3]//p").InnerText;   // description
                ls.Add(ns);
            }
            catch (Exception ex)
            {
                // A node missing on one detail page skips that series instead of stopping the crawl;
                // write the reason to the debug output rather than swallowing it silently
                System.Diagnostics.Debug.WriteLine("Skipped one item: " + ex.Message);
            }
            // Pausing between requests (e.g. Thread.Sleep) is polite to the server, but blocks the UI thread here
        }
    }
    this.dataGridView1.DataSource = ls;
}

// Join the text of several nodes with commas (uses System.Linq); "" when SelectNodes found nothing (null)
private static string JoinText(HtmlNodeCollection nodes)
{
    return nodes == null ? "" : string.Join(",", nodes.Select(n => n.InnerText.Trim()));
}
```
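The listing relies on a `film` class that the original does not show. `DataGridView` data binding displays public properties but not public fields. Below is a minimal definition, assuming every member is a string named exactly as assigned above; the lowercase class name is kept to match the listing. `CrawlHelpers` is a hypothetical helper class:

- `ResolveLink` joins a relative or absolute `href` with the site root through `Uri`, so no slash is doubled or lost.
- `ParsePageCount` reads the pager text defensively and applies the page cap.
- `NextDelayMs` picks a random pause length so consecutive requests are spaced out.

```csharp
using System;

// Hypothetical definition: property names follow the assignments in btnCrawl_Click
public class film
{
    public string picture { get; set; }    // poster image URL
    public string tvName { get; set; }     // title
    public string setNumber { get; set; }  // episode count text
    public string tvType { get; set; }     // genres, comma-separated
    public string years { get; set; }      // year
    public string region { get; set; }     // region
    public string director { get; set; }   // directors, comma-separated
    public string performer { get; set; }  // cast, comma-separated
    public string miaoshu { get; set; }    // description
}

public static class CrawlHelpers
{
    // Resolve an href (relative or absolute) against the page or site it came from
    public static string ResolveLink(string baseUrl, string href)
    {
        return new Uri(new Uri(baseUrl), href).AbsoluteUri;
    }

    // Parse the pager's total-page text; fall back to 1 page and cap at maxPages
    public static int ParsePageCount(string text, int maxPages)
    {
        int count;
        if (text == null || !int.TryParse(text.Trim(), out count) || count < 1)
            count = 1;
        return Math.Min(count, maxPages);
    }

    // Random pause length in [minMs, maxMs] milliseconds between two requests
    public static int NextDelayMs(Random rng, int minMs, int maxMs)
    {
        return rng.Next(minMs, maxMs + 1); // Random.Next's upper bound is exclusive
    }
}
```

For example, `CrawlHelpers.ResolveLink("http://www.360kan.com/", aurl)` can build the detail-page URL. Inside an `async` handler, `await Task.Delay(CrawlHelpers.NextDelayMs(rng, 500, 1500))` pauses between requests without freezing the UI, unlike `Thread.Sleep`. The 500 to 1500 ms range is only an illustrative choice.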