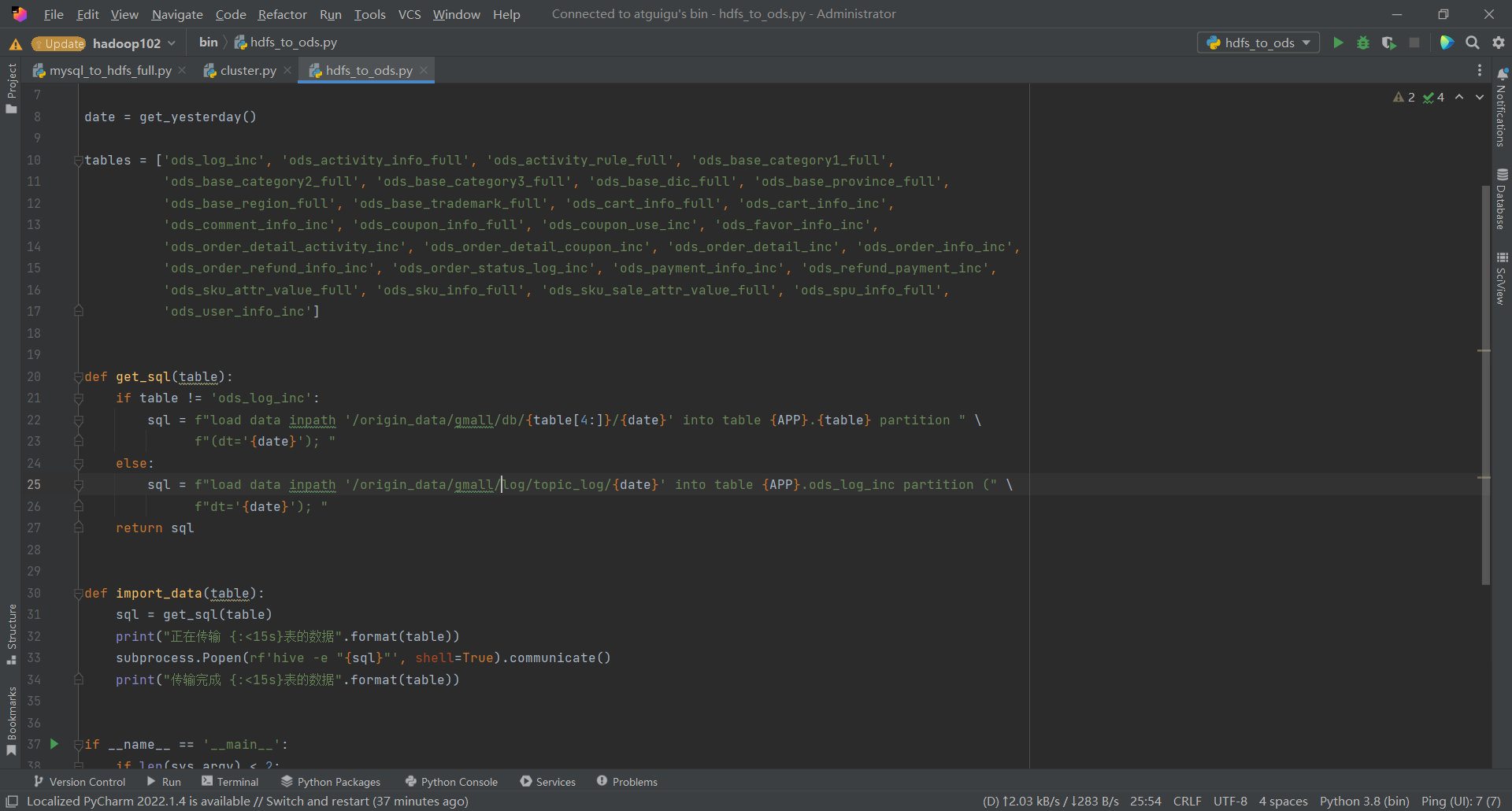

Script for loading HDFS files into the ODS layer

```python
#!/usr/bin/python3
# coding=utf-8
import subprocess
import sys

# base is the author's own helper module: get_yesterday() supplies the default
# partition date and APP is the Hive database that holds the ODS tables.
from base import get_yesterday, APP

date = get_yesterday()  # default partition date; overridden by argv[2] if given

tables = ['ods_log_inc', 'ods_activity_info_full', 'ods_activity_rule_full',
          'ods_base_category1_full', 'ods_base_category2_full', 'ods_base_category3_full',
          'ods_base_dic_full', 'ods_base_province_full', 'ods_base_region_full',
          'ods_base_trademark_full', 'ods_cart_info_full', 'ods_cart_info_inc',
          'ods_comment_info_inc', 'ods_coupon_info_full', 'ods_coupon_use_inc',
          'ods_favor_info_inc', 'ods_order_detail_activity_inc', 'ods_order_detail_coupon_inc',
          'ods_order_detail_inc', 'ods_order_info_inc', 'ods_order_refund_info_inc',
          'ods_order_status_log_inc', 'ods_payment_info_inc', 'ods_refund_payment_inc',
          'ods_sku_attr_value_full', 'ods_sku_info_full', 'ods_sku_sale_attr_value_full',
          'ods_spu_info_full', 'ods_user_info_inc']


def get_sql(table):
    """Build the LOAD DATA statement that loads one day's HDFS files into a table."""
    if table != 'ods_log_inc':
        # Business tables: strip the 'ods_' prefix to get the HDFS directory name
        sql = (f"load data inpath '/origin_data/gmall/db/{table[4:]}/{date}' "
               f"into table {APP}.{table} partition (dt='{date}');")
    else:
        # Log data lives under its own topic directory
        sql = (f"load data inpath '/origin_data/gmall/log/topic_log/{date}' "
               f"into table {APP}.ods_log_inc partition (dt='{date}');")
    return sql


def import_data(table):
    sql = get_sql(table)
    print("Loading data into {:<15s}".format(table))
    # Pass the SQL as its own argument so no shell quoting is involved
    result = subprocess.run(['hive', '-e', sql])
    if result.returncode != 0:
        print("Load FAILED for {:<15s}".format(table))
    else:
        print("Finished loading {:<15s}".format(table))


if __name__ == '__main__':
    if len(sys.argv) < 2:
        print("Too few arguments. Usage: hdfs_to_ods.py <table|all> [yyyy-MM-dd]")
        sys.exit(1)
    if len(sys.argv) == 3:
        date = sys.argv[2]  # rebinds the module-level date read by get_sql()
    argc_table = sys.argv[1]
    for table in tables:
        if argc_table == table or argc_table == 'all':
            import_data(table)
```
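The script imports `get_yesterday` and `APP` from a `base` module that the post does not show. A minimal sketch of what it might contain, assuming `APP` is the Hive database name (`gmall` is a guess taken from the HDFS paths) and that partitions use the `yyyy-MM-dd` format seen in the usage example; the optional `today` parameter is a hypothetical addition that makes the function easy to test:

```python
# base.py -- hypothetical sketch; the author's real module is not shown.
from datetime import date, timedelta

# Assumed name of the Hive database that holds the ODS tables.
APP = "gmall"


def get_yesterday(today=None):
    """Return the day before `today` (default: the current date) as 'YYYY-MM-DD'."""
    if today is None:
        today = date.today()
    return (today - timedelta(days=1)).strftime("%Y-%m-%d")
```

Using `timedelta` rather than subtracting from the day number lets month and year boundaries (for example 2020-03-01 back to 2020-02-29) work without special cases.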

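Usage: the first argument is an ODS table name from `tables`, or `all` to load every table; the optional second argument is the partition date and defaults to yesterday.

```shell
hdfs_to_ods.py all 2020-06-15
```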
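Because `load data inpath` moves (rather than copies) the source files out of `/origin_data`, it can be worth previewing the generated statements before running them for real. The sketch below re-implements `get_sql()` as a pure function and adds argument checks; `build_sql`, `plan`, the trimmed `TABLES` list and `APP = "gmall"` are hypothetical names for illustration, not part of the original script:

```python
from datetime import date, datetime, timedelta

# Trimmed subset of the script's table list (hypothetical, for illustration).
TABLES = ["ods_log_inc", "ods_activity_info_full", "ods_order_info_inc"]
APP = "gmall"  # assumed Hive database name


def build_sql(table, dt, db=APP):
    """Pure version of get_sql(): return the LOAD DATA statement for one table."""
    if table == "ods_log_inc":
        src = f"/origin_data/gmall/log/topic_log/{dt}"
    else:
        # Strip the 'ods_' prefix: ods_order_info_inc -> order_info_inc
        src = f"/origin_data/gmall/db/{table[4:]}/{dt}"
    return f"load data inpath '{src}' into table {db}.{table} partition (dt='{dt}');"


def plan(argv):
    """Validate argv like the script does and return the statements it would run."""
    if len(argv) < 2:
        raise SystemExit("usage: hdfs_to_ods.py <table|all> [yyyy-MM-dd]")
    target = argv[1]
    if len(argv) >= 3:
        dt = argv[2]
        datetime.strptime(dt, "%Y-%m-%d")  # raises ValueError on a malformed date
    else:
        dt = (date.today() - timedelta(days=1)).strftime("%Y-%m-%d")  # yesterday
    if target != "all" and target not in TABLES:
        raise SystemExit(f"unknown table: {target}")
    return [build_sql(t, dt) for t in TABLES if target in (t, "all")]
```

For a dry run, `for stmt in plan(sys.argv): print(stmt)` prints one statement per matching table without calling Hive. Unlike the original loop, an unknown table name fails loudly instead of silently doing nothing.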