MySQL 8.0 Optimizer 源码笔记

MySQL 8.0 Optimizer 源码笔记

Based on 社区版源码。核心入口 JOIN::optimize

/** Optimizes one query block into a query execution plan (QEP.) This is the entry point to the query optimization phase. This phase applies both logical (equivalent) query rewrites, cost-based join optimization, and rule-based access path selection. Once an optimal plan is found, the member function creates/initializes all structures needed for query execution. The main optimization phases are outlined below: -# Logical transformations: - Outer to inner joins transformation. - Equality/constant propagation. - Partition pruning. - COUNT(*), MIN(), MAX() constant substitution in case of implicit grouping. - ORDER BY optimization. -# Perform cost-based optimization of table order and access path selection. See JOIN::make_join_plan() -# Post-join order optimization: - Create optimal table conditions from the where clause and the join conditions. - Inject outer-join guarding conditions. - Adjust data access methods after determining table condition (several times.) - Optimize ORDER BY/DISTINCT. -# Code generation - Set data access functions. - Try to optimize away sorting/distinct. - Setup temporary table usage for grouping and/or sorting. @retval 0 Success. @retval 1 Error, error code saved in member JOIN::error. */ int JOIN::optimize();

预备知识:

-

MySQL JOIN syntax: https://dev.mysql.com/doc/refman/8.0/en/join.html

-

Straight join: is similar to

JOIN, except that the left table is always read before the right table. This can be used for those (few) cases for which the join optimizer processes the tables in a suboptimal order. STRAIGHT_JOIN有两种用法:一种是加在JOIN处作为INNER JOIN的一种特殊类型hint该join的顺序;另一种是加在SELECT处使该select下的所有JOIN都强制为用户table的join顺序,从优化代码上看该用法与semi-join不可同时存在(Optimize_table_order::optimize_straight_join: DBUG_ASSERT(join->select_lex->sj_nests.is_empty()))。 -

join order hint: Join-order hints affect the order in which the optimizer joins tables, including

JOIN_FIXED_ORDER,JOIN_ORDER,JOIN_PREFIX,JOIN_SUFFIX -

各种JOIN类型:INNER JOIN, OUTER JOIN, SEMI JOIN, LEFT/RIGHT JOIN, etc.

-

Materialization(物化): Usually happens in subquery (sometimes known as semi-join). Materialization speeds up query execution by generating a subquery result as a temporary table, normally in memory.

-

Statistics (一般叫统计信息,有时也叫采样,但区分sample采样):从存储获取表的rowcount、min/max/sum/avg/keyrange等元信息,用于辅助plan优化。

-

table dependencies: A LEFT JOIN B : B depends on A and A's own dependencies。(待确认 DEPEND JOIN语义是否也是由table dependencies关系表示)

-

table access path: An access path may use either an

index scan, atable scan, arange scanorrefaccess, known as join type in explain. -

- index scan: 一般index scan指的是二级索引scan (MySQL主键索引会带着data存放)

-

- table scan: 直接扫表

-

- range scan: 对于索引列的一些可能转化为范围查询的条件,MySQL会试图将其转化为range scan来减少范围外无用的scan。单个范围的range query类似带range条件下推的index scan或table scan;range query支持抽取出多个范围查询。

-

- ref: join field是索引,但不是pk或unique not null 索引

-

- eq_ref: join field是索引且是pk或unique not null索引,意味着对于每个record最多只会join到右表的一行。

-

MySQL源码中JOIN对象的tables的存放layout(参考注释,单看变量名有歧义):

/** Before plan has been created, "tables" denote number of input tables in the query block and "primary_tables" is equal to "tables". After plan has been created (after JOIN::get_best_combination()), the JOIN_TAB objects are enumerated as follows: - "tables" gives the total number of allocated JOIN_TAB objects - "primary_tables" gives the number of input tables, including materialized temporary tables from semi-join operation. - "const_tables" are those tables among primary_tables that are detected to be constant. - "tmp_tables" is 0, 1 or 2 (more if windows) and counts the maximum possible number of intermediate tables in post-processing (ie sorting and duplicate removal). Later, tmp_tables will be adjusted to the correct number of intermediate tables, @see JOIN::make_tmp_tables_info. - The remaining tables (ie. tables - primary_tables - tmp_tables) are input tables to materialized semi-join operations. The tables are ordered as follows in the join_tab array: 1. const primary table 2. non-const primary tables 3. intermediate sort/group tables 4. possible holes in array 5. semi-joined tables used with materialization strategy */ uint tables; ///< Total number of tables in query block uint primary_tables; ///< Number of primary input tables in query block uint const_tables; ///< Number of primary tables deemed constant uint tmp_tables; ///< Number of temporary tables used by query

optimizer大致步骤

-

outer join -> inner join

if (select_lex->first_execution) { /** @todo This query block didn't transform itself in SELECT_LEX::prepare(), so belongs to a parent query block. That parent, or its parents, had to transform us - it has not; maybe it is itself in prepare() and evaluating the present query block as an Item_subselect. Such evaluation in prepare() is expected to be a rare case to be eliminated in the future ("SET x=(subq)" is one such case; because it locks tables before prepare()). */ if (select_lex->apply_local_transforms(thd, false)) DBUG_RETURN(error = 1); }实际上这一步应该是在 prepare 的 resolver 阶段去做的,resolver 后 first_execution 会被置位 false。但是由于实现问题,有可能有些深层嵌套的子查询并没有被 prepare,相当于是一个临时 fix。

-

optimize_derived()

/* Run optimize phase for all derived tables/views used in this SELECT, including those in semi-joins. */ if (select_lex->materialized_derived_table_count) { for (TABLE_LIST *tl = select_lex->leaf_tables; tl; tl = tl->next_leaf) { if (tl->is_view_or_derived() && tl->optimize_derived(thd)) DBUG_RETURN(1); } }递归 optimize derived table,包括但不仅限于各种子查询、CTE、table function等。如果能在优化器阶段判断这是一个constant table (if materializable table contains one or zero rows) ,则提前物化便于后面的优化判断。

-

optimize_cond()

a) applying transitivity to build multiple equality predicates (MEP): if x=y and y=z the MEP x=y=z is built. 对应 build_equal_items() b) apply constants where possible. If the value of x is known to be 42, x is replaced with a constant of value 42. By transitivity, this also applies to MEPs, so the MEP in a) will become 42=x=y=z. 对应 propagate_cond_constants() c) remove conditions that are always false or always true. 对应 remove_eq_conds()优化 where_cond 和 having_cond 。这一块严格说其实也可以算Rewriter的范围。但MySQL和很多引擎不同的是,它在这里不会去对Item表达式树做改写,而是把可以优化掉的子树或变量标记为 constant,当执行阶段遇到constant的Item,直接去读它的cache值即可,不需要重新计算。

-

prune_table_partitions()

裁剪分区。对于分区表,如果能提前判断一些分区是不会被覆盖到的,那直接裁剪忽略掉。输入是join_cond (join tables) 或者where_cond (first table)。这一步在分布式计算引擎里是最常见的优化之一,因为分布式的存储往往会更依赖类似sharding的分区模式。

-

opt_sum_query()

利用统计信息行数、索引等去预先将能优化的 select fields 里的 agg 函数优化成常数。比如如果统计信息的行数是准确的 get_exact_record_count() ,则简单的 COUNT 可以被直接替换;如果 MAX/MIN 的列是索引的第一列,则可以直接 seek 索引来找到极值。

-

make_join_plan()

/** Calculate best possible join order and initialize the join structure. @return true if success, false if error. The JOIN object is populated with statistics about the query, and a plan with table order and access method selection is made. The list of tables to be optimized is taken from select_lex->leaf_tables. JOIN::where_cond is also used in the optimization. As a side-effect, JOIN::keyuse_array is populated with key_use information. Here is an overview of the logic of this function: */-

Initialize JOIN data structures and setup basic dependencies between tables.

init_planner_arrays(),初始化构建 JOIN_TAB 数组用于后续优化。Prepare table 之间的 join 依赖关系,包括 embed / outer join 等。 -

Update dependencies based on join information.

propagate_dependencies(),基于outer join构建出table之间的依赖关系。在一些优化理论中,会把table之间的这种 join 依赖关系视为一种图结构。但边的构建会视实际实现而异,有依靠join关系构建的,有依靠 join cond 是否有索引构建的 等等。 -

Make key descriptions (update_ref_and_keys()).

update_ref_and_keys(),根据 where cond / join cond 提前确定可能待选的keys。这会为后续决定 join order 提供支持。 -

Pull out semi-join tables based on table dependencies.

尝试子查询 semi join 上提。

SELECT * FROM t1 WHERE c1 IN (..t2)-->SELECT * FROM t1 SEMI JOIN t2 WHERE c1=...-

Extract tables with zero or one rows as const tables. Read contents of const tables, substitute columns from these tables with actual data. Also keep track of empty tables vs. one-row tables.

extract_const_tables(), 如果统计信息表明该表是个 const table (0 or 1 row) ,则可以预读优化。 -

After const table extraction based on row count, more tables may have become functionally dependent. Extract these as const tables.

extract_func_dependent_tables()-

Add new sargable predicates based on retrieved const values.

update_sargable_from_const()。SARGABLE意为 Search ARGument ABLE ,是个计算引擎术语,原义表示SQL中可利用数据库自身索引优势对查询条件进行执行性能优化。SARGABLE_PARAM 对象是在update_ref_and_keys()的add_key_field()中更新的,存下sargable的op(比如 =,>,< 等),然后在update_sargable_from_const()中结合 const_table 的优化,来优化 possible_keys 。 -

Calculate number of rows to be retrieved from each table.

estimate_rowcount(),这里会去通过试读去估算出MySQL认为应该给当前table的row_count(以及io_cost 、 cpu_cost等),用于 cost_model 计算,cost结果会存在 TABLE->read_time 中。 -

Calculate cost of potential semi-join materializations.

optimize_semijoin_nests_for_materialization(),对embeding、semijoin的子查询做局部的 join reorder。其实就是按照依赖顺序排个序。 -

Calculate best possible join order based on available statistics.

Optimize_table_order(), 对非子查询的所有表做 join reorder。 -

Fill in remaining information for the generated join order.

-

-

make_join_select()

Statistics

核心对象ha_statistics。最主要的是records表示table rowcount。

class ha_statistics {

ulonglong data_file_length; /* Length off data file */

ulonglong max_data_file_length; /* Length off data file */

ulonglong index_file_length;

ulonglong max_index_file_length;

ulonglong delete_length; /* Free bytes */

ulonglong auto_increment_value;

/*

The number of records in the table.

0 - means the table has exactly 0 rows

other - if (table_flags() & HA_STATS_RECORDS_IS_EXACT)

the value is the exact number of records in the table

else

it is an estimate

*/

ha_rows records;

ha_rows deleted; /* Deleted records */

ulong mean_rec_length; /* physical reclength */

/* TODO: create_time should be retrieved from the new DD. Remove this. */

time_t create_time; /* When table was created */

ulong check_time;

ulong update_time;

uint block_size; /* index block size */

/*

number of buffer bytes that native mrr implementation needs,

*/

uint mrr_length_per_rec;

}

myrocks是在handler::info中更新stats的。而info在除了insert的写和部分查询场景会被调用以更新采样信息(调用处多达十余处)。

/**

General method to gather info from handler

::info() is used to return information to the optimizer.

SHOW also makes use of this data Another note, if your handler

doesn't proved exact record count, you will probably want to

have the following in your code:

if (records < 2)

records = 2;

The reason is that the server will optimize for cases of only a single

record. If in a table scan you don't know the number of records

it will probably be better to set records to two so you can return

as many records as you need.

Along with records a few more variables you may wish to set are:

records

deleted

data_file_length

index_file_length

delete_length

check_time

Take a look at the public variables in handler.h for more information.

See also my_base.h for a full description.

@param flag Specifies what info is requested

*/

virtual int info(uint flag) = 0;

// 以下为可能的flag对应bit取值。 CONST除了初始化较少用;大部分情况下用VARIABLE,因为VARIABLE涉及的变量确实是较频繁更新的;ERRKEY在正常路径不会用到,用来报错查信息;AUTO专门针对自增值,自增值可从内存里table级别对象拿到。

/*

Recalculate loads of constant variables. MyISAM also sets things

directly on the table share object.

Check whether this should be fixed since handlers should not

change things directly on the table object.

Monty comment: This should NOT be changed! It's the handlers

responsibility to correct table->s->keys_xxxx information if keys

have been disabled.

The most important parameters set here is records per key on

all indexes. block_size and primar key ref_length.

For each index there is an array of rec_per_key.

As an example if we have an index with three attributes a,b and c

we will have an array of 3 rec_per_key.

rec_per_key[0] is an estimate of number of records divided by

number of unique values of the field a.

rec_per_key[1] is an estimate of the number of records divided

by the number of unique combinations of the fields a and b.

rec_per_key[2] is an estimate of the number of records divided

by the number of unique combinations of the fields a,b and c.

Many handlers only set the value of rec_per_key when all fields

are bound (rec_per_key[2] in the example above).

If the handler doesn't support statistics, it should set all of the

above to 0.

update the 'constant' part of the info:

handler::max_data_file_length, max_index_file_length, create_time

sortkey, ref_length, block_size, data_file_name, index_file_name.

handler::table->s->keys_in_use, keys_for_keyread, rec_per_key

*/

#define HA_STATUS_CONST 8

/*

update the 'variable' part of the info:

handler::records, deleted, data_file_length, index_file_length,

check_time, mean_rec_length

*/

#define HA_STATUS_VARIABLE 16

/*

This flag is used to get index number of the unique index that

reported duplicate key.

update handler::errkey and handler::dupp_ref

see handler::get_dup_key()

*/

#define HA_STATUS_ERRKEY 32

/*

update handler::auto_increment_value

*/

#define HA_STATUS_AUTO 64

Cost model

基本定义:

-

基数 Cardinality:表示 distinct value的个数,比如性别字段取值只有男和女的话,则其基数为2。

-

选择度 Selectivity :the fraction of the rows will be selected. **Selectivity of index = Cardinality / (row_count) ** . 选择度越高越好,最高为 100%。像性别字段的例子,选择度一般就很低,作为索引列时区分度差,一个男性筛选条件下面的数据依然是处于较大量级。

-

代价 Cost : 常说的CBO即是通过估算代价来决定优化策略。不同数据库代价模型可能会不一样,常见的作为代价模型的输入包括但不限于 row_count ,cardinality,selectivity,io/cpu/mem cost, max/min/avg of columns等。

-

Partial Plan:MySQL里的一个概念,指 one part of the whole plan,至少是两个表的一个JOIN关系。

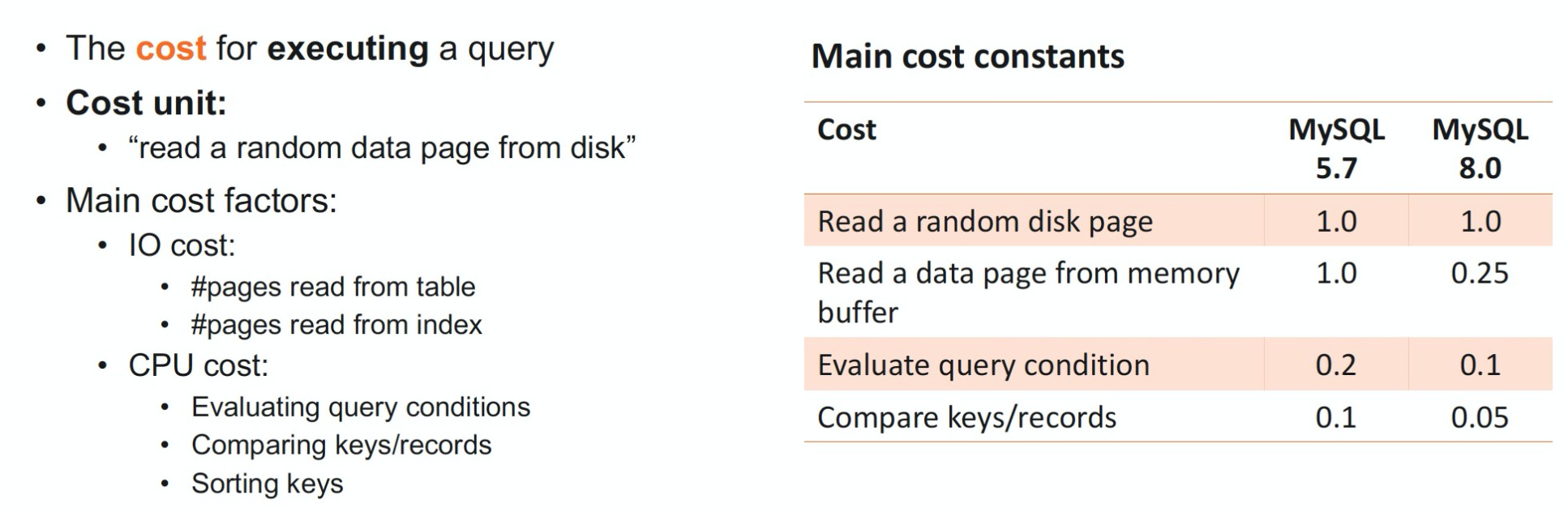

上表为MySQL默认的一些单元操作的cost值,这些值是用户可以配置修改的。

# MySQL cost model大致思路可以描述为一个递推关系。实际cost计算会比这个要复杂。

f(tbl_i) = prev_total_cost + tbl_i_cost ->

f(tbl_i) = f(tbl_i-1) + row_evaluate_cost(prev_rows * cur_table_rows * selectivity) + tbl_i_extra_cost

plan_cost = f(tbl_n)

- prev_rows 由于join的乘积关系,会越滚越大。因此时常有直观的reorder策略是把表从小到大排序 (但因为筛选行数等原因,这不一定是最佳策略)。

- tbl_i_extra_cost 包括了 materialized_cost、compare cost等,access method策略不同,可能extra_cost值也会不一样。

Join Reorder

Join 表示一个 query 的 join plan,同时也作为 plan 的 context 流转(因此在 para query 等一些优化实现中,并行查询里除了最上层的父查询有实际优化的价值外,Join 起的作用更像一个 context )。

-

best_positions存放最终优化的 table order 结果。

-

best_read 存放最终 cost

-

best_ref 存放输入的 table 序列,the optimizer optimizes best_ref 。

需注意,在一些单表简单查询下,上面的变量有可能为空。

make_join_plan 在 JOIN::optimize 里被调用,计算最佳的 join order 并构建 join plan。

Optimize_table_order 类负责实际的join reorder操作,入口方法为其惟一的 public 方法 choose_table_order ,在 make_join_plan 中被调用。 Optimize_table_order 依赖三个前提:

- tables的依赖关系已经排好序 (其实就是前面的依赖传递和semijoin pullout)

- access paths 排好序 (这个意思有点奇怪,我猜是想表示ref和key已经解析好)

- statistics 采样已经完成 (采样发生在别的步骤,make_join_plan只是从内存读取而已)

choose_table_order Steps:

-

初始化 const_tables的 cost,如果全是 const_tables 则可以直接短路返回

-

如果是在一个 sjm(semi-join materialization) plan 优化过程中,则做一次排序将 semi-join (即子查询的query 提前预计算,可根据需求物化)

-

否则,非 STRAIGHT_JOIN 且 depend 无关的 tables 是按照 row_count 从小到大排序的

if (SELECT_STRAIGHT_JOIN option is set) reorder tables so dependent tables come after tables they depend on, otherwise keep tables in the order they were specified in the query else Apply heuristic: pre-sort all access plans with respect to the number of records accessed. Sort algo is merge-sort (tbl >= 5) or insert-sort (tbl < 5) -

如果有 where_cond,需要把where_cond涉及的列 遍历设置到table->cond_set的bitmap中。

-

STRAIGHT_JOIN 的 tables 优化

optimize_straight_join。 STRAIGHT_JOIN 相当于用户限定了 JOIN 的顺序,所以此处的优化工作如其注释所说:Select the best ways to access the tables in a query without reordering them. -

非 STRAIGHT_JOIN 则使用 启发式贪心算法

greedy_search进行 join reorder。

optimize_straight_join :

- 只支持straight_join,

DBUG_ASSERT(join->select_lex->sj_nests.is_empty());与semi-join不兼容,只关注primary tables。 - 对每个JOIN_TABLE,

best_access_path计算其最优的access path,best_access_path通俗的思路概括可参见上面table access pathexplain文档中关于join types的介绍。 -

计算当前表基于对应access path下的cost,并计入总的cost model。Cost计算如下:set_prefix_join_costm_row_evaluate_cost = 0.1 // default value /* Cost of accessing the table in course of the entire complete join execution, i.e. cost of one access method use (e.g. 'range' or 'ref' scan ) multiplied by estimated number of rows from tables earlier in the join sequence. */ read_cost = get_read_cost(table) void set_prefix_join_cost(uint idx, const Cost_model_server *cm) { if (idx == 0) { prefix_rowcount = rows_fetched; prefix_cost = read_cost + prefix_rowcount * m_row_evaluate_cost; } else { // this - 1 means last table prefix_rowcount = (this - 1)->prefix_rowcount * rows_fetched; prefix_cost = (this - 1)->prefix_cost + read_cost + prefix_rowcount * m_row_evaluate_cost; } // float filter_effect [0,1] means cond filters in executor may reduce rows. 1 means nothing filtered, 0 means all rows filtered and no rows left. It is used to calculate how many row combinations will be joined with the next table prefix_rowcount *= filter_effect; }

greedy_search:

bool Optimize_table_order::best_extension_by_limited_search( table_map remaining_tables, uint idx, uint current_search_depth);

procedure greedy_search

input: remaining_tables

output: partial_plan;

{

partial_plan = <>;

do {

(table, a) = best_extension_by_limited_search(partial_plan, remaining_tables, limit_search_depth);

partial_plan = concat(partial_plan, (table, a));

remaining_tables = remaining_tables - table;

} while (remaining_tables != {})

return pplan;

}

// 简单理解就是每一步找拓展出去join路径最佳的table,按顺序加进plan里面。

// 这种方案会很受选取的第一个表影响(因为第一个表没有join关系,只能依靠筛选之后的cardinality,一般都是小表),选小表作第一个表不一定是最优选择。一般Greedy的优化方案会把每个表都当第一个表去评估一次cost,然后从N个cost里选最小的作为最终plan。MySQL里只是返回找到的第一个完整的plan。

best_extension_by_limited_search是一个启发式的搜索过程,search_depth即最大可搜索的深度。best_extension_by_limited_search前半部分逻辑和optimize_straight_join类似:

- 计算

best_access_path并计算cost。 - 如果此时的cost已经大于best_read,则直接剪枝,无需继续搜索。

- 如果

prune_level=PRUNE_BY_TIME_OR_ROWS开启,则判断如果best_row_count和best_cost已经大于当前的rows和cost(注意新版本是and关系),且该表没有被后续的其他表依赖 (可以理解成该表是这个图路径上的最后一个节点,所以可以直接prune;但不一定是整个plan的最后一个节点),则将best_row_count和best_cost设为当前的。写的很绕的代码,结合整个循环看,大致就是每次找基于(rowcount, cost)二维最优的表,所谓的剪枝实际变成了类似加强贪心。 - 对eq_ref做优先的选择,遇到第一个eq_ref后便递归将所有的eq_ref join找出来。(原作者认为eq_ref是个1:1的mapping,所以基本可以认为cost是恒定的,单独将这个eq_ref的序列提前生成,在后续优化时可以看作一整块放在任何一个顺序位置。当然前提是eq_ref是可以连续的。)

- 如果还有remaining_tables,则递归继续处理直至remaining 为空。