PyTorch: some groundwork before computing the loss: one-hot vs. indices, and nn.CrossEntropyLoss

Converting indices to one-hot

https://stackoverflow.com/questions/65424771/how-to-convert-one-hot-vector-to-label-index-and-back-in-pytorch

https://pytorch.org/docs/stable/generated/torch.nn.functional.one_hot.html

PyTorch's built-in function torch.nn.functional.one_hot does this directly:

    import torch
    import torch.nn.functional as F

    num_classes = 100
    trg = torch.randint(0, num_classes, (2, 10))        # [2, 10]
    # "one-hot" is not a valid Python identifier, so the result is named one_hot
    one_hot = F.one_hot(trg, num_classes=num_classes)   # [2, 10, 100]

Converting one-hot to indices

    # target is a one-hot tensor whose class dimension is dim 2,
    # e.g. [2, 10, 100] -> [2, 10]
    torch.argmax(target, dim=2)
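As a quick sanity check, the two conversions can be chained into a round trip. This is only a minimal sketch: the sizes and the variable names (`indices`, `one_hot`, `recovered`) are illustrative assumptions, not part of the original post.

```python
import torch
import torch.nn.functional as F

num_classes = 100
indices = torch.randint(0, num_classes, (2, 10))        # [2, 10], dtype int64

# indices -> one-hot: a new trailing dimension of size num_classes is added
one_hot = F.one_hot(indices, num_classes=num_classes)   # [2, 10, 100]

# one-hot -> indices: take the argmax over the class (last) dimension
recovered = torch.argmax(one_hot, dim=-1)               # [2, 10]

print(one_hot.shape, recovered.shape)
print(torch.equal(indices, recovered))
```

Using `dim=-1` instead of `dim=2` keeps the call independent of how many leading dimensions the tensor has.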

torch.nn.CrossEntropyLoss

https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html

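This is the usual choice when computing a loss. According to the official examples, the target can be either class indices or class probabilities (for instance, the output of a softmax).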

    import torch
    import torch.nn as nn

    # Example of target with class indices
    loss = nn.CrossEntropyLoss()
    input = torch.randn(3, 5, requires_grad=True)
    target = torch.empty(3, dtype=torch.long).random_(5)
    output = loss(input, target)
    output.backward()

    # Example of target with class probabilities
    input = torch.randn(3, 5, requires_grad=True)
    target = torch.randn(3, 5).softmax(dim=1)
    output = loss(input, target)
    output.backward()
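To see how the two target forms relate, the sketch below feeds the same labels once as class indices and once as one-hot probabilities. It assumes a PyTorch version that accepts probability targets (1.10 or later, as far as I know); the names `logits`, `idx_target`, and `prob_target` are made up for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
criterion = nn.CrossEntropyLoss()

logits = torch.randn(3, 5)                      # [N, C]
idx_target = torch.randint(0, 5, (3,))          # [N], class indices (int64)

# One-hot rows are a degenerate probability distribution.
# Probability targets are expected to be floating point, hence .float()
prob_target = F.one_hot(idx_target, num_classes=5).float()   # [N, C]

loss_idx = criterion(logits, idx_target)
loss_prob = criterion(logits, prob_target)

print(loss_idx.item(), loss_prob.item())
print(torch.allclose(loss_idx, loss_prob))
```

With hard labels, then, converting to one-hot first should not change the loss value, and passing indices is the cheaper option.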

However, its behavior is not quite what I expected:

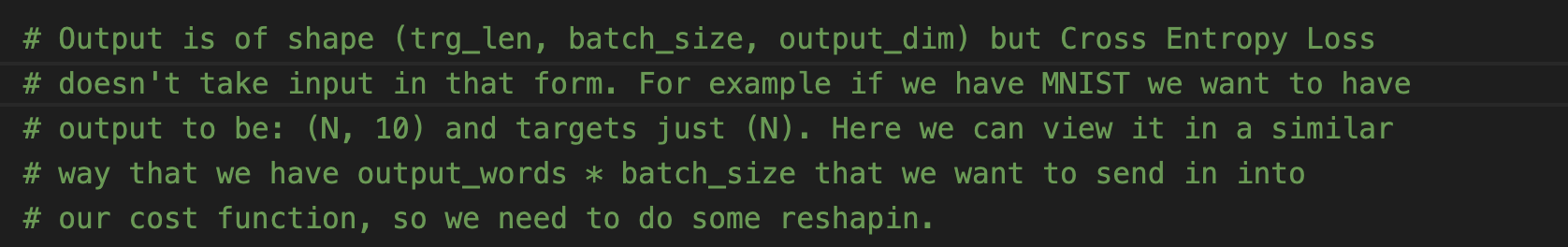

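nn.CrossEntropyLoss expects the class dimension at dim 1, so the input and target need to be reshaped to (N, size) and (N) before the loss is computed.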

This is understandable: with the default reduction, the result of the loss computation should be a single value, not a matrix.
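The "single value" behavior comes from the default `reduction="mean"`. The sketch below, with illustrative sizes and names (`logits`, `target`), compares it against `reduction="none"`, which keeps one loss per sample.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(6, 5)            # [N, C]
target = torch.randint(0, 5, (6,))    # [N]

# Default: average over all N samples, giving a 0-dim tensor
mean_loss = nn.CrossEntropyLoss()(logits, target)

# reduction="none": no averaging, one loss value per sample
per_sample = nn.CrossEntropyLoss(reduction="none")(logits, target)

print(mean_loss.shape, per_sample.shape)
print(torch.allclose(mean_loss, per_sample.mean()))
```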

    # output: [batch_size, len, trg_vocab_size]
    # trg:    [batch_size, len]
    # These cannot be passed to the loss directly;
    # reshape them to the shapes used in the official example first.
    output = output.reshape(-1, trg_vocab_size)
    trg = trg.reshape(-1)
    loss = criterion(output, trg)
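The fragment above can be turned into a self-contained sketch. The sizes and the random `output` tensor are stand-ins for a real model's output (assumptions for illustration only). It also shows an alternative that should be equivalent: since the loss accepts extra dimensions after the class dimension, moving the vocabulary axis to dim 1 with `permute` avoids the flattening.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
batch_size, seq_len, trg_vocab_size = 2, 10, 100   # illustrative sizes
criterion = nn.CrossEntropyLoss()

# Stand-in for a model's output: [batch_size, len, trg_vocab_size]
output = torch.randn(batch_size, seq_len, trg_vocab_size, requires_grad=True)
trg = torch.randint(0, trg_vocab_size, (batch_size, seq_len))   # [batch_size, len]

# Option 1: flatten to (N, C) and (N), as in the post
loss_flat = criterion(output.reshape(-1, trg_vocab_size), trg.reshape(-1))

# Option 2: move the class dimension to dim 1 -> [batch_size, C, len],
# and keep the target as [batch_size, len]
loss_perm = criterion(output.permute(0, 2, 1), trg)

loss_flat.backward()
print(loss_flat.item(), loss_perm.item())
```

Both options average over the same `batch_size * len` positions, so the choice is mostly a matter of readability.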

