A small pitfall when using the Flink Table API

Without further ado, here is the code:

cat info.txt

s_1,1547718120,32.1

s_6,1547718297,17.8

s_7,1547718299,9.5

s_10,1547718205,39.1

s_1,1547718207,37.2

s_3,1547718215,35.6

s_1,1547718217,39.4

s_10,1547718205,40.1

-------- divider ----------------------

package com.flink.study.tableapi

import org.apache.flink.streaming.api.scala._

import org.apache.flink.table.api.scala._

import org.apache.flink.table.api.{EnvironmentSettings, Table}

object TableApiTest01 {

def main(args: Array[String]): Unit = {

val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment

env.setParallelism(1)

val inputstream: DataStream[String] = env.readTextFile("D:\\flink-demo\\src\\main\\resources\\info.txt")

val dataStream: DataStream[InfoReading] = inputstream.map(item => {

val dataArray: Array[String] = item.split(",")

InfoReading(dataArray(0).trim, dataArray(1).trim.toLong, dataArray(2).trim.toDouble)

})

val settings: EnvironmentSettings = EnvironmentSettings.newInstance().useOldPlanner().inStreamingMode().build()

val tableEnv: StreamTableEnvironment = StreamTableEnvironment.create(env, settings)

val table: Table = tableEnv.fromDataStream(dataStream)

//val selectTable: Table = table.select("id, temperature").filter("id = 's_3'")

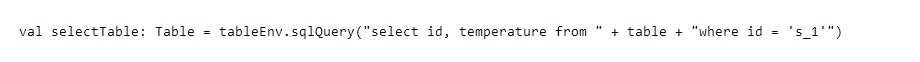

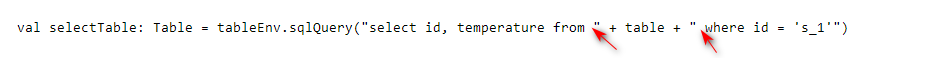

val selectTable: Table = tableEnv.sqlQuery("select id, temperature from " + table + "where id = 's_1'")

val selectStream: DataStream[(String, Double)] = selectTable.toAppendStream[(String, Double)]

println("--------------------------------------------")

selectStream.print()

env.execute("flink tableapi test.")

}

}

case class InfoReading( id: String, timestamp: Long, temperature: Double )

The exception output is as follows:

Connected to the target VM, address: '127.0.0.1:0', transport: 'socket'

SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder".

SLF4J: Defaulting to no-operation (NOP) logger implementation

SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details.

Exception in thread "main" org.apache.flink.table.api.SqlParserException: SQL parse failed. Encountered "=" at line 1, column 52.

Was expecting one of:

<EOF>

"EXCEPT" ...

"FETCH" ...

"GROUP" ...

"HAVING" ...

"INTERSECT" ...

"LIMIT" ...

"OFFSET" ...

"ORDER" ...

"MINUS" ...

"TABLESAMPLE" ...

"UNION" ...

"WHERE" ...

"WINDOW" ...

"(" ...

"NATURAL" ...

"JOIN" ...

"INNER" ...

"LEFT" ...

"RIGHT" ...

"FULL" ...

"CROSS" ...

"," ...

"OUTER" ...

at org.apache.flink.table.calcite.CalciteParser.parse(CalciteParser.java:50)

at org.apache.flink.table.planner.ParserImpl.parse(ParserImpl.java:64)

at org.apache.flink.table.api.internal.TableEnvironmentImpl.sqlQuery(TableEnvironmentImpl.java:464)

at com.flink.study.tableapi.TableApiTest01$.main(TableApiTest01.scala:24)

at com.flink.study.tableapi.TableApiTest01.main(TableApiTest01.scala)

Disconnected from the target VM, address: '127.0.0.1:0', transport: 'socket'

Process finished with exit code 1

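Fun, right? I went through a lot of material and could not see anything wrong with the code. What a headache (I am self-taught and have nobody to ask). After walking through it and debugging it again and again, I finally found the problem: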

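There should be a space before "where" in the string passed to sqlQuery; I typed too fast and dropped it. Without the space, the table name and "where" are concatenated into a single identifier, the parser reads "id" as a table alias, and it then fails on the "=" that follows. A small pitfall, noted here for the record.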
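To make the problem visible without running Flink, here is a minimal sketch that only builds the two query strings. The table name "UnnamedTable$0" is an assumption standing in for whatever name the Table object produces when it is concatenated into a string; the object name SqlSpacingDemo is likewise made up for this illustration.

```scala
object SqlSpacingDemo {
  // Assumed stand-in for the auto-generated name produced by `"..." + table`.
  val tableName: String = "UnnamedTable$0"

  // Original concatenation: no space before "where".
  val broken: String =
    "select id, temperature from " + tableName + "where id = 's_1'"

  // Corrected concatenation: note the leading space in " where".
  val fixed: String =
    "select id, temperature from " + tableName + " where id = 's_1'"

  def main(args: Array[String]): Unit = {
    println(broken) // table name and "where" are glued together
    println(fixed)  // table name and "where" are separate tokens
    // 1-based column of "=" in the broken query
    println(broken.indexOf('=') + 1)
  }
}
```

Under that assumed table name, the "=" in the broken string sits at column 52, which matches the position reported in the exception. Printing the final SQL string before handing it to sqlQuery is a cheap way to catch this kind of mistake.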

Sloppy SQL, tears for your loved ones.

Programs change the world