KVM Virtualization

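Introduction to Virtualization

Virtualization is the foundation of cloud computing. Put simply, virtualization lets a single physical server run multiple virtual machines. The virtual machines share the physical machine's CPU, memory, and I/O hardware, yet they are logically isolated from one another.

The physical machine is usually called the host, and the virtual machines running on it are called guests.

So how does the host virtualize its hardware resources and make them available to guests?

This is done mainly by a program called the hypervisor.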

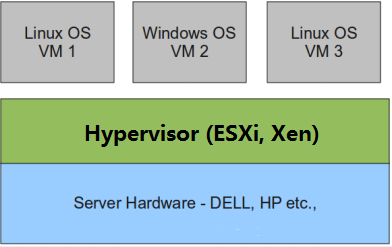

根据 Hypervisor 的实现方式和所处的位置,虚拟化又分为两种:

- 全虚拟化

- 半虚拟化

全虚拟化:

Hypervisor 直接安装在物理机上,多个虚拟机在 Hypervisor 上运行。Hypervisor 实现方式一般是一个特殊定制的 Linux 系统。Xen 和 VMWare 的 ESXi 都属于这个类型

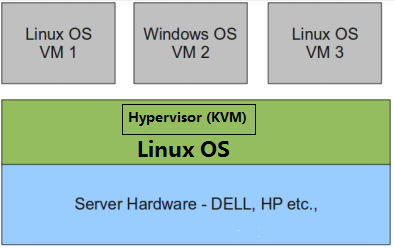

半虚拟化:

物理机上首先安装常规的操作系统,比如 Redhat、Ubuntu 和 Windows。Hypervisor 作为 OS 上的一个程序模块运行,并对管理虚拟机进行管理。KVM、VirtualBox 和 VMWare Workstation 都属于这个类型

理论上讲:

全虚拟化一般对硬件虚拟化功能进行了特别优化,性能上比半虚拟化要高;

半虚拟化因为基于普通的操作系统,会比较灵活,比如支持虚拟机嵌套。嵌套意味着可以在KVM虚拟机中再运行KVM。

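KVM Overview

KVM stands for Kernel-based Virtual Machine; in other words, KVM is implemented on top of the Linux kernel.

KVM has a kernel module called kvm.ko, which only manages virtual CPUs and memory.

I/O virtualization, such as storage and network devices, is handled by the Linux kernel together with QEMU.

As a hypervisor, KVM itself is concerned only with virtual machine scheduling and memory management; I/O peripherals are left to the Linux kernel and QEMU.

Anyone reading about KVM online will often come across Libvirt.

Libvirt is the management tool for KVM.

In fact, besides KVM, Libvirt can also manage other hypervisors such as Xen and VirtualBox.

Libvirt consists of three parts: the background daemon libvirtd, an API library, and the command-line tool virsh.

- libvirtd is the service process that receives and handles API requests;

- the API library lets others build higher-level tools on top of Libvirt, such as virt-manager, a graphical KVM management tool;

- virsh is the KVM command-line tool we use most often.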

KVM Deployment (on CentOS 7)

Environment

| IP address | OS version |
|---|---|
| 192.168.110.15 | CentOS 7 |

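Preparation

CPU Virtualization

Before deploying, make sure CPU virtualization is enabled. There are two cases:

- On a virtual machine, shut it down and enable CPU virtualization in its settings

- On a physical machine, enable CPU virtualization in the BIOS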

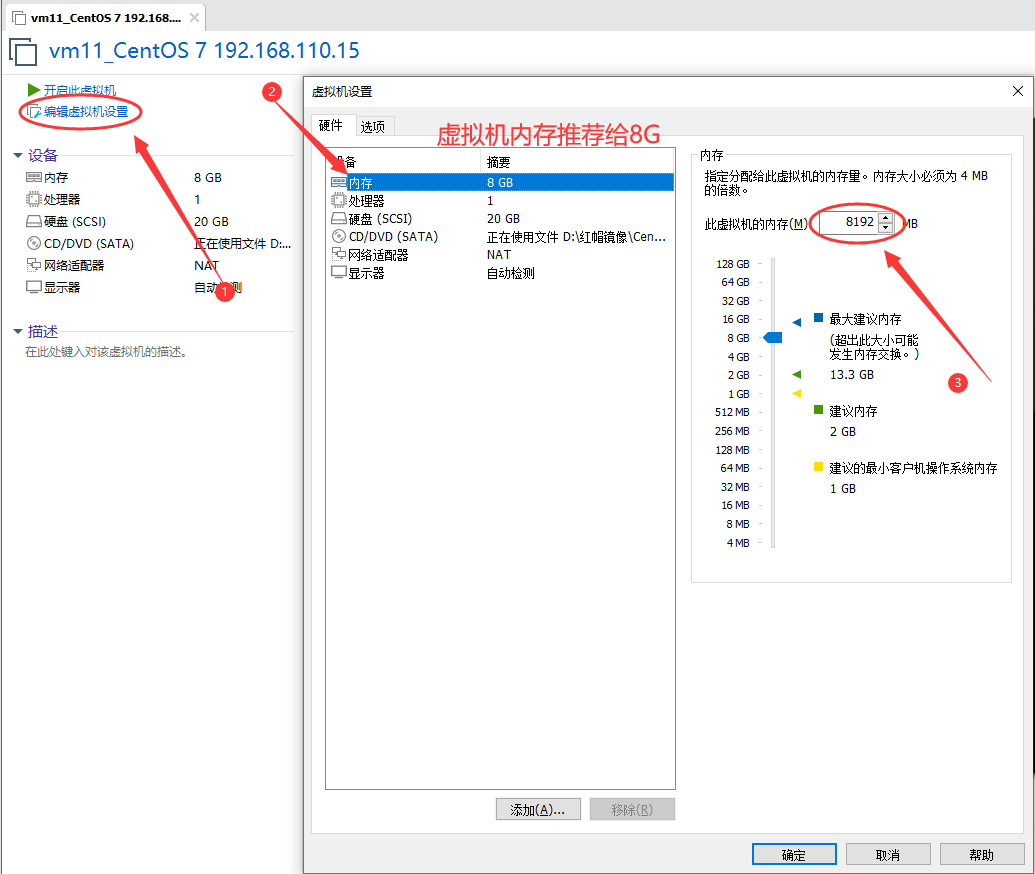

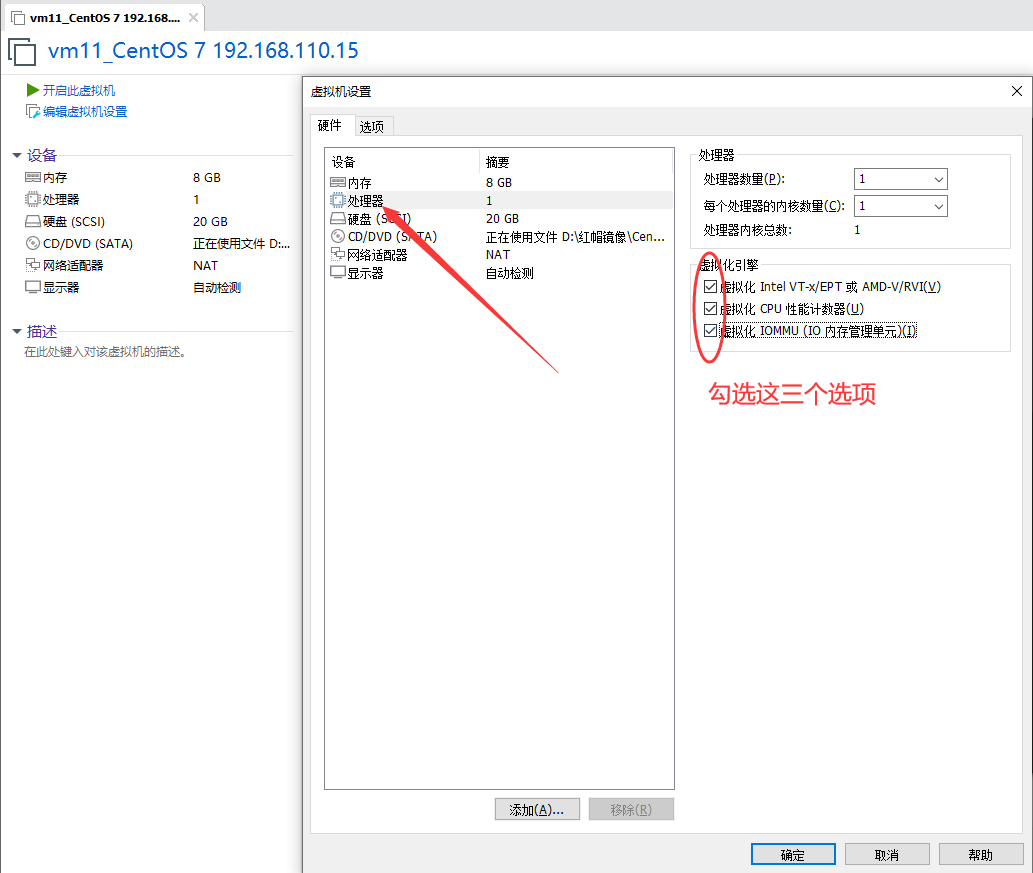

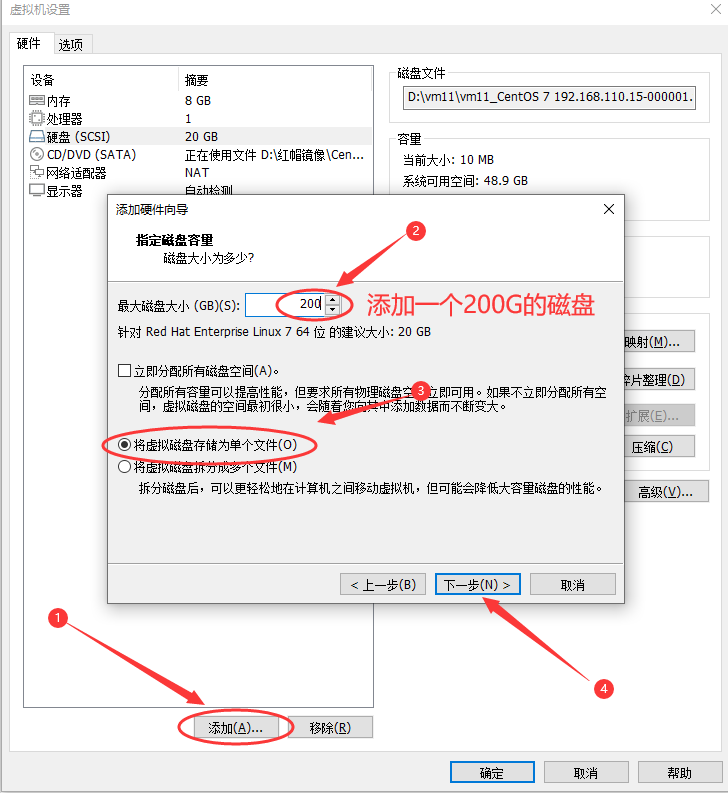

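Virtual machine settings (memory: 8 GB, disk: 200 GB, virtualization: enabled)

- Set the virtual machine's memory to 8 GB

- Enable virtualization

- Add a 200 GB disk

// Partition the newly added disk

# View the current disk layout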

[root@localhost ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sdb 8:16 0 200G 0 disk

sr0 11:0 1 4.2G 0 rom

# Partition sdb

[root@localhost ~]# fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x9ac61ddf.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (2048-419430399, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-419430399, default 419430399):

Using default value 419430399

Partition 1 of type Linux and of size 200 GiB is set

Command (m for help): p

Disk /dev/sdb: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x9ac61ddf

Device Boot Start End Blocks Id System

/dev/sdb1 2048 419430399 209714176 83 Linux

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

# Refresh the partition table

[root@localhost ~]# partprobe

Warning: Unable to open /dev/sr0 read-write (Read-only file system). /dev/sr0 has been opened read-only.

# Format sdb1 as XFS

[root@localhost ~]# mkfs.xfs /dev/sdb1

meta-data=/dev/sdb1 isize=512 agcount=4, agsize=13107136 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=52428544, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=25599, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# Look up the UUID

[root@localhost ~]# blkid /dev/sdb1

/dev/sdb1: UUID="709d3658-a048-45c7-b6fe-078bc934fb85" TYPE="xfs"

# Mount automatically at boot

[root@localhost ~]# vim /etc/fstab

... add the following as the last line ...

UUID="709d3658-a048-45c7-b6fe-078bc934fb85" /kvmdata xfs defaults 0 0

# Create the mount point

[root@localhost ~]# mkdir /kvmdata

# Mount it and confirm it succeeded

[root@localhost ~]# mount -a

[root@localhost ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 1.5G 16G 9% /

devtmpfs 3.9G 0 3.9G 0% /dev

tmpfs 3.9G 0 3.9G 0% /dev/shm

tmpfs 3.9G 8.6M 3.9G 1% /run

tmpfs 3.9G 0 3.9G 0% /sys/fs/cgroup

/dev/sda1 1014M 143M 872M 15% /boot

tmpfs 781M 0 781M 0% /run/user/0

/dev/sdb1 200G 33M 200G 1% /kvmdata
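Editing /etc/fstab by hand is easy to get wrong when the steps are repeated. The following is a minimal sketch of an idempotent append, assuming a plain fstab-style file; `add_fstab_entry` and its argument order are hypothetical names chosen for illustration, not part of any standard tool.

```shell
# Sketch: append a UUID-based mount entry to an fstab-style file only if the
# mount point is not already listed. add_fstab_entry is a hypothetical helper.
add_fstab_entry() {
    local fstab="$1" uuid="$2" mountpoint="$3" fstype="$4"

    # An uncommented line whose second field equals the mount point counts as present.
    if awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        return 0
    fi

    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mountpoint" "$fstype" >> "$fstab"
}

# Example with the values from the transcript above (run as root):
# add_fstab_entry /etc/fstab 709d3658-a048-45c7-b6fe-078bc934fb85 /kvmdata xfs && mount -a
```

Calling the function twice with the same mount point leaves a single entry, so it is safe to rerun.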

KVM Installation

// Disable the firewall and SELinux

[root@localhost ~]# systemctl disable --now firewalld

[root@localhost ~]# setenforce 0

[root@localhost ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@localhost ~]# reboot

// Install common utility packages

[root@localhost ~]# yum -y install epel-release vim wget net-tools unzip zip gcc gcc-c++

// Verify that the CPU supports KVM; if the output contains vmx (Intel) or svm (AMD), it does

[root@localhost ~]# egrep -o 'vmx|svm' /proc/cpuinfo

vmx
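The same check can be wrapped in a small function for use in scripts. This is a minimal sketch; `detect_virt_ext` and the labels it prints are hypothetical, and it assumes the flags appear as whole words in a cpuinfo-style file.

```shell
# Sketch: report which hardware virtualization extension a cpuinfo-style file
# advertises. detect_virt_ext and its output labels are hypothetical.
detect_virt_ext() {
    local cpuinfo="${1:-/proc/cpuinfo}"

    if grep -qw 'vmx' "$cpuinfo"; then
        echo "intel-vt-x"      # Intel VT-x
    elif grep -qw 'svm' "$cpuinfo"; then
        echo "amd-v"           # AMD-V
    else
        echo "none"
        return 1               # non-zero status: KVM cannot use hardware acceleration
    fi
}

# Example: detect_virt_ext || echo "enable virtualization in the BIOS or VM settings first"
```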

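// Install KVM

[root@localhost ~]# yum -y install qemu-kvm qemu-kvm-tools qemu-img virt-manager libvirt libvirt-python libvirt-client virt-install virt-viewer bridge-utils libguestfs-tools

... wait for the installation to finish ...

// The virtual machines usually need to be on the same subnet as the company's other servers, so the KVM host's NIC has to be configured in bridge mode; the KVM guests can then reach the same subnet as the other internal servers through the bridge.

# The NIC here is ens32, so br0 is used to bridge ens32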

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:7f:9a:1c brd ff:ff:ff:ff:ff:ff

inet 192.168.110.15/24 brd 192.168.110.255 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe7f:9a1c/64 scope link

valid_lft forever preferred_lft forever

# Create the br0 config file by copying the NIC's config file

[root@localhost ~]# cd /etc/sysconfig/network-scripts/

[root@localhost network-scripts]# cp ifcfg-ens32 ifcfg-br0

# Configure br0

[root@localhost network-scripts]# vim ifcfg-br0

TYPE=Bridge

BOOTPROTO=static

NAME=br0

DEVICE=br0

ONBOOT=yes

IPADDR=192.168.110.15

PREFIX=24

GATEWAY=192.168.110.2

DNS1=114.114.114.114

# Configure ens32

[root@localhost network-scripts]# vim ifcfg-ens32

TYPE=Ethernet

BOOTPROTO=static

NAME=ens32

DEVICE=ens32

ONBOOT=yes

BRIDGE=br0
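The two files above can also be generated from a script, which avoids typos when the same setup is repeated on several hosts. This is a minimal sketch under the assumption that the settings shown above are all that is needed; `write_bridge_cfg` and its argument order are hypothetical.

```shell
# Sketch: generate the ifcfg files for a bridge and its enslaved NIC.
# write_bridge_cfg and its argument order are hypothetical.
write_bridge_cfg() {
    local dir="$1" nic="$2" bridge="$3" ip="$4" prefix="$5" gateway="$6" dns="$7"

    # The bridge carries the IP configuration.
    cat > "$dir/ifcfg-$bridge" <<EOF
TYPE=Bridge
BOOTPROTO=static
NAME=$bridge
DEVICE=$bridge
ONBOOT=yes
IPADDR=$ip
PREFIX=$prefix
GATEWAY=$gateway
DNS1=$dns
EOF

    # The physical NIC keeps no address and only points at the bridge.
    cat > "$dir/ifcfg-$nic" <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$nic
DEVICE=$nic
ONBOOT=yes
BRIDGE=$bridge
EOF
}

# Example with the values used in this article (run as root):
# write_bridge_cfg /etc/sysconfig/network-scripts ens32 br0 192.168.110.15 24 192.168.110.2 114.114.114.114
```

The key design point is that the IP address moves from the physical NIC to the bridge; the NIC file only names its bridge.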

// Restart the network

[root@localhost network-scripts]# systemctl restart network

[root@localhost network-scripts]# reboot

[root@localhost ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master br0 state UP qlen 1000

link/ether 00:0c:29:7f:9a:1c brd ff:ff:ff:ff:ff:ff

3: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP qlen 1000

link/ether 00:0c:29:7f:9a:1c brd ff:ff:ff:ff:ff:ff

inet 192.168.110.15/24 brd 192.168.110.255 scope global br0

valid_lft forever preferred_lft forever

inet6 fe80::4cf1:c7ff:fe12:e3c8/64 scope link

valid_lft forever preferred_lft forever

4: virbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN qlen 1000

link/ether 52:54:00:8f:fd:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

5: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN qlen 1000

link/ether 52:54:00:8f:fd:99 brd ff:ff:ff:ff:ff:ff

// Start the libvirt service

[root@localhost ~]# systemctl enable --now libvirtd

// Verify the installation

[root@localhost ~]# lsmod|grep kvm

kvm_intel 170086 0

kvm 566340 1 kvm_intel

irqbypass 13503 1 kvm

// Test and verify the installation

[root@localhost ~]# virsh -c qemu:///system list

Id Name State

----------------------------------------------------

[root@localhost ~]# virsh --version

4.5.0

[root@localhost ~]# virt-install --version

1.5.0

[root@localhost ~]# ln -s /usr/libexec/qemu-kvm /usr/bin/qemu-kvm

[root@localhost ~]# ll /usr/bin/qemu-kvm

lrwxrwxrwx 1 root root 21 May 24 01:25 /usr/bin/qemu-kvm -> /usr/libexec/qemu-kvm

// Show bridge information

[root@localhost ~]# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000c297f9a1c no ens32

virbr0 8000.5254008ffd99 yes virbr0-nic
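To confirm from a script that the NIC really is attached to the bridge, the `master` field in `ip -o link show` output can be parsed. This is a minimal sketch; `nic_master` is a hypothetical helper that reads that output on stdin.

```shell
# Sketch: print the bridge (master) a NIC is enslaved to, reading
# `ip -o link show` output on stdin. nic_master is a hypothetical helper.
nic_master() {
    local nic="$1"
    awk -v nic="$nic:" '
        $2 == nic {
            # Scan the remaining fields for the "master <bridge>" pair.
            for (i = 3; i < NF; i++)
                if ($i == "master") { print $(i + 1); found = 1; exit }
        }
        END { exit !found }
    '
}

# Example: ip -o link show | nic_master ens32
```

The function exits with a non-zero status when the NIC is missing or has no master, so it can be used directly in an `if`.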

Installing the KVM Management Interface

The web management interface for KVM is provided by webvirtmgr.

// Install the dependencies

[root@localhost ~]# yum -y install git python-pip libvirt-python libxml2-python python-websockify supervisor nginx python-devel

// Download the webvirtmgr code from GitHub

[root@localhost ~]# cd /usr/local/src/

[root@localhost src]# git clone https://github.com/retspen/webvirtmgr.git

Cloning into 'webvirtmgr'...

remote: Enumerating objects: 5614, done.

remote: Total 5614 (delta 0), reused 0 (delta 0), pack-reused 5614

Receiving objects: 100% (5614/5614), 2.97 MiB | 1.46 MiB/s, done.

Resolving deltas: 100% (3606/3606), done.

// Install webvirtmgr

[root@localhost src]# cd webvirtmgr/

[root@localhost webvirtmgr]# pip install -r requirements.txt

Collecting django==1.5.5 (from -r requirements.txt (line 1))

Downloading https://files.pythonhosted.org/packages/38/49/93511c5d3367b6b21fc2995a0e53399721afc15e4cd6eb57be879ae13ad4/Django-1.5.5.tar.gz (8.1MB)

100% |████████████████████████████████| 8.1MB 170kB/s

Collecting gunicorn==19.5.0 (from -r requirements.txt (line 2))

Downloading https://files.pythonhosted.org/packages/f9/4e/f4076a1a57fc1e75edc0828db365cfa9005f9f6b4a51b489ae39a91eb4be/gunicorn-19.5.0-py2.py3-none-any.whl (113kB)

100% |████████████████████████████████| 122kB 2.7MB/s

Collecting lockfile>=0.9 (from -r requirements.txt (line 5))

Downloading https://files.pythonhosted.org/packages/c8/22/9460e311f340cb62d26a38c419b1381b8593b0bb6b5d1f056938b086d362/lockfile-0.12.2-py2.py3-none-any.whl

Installing collected packages: django, gunicorn, lockfile

Running setup.py install for django ... done

Successfully installed django-1.5.5 gunicorn-19.5.0 lockfile-0.12.2

You are using pip version 8.1.2, however version 21.1.2 is available.

You should consider upgrading via the 'pip install --upgrade pip' command. // this is only an upgrade suggestion and can be ignored

// Check that sqlite3 is installed

[root@localhost webvirtmgr]# python

Python 2.7.5 (default, Nov 16 2020, 22:23:17)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import sqlite3

>>> exit()

// Initialize the account information

[root@localhost webvirtmgr]# python manage.py syncdb

WARNING:root:No local_settings file found.

Creating tables ...

Creating table auth_permission

Creating table auth_group_permissions

Creating table auth_group

Creating table auth_user_groups

Creating table auth_user_user_permissions

Creating table auth_user

Creating table django_content_type

Creating table django_session

Creating table django_site

Creating table servers_compute

Creating table instance_instance

Creating table create_flavor

You just installed Django's auth system, which means you don't have any superusers defined.

Would you like to create one now? (yes/no): yes // whether to create a superuser account

Username (leave blank to use 'root'): admin // superuser name; leaving it blank defaults to root

Email address: leidazhuang123@163.com // superuser email address

Password: // set the superuser password

Password (again): // enter the superuser password again

Superuser created successfully.

Installing custom SQL ...

Installing indexes ...

Installed 6 object(s) from 1 fixture(s)

// Copy the web application to the target directory

[root@localhost webvirtmgr]# mkdir -p /var/www

[root@localhost webvirtmgr]# cp -r /usr/local/src/webvirtmgr /var/www/

[root@localhost webvirtmgr]# chown -R nginx.nginx /var/www/webvirtmgr/

// Generate a key pair (press Enter at every prompt)

[root@localhost ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:OKLRcPd0eDRBh24K7Lfj9SHsCZhOnr6QDi7CQhvLQ0U root@localhost.localdomain

The key's randomart image is:

+---[RSA 2048]----+

| .=o. |

| E o.o |

| o ... o.o |

| = .o+ oo |

| o o.o.So |

| + o o.+o. |

|= * o +...+ . |

|+B o = .o+ + . |

|o.o ..*o..o . |

+----[SHA256]-----+

// webvirtmgr and KVM are deployed on the same machine here, so the key is trusted locally. If KVM runs on another machine, use that machine's IP instead

[root@localhost ~]# ssh-copy-id 192.168.110.15

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host '192.168.110.15 (192.168.110.15)' can't be established.

ECDSA key fingerprint is SHA256:QUwyaJ6fccvhuHkUz6j4xpAcLCq9aOR7yt46V6GZUXU.

ECDSA key fingerprint is MD5:fb:a4:12:46:78:56:c6:c2:e9:2d:95:2b:42:07:bd:38.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@192.168.110.15's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh '192.168.110.15'"

and check to make sure that only the key(s) you wanted were added.

// Configure port forwarding

[root@localhost ~]# ssh 192.168.110.15 -L localhost:8000:localhost:8000 -L localhost:6080:localhost:60

Last login: Mon May 24 01:24:08 2021 from 192.168.110.1

[root@localhost ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:111 *:*

LISTEN 0 5 192.168.122.1:53 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 127.0.0.1:6010 *:*

LISTEN 0 128 127.0.0.1:6080 *:*

LISTEN 0 128 127.0.0.1:8000 *:*

LISTEN 0 128 :::111 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 128 ::1:6010 :::*

LISTEN 0 128 ::1:6080 :::*

LISTEN 0 128 ::1:8000 :::*

// Configure nginx

[root@localhost ~]# cp /etc/nginx/nginx.conf{,.bak}

[root@localhost ~]# vim /etc/nginx/nginx.conf

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

worker_rlimit_nofile 655350;

include /usr/share/nginx/modules/*.conf;

events {

worker_connections 1024;

}

http {

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 2048;

include /etc/nginx/mime.types;

default_type application/octet-stream;

include /etc/nginx/conf.d/*.conf;

server {

listen 80;

server_name localhost;

include /etc/nginx/default.d/*.conf;

location / {

root html;

index index.html index.htm;

}

error_page 404 /404.html;

location = /40x.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

}

# Virtual host

[root@localhost ~]# vim /etc/nginx/conf.d/webvirtmgr.conf

server {

listen 80 default_server;

server_name $hostname;

#access_log /var/log/nginx/webvirtmgr_access_log;

location /static/ {

root /var/www/webvirtmgr/webvirtmgr;

expires max;

}

location / {

proxy_pass http://127.0.0.1:8000;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-for $proxy_add_x_forwarded_for;

proxy_set_header Host $host:$server_port;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_connect_timeout 600;

proxy_read_timeout 600;

proxy_send_timeout 600;

client_max_body_size 1024M;

}

}

// Make sure bind points at local port 8000

[root@localhost ~]# vim /var/www/webvirtmgr/conf/gunicorn.conf.py

...

bind = '0.0.0.0:8000'  # make sure this is local port 8000, the port nginx proxies to (proxy_pass in the nginx config)

backlog = 2048

...
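Because the gunicorn bind port and the nginx proxy_pass port must agree, a quick scripted comparison can catch a mismatch. This is a minimal sketch; the three helper names are hypothetical, and the parsing assumes the simple single-line forms shown in this article.

```shell
# Sketch: compare the port gunicorn binds to with the port nginx proxies to.
# All three helper names are hypothetical.

# Print the port from a line such as: bind = '127.0.0.1:8000'
gunicorn_port() {
    sed -nE "s#^bind[[:space:]]*=[[:space:]]*['\"][^:'\"]*:([0-9]+)['\"].*#\1#p" "$1" | head -n 1
}

# Print the port from a line such as: proxy_pass http://127.0.0.1:8000;
nginx_proxy_port() {
    sed -nE 's#^[[:space:]]*proxy_pass[[:space:]]+https?://[^:;/]+:([0-9]+).*#\1#p' "$1" | head -n 1
}

# Succeed only if both ports were found and are equal.
ports_match() {
    local g n
    g="$(gunicorn_port "$1")"
    n="$(nginx_proxy_port "$2")"
    [ -n "$g" ] && [ "$g" = "$n" ]
}

# Example with the paths used in this article:
# ports_match /var/www/webvirtmgr/conf/gunicorn.conf.py /etc/nginx/conf.d/webvirtmgr.conf || echo "port mismatch"
```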

// Start nginx and enable it at boot

[root@localhost ~]# systemctl enable --now nginx

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@localhost ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:111 *:*

LISTEN 0 128 *:80 *:*

LISTEN 0 5 192.168.122.1:53 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 127.0.0.1:6010 *:*

LISTEN 0 128 127.0.0.1:6080 *:*

LISTEN 0 128 127.0.0.1:8000 *:*

LISTEN 0 128 :::111 :::*

LISTEN 0 128 :::22 :::*

LISTEN 0 100 ::1:25 :::*

LISTEN 0 128 ::1:6010 :::*

LISTEN 0 128 ::1:6080 :::*

LISTEN 0 128 ::1:8000 :::*

// Configure supervisor

[root@localhost ~]# vim /etc/supervisord.conf

..... (content above omitted) append the following at the end of the file

[program:webvirtmgr]

command=/usr/bin/python2 /var/www/webvirtmgr/manage.py run_gunicorn -c /var/www/webvirtmgr/conf/gunicorn.conf.py

directory=/var/www/webvirtmgr

autostart=true

autorestart=true

stdout_logfile=/var/log/supervisor/webvirtmgr.log

redirect_stderr=true

user=nginx

[program:webvirtmgr-console]

command=/usr/bin/python2 /var/www/webvirtmgr/console/webvirtmgr-console

directory=/var/www/webvirtmgr

autostart=true

autorestart=true

stdout_logfile=/var/log/supervisor/webvirtmgr-console.log

redirect_stderr=true

user=nginx

// Start supervisor and enable it at boot

[root@localhost ~]# systemctl enable --now supervisord

Created symlink from /etc/systemd/system/multi-user.target.wants/supervisord.service to /usr/lib/systemd/system/supervisord.service.

[root@localhost ~]# systemctl status supervisord

● supervisord.service - Process Monitoring and Control Daemon

Loaded: loaded (/usr/lib/systemd/system/supervisord.service; enabled; vendor preset: disabled)

Active: active (running) since Mon 2021-05-24 01:46:32 CST; 7s ago

Process: 12461 ExecStart=/usr/bin/supervisord -c /etc/supervisord.conf (code=exited, status=0/SUCCESS)

Main PID: 12464 (supervisord)

Tasks: 2

CGroup: /system.slice/supervisord.service

├─12464 /usr/bin/python /usr/bin/supervisord -c /etc/supervisord.conf

└─12476 /usr/bin/python2 /var/www/webvirtmgr/manage.py run_gunicorn -c ...

May 24 01:46:32 localhost.localdomain systemd[1]: Starting Process Monitoring and....

May 24 01:46:32 localhost.localdomain systemd[1]: Started Process Monitoring and ....

Hint: Some lines were ellipsized, use -l to show in full.

// Set up the nginx user

[root@localhost ~]# su - nginx -s /bin/bash

-bash-4.2$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/var/lib/nginx/.ssh/id_rsa):

Created directory '/var/lib/nginx/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /var/lib/nginx/.ssh/id_rsa.

Your public key has been saved in /var/lib/nginx/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:d1cDlpmMACHVccIr/D8ZGM3F8NQG5YbESVWbP1Sl+e8 nginx@localhost.localdomain

The key's randomart image is:

+---[RSA 2048]----+

| ..+=+ooBBXo*|

| . oo.=X+=+|

| . + .o+B.|

| o o o ooo|

| S + . ..o|

| + o . o|

| . o .|

| + . |

| . E|

+----[SHA256]-----+

-bash-4.2$ touch ~/.ssh/config && echo -e "StrictHostKeyChecking=no\nUserKnownHostsFile=/dev/null" >> ~/.ssh/config

-bash-4.2$ chmod 0600 ~/.ssh/config

-bash-4.2$ ssh-copy-id root@192.168.110.15

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/var/lib/nginx/.ssh/id_rsa.pub"

/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Warning: Permanently added '192.168.110.15' (ECDSA) to the list of known hosts.

root@192.168.110.15's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@192.168.110.15'"

and check to make sure that only the key(s) you wanted were added.

-bash-4.2$ exit

logout

[root@localhost ~]# vim /etc/polkit-1/localauthority/50-local.d/50-libvirt-remote-access.pkla

[Remote libvirt SSH access]

Identity=unix-user:root

Action=org.libvirt.unix.manage

ResultAny=yes

ResultInactive=yes

ResultActive=yes

[root@localhost ~]# chown -R root.root /etc/polkit-1/localauthority/50-local.d/50-libvirt-remote-access.pkla

# Restart the services

[root@localhost ~]# systemctl restart nginx

[root@localhost ~]# systemctl restart libvirtd

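Troubleshooting

After completing the steps above, you may run into one of the following two problems when visiting 192.168.110.15:

1. Case one

The first time you access KVM through the web, the page may never load and keep spinning, while the terminal keeps printing the error "Too many open files"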

[root@localhost ~]# accept: Too many open files

accept: Too many open files

accept: Too many open files

accept: Too many open files

......

In this case nginx needs to be reconfigured

[root@localhost ~]# vim /etc/nginx/nginx.conf

# Top of the file

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log;

pid /run/nginx.pid;

worker_rlimit_nofile 655350; # add this line

Then adjust the system limits

[root@localhost ~]# vim /etc/security/limits.conf

.... N lines omitted here

# End of file

* soft nofile 655350

* hard nofile 655350

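Restart the service so the new settings are read. Note that sysctl -p only reloads /etc/sysctl.conf; the limits.conf change applies to new login sessions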

[root@localhost ~]# sysctl -p

[root@localhost ~]# systemctl restart nginx
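To check that the higher limit actually reached a running process, the "Max open files" row of /proc/<pid>/limits can be read. This is a minimal sketch; `soft_nofile` is a hypothetical helper, and the example assumes the nginx worker's process title contains "nginx: worker process".

```shell
# Sketch: read the soft "Max open files" limit from a /proc/<pid>/limits file.
# soft_nofile is a hypothetical helper.
soft_nofile() {
    # Columns are: Limit name (three words), soft limit, hard limit, units.
    awk '/^Max open files/ { print $4 }' "$1"
}

# Example: inspect one nginx worker, since worker_rlimit_nofile applies to workers:
# soft_nofile "/proc/$(pgrep -f 'nginx: worker process' | head -n 1)/limits"
```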

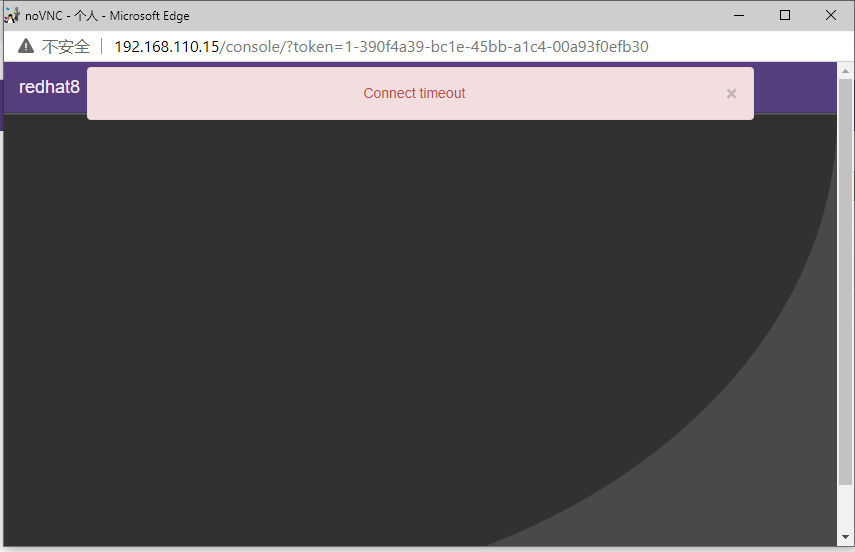

2. Case two

After the web interface is set up, an error page may appear

The fix is to install novnc and start a VNC service with novnc_server

[root@localhost ~]# yum -y install novnc

[root@localhost ~]# ll /etc/rc.local

lrwxrwxrwx. 1 root root 13 May 23 17:18 /etc/rc.local -> rc.d/rc.local

[root@localhost ~]# ll /etc/rc.d/rc.local

-rw-r--r--. 1 root root 473 Aug 5 2017 /etc/rc.d/rc.local

[root@localhost ~]# chmod +x /etc/rc.d/rc.local

[root@localhost ~]# ll /etc/rc.d/rc.local

-rwxr-xr-x. 1 root root 473 Aug 5 2017 /etc/rc.d/rc.local

[root@localhost ~]# vim /etc/rc.d/rc.local

······

touch /var/lock/subsys/local

# Append the following at the end

nohup novnc_server 192.168.110.15:5920 &

[root@localhost ~]# . /etc/rc.d/rc.local

... (running) ...

After the steps above, the page loads normally on the next visit

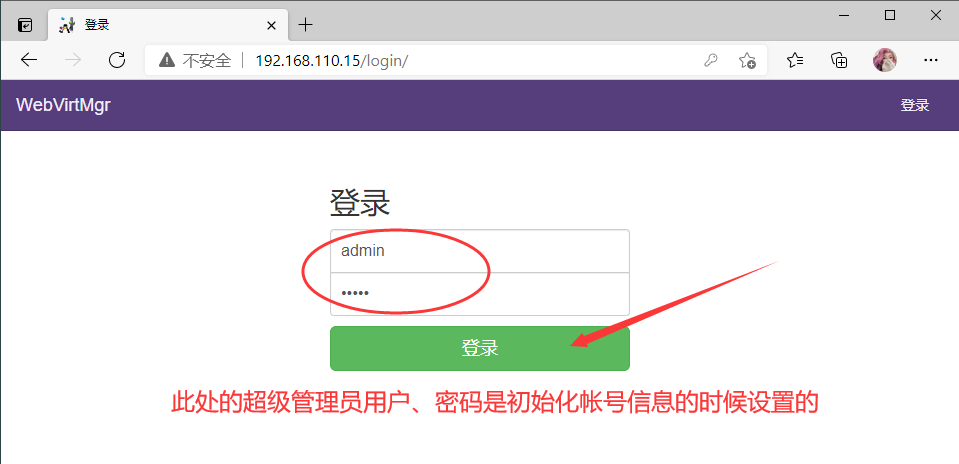

KVM Web Interface Management

Open KVM in a browser by IP address; in this example: http://192.168.110.15

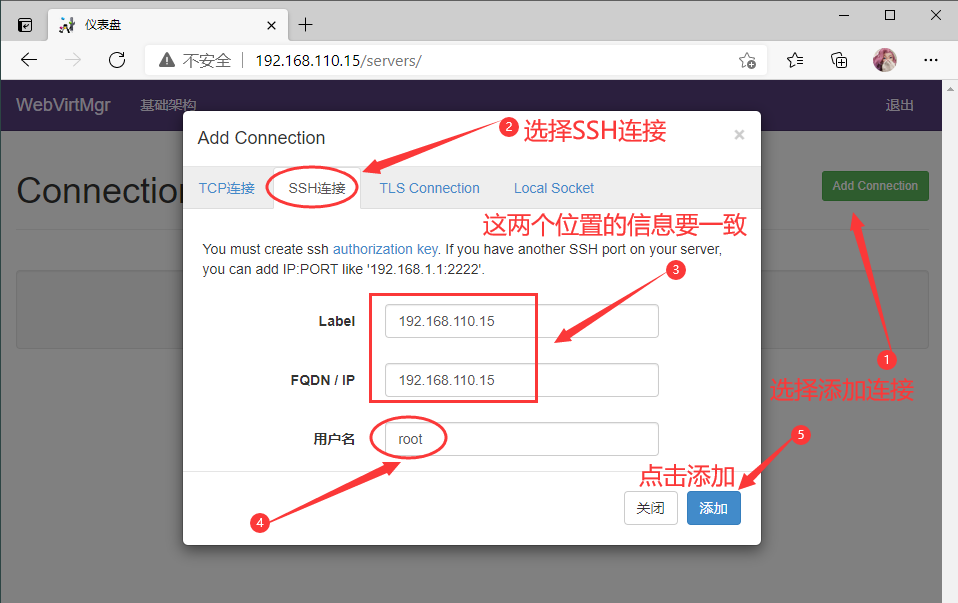

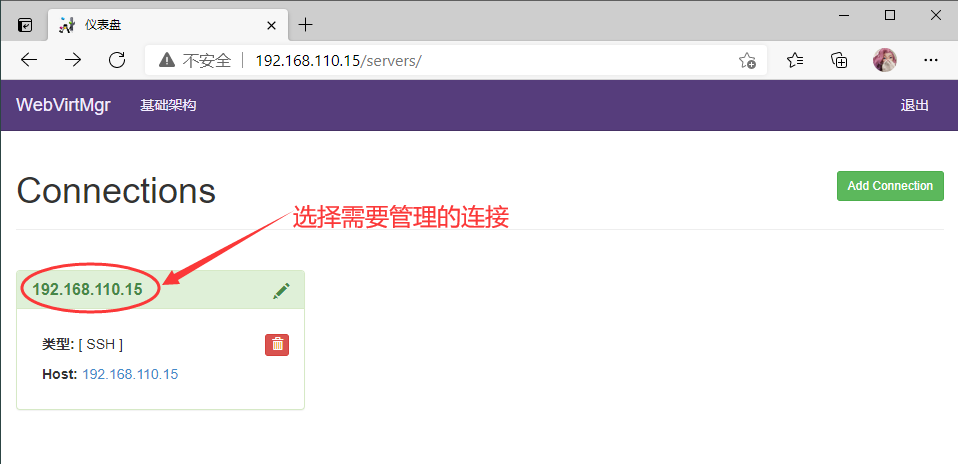

KVM Connection Management

Create an SSH connection

Manage the connection

The management interface looks like this

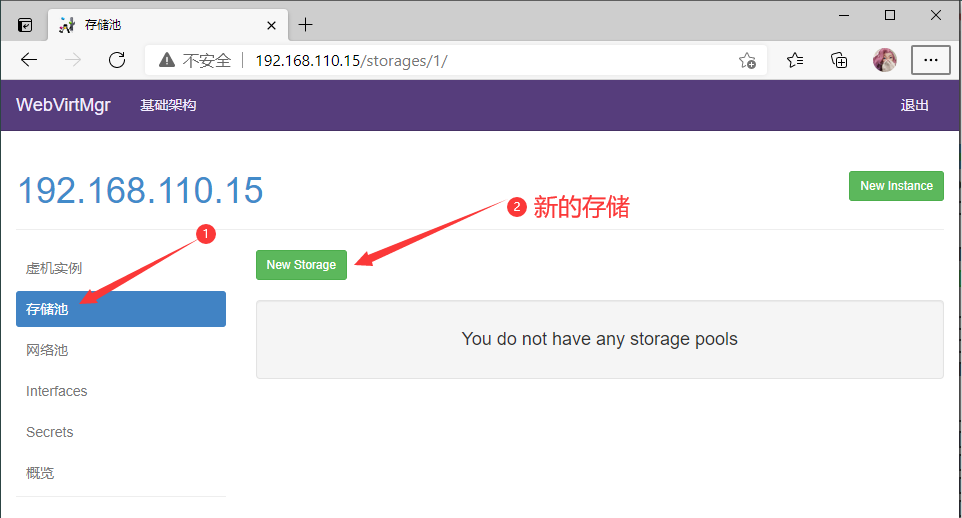

KVM存储管理

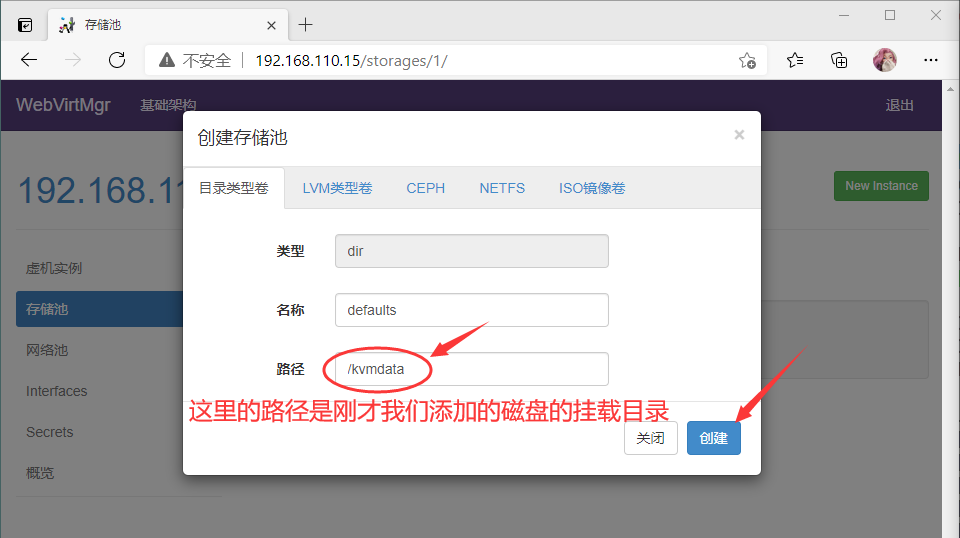

创建存储

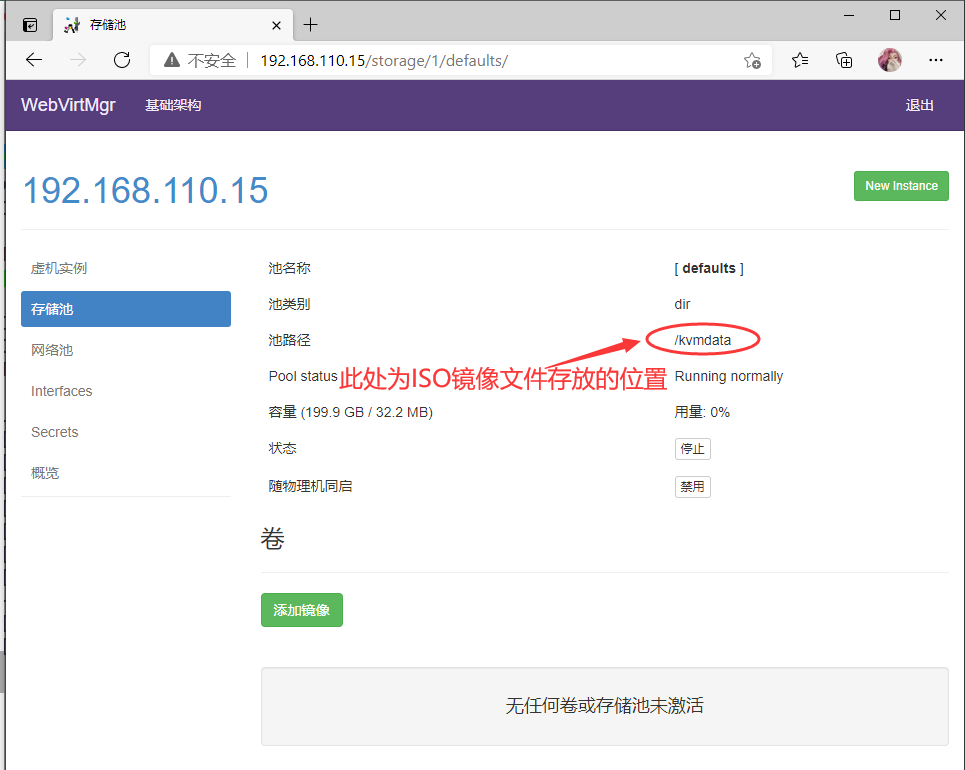

选项界面

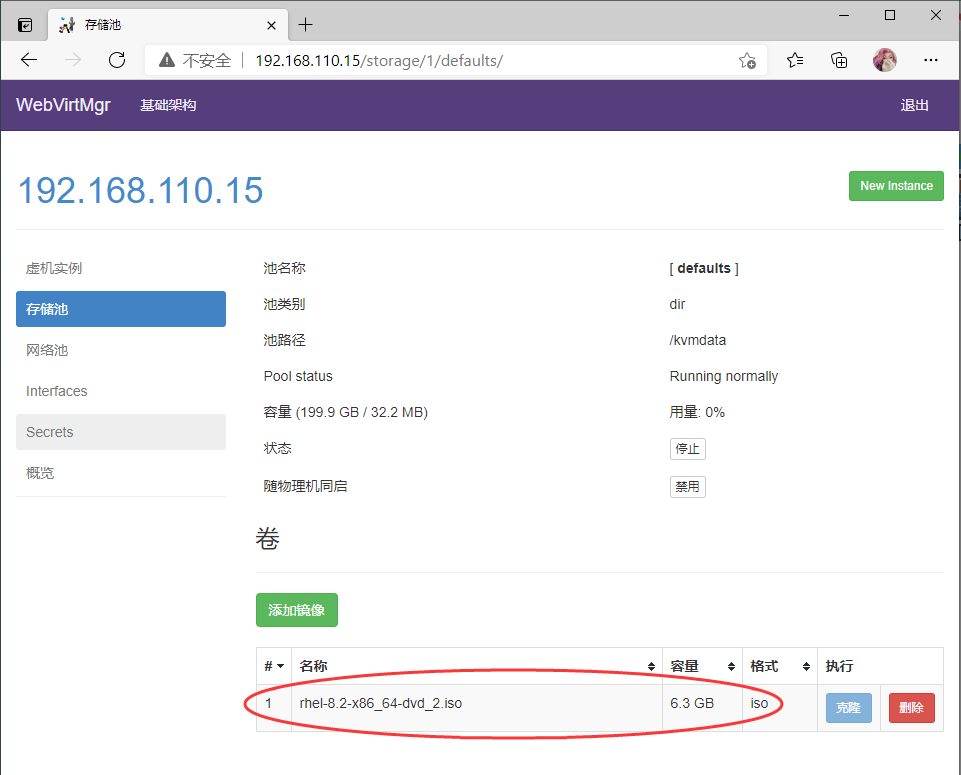

存储池详细信息

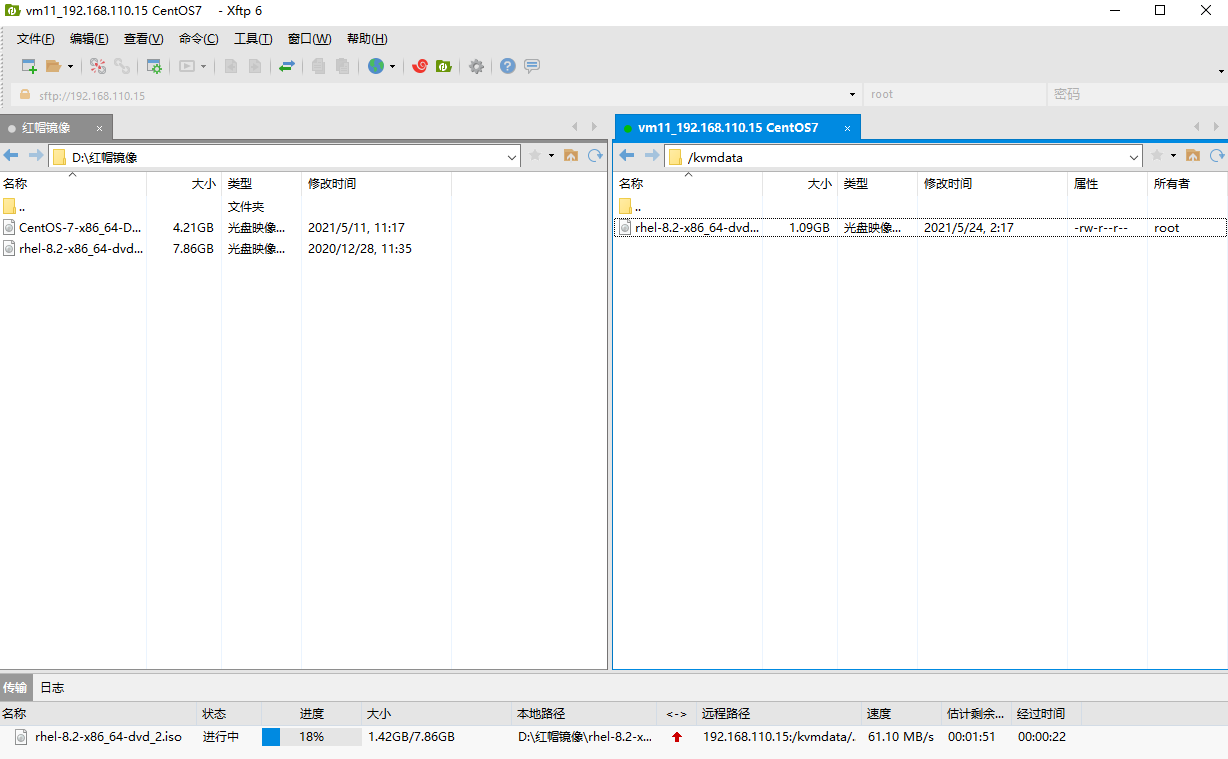

使用xftp把镜像文件传到存放的目录(/kvmdata)

#查看一下[root@localhost ~]# ls /kvmdata/rhel-8.2-x86_64-dvd_2.iso

刷新web界面查看镜像是否传输成功

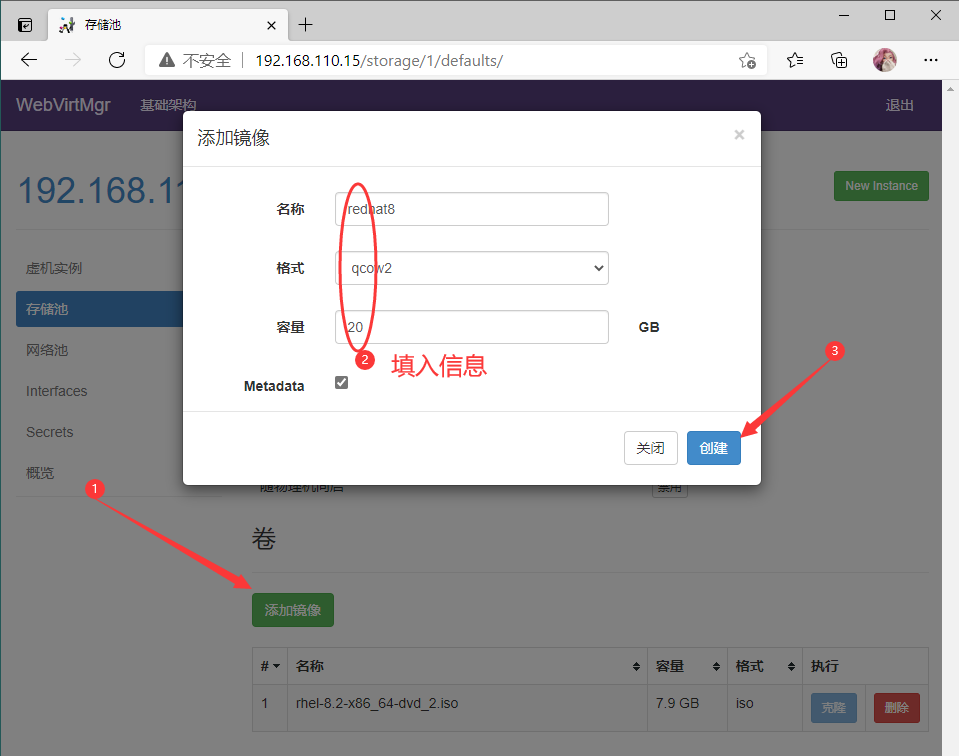

创建系统安装镜像

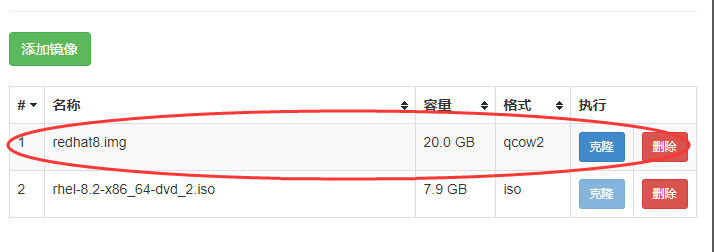

添加成功

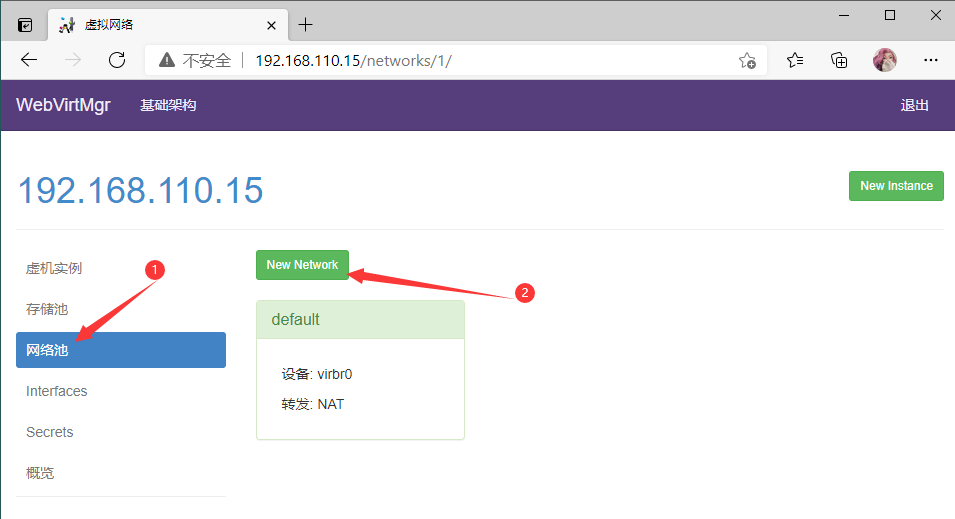

KVM网络管理

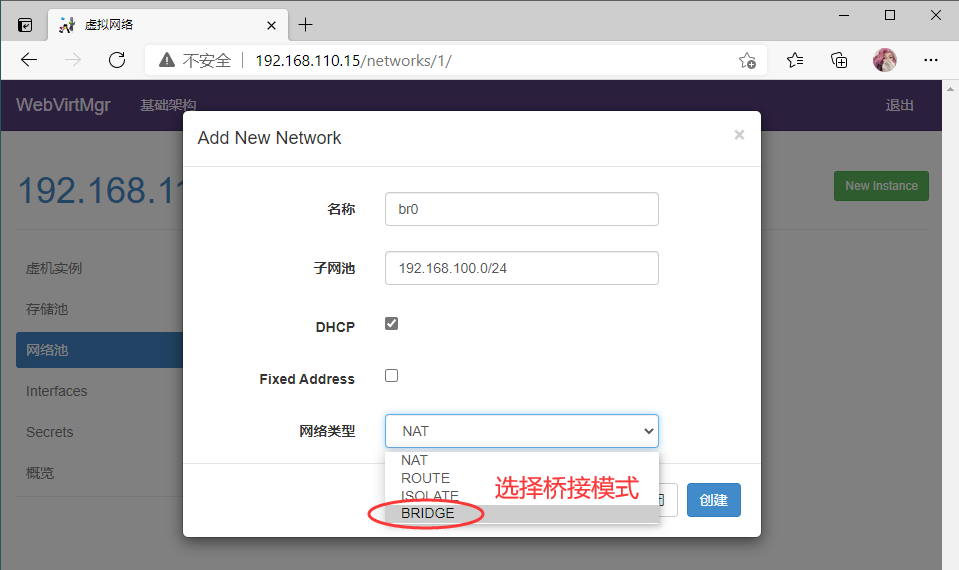

添加桥接网络

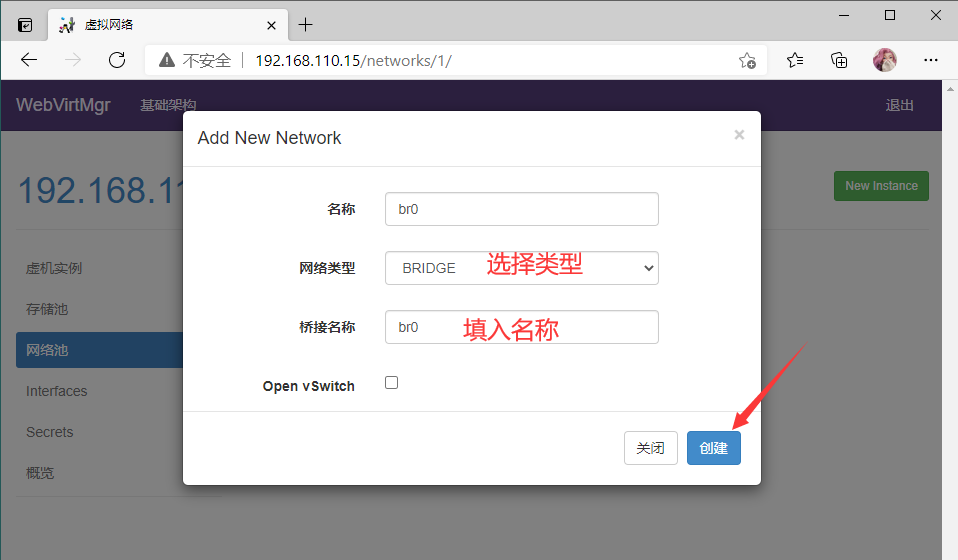

选择桥接模式

点击创建

创建成功

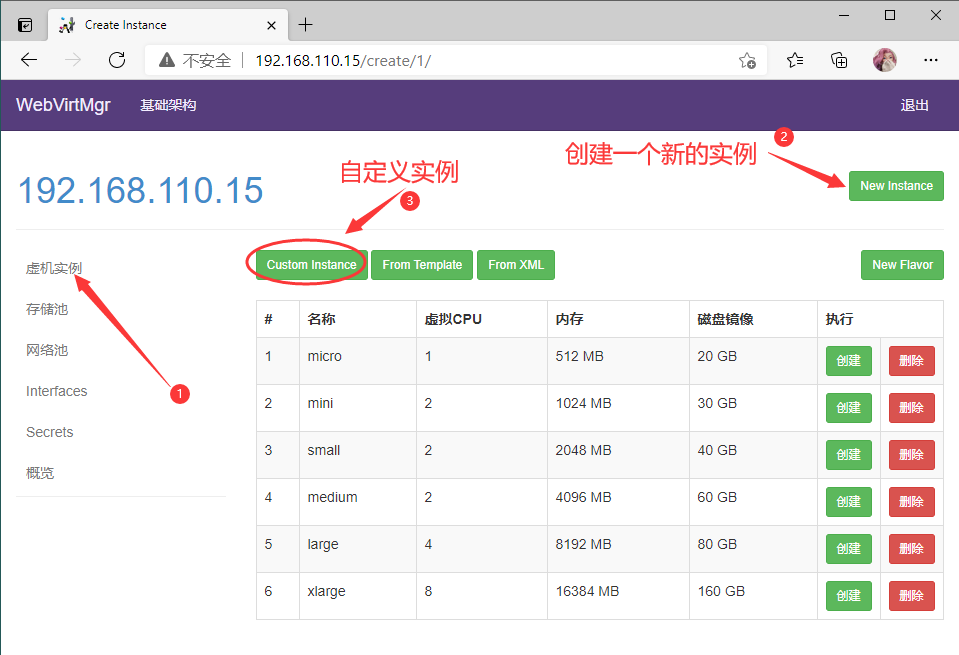

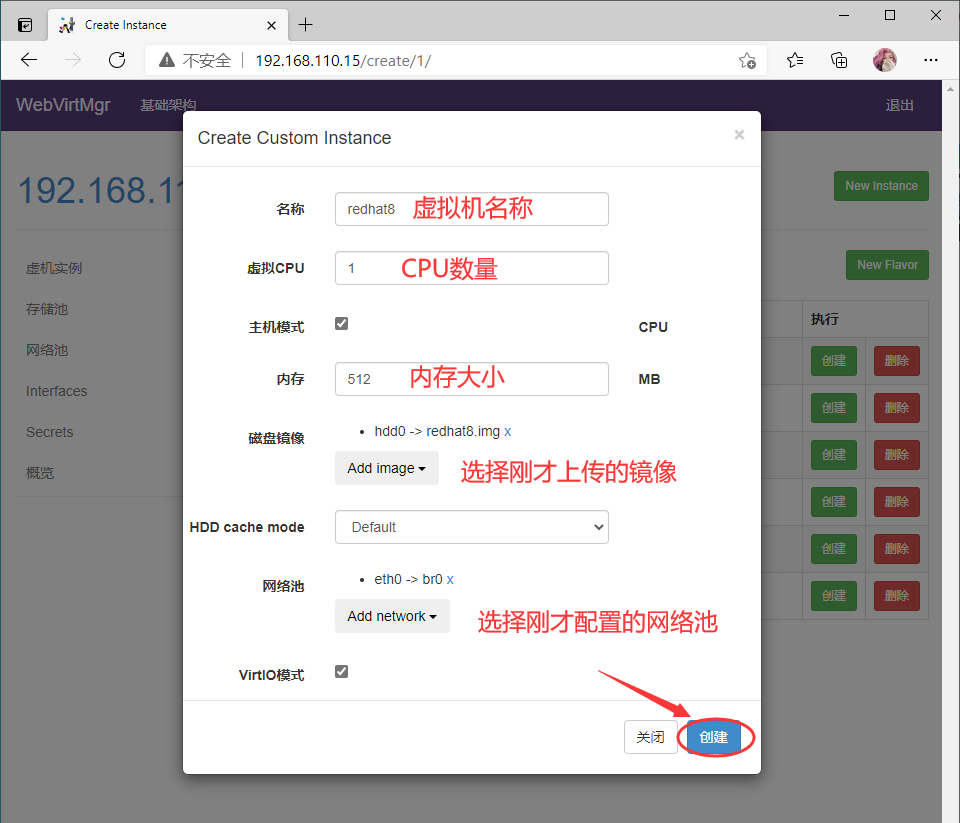

实例管理(创建一个虚拟机)

新建一个实例

配置页面

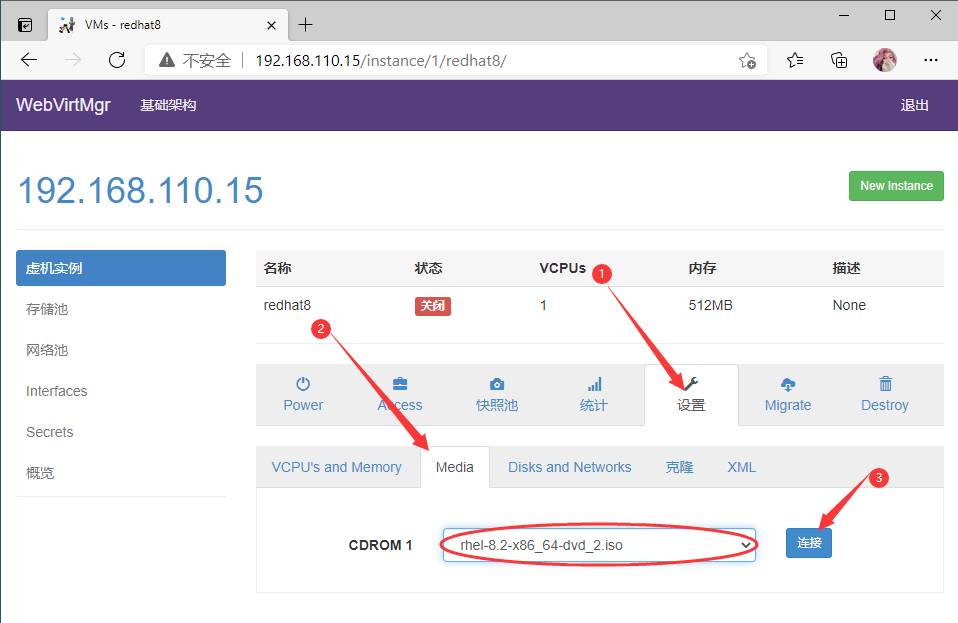

连接光盘到虚拟机

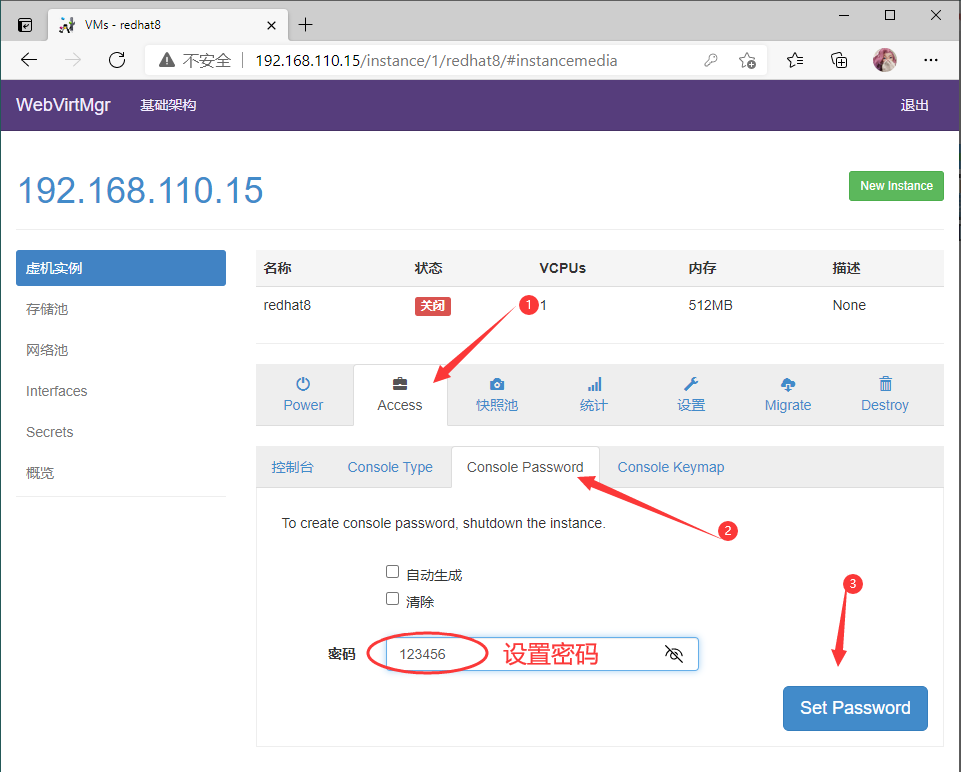

设置在 web 上访问虚拟机的密码

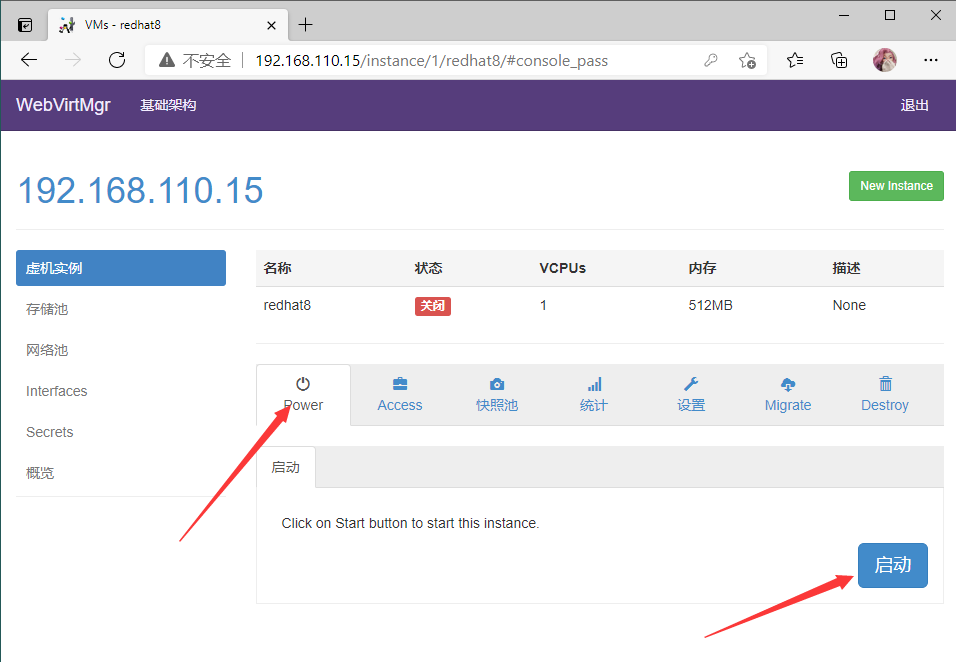

启动虚拟机

查看运行状态

界面如下

虚拟机安装步骤就是安装系统的步骤,此处就不再赘述
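For readers who prefer the command line, a comparable guest could be created with virt-install, which was installed earlier. This is a minimal sketch and not the method used in this article: `virt_install_cmd` is a hypothetical helper that only prints the command (a dry run), and the disk path under /kvmdata, the qcow2 format, and the VNC listen address are assumptions.

```shell
# Sketch: assemble a virt-install command line for a guest on the br0 bridge.
# virt_install_cmd is a hypothetical helper; it prints the command and runs nothing.
virt_install_cmd() {
    local name="$1" mem_mib="$2" vcpus="$3" disk_gib="$4" iso="$5"

    # Assumed layout: one qcow2 disk per guest under /kvmdata, VNC on all addresses.
    printf '%s\n' "virt-install --name $name --memory $mem_mib --vcpus $vcpus --disk path=/kvmdata/$name.qcow2,size=$disk_gib,format=qcow2 --cdrom $iso --network bridge=br0 --graphics vnc,listen=0.0.0.0 --noautoconsole"
}

# Example: print the command for a 2 GiB, 2-vCPU guest with a 20 GiB disk
# virt_install_cmd vm1 2048 2 20 /kvmdata/rhel-8.2-x86_64-dvd_2.iso
```

Printing the command first makes it easy to review the options before running it as root on the KVM host.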