7. Installing Python Spark

7.1 Installing Scala

Download: wget https://scala-lang.org/files/archive/scala-2.13.0.tgz

Extract: tar xvf scala-2.13.0.tgz

Move to /usr/local: sudo mv scala-2.13.0 /usr/local/scala

Set the Scala environment variables by editing ~/.bashrc and reloading it:

sudo gedit ~/.bashrc

source ~/.bashrc

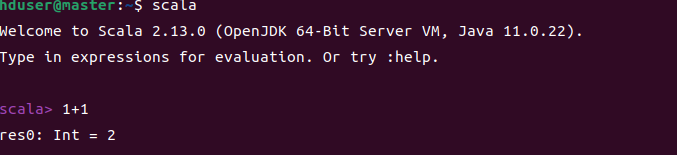
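The lines added to ~/.bashrc are not reproduced in the text. A minimal sketch, assuming Scala was moved to /usr/local/scala as in the mv command above (the variable name SCALA_HOME is a common convention, not something the original confirms):

```shell
# Assumed additions to ~/.bashrc; the path matches the mv target above.
export SCALA_HOME=/usr/local/scala
# Append the Scala launcher scripts to the search path.
export PATH="$PATH:$SCALA_HOME/bin"
```

After running source ~/.bashrc, scala -version should report the installed version if the path is correct.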

Start scala

Type :q to exit

7.2 Installing Spark

wget https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.4.2/spark-3.4.2-bin-hadoop3.tgz

Extract: tar zxf spark-3.4.2-bin-hadoop3.tgz

Move to /usr/local: sudo mv spark-3.4.2-bin-hadoop3 /usr/local/spark/

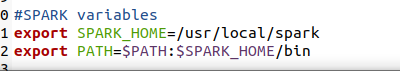

Edit ~/.bashrc: sudo gedit ~/.bashrc

source ~/.bashrc

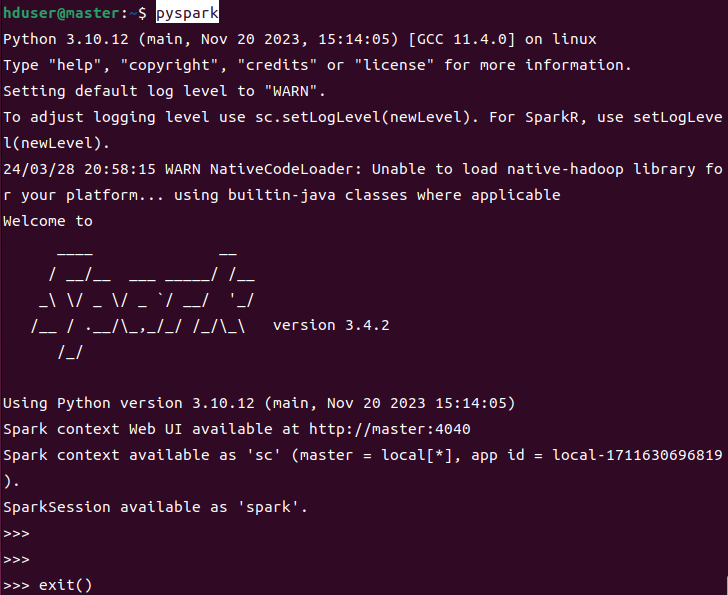
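The Spark entries for ~/.bashrc are likewise only shown as a screenshot. A minimal sketch, assuming Spark lives in /usr/local/spark as in the mv command above:

```shell
# Assumed additions to ~/.bashrc; the path matches the mv target above.
export SPARK_HOME=/usr/local/spark
# Make pyspark, spark-shell and spark-submit available by name.
export PATH="$PATH:$SPARK_HOME/bin"
```

Only bin is added here because the later steps call the sbin scripts by their full path.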

7.3 Starting the pyspark interactive shell

pyspark

7.4 Configuring pyspark log output

cd /usr/local/spark/conf

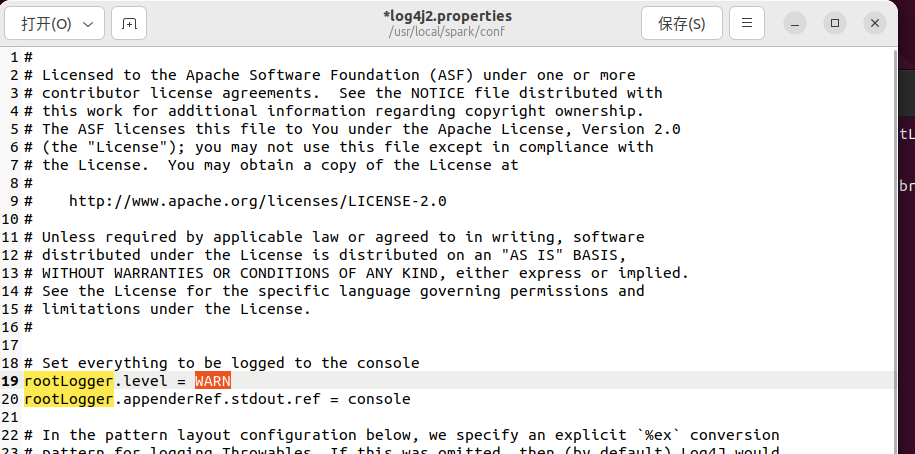

cp log4j2.properties.template log4j2.properties

sudo gedit log4j2.properties, then change the log level highlighted in orange to WARN
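The same change can be made without an editor. The sketch below assumes the setting to change is the rootLogger.level line, which is what the template shipped with Spark 3.4 uses. The function name set_root_log_level is a hypothetical helper, not part of Spark, and sed -i is used in its GNU form.

```shell
# Hypothetical helper (not part of Spark): set the root logger level in a
# log4j2 properties file. Assumes the file has a "rootLogger.level = ..." line.
set_root_log_level() {
    conf_file="$1"   # path to log4j2.properties
    level="$2"       # e.g. WARN
    # Rewrite only the rootLogger.level line; every other line is left as is.
    sed -i "s/^rootLogger\.level[[:space:]]*=.*/rootLogger.level = ${level}/" "$conf_file"
}
```

For the layout used in this tutorial the call would be set_root_log_level /usr/local/spark/conf/log4j2.properties WARN, run with sufficient permissions on that file.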

7.5 Creating the test file

Start the Hadoop Multi-Node Cluster, then run the following in order:

cp /usr/local/hadoop/LICENSE.txt ~/wordcount/input

ll ~/wordcount/input

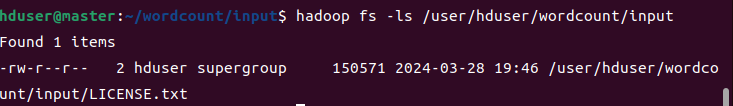

hadoop fs -mkdir -p /user/hduser/wordcount/input

cd ~/wordcount/input

hadoop fs -copyFromLocal LICENSE.txt /user/hduser/wordcount/input

hadoop fs -ls /user/hduser/wordcount/input

7.6 Running pyspark locally

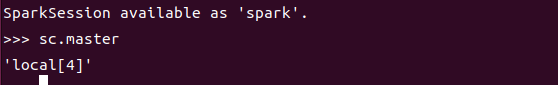

pyspark --master local[4]

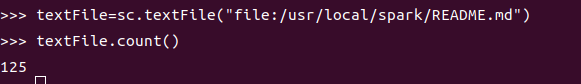

读取本地文件

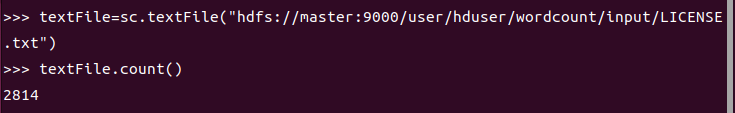

读取HDFS文件

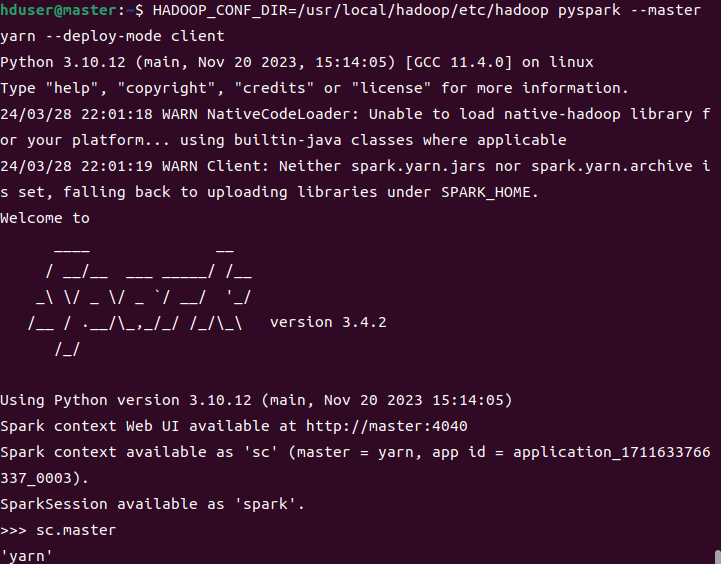

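7.7 Running pyspark on Hadoop YARN

Take Hadoop out of safe mode (for example with hdfs dfsadmin -safemode leave), then start pyspark on YARN: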

HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop pyspark --master yarn --deploy-mode client

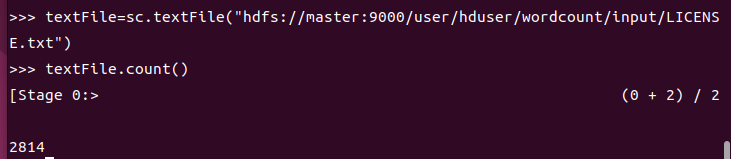

Read the HDFS file: textFile=sc.textFile("hdfs://master:9000/user/hduser/wordcount/input/LICENSE.txt")

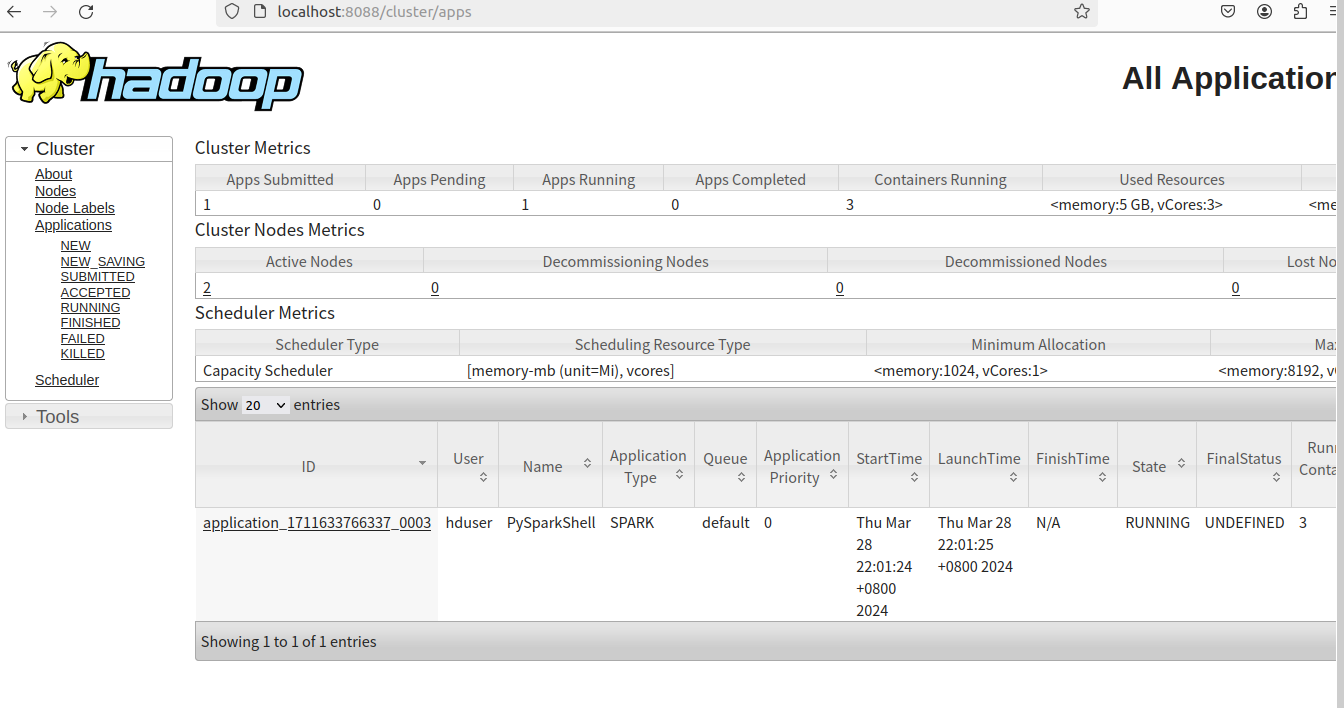

The PySparkShell application can then be seen in the Hadoop web UI.

7.8 Setting up a Spark Standalone Cluster

Copy the template file:

cp /usr/local/spark/conf/spark-env.sh.template /usr/local/spark/conf/spark-env.sh

Edit spark-env.sh: sudo gedit /usr/local/spark/conf/spark-env.sh
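The settings written into spark-env.sh are not listed in the text. As a sketch only: the variable names below appear in Spark's spark-env.sh.template, but every value is an assumption chosen to fit this tutorial (a master host named master, and the 512m executor memory requested later).

```shell
# Assumed contents for /usr/local/spark/conf/spark-env.sh; values are illustrative.
export SPARK_MASTER_HOST=master     # hostname the standalone master binds to
export SPARK_WORKER_CORES=1         # CPU cores each worker may use
export SPARK_WORKER_MEMORY=512m     # total memory a worker can give to executors
```

Adjust the core and memory values to what the worker machines actually have; an executor cannot be scheduled on a worker that offers less memory than the executor requests.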

Connect to data1: ssh data1

Create the spark directory: sudo mkdir /usr/local/spark

Change the owner to hduser: sudo chown hduser:hduser /usr/local/spark

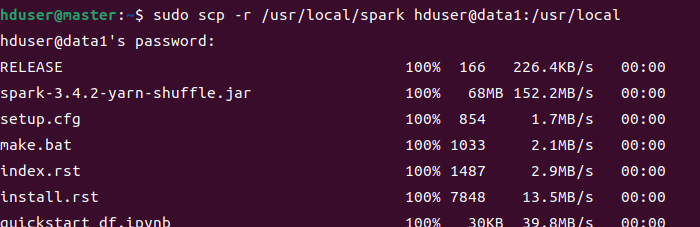

Back on master (exit the ssh session first), use scp to copy the Spark installation to data1: sudo scp -r /usr/local/spark hduser@data1:/usr/local

Repeat the same steps for data2.
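Since the same steps are repeated per worker, they can be wrapped in a loop. This is a sketch under assumptions: copy_spark_to_workers is a hypothetical helper, hduser is assumed to be able to ssh to each host and use sudo there, and scp is run without sudo on the assumption that hduser can read /usr/local/spark on master.

```shell
# Hypothetical helper: prepare each worker host and copy Spark to it.
# Run on master as hduser; pass the worker hostnames as arguments.
copy_spark_to_workers() {
    for host in "$@"; do
        # -t allocates a terminal so the remote sudo can ask for a password.
        ssh -t "$host" "sudo mkdir -p /usr/local/spark && sudo chown hduser:hduser /usr/local/spark"
        # Copy the whole installation into /usr/local on the worker.
        scp -r /usr/local/spark "hduser@${host}:/usr/local"
    done
}
```

For the two workers in this tutorial the call would be copy_spark_to_workers data1 data2.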

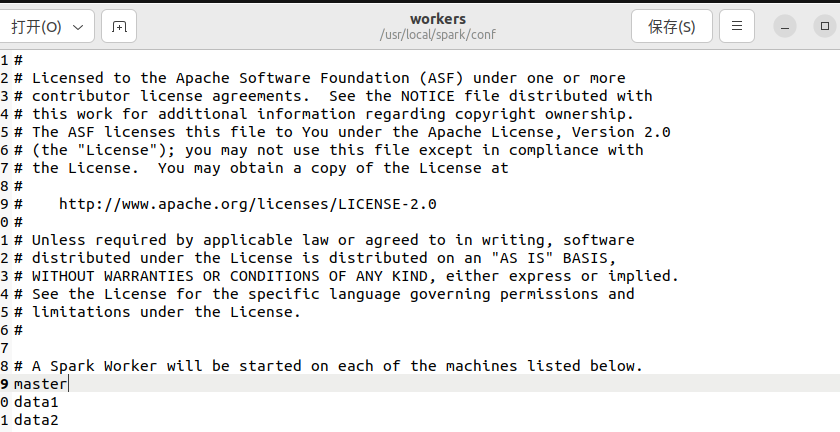

Edit the spark/conf/workers file

cp /usr/local/spark/conf/workers.template /usr/local/spark/conf/workers

sudo gedit /usr/local/spark/conf/workers

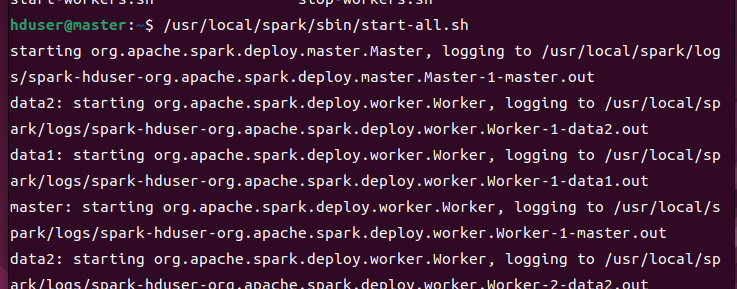
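The workers file lists one worker hostname per line. A minimal sketch, assuming the workers are the data1 and data2 hosts used above; write_workers_file is a hypothetical helper, and it overwrites the target file, including the comments copied from the template.

```shell
# Hypothetical helper: write one worker hostname per line to the given file.
write_workers_file() {
    target="$1"   # e.g. /usr/local/spark/conf/workers
    shift         # remaining arguments are the worker hostnames
    printf '%s\n' "$@" > "$target"
}
```

For this tutorial the call would be write_workers_file /usr/local/spark/conf/workers data1 data2.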

7.9 Running pyspark on Spark Standalone

/usr/local/spark/sbin/start-all.sh

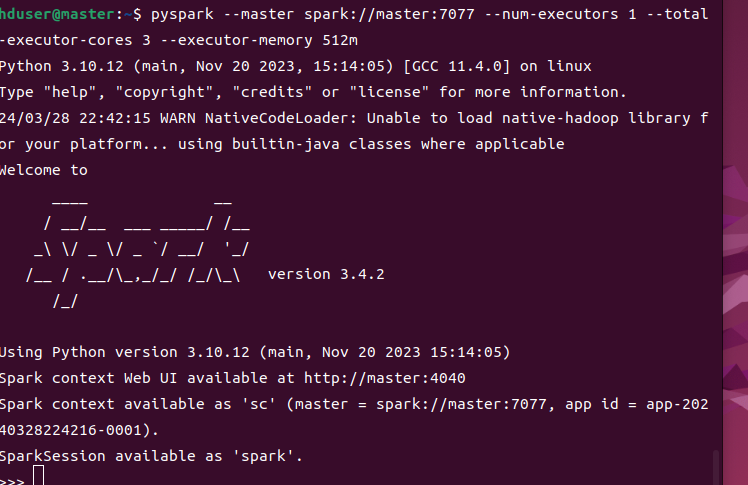

Start pyspark against the Standalone cluster:

pyspark --master spark://master:7077 --num-executors 1 --total-executor-cores 3 --executor-memory 512m

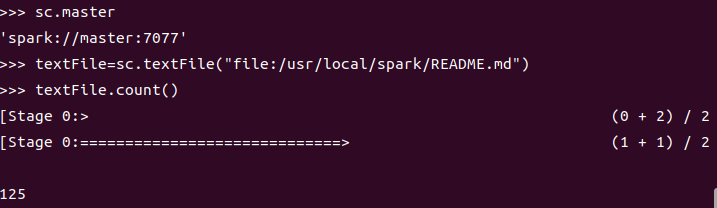

Read a file

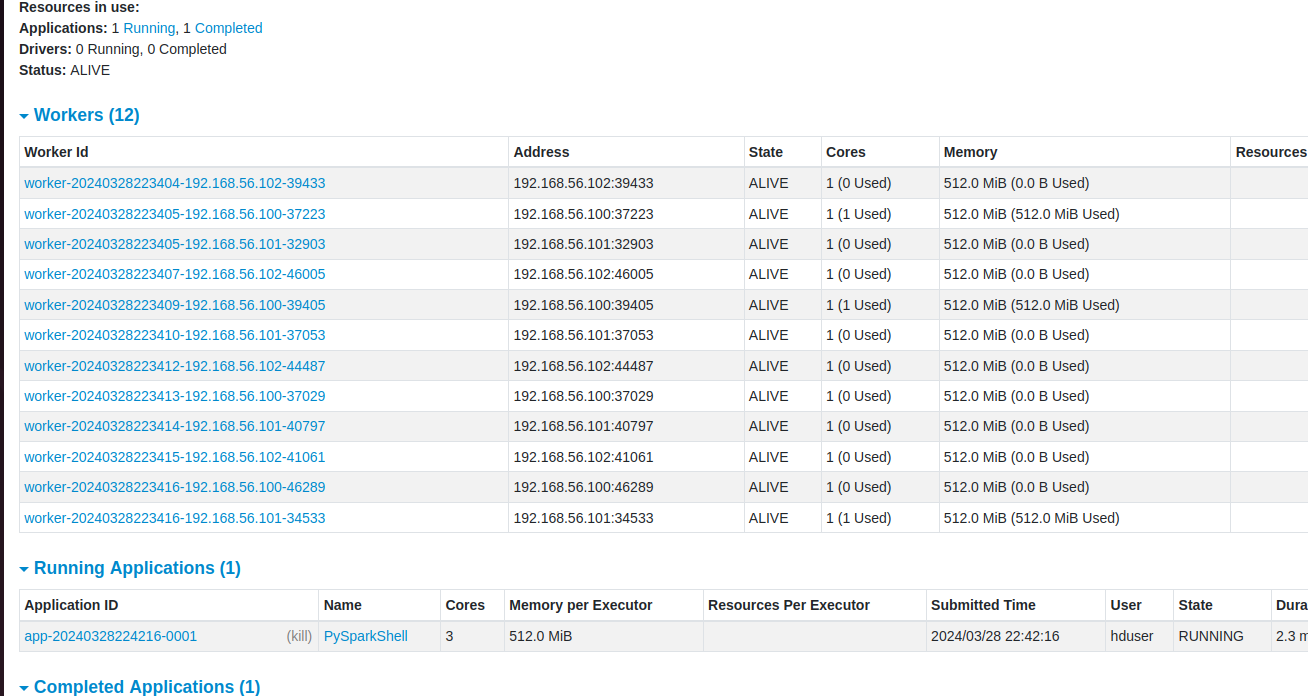

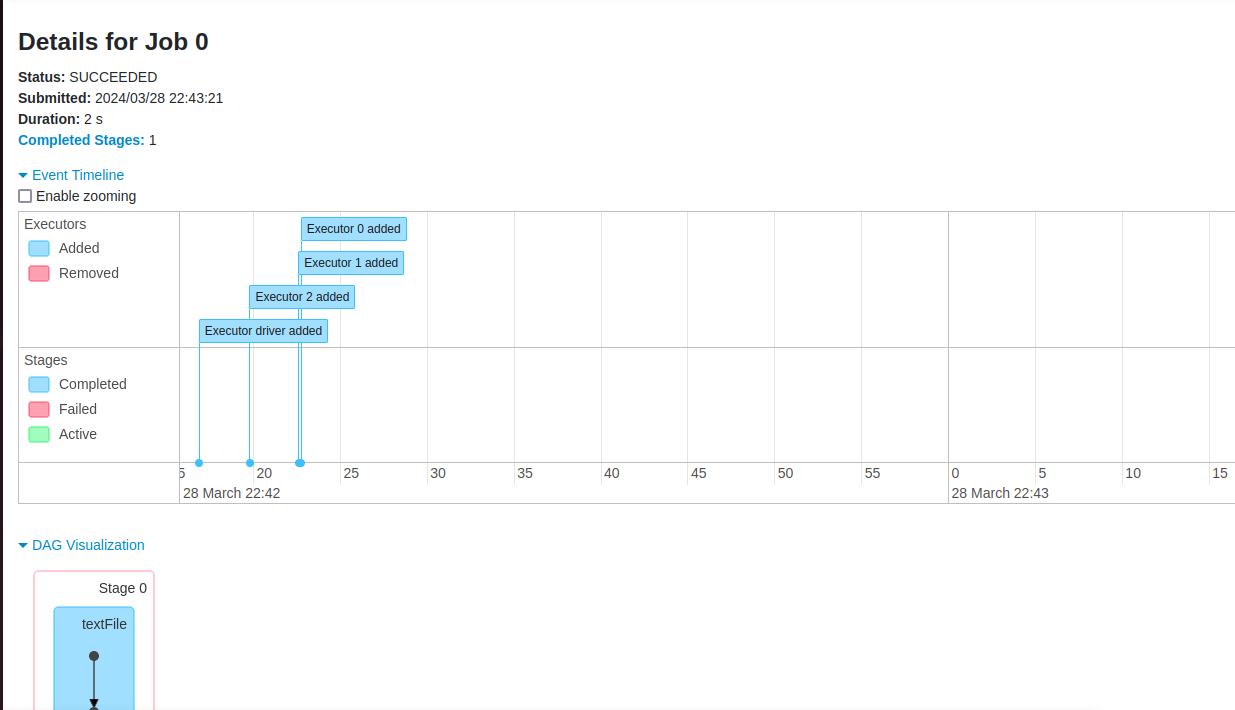

7.10 Spark Web UI

http://master:8080/

This page shows detailed information about the workers and jobs.
