sparksql连接mysql

1、方法1:分别将两张表中的数据加载为DataFrame

/*

* 方法1:分别将两张表中的数据加载为DataFrame

* */

/*

Map<String,String> options = new HashMap<String,String>();

options.put("url","jdbc:mysql://localhost:3306/tset");

options.put("driver","com.mysql.jdbc.Driver");

options.put("user","root");

options.put("password","admin");

options.put("dbtable","information");

Dataset myinfromation = sqlContext.read().format("jdbc").options(options).load();

//如果需要多张表,则需要再put一遍

options.put("dbtable","score");

Dataset scores = sqlContext.read().format("jdbc").options(options).load();*/

2、方法2:分别将mysql中两张表的数据加载为DataFrame

//方法2:分别将mysql中两张表的数据加载为DataFrame

DataFrameReader reader = sqlContext.read().format("jdbc");

reader.option("url","jdbc:mysql://127.0.0.1:3306/test?serverTimezone=GMT");

reader.option("driver","com.mysql.cj.jdbc.Driver");

reader.option("user","root");

reader.option("password","admin");

reader.option("dbtable","information");

Dataset myinformation = reader.load();

reader.option("dbtable","score");

Dataset scores = reader.load();

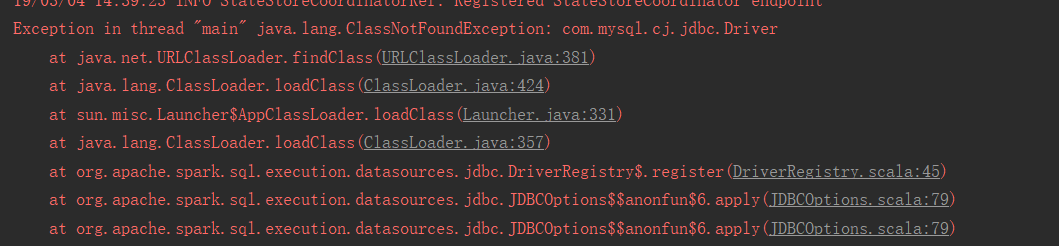

3、问题:

在程序运行过程报错

(1)

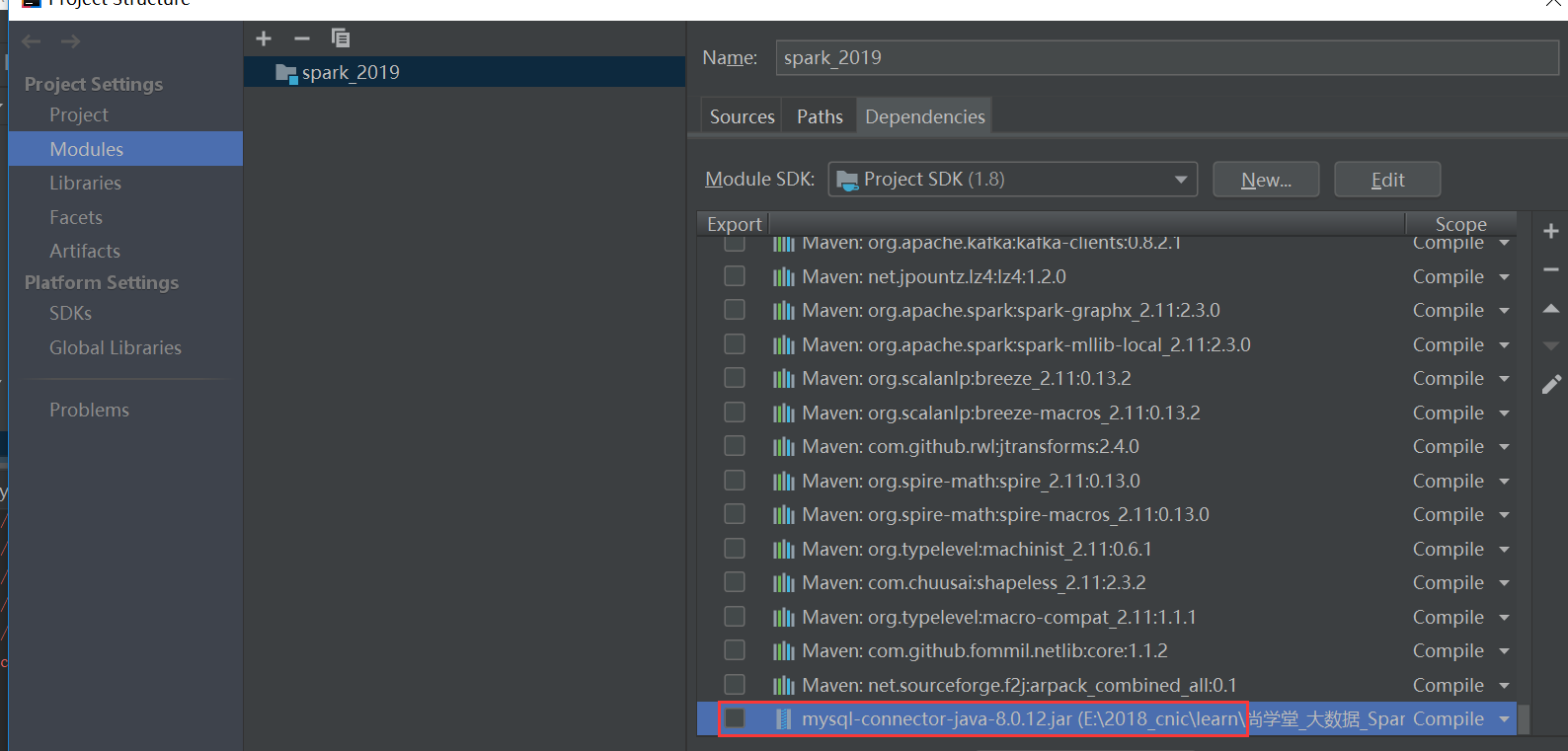

解决:

在idea中加入jar包

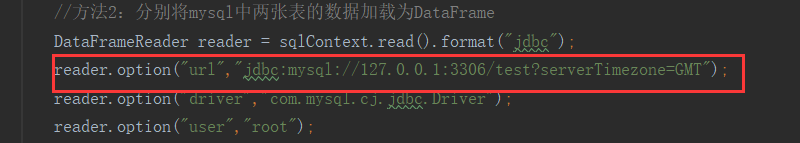

(2)运行报错

The server time zone value 'Öйú±ê׼ʱ¼ä' is unrecognized or represents .....

解决:添加信息。

dbc:mysql://127.0.0.1:3306/test?serverTimezone=GMT

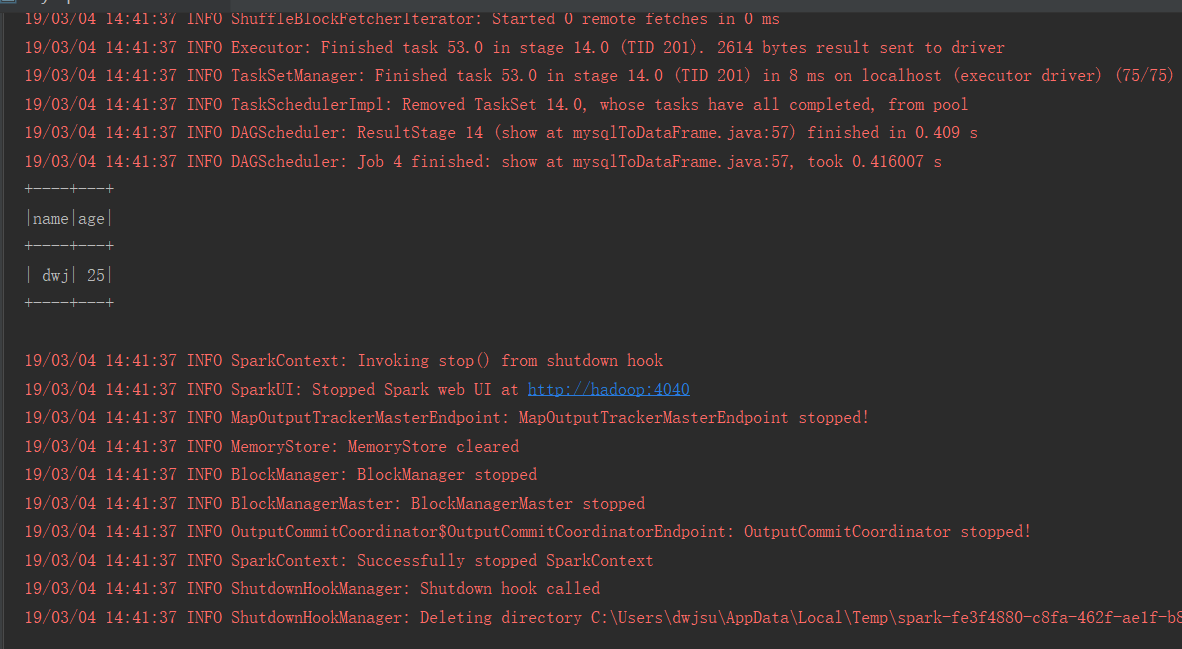

4、成功运行

附:程序源码:

package sparkSQl; import org.apache.spark.SparkConf; import org.apache.spark.api.java.JavaSparkContext; import org.apache.spark.sql.DataFrameReader; import org.apache.spark.sql.Dataset; import org.apache.spark.sql.SQLContext; import java.util.HashMap; import java.util.Map; public class mysqlToDataFrame { public static void main(String[] args) { //首先新建一个sparkconf定义参数 SparkConf conf = new SparkConf().setMaster("local").setAppName("JDBCDataSource"); //创建sparkContext,是通往spark集群的唯一通道 JavaSparkContext sc = new JavaSparkContext(conf); //新建一个sparksql SQLContext sqlContext = new SQLContext(sc); //sparksql连接mysql /* * 方法1:分别将两张表中的数据加载为DataFrame * */ /*Map<String,String> options = new HashMap<String,String>(); options.put("url","jdbc:mysql://localhost:3306/tset"); options.put("driver","com.mysql.jdbc.Driver"); options.put("user","root"); options.put("password","admin"); options.put("dbtable","information"); Dataset myinfromation = sqlContext.read().format("jdbc").options(options).load(); //如果需要多张表,则需要再put一遍 options.put("dbtable","score"); Dataset scores = sqlContext.read().format("jdbc").options(options).load();*/ //方法2:分别将mysql中两张表的数据加载为DataFrame DataFrameReader reader = sqlContext.read().format("jdbc"); reader.option("url","jdbc:mysql://127.0.0.1:3306/test?serverTimezone=GMT"); reader.option("driver","com.mysql.cj.jdbc.Driver"); reader.option("user","root"); reader.option("password","admin"); reader.option("dbtable","information"); Dataset myinformation = reader.load(); reader.option("dbtable","score"); Dataset scores = reader.load(); //将两个DataFrame转换为javapairrdd,执行join操作 myinformation.registerTempTable("info"); scores.registerTempTable("score"); //定义sql语句 String sql = "select info.name,age" +" from info join score" +" on(info.name=score.name)" +" where score.score>90"; Dataset sql2 = sqlContext.sql(sql); sql2.show(); } }

浙公网安备 33010602011771号

浙公网安备 33010602011771号