Big Data: Installing Hive

1. Download the required version of Hive

We use hive-3.1.0.

Place the downloaded Hive archive in /opt/workspace/.

2. Extract the apache-hive-3.1.0-bin.tar.gz archive

[root@master1 workspace]# tar -zxvf apache-hive-3.1.0-bin.tar.gz

3. Rename the extracted directory

[root@master1 workspace]# mv apache-hive-3.1.0-bin hive-3.1.0
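
Steps 2 and 3 can be combined into one small helper. The following is a minimal sketch, assuming bash and a tar that understands -z; the function name unpack_hive and its three arguments are hypothetical and are not part of Hive.

```shell
#!/usr/bin/env bash
# unpack_hive ARCHIVE WORKDIR TARGET_NAME   (hypothetical helper)
# Extracts ARCHIVE into WORKDIR and renames its top-level directory
# (for example apache-hive-3.1.0-bin) to TARGET_NAME (for example hive-3.1.0).
unpack_hive() {
  local archive="$1" workdir="$2" target="$3"
  local top

  if [ ! -f "$archive" ]; then
    echo "archive not found: $archive" >&2
    return 1
  fi
  if [ -e "$workdir/$target" ]; then
    echo "already exists: $workdir/$target" >&2
    return 1
  fi

  # The first path component of the first archive entry is the top-level directory.
  top=$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1) || return 1
  if [ -z "$top" ]; then
    echo "could not determine top-level directory of $archive" >&2
    return 1
  fi

  tar -xzf "$archive" -C "$workdir" || return 1
  mv "$workdir/$top" "$workdir/$target"
}
```

With the paths used in this post, the call would be: unpack_hive /opt/workspace/apache-hive-3.1.0-bin.tar.gz /opt/workspace hive-3.1.0. The helper refuses to overwrite an existing target directory, so running it twice is harmless.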

4. Because our Hive runs as Hive on Spark, the required jars must be added to Hive's lib directory

Since Hive 2.2.0, Hive on Spark runs with Spark 2.0.0 and above, which doesn’t have an assembly jar.

To run with YARN mode (either yarn-client or yarn-cluster), link the following jars to HIVE_HOME/lib:

scala-library
spark-core
spark-network-common

The MySQL JDBC driver jar also goes into this directory:

mysql-connector-java-8.0.12.jar
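
The linking step quoted above can be sketched as a shell function. This is an illustration only: the function name link_spark_jars is hypothetical, and it assumes the jars live under $SPARK_HOME/jars, which is where Spark 2.x binary distributions keep them. Pass absolute paths so the symlinks resolve from any working directory.

```shell
#!/usr/bin/env bash
# link_spark_jars SPARK_HOME HIVE_HOME   (hypothetical helper)
# Symlinks scala-library, spark-core and spark-network-common from
# $SPARK_HOME/jars (assumed location) into $HIVE_HOME/lib.
link_spark_jars() {
  local spark_home="$1" hive_home="$2"
  local prefix jar found

  for prefix in scala-library spark-core spark-network-common; do
    found=0
    for jar in "$spark_home"/jars/"$prefix"*.jar; do
      [ -e "$jar" ] || continue          # the glob matched nothing
      ln -sf "$jar" "$hive_home/lib/" || return 1
      found=1
    done
    if [ "$found" -eq 0 ]; then
      echo "no $prefix*.jar found under $spark_home/jars" >&2
      return 1
    fi
  done
}
```

A call such as link_spark_jars "$SPARK_HOME" /opt/workspace/hive-3.1.0 fails with a message naming the missing jar instead of leaving Hive half configured.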

5. Edit the configuration files: hive-site.xml, hive-env.sh, and /etc/profile

hive-env.sh configuration file

# Hive Client memory usage can be an issue if a large number of clients
# are running at the same time. The flags below have been useful in
# reducing memory usage:
#
# if [ "$SERVICE" = "cli" ]; then
#   if [ -z "$DEBUG" ]; then
#     export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:+UseParNewGC -XX:-UseGCOverheadLimit"
#   else
#     export HADOOP_OPTS="$HADOOP_OPTS -XX:NewRatio=12 -Xms10m -XX:MaxHeapFreeRatio=40 -XX:MinHeapFreeRatio=15 -XX:-UseGCOverheadLimit"
#   fi
# fi

# The heap size of the jvm started by hive shell script can be controlled via:
#
# export HADOOP_HEAPSIZE=1024
export HADOOP_HEAPSIZE=4096
#
# Larger heap size may be required when running queries over large number of files or partitions.
# By default hive shell scripts use a heap size of 256 (MB). Larger heap size would also be
# appropriate for hive server.

# Set HADOOP_HOME to point to a specific hadoop install directory
# HADOOP_HOME=${bin}/../../hadoop
export HADOOP_HOME=/opt/workspace/hadoop-2.9.1

# Hive Configuration Directory can be controlled by:
# export HIVE_CONF_DIR=
export HIVE_CONF_DIR=/opt/workspace/hive-3.1.0/conf

# Folder containing extra libraries required for hive compilation/execution can be controlled by:
# export HIVE_AUX_JARS_PATH=
export HIVE_AUX_JARS_PATH=/opt/workspace/hive-3.1.0/lib

The hive-site.xml configuration file is as follows

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

--><configuration>

<!-- WARNING!!! This file is auto generated for documentation purposes ONLY! -->

<!-- WARNING!!! Any changes you make to this file will be ignored by Hive. -->

<!-- WARNING!!! You must make your changes in hive-site.xml instead. -->

<!-- Hive Execution Parameters -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://159.226.48.202:3306/hivedata?createDatabaseIfNotExist=true&amp;useUnicode=true&amp;characterEncoding=UTF-8&amp;useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name> <!-- user name -->

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name> <!-- password -->

<value>MyPass@123</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.local</name>

<value>true</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/hive/warehouse</value>

</property>

<!--

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

<property>

<name>spark.home</name>

<value>/opt/workspace/spark-2.3.0-bin-hadoop2-without-hive</value>

</property>

<property>

<name>spark.master</name>

<value>spark://master1:7077,master2:7077</value> // or yarn-cluster/yarn-client

</property>

<property>

<name>spark.submit.deployMode</name>

<value>client</value>

</property>

<property>

<name>spark.eventLog.enabled</name>

<value>true</value>

</property>

<property>

<name>spark.eventLog.dir</name>

<value>hdfs:///user/spark/spark-log</value>

</property>

<property>

<name>spark.serializer</name>

<value>org.apache.spark.serializer.KryoSerializer</value>

</property>

<property>

<name>spark.executor.memory</name>

<value>512m</value>

</property>

<property>

<name>spark.driver.memory</name>

<value>512m</value>

</property>

<property>

<name>spark.executor.extraJavaOptions</name>

<value>-XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"</value>

</property>-->

<!--spark engine -->

<property>

<name>hive.execution.engine</name>

<value>spark</value>

</property>

<property>

<name>hive.enable.spark.execution.engine</name>

<value>true</value>

</property>

<!--sparkcontext -->

<property>

<name>spark.master</name>

<value>yarn-cluster</value>

</property>

<property>

<name>spark.serializer</name>

<value>org.apache.spark.serializer.KryoSerializer</value>

</property>

<!-- adjust the settings below to match your environment -->

<property>

<name>spark.executor.instances</name>

<value>3</value>

</property>

<property>

<name>spark.executor.cores</name>

<value>4</value>

</property>

<property>

<name>spark.executor.memory</name>

<value>1024m</value>

</property>

<property>

<name>spark.driver.cores</name>

<value>2</value>

</property>

<property>

<name>spark.driver.memory</name>

<value>1024m</value>

</property>

<property>

<name>spark.yarn.queue</name>

<value>default</value>

</property>

<property>

<name>spark.app.name</name>

<value>myInceptor</value>

</property>

<!-- transaction-related -->

<property>

<name>hive.support.concurrency</name>

<value>true</value>

</property>

<property>

<name>hive.enforce.bucketing</name>

<value>true</value>

</property>

<property>

<name>hive.exec.dynamic.partition.mode</name>

<value>nonstrict</value>

</property>

<property>

<name>hive.txn.manager</name>

<value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>

</property>

<property>

<name>hive.compactor.initiator.on</name>

<value>true</value>

</property>

<property>

<name>hive.compactor.worker.threads</name>

<value>1</value>

</property>

<property>

<name>spark.executor.extraJavaOptions</name>

<value>-XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"</value>

</property>

<!-- other -->

<property>

<name>hive.server2.enable.doAs</name>

<value>false</value>

</property>

<property>

<name>hive.server2.thrift.max.worker.threads</name>

<value>1000</value>

</property>

<property>

<name>hive.spark.job.monitor.timeout</name>

<value>3m</value>

<description>

Expects a time value with unit (d/day, h/hour, m/min, s/sec, ms/msec, us/usec, ns/nsec), which is sec if not specified.

Timeout for job monitor to get Spark job state.

</description>

</property>

</configuration>

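Note: when configuring a different server, pay attention to the server-specific values (highlighted in red in the original post). They must be changed for each server to the corresponding IP address and metastore database.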
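
Because the IP address and metastore database change per server, it can help to generate the javax.jdo.option.ConnectionURL value instead of editing it by hand. Inside hive-site.xml every & in the URL has to be written as &amp;amp;, otherwise the file is not well-formed XML. The sketch below shows one way to do that; the function name metastore_jdbc_url is hypothetical, and the query parameters are simply the ones used in this post.

```shell
#!/usr/bin/env bash
# metastore_jdbc_url HOST DATABASE [PORT]   (hypothetical helper)
# Prints a MySQL JDBC URL suitable for pasting into the <value> element of
# javax.jdo.option.ConnectionURL, with each "&" escaped as "&amp;".
metastore_jdbc_url() {
  local host="$1" db="$2" port="${3:-3306}"
  local params='createDatabaseIfNotExist=true&useUnicode=true&characterEncoding=UTF-8&useSSL=false'

  # In a sed replacement a bare & means "the matched text", so it is backslash-escaped.
  params=$(printf '%s' "$params" | sed 's/&/\&amp;/g')
  printf 'jdbc:mysql://%s:%s/%s?%s\n' "$host" "$port" "$db" "$params"
}
```

For the server in this post the call is metastore_jdbc_url 159.226.48.202 hivedata; for another server only the host and database arguments change.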

/etc/profile

# Hive Config
export HIVE_HOME=/opt/workspace/hive-3.1.0
export HIVE_CONF_DIR=${HIVE_HOME}/conf
export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*

export PATH=.:${JAVA_HOME}/bin:${SCALA_HOME}/bin:${MAVEN_HOME}/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:${HIVE_HOME}/bin:${SPARK_HOME}/bin:${HBASE_HOME}/bin:$SQOOP_HOME/bin:${ZK_HOME}/bin:$PATH

source /etc/profile
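
After sourcing the profile, a quick sanity check confirms that the variables took effect in the current shell. This is a minimal sketch; check_hive_env is a hypothetical name, and it only inspects HIVE_HOME and PATH, nothing Hive-specific.

```shell
#!/usr/bin/env bash
# check_hive_env   (hypothetical helper)
# Verifies that HIVE_HOME is set, that $HIVE_HOME/bin exists,
# and that $HIVE_HOME/bin is one of the entries in PATH.
check_hive_env() {
  if [ -z "${HIVE_HOME:-}" ]; then
    echo "HIVE_HOME is not set" >&2
    return 1
  fi
  if [ ! -d "$HIVE_HOME/bin" ]; then
    echo "directory not found: $HIVE_HOME/bin" >&2
    return 1
  fi
  case ":$PATH:" in
    *":$HIVE_HOME/bin:"*) ;;
    *)
      echo "$HIVE_HOME/bin is not on PATH" >&2
      return 1
      ;;
  esac
  echo "Hive environment looks OK: $HIVE_HOME"
}
```

Running check_hive_env right after source /etc/profile catches the common case where the profile was edited but the current shell still carries the old PATH.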

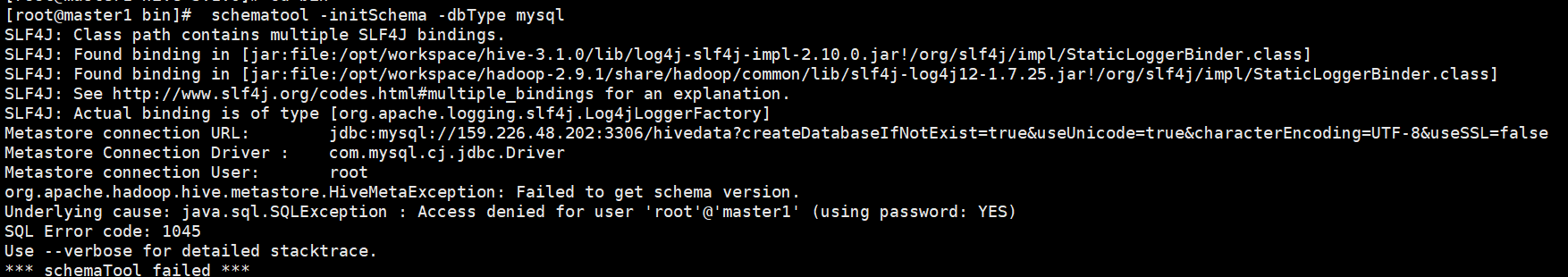

6. Initialize Hive

[root@master1 hive-3.1.0]# cd bin

[root@master1 bin]# schematool -initSchema -dbType mysql

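Problem: MySQL access has to be granted first

Note: when adding the MySQL grants, keep in mind that the local MySQL login was previously set up with the password 123456,

whereas hive-site.xml connects remotely to the metastore database hivedata with the password MyPass@123. Use that password in the GRANT statements, then flush privileges.

After this, the database login password is MyPass@123.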

[root@slave1 bin]# mysql -u root -p
Enter password:
Welcome to the MySQL monitor. Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.6.43 MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective
owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> grant all privileges on *.* to root@"%" identified by 'MyPass@123';
Query OK, 0 rows affected (0.00 sec)

mysql> grant all privileges on *.* to root@"localhost" identified by 'MyPass@123';
Query OK, 0 rows affected (0.01 sec)

mysql> grant all privileges on *.* to root@"slave1" identified by 'MyPass@123';
Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;
Query OK, 0 rows affected (0.00 sec)
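
The three GRANT statements in the session above differ only in the host part, so they can be generated from a host list. The sketch below is illustrative: print_metastore_grants is a hypothetical name, and the statements use the GRANT ... IDENTIFIED BY form that the MySQL 5.6 server in this session accepts. MySQL 8.0 removed that form, so on an 8.0 server the user would have to be created with CREATE USER before granting.

```shell
#!/usr/bin/env bash
# print_metastore_grants PASSWORD HOST...   (hypothetical helper)
# Prints one GRANT statement per host for the root account, followed by
# FLUSH PRIVILEGES, using MySQL 5.6-style "IDENTIFIED BY" syntax.
print_metastore_grants() {
  local password="$1" host
  shift

  # Keep the generated SQL safe to quote: reject passwords containing a single quote.
  case "$password" in
    *"'"*)
      echo "password must not contain a single quote" >&2
      return 1
      ;;
  esac

  for host in "$@"; do
    printf "GRANT ALL PRIVILEGES ON *.* TO 'root'@'%s' IDENTIFIED BY '%s';\n" "$host" "$password"
  done
  printf 'FLUSH PRIVILEGES;\n'
}
```

To reproduce the session, the output can be piped into the client: print_metastore_grants 'MyPass@123' % localhost slave1 | mysql -u root -p. Host names are inserted as given, so they should come from a trusted list.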

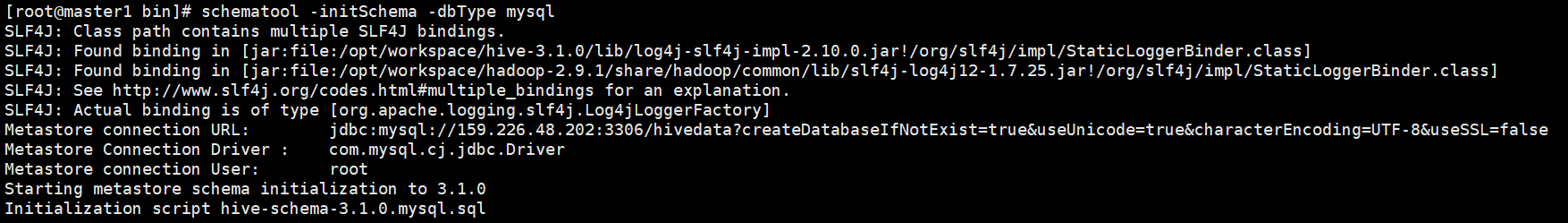

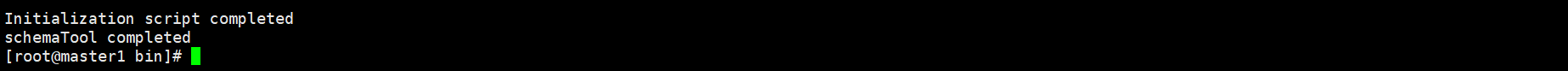

Re-running the initialization now succeeds. The status is as follows:

[root@master1 hive-3.1.0]# cd bin
[root@master1 bin]# schematool -initSchema -dbType mysql
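
Once the schema has been initialized, schematool can also report what it finds in the metastore database through its -info option. The wrapper below is a sketch with a hypothetical name, verify_metastore_schema; it only adds a check that schematool is reachable on PATH before calling it.

```shell
#!/usr/bin/env bash
# verify_metastore_schema [DBTYPE]   (hypothetical helper)
# Runs "schematool -info" for the given database type (default: mysql)
# after confirming that schematool can be found on PATH.
verify_metastore_schema() {
  local db_type="${1:-mysql}"

  if ! command -v schematool >/dev/null 2>&1; then
    echo "schematool not found on PATH; check that \$HIVE_HOME/bin is exported" >&2
    return 127
  fi
  schematool -info -dbType "$db_type"
}
```

Calling verify_metastore_schema mysql from any directory avoids the cd bin step, provided the PATH from /etc/profile is in effect.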

Reference: https://blog.csdn.net/sinat_25943197/article/details/81906060
