The error is as follows:

300 [main] DEBUG org.apache.hadoop.util.Shell - Failed to detect a valid hadoop home directory

java.io.IOException: HADOOP_HOME or hadoop.home.dir are not set

Solution 1:

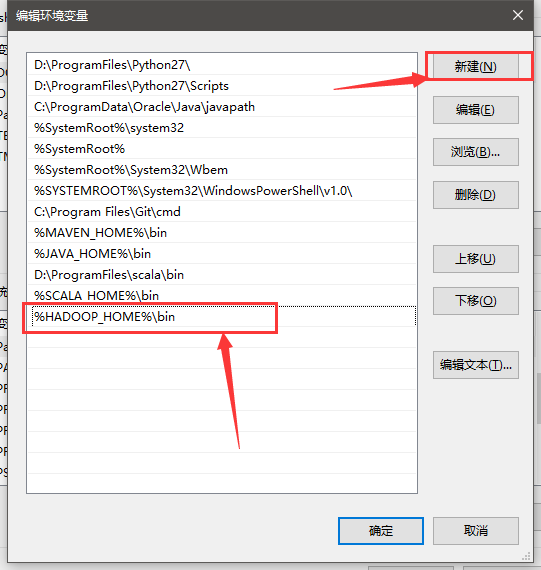

Following http://blog.csdn.net/baidu_19473529/article/details/54693523, set the HADOOP_HOME environment variable.

Download winutils from https://github.com/srccodes/hadoop-common-2.2.0-bin and extract it.

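Reopen Eclipse and run the program again. This resolves the problem connecting to Hadoop on the internal network (tested personally; it works).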
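The error above is logged by org.apache.hadoop.util.Shell, which in Hadoop 2.x (as far as I can tell) checks the hadoop.home.dir system property first and falls back to the HADOOP_HOME environment variable; on Windows it then expects bin\winutils.exe under that directory. The sketch below is a hypothetical diagnostic class, HadoopHomeCheck (not part of Hadoop), that approximates that lookup so you can confirm the setting is actually visible to the JVM Eclipse starts.

```java
import java.io.File;

/**
 * Hypothetical diagnostic class (not part of Hadoop). It approximates the
 * lookup assumed for Hadoop 2.x Shell: the hadoop.home.dir system property
 * first, then the HADOOP_HOME environment variable.
 */
public class HadoopHomeCheck {

    /** Returns the Hadoop home to use, or null if neither source is set. */
    static String resolveHadoopHome(String sysProp, String envVar) {
        // The JVM-wide system property takes precedence over the environment.
        return sysProp != null ? sysProp : envVar;
    }

    /** True if home/bin/winutils.exe exists (needed on Windows clients). */
    static boolean hasWinutils(String home) {
        return home != null
                && new File(new File(home, "bin"), "winutils.exe").isFile();
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome(
                System.getProperty("hadoop.home.dir"),
                System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
        } else {
            System.out.println("Hadoop home: " + home);
            System.out.println("bin/winutils.exe present: " + hasWinutils(home));
        }
    }
}
```

A process only inherits the environment variables that existed when it was launched, which is why Eclipse has to be restarted after HADOOP_HOME is set.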

Solution 2:

Add the following line to the Java program:

System.setProperty("hadoop.home.dir", "/usr/local/hadoop-2.6.0");

The error reportedly still occurs (I have not tested this myself).
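One plausible reason this fails (an assumption, not verified here): Shell appears to read hadoop.home.dir once, while the class is being initialized, so the property has to be set before any Hadoop class is first used; and on a Windows client it presumably has to point at a local folder containing bin\winutils.exe, not at the server path /usr/local/hadoop-2.6.0. The sketch sets the property in a static initializer of the main class. The class name HdfsClientMain and the path C:\hadoop-common-2.2.0-bin are hypothetical.

```java
/**
 * Sketch: set hadoop.home.dir before any Hadoop class is initialized.
 * Assumption: C:\hadoop-common-2.2.0-bin is a hypothetical folder where the
 * winutils download was extracted; it must contain bin\winutils.exe.
 */
public class HdfsClientMain {

    // Hypothetical path; adjust to wherever winutils was extracted.
    static final String WINUTILS_HOME = "C:\\hadoop-common-2.2.0-bin";

    static {
        // Runs when HdfsClientMain is initialized, i.e. before main() and
        // therefore before any Hadoop class used in main() is initialized.
        // A value passed with -Dhadoop.home.dir=... is left untouched.
        if (System.getProperty("hadoop.home.dir") == null) {
            System.setProperty("hadoop.home.dir", WINUTILS_HOME);
        }
    }

    public static void main(String[] args) {
        System.out.println("hadoop.home.dir = " + System.getProperty("hadoop.home.dir"));
        // ... create the Configuration / FileSystem and upload to HDFS here ...
    }
}
```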

Solution 3 (possibly not the same problem?):

Following this hint:

The error persists.

--------------------------------5.19, morning------------------------------------------

This morning I ran the program again. After commenting out the code that uploads to HDFS, the log showed the following errors:

132 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Trying to load the custom-built native-hadoop library...

134 [main] DEBUG org.apache.hadoop.util.NativeCodeLoader - Failed to load native-hadoop with error: java.lang.UnsatisfiedLinkError: no hadoop in java.library.path
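This message means that System.loadLibrary("hadoop") found no file named System.mapLibraryName("hadoop") (libhadoop.so on Linux) in any java.library.path entry; a versioned file such as libhadoop.so.1.0.0 on its own does not match that name. The hypothetical helper NativeLibSearch (not part of Hadoop) prints the JVM's java.library.path and lists which entries contain the library.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical diagnostic helper: lists the java.library.path entries that
 * contain the platform file name System.loadLibrary("hadoop") looks for.
 */
public class NativeLibSearch {

    /** Returns every entry of libraryPath whose directory holds fileName. */
    static List<String> findLibrary(String libraryPath, String fileName) {
        List<String> hits = new ArrayList<>();
        if (libraryPath == null) {
            return hits;
        }
        for (String dir : libraryPath.split(File.pathSeparator)) {
            if (!dir.isEmpty() && new File(dir, fileName).isFile()) {
                hits.add(dir);
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        // "libhadoop.so" on Linux, "hadoop.dll" on Windows
        String fileName = System.mapLibraryName("hadoop");
        String libraryPath = System.getProperty("java.library.path");
        System.out.println("java.library.path = " + libraryPath);
        List<String> hits = findLibrary(libraryPath, fileName);
        System.out.println(hits.isEmpty()
                ? "No " + fileName + " found; expect 'no hadoop in java.library.path'"
                : fileName + " found in " + hits);
    }
}
```

Hadoop normally falls back to its built-in Java classes when the native library cannot be loaded, so this DEBUG message on its own may not be what blocks the HDFS upload.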

1) Check the Hadoop library:

root@Ubuntu-1:/usr/local/hadoop-2.6.0/lib/native# file libhadoop.so.1.0.0

It's a 64-bit library.

2) Try adding the HADOOP_OPTS environment variable:

root@Ubuntu-1:~# vi /etc/profile    # environment variables

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native/"

It doesn't work, and reports the same error.

3) Try adding the HADOOP_OPTS and HADOOP_COMMON_LIB_NATIVE_DIR environment variables:

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_HOME}/lib/native

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/"

It still doesn't work, and reports the same error.

4) Add the Hadoop native library directory to LD_LIBRARY_PATH:

root@Ubuntu-1:~# vi .bashrc

export LD_LIBRARY_PATH=/usr/local/hadoop/lib/native/:$LD_LIBRARY_PATH

It doesn't work, and reports the same error.

5) Append native to the library path in HADOOP_OPTS, like this:

export HADOOP_OPTS="$HADOOP_OPTS -Djava.library.path=$HADOOP_HOME/lib/native"

It doesn't work, and reports the same error.
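Steps 2, 3 and 5 all put -Djava.library.path into HADOOP_OPTS. That variable is read by Hadoop's launcher scripts (bin/hadoop and the scripts it sources) and turned into JVM arguments; the java launcher itself does not read it, so a program started from Eclipse or with a plain java command is unaffected, and edits to /etc/profile only reach new login shells. The hypothetical HadoopOptsCheck below extracts the requested path from a HADOOP_OPTS string and prints it next to the running JVM's java.library.path. It assumes paths without spaces and that the last occurrence of the flag wins.

```java
/**
 * Hypothetical helper: pulls the java.library.path value out of a
 * HADOOP_OPTS-style string and compares it with what the running JVM uses.
 * Assumptions: paths contain no spaces; the last -D occurrence wins.
 */
public class HadoopOptsCheck {

    static final String FLAG = "-Djava.library.path=";

    /** Returns the value of the last -Djava.library.path= token, or null. */
    static String libraryPathFromOpts(String opts) {
        if (opts == null) {
            return null;
        }
        String found = null;
        for (String token : opts.trim().split("\\s+")) {
            if (token.startsWith(FLAG)) {
                found = token.substring(FLAG.length());
            }
        }
        return found;
    }

    public static void main(String[] args) {
        String fromOpts = libraryPathFromOpts(System.getenv("HADOOP_OPTS"));
        String actual = System.getProperty("java.library.path");
        System.out.println("HADOOP_OPTS requests: " + fromOpts);
        System.out.println("This JVM uses:        " + actual);
        if (fromOpts != null && !fromOpts.equals(actual)) {
            System.out.println("The HADOOP_OPTS value differs from this JVM's java.library.path");
        }
    }
}
```

Run it the same way the failing program is run. If the two values differ, the HADOOP_OPTS edits did not reach that JVM, and passing -Djava.library.path=... in the run configuration's VM arguments would be a more direct test.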

-----------------------------------5.20, morning-------------------------

On Windows, I still cannot upload files to HDFS from Java code.

----------------------------------5.24------------

I copied the hadoop-2.6.0 directory to this machine and changed the bin directory and PATH. Windows reported an invalid path, so I changed them back.
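Editing PATH by hand is easy to get wrong, so before reverting it can help to confirm whether the intended bin folder actually appears among PATH's entries. The hypothetical PathCheck below does that comparison; it normalizes each entry with java.io.File, which drops trailing separators and, on Windows, compares paths case-insensitively.

```java
import java.io.File;

/**
 * Hypothetical helper: checks whether a directory (for example the Hadoop
 * bin folder) is one of the entries of a PATH-style variable.
 */
public class PathCheck {

    static boolean isOnPath(String pathVar, String dir) {
        if (pathVar == null || dir == null) {
            return false;
        }
        File target = new File(dir).getAbsoluteFile();
        for (String entry : pathVar.split(File.pathSeparator)) {
            // File normalizes trailing separators; File.equals ignores case on Windows
            if (!entry.isEmpty() && new File(entry).getAbsoluteFile().equals(target)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        if (home == null) {
            System.out.println("HADOOP_HOME is not set");
            return;
        }
        String bin = new File(home, "bin").getPath();
        System.out.println(bin + " on PATH: " + isOnPath(System.getenv("PATH"), bin));
    }
}
```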

---------------------2017.5.26-------------------

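This morning I booted the machine, tried again, and found that it now works. In fact, the settings above should already solve this problem for most people who run into it.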

浙公网安备 33010602011771号
