Comprehensive Exercise: Word-Frequency Statistics Preprocessing

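1. Download the lyrics of an English song or an English article

Replace all separators such as , . ? ! ' : with spaces

Convert all uppercase letters to lowercase

Generate the word list

Generate the word-frequency counts

Sort

Exclude grammatical words: pronouns, articles, and conjunctions

Output the TOP 20 most frequent words

Save the text to be analyzed as a UTF-8 encoded file, and read that file to obtain the content for word-frequency analysis.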

# Read the UTF-8 encoded text; the with block closes the file automatically

with open('news.txt', 'r', encoding='utf-8') as fo:

    news = fo.read()

sep = ''',.!?'":;()'''

exclude = {'to','and','the','that','in','for','of'}

for c in sep:

    news = news.replace(c,' ')

wordList = news.lower().split()

wordDict = {}

for w in wordList:

    wordDict[w] = wordDict.get(w,0)+1

# Remove the excluded words; pop() avoids a KeyError when a word is absent

for w in exclude:

    wordDict.pop(w, None)

# Method 2 (slower: list.count rescans the whole list for every word)

# wordSet = set(wordList)

# for w in wordSet:

#     wordDict[w] = wordList.count(w)

dictList = list(wordDict.items())

dictList.sort(key=lambda x: x[1],reverse=True)

# Slicing avoids an IndexError when there are fewer than 20 distinct words

for item in dictList[:20]:

    print(item)

# Print all word frequencies

for w in wordDict:

    print(w,wordDict[w])
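The steps above can also be written more compactly with the standard library. The following is a minimal sketch, not the method used in this exercise: it assumes `str.translate` for the separator replacement and `collections.Counter` for the counting, and the function name `top_words` and the sample sentence are made up for illustration.

```python
from collections import Counter

SEP = ''',.!?'":;()'''
EXCLUDE = {'to', 'and', 'the', 'that', 'in', 'for', 'of'}


def top_words(text, n=20, sep=SEP, exclude=EXCLUDE):
    """Return the n most frequent words in text as (word, count) pairs."""
    # Map every separator character to a space in a single pass.
    table = str.maketrans(sep, ' ' * len(sep))
    words = text.translate(table).lower().split()
    # Count only the words that are not in the exclusion set.
    counts = Counter(w for w in words if w not in exclude)
    # most_common sorts by count, highest first, and accepts n larger
    # than the number of distinct words.
    return counts.most_common(n)


if __name__ == '__main__':
    # Hypothetical sample text standing in for the contents of news.txt.
    sample = "The cat sat. The cat ran, and the dog sat!"
    print(top_words(sample, n=3))
```

Filtering before counting means the excluded words never enter the dictionary, so no deletion step is needed afterwards.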

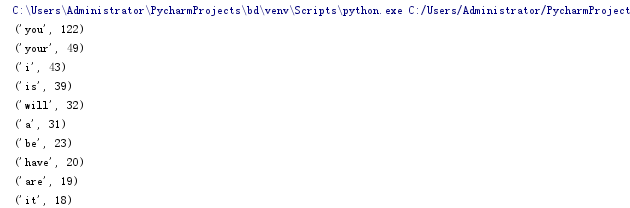

Run result:

2. Chinese word-frequency statistics

Download a long Chinese article.

Read the text to be analyzed from a file.

news = open('gzccnews.txt','r',encoding = 'utf-8').read()

Install and use jieba for Chinese word segmentation.

pip install jieba

import jieba

jieba.lcut(news)  # lcut already returns a list

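Generate the word-frequency counts

Sort

Exclude grammatical words: pronouns, articles, and conjunctions

Output the TOP 20 most frequent words (or save the result to a file)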

import jieba

# Read the file

with open('jueshi.txt', 'r', encoding='utf-8') as fo:

    file = fo.read()

# Punctuation to replace with spaces, plus the whitespace, pronouns, and conjunctions to exclude

str1 = ''',。‘’“”:;()!?、··· '''

dele = {' ','\n','\u3000','的','和','在','是','你','我','他','她','们','了','这','给','又','个','那','里',

        '不','就','着','一','也','都', '者', '有', '出','而'}

jieba.add_word('唐门')

jieba.add_word('魂师')

jieba.add_word('武魂')

jieba.add_word('魂导器')

for c in str1:

    file = file.replace(c, ' ')

tempwords = jieba.lcut(file)

# Count the segmented tokens; file.count() would also match substrings of longer words

count = {}

for w in tempwords:

    if w not in dele:

        count[w] = count.get(w, 0) + 1

countList = list(count.items())

countList.sort(key=lambda x: x[1], reverse=True)

print(countList)

# Save the results to a file

# The raw string keeps the backslash in the Windows path literal

with open(r'F:\cipinTJ.txt', 'a', encoding='utf-8') as fo:

    for word, n in countList[:20]:

        fo.write(word + ':' + str(n) + '\n')
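One detail worth checking in the counting step is that `str.count` on the raw text counts substring occurrences, whereas counting the items of the segmented list counts words. The sketch below illustrates the difference. It uses a hand-written token list in place of real `jieba` output (an assumption, so that the example runs without `jieba` installed), and `count_tokens` is a hypothetical helper name.

```python
from collections import Counter


def count_tokens(tokens, stop_words):
    """Count how often each token occurs, skipping stop words."""
    return Counter(t for t in tokens if t not in stop_words)


if __name__ == '__main__':
    text = '魂师的武魂和魂导器'
    # Hand-written segmentation standing in for jieba.lcut(text).
    tokens = ['魂师', '的', '武魂', '和', '魂导器']
    stop_words = {'的', '和'}

    counts = count_tokens(tokens, stop_words)
    # Token counting: '魂' never occurs as a word of its own.
    print(counts['魂'])
    # Substring counting: '魂' is found inside three longer words.
    print(text.count('魂'))
```

If a single character such as '魂' does appear as a token, substring counting would report it far more often than it actually occurs as a word.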

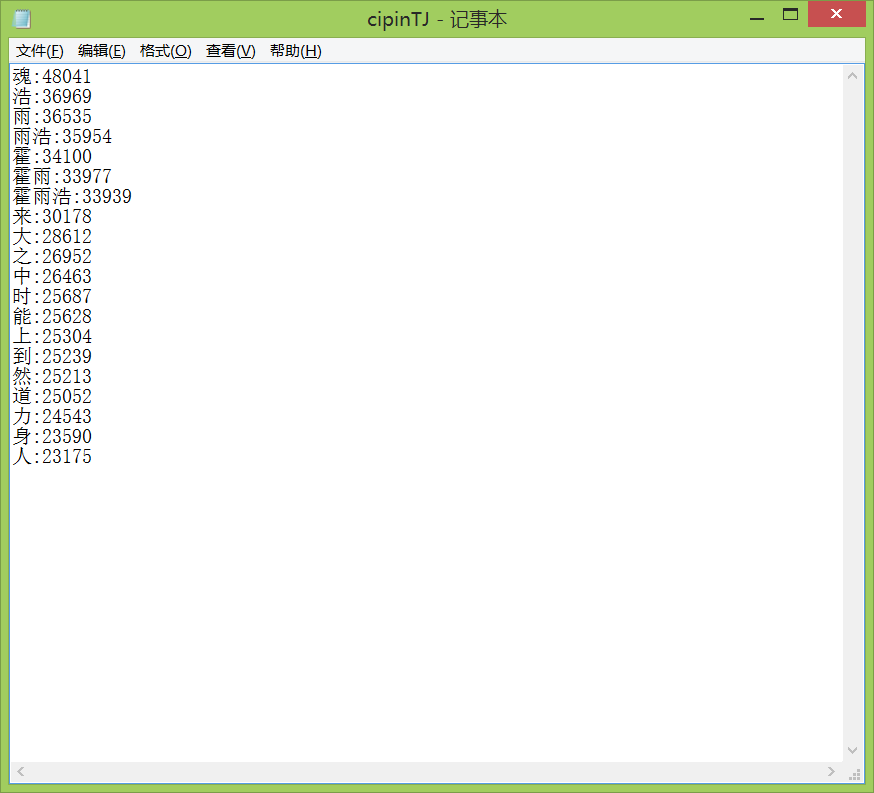

Statistics result: