1: Introduction to Ingress

As we know, service discovery inside Kubernetes is handled by kube-dns. So how do we expose applications deployed in Kubernetes to external clients?

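Services of type NodePort or LoadBalancer can expose an application to external users. Kubernetes also provides another very important resource object for this purpose: the Ingress. For small deployments NodePort may be good enough. As the number of applications grows, however, managing NodePorts becomes very cumbersome. That is where Ingress helps, because it saves you from managing a large number of ports.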

2: The Ingress Resource Object

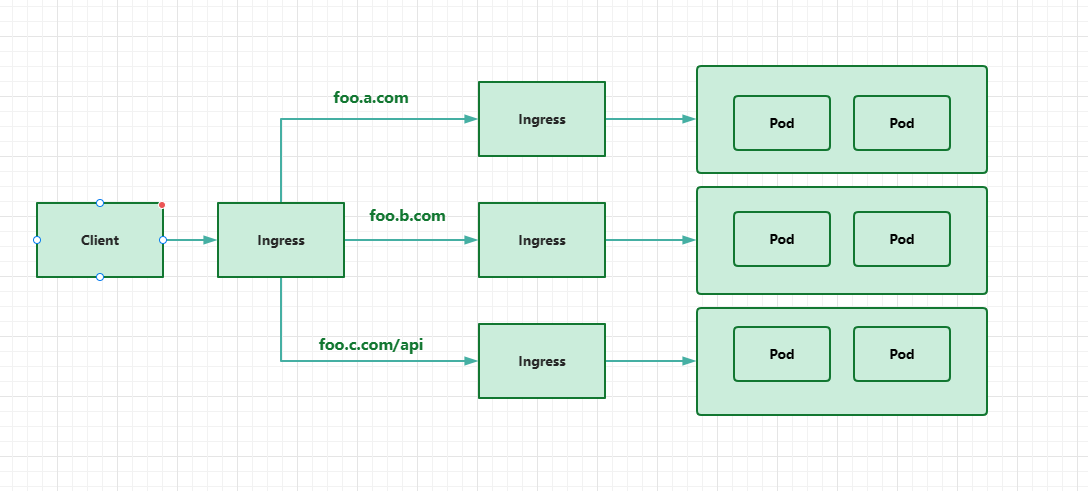

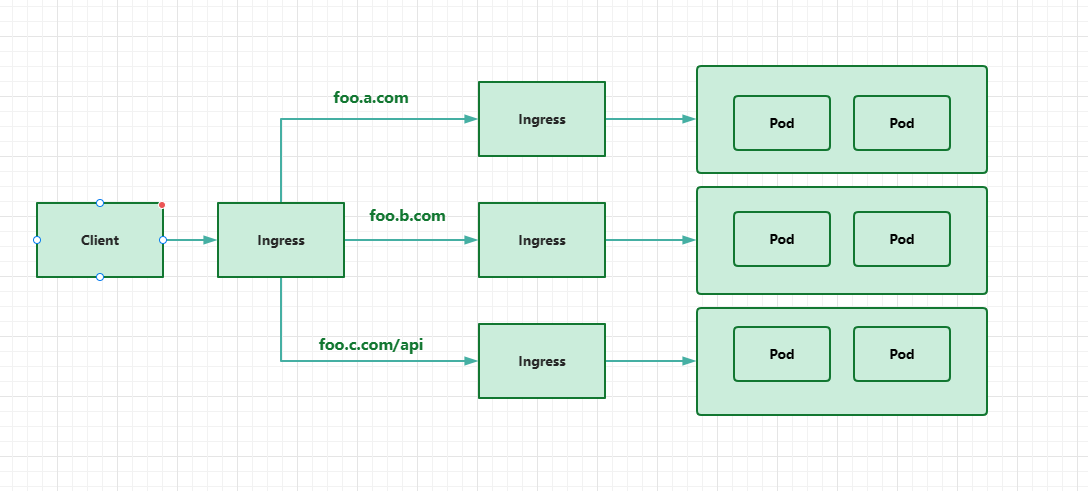

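The Ingress object is a built-in Kubernetes resource that acts as an entry point into the cluster from outside. It forwards external requests to different Services inside the cluster, so it is essentially the equivalent of a load-balancing proxy such as nginx or haproxy. You might think plain nginx would do the job, but using nginx alone has a big drawback: how do you update the nginx configuration every time a new service is added? You can't be expected to edit it by hand or roll the front-end Nginx Pods each time. What if we add a service discovery tool such as consul? That seems workable. Ingress works in essentially this way, except that service discovery is built in rather than provided by a third-party tool. On top of that, Ingress adds domain-based routing rules, and the routing information is refreshed by an Ingress Controller.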

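An Ingress Controller can be thought of as a watcher. It continuously watches kube-apiserver to detect changes to backend Services and Pods in real time. When these change, the Ingress Controller combines them with the Ingress configuration and updates the reverse-proxy load balancer, which achieves service discovery. This is very similar to how the service discovery tools consul and consul-template work together.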

3: Defining an Ingress

Now that we know about this resource, let's define one and take a look:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

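The above is a fairly generic Ingress definition. It configures a single route with path /, so every inbound request whose path starts with / (that is, all of them) is forwarded by the Ingress to port 80 of the nginx Service. You can think of an Ingress as roughly analogous to the nginx.conf file in Nginx.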

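Ingress objects also commonly use annotations to configure additional options. Which annotations are available depends on the Ingress Controller implementation, because different controllers support different annotations.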

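Also note that the Ingress apiVersion changed significantly between older and newer Kubernetes releases. The dividing line is around 1.19. networking.k8s.io/v1 became GA in 1.19, while the older extensions/v1beta1 and networking.k8s.io/v1beta1 versions were removed in 1.22.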
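For comparison, here is a sketch of the same route written against the old and new schemas. It follows the upstream API change: the v1beta1 backend used flat serviceName/servicePort fields, while v1 uses a nested service object and requires pathType. The old spec.backend field was also renamed to spec.defaultBackend in v1. Only the v1 form is accepted by clusters running 1.22 or later.

```yaml
# Old schema (networking.k8s.io/v1beta1; removed in Kubernetes 1.22)
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx
spec:
  rules:
  - http:
      paths:
      - path: /
        backend:
          serviceName: nginx   # flat service name
          servicePort: 80      # flat service port
---
# New schema (networking.k8s.io/v1; GA since Kubernetes 1.19)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix       # required in v1
        backend:
          service:             # nested service reference
            name: nginx
            port:
              number: 80
```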

[root@k-m-1 ~]# kubectl explain ingress

KIND: Ingress

VERSION: networking.k8s.io/v1

DESCRIPTION:

Ingress is a collection of rules that allow inbound connections to reach

the endpoints defined by a backend. An Ingress can be configured to give

services externally-reachable urls, load balance traffic, terminate SSL,

offer name based virtual hosting etc.

FIELDS:

apiVersion <string>

APIVersion defines the versioned schema of this representation of an

object. Servers should convert recognized schemas to the latest internal

value, and may reject unrecognized values. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

kind <string>

Kind is a string value representing the REST resource this object

represents. Servers may infer this from the endpoint the client submits

requests to. Cannot be updated. In CamelCase. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

metadata <Object>

Standard object's metadata. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

spec <Object>

Spec is the desired state of the Ingress. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

status <Object>

Status is the current state of the Ingress. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

[root@k-m-1 ~]# kubectl explain ingress.spec

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: spec <Object>

DESCRIPTION:

Spec is the desired state of the Ingress. More info:

https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#spec-and-status

IngressSpec describes the Ingress the user wishes to exist.

FIELDS:

defaultBackend <Object>

DefaultBackend is the backend that should handle requests that don't match

any rule. If Rules are not specified, DefaultBackend must be specified. If

DefaultBackend is not set, the handling of requests that do not match any

of the rules will be up to the Ingress controller.

ingressClassName <string>

IngressClassName is the name of an IngressClass cluster resource. Ingress

controller implementations use this field to know whether they should be

serving this Ingress resource, by a transitive connection (controller ->

IngressClass -> Ingress resource). Although the

`kubernetes.io/ingress.class` annotation (simple constant name) was never

formally defined, it was widely supported by Ingress controllers to create

a direct binding between Ingress controller and Ingress resources. Newly

created Ingress resources should prefer using the field. However, even

though the annotation is officially deprecated, for backwards compatibility

reasons, ingress controllers should still honor that annotation if present.

rules <[]Object>

A list of host rules used to configure the Ingress. If unspecified, or no

rule matches, all traffic is sent to the default backend.

tls <[]Object>

TLS configuration. Currently the Ingress only supports a single TLS port,

443. If multiple members of this list specify different hosts, they will be

multiplexed on the same port according to the hostname specified through

the SNI TLS extension, if the ingress controller fulfilling the ingress

supports SNI.

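An Ingress spec doesn't have many fields. There are essentially four: defaultBackend, ingressClassName, rules and tls. Let's start with defaultBackend. It handles any incoming request that doesn't match a rule, so it is effectively the default route. It is an Object, so what does it need to specify? Let's take a look: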

[root@k-m-1 ~]# kubectl explain ingress.spec.defaultBackend

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: defaultBackend <Object>

DESCRIPTION:

DefaultBackend is the backend that should handle requests that don't match

any rule. If Rules are not specified, DefaultBackend must be specified. If

DefaultBackend is not set, the handling of requests that do not match any

of the rules will be up to the Ingress controller.

IngressBackend describes all endpoints for a given service and port.

FIELDS:

resource <Object>

Resource is an ObjectRef to another Kubernetes resource in the namespace of

the Ingress object. If resource is specified, a service.Name and

service.Port must not be specified. This is a mutually exclusive setting

with "Service".

service <Object>

Service references a Service as a Backend. This is a mutually exclusive

setting with "Resource".

As you can see, it only needs either a resource or a service. Keep in mind that the two are mutually exclusive and cannot both be set.
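Below is a minimal sketch of an Ingress that relies only on defaultBackend. It assumes the nginx Service from the demo later in this article and the nginx IngressClass. The Ingress name is hypothetical. With no rules defined, requests that match no rule are sent to this backend.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: default-backend-demo   # hypothetical name
spec:
  ingressClassName: nginx
  # No rules: requests that match no rule go to the default backend
  defaultBackend:
    service:                   # mutually exclusive with "resource"
      name: nginx
      port:
        number: 80
```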

Next, let's look at the second field, ingressClassName:

[root@k-m-1 ~]# kubectl explain ingress.spec.ingressClassName

KIND: Ingress

VERSION: networking.k8s.io/v1

FIELD: ingressClassName <string>

DESCRIPTION:

IngressClassName is the name of an IngressClass cluster resource. Ingress

controller implementations use this field to know whether they should be

serving this Ingress resource, by a transitive connection (controller ->

IngressClass -> Ingress resource). Although the

`kubernetes.io/ingress.class` annotation (simple constant name) was never

formally defined, it was widely supported by Ingress controllers to create

a direct binding between Ingress controller and Ingress resources. Newly

created Ingress resources should prefer using the field. However, even

though the annotation is officially deprecated, for backwards compatibility

reasons, ingress controllers should still honor that annotation if present.

[root@k-m-1 ~]# kubectl get ingressclasses.networking.k8s.io

NAME CONTROLLER PARAMETERS AGE

nginx k8s.io/ingress-nginx <none> 12h

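Note that Ingress and IngressClass are two separate resources. IngressClass exists so that an Ingress Controller can tell which Ingress resources it is responsible for. You can install multiple Ingress Controllers in one cluster, and the IngressClass determines which controller picks up the configuration of a given Ingress. Here I installed the official ingress-nginx. The binding can also be configured with the `kubernetes.io/ingress.class` annotation, but that annotation is deprecated and newer versions use the ingressClassName field instead.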
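For reference, an IngressClass matching the one listed above could be declared roughly as follows. This is a sketch. The ingressclass.kubernetes.io/is-default-class annotation is optional; it marks this class as the default for Ingresses that don't set ingressClassName.

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                          # referenced by spec.ingressClassName
  annotations:
    # Optional: use this class for Ingresses that set no ingressClassName
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx     # must match the controller's --controller-class
```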

The third field, rules, is the most important one:

[root@k-m-1 ~]# kubectl explain ingress.spec.rules

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: rules <[]Object>

DESCRIPTION:

A list of host rules used to configure the Ingress. If unspecified, or no

rule matches, all traffic is sent to the default backend.

IngressRule represents the rules mapping the paths under a specified host

to the related backend services. Incoming requests are first evaluated for

a host match, then routed to the backend associated with the matching

IngressRuleValue.

FIELDS:

host <string>

Host is the fully qualified domain name of a network host, as defined by

RFC 3986. Note the following deviations from the "host" part of the URI as

defined in RFC 3986: 1. IPs are not allowed. Currently an IngressRuleValue

can only apply to the IP in the Spec of the parent Ingress.

2. The `:` delimiter is not respected because ports are not allowed.

Currently the port of an Ingress is implicitly :80 for http and :443 for

https. Both these may change in the future. Incoming requests are matched

against the host before the IngressRuleValue. If the host is unspecified,

the Ingress routes all traffic based on the specified IngressRuleValue.

Host can be "precise" which is a domain name without the terminating dot of

a network host (e.g. "foo.bar.com") or "wildcard", which is a domain name

prefixed with a single wildcard label (e.g. "*.foo.com"). The wildcard

character '*' must appear by itself as the first DNS label and matches only

a single label. You cannot have a wildcard label by itself (e.g. Host ==

"*"). Requests will be matched against the Host field in the following way:

1. If Host is precise, the request matches this rule if the http host

header is equal to Host. 2. If Host is a wildcard, then the request matches

this rule if the http host header is to equal to the suffix (removing the

first label) of the wildcard rule.

http <Object>

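In other words, all the concrete routing rules are configured under rules. As mentioned earlier, if no rules are configured (or none of them match), all traffic goes to the resource specified by defaultBackend. The first field, host, specifies a domain name. It must be a fully qualified domain name; IP addresses are not allowed, though a single leading wildcard label such as *.foo.com is permitted. Incoming requests are matched against it using their Host header.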
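To make the host matching rules from the explain output concrete, here is a sketch with one precise host and one wildcard host. The Service names svc-a and svc-b are hypothetical. The wildcard host must be quoted, because a bare * has special meaning in YAML.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: host-demo              # hypothetical name
spec:
  ingressClassName: nginx
  rules:
  # Precise host: matches only when the Host header is exactly foo.bar.com
  - host: foo.bar.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-a        # hypothetical Service
            port:
              number: 80
  # Wildcard host: matches bar.foo.com, but not foo.com or baz.bar.foo.com
  - host: "*.foo.com"
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-b        # hypothetical Service
            port:
              number: 80
```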

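The second field, http, is an object. Loosely speaking, a rule (a host together with its http section) plays the role of a server block in nginx.conf, and each entry under http.paths plays the role of a location block inside it: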

[root@k-m-1 ~]# kubectl explain ingress.spec.rules.http

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: http <Object>

DESCRIPTION:

HTTPIngressRuleValue is a list of http selectors pointing to backends. In

the example: http://<host>/<path>?<searchpart> -> backend where where parts

of the url correspond to RFC 3986, this resource will be used to match

against everything after the last '/' and before the first '?' or '#'.

FIELDS:

paths <[]Object> -required-

A collection of paths that map requests to backends.

[root@k-m-1 ~]# kubectl explain ingress.spec.rules.http.paths

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: paths <[]Object>

DESCRIPTION:

A collection of paths that map requests to backends.

HTTPIngressPath associates a path with a backend. Incoming urls matching

the path are forwarded to the backend.

FIELDS:

backend <Object> -required-

Backend defines the referenced service endpoint to which the traffic will

be forwarded to.

path <string>

Path is matched against the path of an incoming request. Currently it can

contain characters disallowed from the conventional "path" part of a URL as

defined by RFC 3986. Paths must begin with a '/' and must be present when

using PathType with value "Exact" or "Prefix".

pathType <string> -required-

PathType determines the interpretation of the Path matching. PathType can

be one of the following values: * Exact: Matches the URL path exactly. *

Prefix: Matches based on a URL path prefix split by '/'. Matching is done

on a path element by element basis. A path element refers is the list of

labels in the path split by the '/' separator. A request is a match for

path p if every p is an element-wise prefix of p of the request path. Note

that if the last element of the path is a substring of the last element in

request path, it is not a match (e.g. /foo/bar matches /foo/bar/baz, but

does not match /foo/barbaz).

* ImplementationSpecific: Interpretation of the Path matching is up to the

IngressClass. Implementations can treat this as a separate PathType or

treat it identically to Prefix or Exact path types. Implementations are

required to support all path types.

[root@k-m-1 ~]# kubectl explain ingress.spec.rules.http.paths.backend

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: backend <Object>

DESCRIPTION:

Backend defines the referenced service endpoint to which the traffic will

be forwarded to.

IngressBackend describes all endpoints for a given service and port.

FIELDS:

resource <Object>

Resource is an ObjectRef to another Kubernetes resource in the namespace of

the Ingress object. If resource is specified, a service.Name and

service.Port must not be specified. This is a mutually exclusive setting

with "Service".

service <Object>

Service references a Service as a Backend. This is a mutually exclusive

setting with "Resource".

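Put simply, http contains a list of paths, and each path has a matching rule (path plus pathType) and a backend. The backend supports the same options as defaultBackend: either resource or service, and again the two are mutually exclusive.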
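The difference between Exact and Prefix is easiest to see side by side. Here is a sketch with hypothetical Service names. The comments follow the matching rules from the explain output above: Prefix compares whole /-separated elements, and Exact compares the full path.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pathtype-demo          # hypothetical name
spec:
  ingressClassName: nginx
  rules:
  - http:
      paths:
      # Exact: matches /login only (not /login/ or /login/x)
      - path: /login
        pathType: Exact
        backend:
          service:
            name: login-svc    # hypothetical Service
            port:
              number: 80
      # Prefix: matches /foo/bar, /foo/bar/ and /foo/bar/baz, but not /foo/barbaz
      - path: /foo/bar
        pathType: Prefix
        backend:
          service:
            name: foo-svc      # hypothetical Service
            port:
              number: 80
```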

The last field is tls, i.e. the SSL/TLS configuration. It has only a few fields:

[root@k-m-1 ~]# kubectl explain ingress.spec.tls

KIND: Ingress

VERSION: networking.k8s.io/v1

RESOURCE: tls <[]Object>

DESCRIPTION:

TLS configuration. Currently the Ingress only supports a single TLS port,

443. If multiple members of this list specify different hosts, they will be

multiplexed on the same port according to the hostname specified through

the SNI TLS extension, if the ingress controller fulfilling the ingress

supports SNI.

IngressTLS describes the transport layer security associated with an

Ingress.

FIELDS:

hosts <[]string>

Hosts are a list of hosts included in the TLS certificate. The values in

this list must match the name/s used in the tlsSecret. Defaults to the

wildcard host setting for the loadbalancer controller fulfilling this

Ingress, if left unspecified.

secretName <string>

SecretName is the name of the secret used to terminate TLS traffic on port

443. Field is left optional to allow TLS routing based on SNI hostname

alone. If the SNI host in a listener conflicts with the "Host" header field

used by an IngressRule, the SNI host is used for termination and value of

the Host header is used for routing.

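Here you list one or more hostnames under hosts and set secretName to the name of a Secret of type kubernetes.io/tls, which must live in the same namespace as the Ingress. Let's write a fairly complete Ingress resource: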

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  namespace: nginx
  labels:
    app: nginx
spec:
  tls:
  - hosts:
    - nginx.kudevops.cn
    secretName: ssl
  rules:
  - host: nginx.kudevops.cn
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80
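The Ingress above assumes that a Secret named ssl of type kubernetes.io/tls already exists in the nginx namespace. A sketch of such a Secret follows. The PEM bodies are placeholders and must be replaced with a real certificate and key. In practice it is usually simpler to run `kubectl create secret tls ssl --cert=<cert file> --key=<key file> -n nginx`, which builds the same object from files.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: ssl                  # referenced by spec.tls[].secretName
  namespace: nginx           # must be the same namespace as the Ingress
type: kubernetes.io/tls
stringData:                  # plain-text values; the API server stores them base64-encoded
  # Placeholders: replace with a real PEM certificate and private key
  tls.crt: |
    -----BEGIN CERTIFICATE-----
    REPLACE_WITH_CERTIFICATE
    -----END CERTIFICATE-----
  tls.key: |
    -----BEGIN PRIVATE KEY-----
    REPLACE_WITH_PRIVATE_KEY
    -----END PRIVATE KEY-----
```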

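So far we have focused on the Ingress resource itself. Creating an Ingress alone has no effect, though: an Ingress controller must be deployed to interpret Ingress objects. There are many controllers to choose from, such as Traefik, APISIX, Caddy, HAProxy Ingress Controller, Kong and ingress-nginx, and if you have the capacity you can even write your own. The most widely used today are ingress-nginx, APISIX and Traefik. Others are in use too, but they all work in much the same way. The community has also released a more expressive API called the Gateway API. It offers an alternative to Ingress; the goal is not to replace Ingress, but to provide a more configurable option.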

4: Ingress with ingress-nginx

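As we saw earlier, an Ingress resource is only a description. To make it take effect we need to deploy an Ingress controller. There are many Ingress controllers; here we choose ingress-nginx, the officially maintained controller, which is built on Nginx.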

How it works

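The main job of the ingress-nginx controller is to assemble an nginx.conf file and reload Nginx whenever that file changes. It does not, however, reload Nginx for changes that only affect the upstream configuration, such as when the endpoints behind a Service change. The controller uses lua-nginx-module to apply those changes without a reload.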

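Kubernetes controllers use a control-loop pattern to check whether the desired state has been reached or needs updating. To do this, ingress-nginx builds a model from various cluster objects that together reflect the cluster state: Ingresses, Services, Endpoints, Secrets, ConfigMaps and so on. The controller watches these objects continuously, but it cannot tell in advance whether a particular change will affect the final nginx.conf. So whenever it observes any change, it rebuilds a new model from the cluster state and compares it with the current one.

If the two models are identical, no new Nginx configuration is generated and no reload is triggered. Otherwise it checks whether the difference concerns only endpoints. If so, it sends the new endpoint list in an HTTP POST request to a Lua handler running inside Nginx, again avoiding a new configuration and a reload. If the running model and the new model differ in more than just endpoints, a new Nginx configuration is generated from the new model. The biggest benefit of this model-based approach is that it avoids unnecessary reloads when nothing relevant has changed, which saves a large number of Nginx reloads. Here are some situations that do require a reload: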

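1: An Ingress resource is created or deleted

2: A TLS section is added to an existing Ingress

3: A path is added to or removed from an Ingress

4: An Ingress, Service or Secret is deleted

5: A previously missing object referenced by an Ingress, such as a Service or Secret, becomes available

6: A Secret is updated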

In large clusters, frequent Nginx reloads are clearly expensive, so situations that trigger a reload should be kept to a minimum.

5: Installing ingress-nginx

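One thing worth pointing out: my Kubernetes cluster runs on virtual machines, and to use ports 80 and 443 directly there are two options, hostPort or hostNetwork mode. In production, to keep the ingress controller highly available, ingress-nginx is usually pinned to dedicated nodes with a nodeSelector or simply deployed as a DaemonSet. An nginx/haproxy instance then serves as the entry point, reaching the edge nodes through a VIP managed by keepalived.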

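# An edge node is a node the cluster uses to expose services to the outside world. External clients call services inside the cluster through it, so it is the endpoint where traffic between the inside and outside of the cluster meets.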

There are many ways to install ingress-nginx. I downloaded the official YAML manifest and made two main changes: I changed the Deployment to a DaemonSet and enabled hostNetwork in the Pod spec.
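The two changes described above look roughly like this in the controller manifest. This is a fragment, not a complete manifest; everything not shown stays as in the official deploy.yaml. The dnsPolicy line is an addition not mentioned above: without it, Pods on the host network resolve names with the node's DNS settings. The edge-node label used in nodeSelector is hypothetical.

```yaml
apiVersion: apps/v1
kind: DaemonSet                            # changed from Deployment
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  # selector, labels and containers unchanged from the official manifest
  template:
    spec:
      hostNetwork: true                    # listen on the node's ports 80/443 directly
      dnsPolicy: ClusterFirstWithHostNet   # keep cluster DNS while on the host network
      nodeSelector:
        node-role.example.com/edge: "true" # hypothetical label marking edge nodes
```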

[root@k-m-1 ~]# kubectl get pod,svc,ingressclass,validatingwebhookconfigurations -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-admission-create-njkj5 0/1 Completed 0 22h

pod/ingress-nginx-admission-patch-hpvwj 0/1 Completed 0 22h

pod/ingress-nginx-controller-stw7w 1/1 Running 0 22h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller NodePort 10.96.2.149 <none> 80:31022/TCP,443:31793/TCP 22h

service/ingress-nginx-controller-admission ClusterIP 10.96.3.214 <none> 443/TCP 22h

NAME CONTROLLER PARAMETERS AGE

ingressclass.networking.k8s.io/nginx k8s.io/ingress-nginx <none> 22h

NAME WEBHOOKS AGE

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission 1 22h

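# This was deployed in advance. With hostNetwork, clients reach the controller directly on each node's ports 80 and 443, so the Service's load balancing is bypassed. At that point the Service is largely redundant and mainly provides service discovery.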

Next, let's explain what the CONTROLLER field of the IngressClass is for. For that we need to look at the ingress-controller's startup arguments:

[root@k-m-1 ~]# cat ingress-controller/deploy.yaml
......
      containers:
      - args:
        - /nginx-ingress-controller
        - --election-id=ingress-nginx-leader
        - --controller-class=k8s.io/ingress-nginx
        - --ingress-class=nginx
        - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
        - --validating-webhook=:8443
        - --validating-webhook-certificate=/usr/local/certificates/cert
        - --validating-webhook-key=/usr/local/certificates/key
......

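You can see that --controller-class sets the value shown in the CONTROLLER column, which is why CONTROLLER is k8s.io/ingress-nginx. Likewise, the IngressClass name nginx matches the --ingress-class argument. Now let's write a complete demo service by hand: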

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:latest
        ports:
        - name: http
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  selector:
    app: nginx
  ports:
  - name: http
    port: 80
    targetPort: 80
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

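# The main thing worth mentioning here is the nip.io domain. A name such as nginx.192.168.3.236.nip.io resolves to the IP embedded in it (192.168.3.236), which saves you from editing hosts files in test environments. It's worth looking into if you're interested.

# Create the resources above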

[root@k-m-1 ingress]# kubectl apply -f nginx.yaml

deployment.apps/nginx created

service/nginx created

ingress.networking.k8s.io/nginx created

# Check the resources

[root@k-m-1 ingress]# kubectl get pod,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/nginx-586b477ddc-7gcvj 1/1 Running 0 60s

pod/nginx-586b477ddc-9p225 1/1 Running 0 60s

pod/nginx-586b477ddc-mgxxp 1/1 Running 0 60s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h

service/nginx ClusterIP 10.96.3.134 <none> 80/TCP 60s

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/nginx nginx nginx.192.168.3.236.nip.io 192.168.3.236 80 60s

# Verify that the Ingress backend service is reachable

[root@k-m-1 ingress]# curl -I nginx.192.168.3.236.nip.io

HTTP/1.1 200 OK

Date: Sat, 01 Jul 2023 05:31:48 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

# The request reached the Ingress backend, i.e. the nginx service

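What we've covered so far is basic usage of ingress-nginx, without annotations or other specific configuration. Next we'll look at those features in detail and put them to use, starting with the global configuration: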

[root@k-m-1 ~]# kubectl get cm -n ingress-nginx ingress-nginx-controller -oyaml

apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"allow-snippet-annotations":"true"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ingress-nginx","app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx","app.kubernetes.io/version":"1.7.1"},"name":"ingress-nginx-controller","namespace":"ingress-nginx"}}
  creationTimestamp: "2023-06-30T04:56:40Z"
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
  resourceVersion: "8624"
  uid: c14156bb-cb3a-489d-a4a4-6f59fee91a8c

This ConfigMap holds the global configuration of ingress-nginx. Any setting that should apply to every Ingress object can go here. Our main focus, however, will be on annotations.
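Here is a sketch of adding global settings to this ConfigMap. proxy-body-size and use-forwarded-headers are existing ingress-nginx ConfigMap keys, but the values shown are only examples. All ConfigMap values must be strings, hence the quotes.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-controller   # the name passed via --configmap
  namespace: ingress-nginx
data:
  allow-snippet-annotations: "true"
  proxy-body-size: "20m"           # example: max request body size for all Ingresses
  use-forwarded-headers: "true"    # example: pass X-Forwarded-* from an upstream L7 proxy
```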

7: Using Ingress in Practice

7.1: Basic Auth

We can configure authentication on an Ingress object, such as Basic Auth, using a password file generated with htpasswd to verify users:

[root@k-m-1 ~]# yum install -y httpd-tools

[root@k-m-1 ~]# htpasswd -c auth foo

New password:

Re-type new password:

Adding password for user foo

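# I entered 123 as the password. This creates a local file named auth, which we then turn into a generic Secret. ingress-nginx expects the htpasswd data under the key auth, which --from-file=auth provides.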

[root@k-m-1 ~]# kubectl create secret generic basic-auth --from-file=auth

secret/basic-auth created

[root@k-m-1 ~]# kubectl get secrets basic-auth -o yaml

apiVersion: v1
data:
  auth: Zm9vOiRhcHIxJFFaRjAwcS5yJFZxbFduM25TTnVnendkalc2anlDTDEK
kind: Secret
metadata:
  creationTimestamp: "2023-07-02T05:05:11Z"
  name: basic-auth
  namespace: default
  resourceVersion: "515579"
  uid: 0eb8ea81-4cbe-459d-9174-e9ba0371c588
type: Opaque

# Now we can add the corresponding annotations to the Ingress

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic # enable Basic Auth for this Ingress
    nginx.ingress.kubernetes.io/auth-secret: basic-auth # the Secret created earlier that holds the htpasswd file
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required" # realm shown in the authentication challenge
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

# Apply the updated YAML

[root@k-m-1 ingress]# kubectl apply -f nginx.yaml

deployment.apps/nginx unchanged

service/nginx unchanged

ingress.networking.k8s.io/nginx configured

# Request the service again

[root@k-m-1 ingress]# curl -I nginx.192.168.3.236.nip.io

HTTP/1.1 401 Unauthorized

Date: Sun, 02 Jul 2023 05:14:57 GMT

Content-Type: text/html

Content-Length: 172

Connection: keep-alive

WWW-Authenticate: Basic realm="Authentication Required"

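# It returns 401, meaning the client failed authentication. The realm in the WWW-Authenticate header is the one we defined, which confirms that the configuration is in effect. Now let's send the username and password: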

[root@k-m-1 ingress]# curl -u foo:123 -I nginx.192.168.3.236.nip.io

HTTP/1.1 200 OK

Date: Sun, 02 Jul 2023 05:18:12 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

# The request is now authenticated. Beyond a local password file, what if we want to authenticate against an external API instead? ingress-nginx supports that too. Let's see how to configure it:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/auth-url: https://httpbin.org/basic-auth/user/password # URL of the external authentication service
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

# The external service used here is an online one (httpbin.org). You can write your own or use an existing one.

[root@k-m-1 ingress]# kubectl apply -f ingress.yaml

ingress.networking.k8s.io/nginx created

# Request again

[root@k-m-1 ingress]# curl -I nginx.192.168.3.236.nip.io

HTTP/1.1 401 Unauthorized

Date: Sun, 02 Jul 2023 05:26:37 GMT

Content-Type: text/html

Content-Length: 172

Connection: keep-alive

WWW-Authenticate: Basic realm="Fake Realm"

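# External authentication is now in effect, so we must authenticate according to that endpoint's rules. For this endpoint the username is user and the password is password.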

[root@k-m-1 ingress]# curl -u user:password -I nginx.192.168.3.236.nip.io

HTTP/1.1 200 OK

Date: Sun, 02 Jul 2023 05:29:32 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

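# Authentication now succeeds. This is how to integrate an external authentication service, and it is quite practical: with your own authentication API, for example, credentials can be managed through that API without frequently changing the Ingress configuration.

# Trying JWT authentication

# Online token authentication URL: https://httpbin.org/bearer (this endpoint only checks that an Authorization: Bearer header is present; it does not verify the token)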

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/auth-url: https://httpbin.org/bearer # same as Basic Auth above: just point to the external authentication API
spec:
  ingressClassName: nginx
  rules:
  - host: jwt.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

[root@k-m-1 ingress]# kubectl get pod,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/nginx-586b477ddc-94b9c 1/1 Running 0 31s

pod/nginx-586b477ddc-b6nbf 1/1 Running 0 31s

pod/nginx-586b477ddc-hb544 1/1 Running 0 31s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6d20h

service/nginx ClusterIP 10.96.2.51 <none> 80/TCP 31s

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/nginx nginx jwt.192.168.3.236.nip.io 192.168.3.236 80 31s

[root@k-m-1 ingress]# curl -I -H "Authorization: Bearer eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.SflKxwRJSMeKKF2QT4fwpMeJf36POk6yJV_adQssw5c" jwt.192.168.3.236.nip.io

HTTP/1.1 200 OK

Date: Fri, 07 Jul 2023 00:53:44 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

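# Integrating JWT works the same way as Basic Auth. You only point the Ingress at an authentication endpoint, and that endpoint handles all of the authentication logic.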
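Beyond auth-url, ingress-nginx has a few related annotations for external authentication. This sketch assumes a hypothetical auth service at auth.example.com and a hypothetical host and Ingress name. auth-method sets the HTTP method of the auth subrequest. auth-response-headers copies the listed headers from the auth response into the request sent to the backend. auth-signin is where unauthenticated users are redirected. A 2xx response from the auth service allows the request, and 401/403 denies it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: external-auth-demo     # hypothetical name
  annotations:
    # Hypothetical auth service: 2xx allows the request, 401/403 denies it
    nginx.ingress.kubernetes.io/auth-url: https://auth.example.com/verify
    nginx.ingress.kubernetes.io/auth-method: GET
    # Forward identity headers returned by the auth service to the backend
    nginx.ingress.kubernetes.io/auth-response-headers: X-User, X-Email
    # Send unauthenticated users to a (hypothetical) login page
    nginx.ingress.kubernetes.io/auth-signin: https://auth.example.com/login
spec:
  ingressClassName: nginx
  rules:
  - host: app.example.com      # hypothetical host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80
```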

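7.2: URL Rewrite

As the name suggests, this corresponds to Nginx's rewrite functionality. ingress-nginx is often used as a gateway. For example, in plain Nginx, requests to /registry might be forwarded to an endpoint of some service like this: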

location /registry/ {
    proxy_pass http://127.0.0.1/reg/;
}

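Because proxy_pass includes the /reg/ path, the part of the request path matched by the location (/registry/) is replaced with /reg/, effectively stripping /registry from the path. How does ingress-nginx do the same thing in Kubernetes? We can use the rewrite-target annotation. Suppose we want to reach the Nginx service via rewrite.192.168.3.236.nip.io/gateway/. We add a gateway prefix to the Ingress path and rewrite the URL so that the prefix is stripped before the request reaches the backend. The official documentation has more rewrite examples: https://kubernetes.github.io/ingress-nginx/examples/rewrite/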

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
  - host: rewrite.192.168.3.236.nip.io
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

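# The path uses a regular expression. (/|$) matches either a / or the end of the path, so /gateway matches with or without a trailing /, and the second capture group (.*) holds the rest of the path. With rewrite-target: /$2, /gateway/xxx is rewritten to /xxx, and /gateway or /gateway/ to /. Note that the official rewrite example uses pathType: ImplementationSpecific for regex paths. Prefix worked here with controller 1.7.1, but newer controller versions may validate paths more strictly.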

[root@k-m-1 ingress]# kubectl apply -f ingress.yaml

ingress.networking.k8s.io/nginx configured

# A request that matches no rule

[root@k-m-1 ingress]# curl -I rewrite.192.168.3.236.nip.io

HTTP/1.1 404 Not Found

Date: Sun, 02 Jul 2023 06:19:07 GMT

Content-Type: text/html

Content-Length: 146

Connection: keep-alive

# Matches /gateway without a trailing /

[root@k-m-1 ingress]# curl -I rewrite.192.168.3.236.nip.io/gateway

HTTP/1.1 200 OK

Date: Sun, 02 Jul 2023 06:19:13 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

# Matches /gateway with a trailing /

[root@k-m-1 ingress]# curl -I rewrite.192.168.3.236.nip.io/gateway/

HTTP/1.1 200 OK

Date: Sun, 02 Jul 2023 06:19:16 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

# A path one level deeper, captured by the regular expression

[root@k-m-1 ingress]# curl -I rewrite.192.168.3.236.nip.io/gateway/abc

HTTP/1.1 404 Not Found

Date: Sun, 02 Jul 2023 06:20:32 GMT

Content-Type: text/html

Content-Length: 153

Connection: keep-alive

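# The backend log shows the request was rewritten to /abc. nginx then looked for the file /usr/share/nginx/html/abc, which doesn't exist, hence the 404.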

2023/07/02 06:19:31 [error] 30#30: *2 open() "/usr/share/nginx/html/abc" failed (2: No such file or directory), client: 192.168.3.236, server: localhost, request: "HEAD /abc HTTP/1.1", host: "rewrite.192.168.3.236.nip.io"

# What if we want requests to / to be redirected to /gateway instead of returning 404? For that we configure the nginx.ingress.kubernetes.io/app-root annotation.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/app-root: /gateway
spec:
  ingressClassName: nginx
  rules:
  - host: rewrite.192.168.3.236.nip.io
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

# Verify again after applying this configuration

[root@k-m-1 ingress]# kubectl apply -f ingress.yaml

ingress.networking.k8s.io/nginx configured

[root@k-m-1 ingress]# curl rewrite.192.168.3.236.nip.io -v

* Rebuilt URL to: rewrite.192.168.3.236.nip.io/

* Trying 192.168.3.236...

* TCP_NODELAY set

* Connected to rewrite.192.168.3.236.nip.io (192.168.3.236) port 80 (#0)

> GET / HTTP/1.1

> Host: rewrite.192.168.3.236.nip.io

> User-Agent: curl/7.61.1

> Accept: */*

>

< HTTP/1.1 302 Moved Temporarily

< Date: Sun, 02 Jul 2023 07:11:00 GMT

< Content-Type: text/html

< Content-Length: 138

< Connection: keep-alive

# The 302 redirects the request to /gateway (see the Location header)

< Location: http://rewrite.192.168.3.236.nip.io/gateway

<

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.192.168.3.236.nip.io left intact

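Anyone who has done SEO will know that if /gateway and /gateway/ are not consolidated, search engines may treat them as separate pages and split ranking weight between them. So the two are usually merged into one. Let's see how to do that: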

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  labels:
    app: nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
    nginx.ingress.kubernetes.io/app-root: /gateway
    nginx.ingress.kubernetes.io/configuration-snippet: |
      rewrite ^(/gateway)$ $1/ redirect;
spec:
  ingressClassName: nginx
  rules:
  - host: rewrite.192.168.3.236.nip.io
    http:
      paths:
      - path: /gateway(/|$)(.*)
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

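# The key part here is the nginx.ingress.kubernetes.io/configuration-snippet annotation, which lets you write raw Nginx configuration directives inside the Ingress. It only takes effect when allow-snippet-annotations is "true" in the controller ConfigMap, as it is in the global configuration shown earlier.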

[root@k-m-1 ingress]# kubectl apply -f ingress.yaml

ingress.networking.k8s.io/nginx configured

# Verify that the two are merged

# Request without a trailing /

[root@k-m-1 ingress]# curl rewrite.192.168.3.236.nip.io/gateway -v

* Trying 192.168.3.236...

* TCP_NODELAY set

* Connected to rewrite.192.168.3.236.nip.io (192.168.3.236) port 80 (#0)

> GET /gateway HTTP/1.1

> Host: rewrite.192.168.3.236.nip.io

> User-Agent: curl/7.61.1

> Accept: */*

>

< HTTP/1.1 302 Moved Temporarily

< Date: Sun, 02 Jul 2023 09:16:50 GMT

< Content-Type: text/html

< Content-Length: 138

< Location: http://rewrite.192.168.3.236.nip.io/gateway/

< Connection: keep-alive

<

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.192.168.3.236.nip.io left intact

# Request with a trailing /

[root@k-m-1 ingress]# curl rewrite.192.168.3.236.nip.io/gateway/

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# Requests to / are redirected to /gateway

[root@k-m-1 ingress]# curl rewrite.192.168.3.236.nip.io -v

* Rebuilt URL to: rewrite.192.168.3.236.nip.io/

* Trying 192.168.3.236...

* TCP_NODELAY set

* Connected to rewrite.192.168.3.236.nip.io (192.168.3.236) port 80 (#0)

> GET / HTTP/1.1

> Host: rewrite.192.168.3.236.nip.io

> User-Agent: curl/7.61.1

> Accept: */*

>

< HTTP/1.1 302 Moved Temporarily

< Date: Sun, 02 Jul 2023 09:16:09 GMT

< Content-Type: text/html

< Content-Length: 138

< Connection: keep-alive

< Location: http://rewrite.192.168.3.236.nip.io/gateway

<

<html>

<head><title>302 Found</title></head>

<body>

<center><h1>302 Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

* Connection #0 to host rewrite.192.168.3.236.nip.io left intact

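# These are the rewrite operations we use day to day

7.3: Gray Releases (Canary)

In day-to-day work we often need to upgrade services, which involves different release strategies such as rolling updates, blue-green deployments and gray releases. ingress-nginx supports gray releases and testing for different scenarios through annotations, covering canary releases, blue-green deployments and A/B testing. First add the nginx.ingress.kubernetes.io/canary: "true" annotation to enable the canary feature, then configure the canary with the following annotations: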

1:nginx.ingress.kubernetes.io/canary-by-header:基于Request Header的流量切分,适用于灰度发布以及A/B测试,当Request Header设置为always时,请求会将被一直发送到Canary版本:当Request Header设置为never时,请求不会被发送到Canary入口,对于任何其他Header值,将忽略Header,并通过优先级将请求与其他金丝雀规则进行优先级比较

2:nginx.ingress.kubernetes.io/canary-by-header-value:要匹配的Request Header的值,用于通知Ingress将请求到Canary Ingress中指定的服务,当Request Header设置为此值时,它将被路由到Canary入口,该规则允许用户自定义Request Header的值,必须与上一个annotation(canary-by-header)一起使用

3:nginx.ingress.kubernetes.io/canary-by-header-pattern:这与canary-by-header-value的工作方式相同,只是它进行PCRE正则匹配,请注意,当设置canary-by-header-value值时,此注解将被忽略,当给定的Regex在请求处理过程中导致错误时,该请求将被视为不匹配

4:nginx.ingress.kubernetes.io/canary-weight:基于服务权重的流量切分,适用于当蓝绿部署,权重范围0-100按百分比将请求路由到Canary Ingress中指定的服务,权重为0意味着该金丝雀规则不会向Canary入口的服务发送任何请求,权重为100意味着请求都将被发送到Canary入口

5:nginx.ingress.kubernetes.io/canary-by-cookie:基于Cookie的流量切分,适用于灰度发布与A/B测试,用于通知Ingress将请求路由到Canay Ingress中指定的服务的cookie,当cookie值设置为always时,它将被路由到Canary入口,当cookie值被设置为never时,请求不会被发送到Canary入口,对于任何其他值,将忽略cookie并将请求与其他金丝雀规则进行优先级的比较

6:nginx.ingress.kubernetes.io/canary-weight-total:流量总权重,如果未指定,则默认为100

# 需要注意的是,金丝雀规则按照优先级进行排序:canary-by-header -> canary-by-cookie -> canary-weight

Overall, the annotations above fall into two categories:

1: Weight-based canary rules

2: Canary rules based on the user's request (header or cookie)
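The precedence rules above can be condensed into a small decision function. The bash sketch below is a simplified model written for illustration, not the controller's actual implementation. The function name canary_route and its argument order are hypothetical. Bash's =~ (POSIX ERE) stands in for the PCRE matching the controller uses. A "roll" argument replaces the controller's random draw so that the result is deterministic.

```shell
#!/usr/bin/env bash
# Simplified model of ingress-nginx canary routing (illustration only).
# Precedence: canary-by-header -> canary-by-cookie -> canary-weight.
# Arguments (pass "" for "not set"):
#   $1 value of the request header named by canary-by-header
#   $2 canary-by-header-value annotation
#   $3 canary-by-header-pattern annotation (bash ERE here; the controller uses PCRE)
#   $4 value of the cookie named by canary-by-cookie
#   $5 canary-weight annotation (default 0)
#   $6 canary-weight-total annotation (default 100)
#   $7 roll in 1..weight-total, standing in for the controller's random draw
# Prints "canary" or "main".
canary_route() {
  local header="$1" want="$2" pattern="$3" cookie="$4"
  local weight="${5:-0}" total="${6:-100}" roll="${7:-1}"

  # 1. canary-by-header: only consulted when the request carries the header
  if [ -n "$header" ]; then
    if [ -n "$want" ]; then
      # an explicit header value replaces the always/never keywords
      if [ "$header" = "$want" ]; then echo canary; return; fi
    elif [ -n "$pattern" ]; then
      if [[ "$header" =~ $pattern ]]; then echo canary; return; fi
    elif [ "$header" = "always" ]; then
      echo canary; return
    elif [ "$header" = "never" ]; then
      echo main; return
    fi
  fi

  # 2. canary-by-cookie: only the keywords always/never are meaningful
  case "$cookie" in
    always) echo canary; return ;;
    never) echo main; return ;;
  esac

  # 3. canary-weight: a roll within the weight goes to the canary
  if [ "$roll" -lt 1 ] || [ "$roll" -gt "$total" ]; then
    echo "roll must be within 1..$total" >&2
    return 1
  fi
  if [ "$roll" -le "$weight" ]; then echo canary; else echo main; fi
}
```

For instance, canary_route always user-value "" "" 30 100 99 prints main. Once a header value is configured, always is just another non-matching value, and the request falls through to the weight rule. This is the behaviour observed in the header-value experiment in this section.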

Let's walk through an example of a canary release.

1: Deploy a Production application

# production-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: production
  labels:
    app: production
spec:
  selector:
    matchLabels:
      app: production
  template:
    metadata:
      labels:
        app: production
    spec:
      containers:
      - name: production
        image: mirrorgooglecontainers/echoserver:1.10
        ports:
        - name: http
          containerPort: 8080
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP

# production-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: production
  labels:
    app: production
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  selector:
    app: production

# production-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production
  labels:
    app: production
spec:
  ingressClassName: nginx
  rules:
  - host: echo.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production
            port:
              number: 8080

# Create the application

[root@k-m-1 production]# kubectl apply -f .

deployment.apps/production created

ingress.networking.k8s.io/production created

service/production created

# Check the created resources

[root@k-m-1 production]# kubectl get pod,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/production-7f9968b665-5xvhn 1/1 Running 0 41s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d7h

service/production ClusterIP 10.96.2.130 <none> 8080/TCP 41s

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/production nginx echo.192.168.3.236.nip.io 192.168.3.236 80 41s

# Verify that the application is reachable

[root@k-m-1 production]# curl echo.192.168.3.236.nip.io

Hostname: production-fbd99746c-42g75

Pod Information:

node name: k-m-1

pod name: production-fbd99746c-42g75

pod namespace: default

pod IP: 100.114.94.157

Server values:

server_version=nginx: 1.13.3 - lua: 10008

Request Information:

client_address=192.168.3.236

method=GET

real path=/

query=

request_version=1.1

request_scheme=http

request_uri=http://echo.192.168.3.236.nip.io:8080/

Request Headers:

accept=*/*

host=echo.192.168.3.236.nip.io

user-agent=curl/7.61.1

x-forwarded-for=192.168.3.236

x-forwarded-host=echo.192.168.3.236.nip.io

x-forwarded-port=80

x-forwarded-proto=http

x-forwarded-scheme=http

x-real-ip=192.168.3.236

x-request-id=34910bf6634c6ca0a2fd80de5ca13922

x-scheme=http

Request Body:

-no body in request-

# Now simulate a canary release by creating a new version of the application

# production-deploy-canary.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: production-canary
  labels:
    app: production-canary
spec:
  selector:
    matchLabels:
      app: production-canary
  template:
    metadata:
      labels:
        app: production-canary
    spec:
      containers:
      - name: production-canary
        image: mirrorgooglecontainers/echoserver:1.10
        ports:
        - name: http
          containerPort: 8080
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP

# production-service-canary.yaml
apiVersion: v1
kind: Service
metadata:
  name: production-canary
  labels:
    app: production-canary
spec:
  type: ClusterIP
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  selector:
    app: production-canary

# Deploy the canary version of the service

[root@k-m-1 production]# kubectl apply -f production-deploy-canary.yaml -f production-service-canary.yaml

deployment.apps/production-canary created

service/production-canary created

# Check the canary version

[root@k-m-1 production]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/production-canary-7779b5fd87-ndgnn 1/1 Running 0 10s

pod/production-fbd99746c-42g75 1/1 Running 2 (<invalid> ago) 13h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d21h

service/production ClusterIP 10.96.2.130 <none> 8080/TCP 14h

service/production-canary ClusterIP 10.96.3.126 <none> 8080/TCP 10s

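# Step three: split the traffic with an Ingress

1: Weight-based: the typical use case for weight-based traffic splitting is a blue-green deployment, done by setting the weight to 0 or 100. The Green version serves as the main Ingress, and the Blue version's Ingress is configured as the Canary. Initially the weight is set to 0, so no traffic is proxied to Blue. Once the new version has been tested and verified, the Blue weight is set to 100, and all traffic moves from Green to Blue. Here we create a weight-based Canary Ingress with a 30% weight:

# production-ingress-canary.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production-canary
  labels:
    app: production-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"        # enable canary mode
    nginx.ingress.kubernetes.io/canary-weight: "30"   # send 30% of the traffic to the Canary version
spec:
  ingressClassName: nginx
  rules:
  - host: echo.192.168.3.236.nip.io   # must match the host of the main (Green) Ingress
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production-canary   # the Canary version's Service
            port:
              number: 8080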

# Check the result

[root@k-m-1 production]# kubectl get pod,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/production-canary-7779b5fd87-ndgnn 1/1 Running 0 13m

pod/production-fbd99746c-42g75 1/1 Running 2 (<invalid> ago) 13h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 2d21h

service/production ClusterIP 10.96.2.130 <none> 8080/TCP 14h

service/production-canary ClusterIP 10.96.3.126 <none> 8080/TCP 13m

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/production nginx echo.192.168.3.236.nip.io 192.168.3.236 80 14h

ingress.networking.k8s.io/production-canary nginx echo.192.168.3.236.nip.io 192.168.3.236 80 53s

# Now send several requests and see what happens

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

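# We sent 10 requests, and 3 of them reached the Canary version. The count is not exact: repeated runs may show 2 or 5 canary hits. Each request is routed to the Canary independently with a probability of 30%, so small samples fluctuate and the ratio only approaches 30% over many requests. Still, this confirms that the weight-based strategy is in effect. This is the simplest form of weight-based canary release.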
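To see the split converge, send more requests and count the responses per Deployment. Each request is an independent draw, so for 10 requests at 30% the standard deviation is about sqrt(10 × 0.3 × 0.7) ≈ 1.45 hits. That is why 2 to 5 canary hits in a run of 10 is unremarkable. The helper below has a hypothetical name (tally_hostnames). It assumes the echoserver's "Hostname:" lines and standard Deployment pod names of the form <deployment>-<replicaset hash>-<suffix>.

```shell
#!/usr/bin/env bash
# Count echoserver responses per Deployment.
# Reads lines like "Hostname: production-canary-7779b5fd87-ndgnn" on stdin and
# strips the last two dash-separated fields (ReplicaSet hash and pod suffix).
tally_hostnames() {
  awk '/^Hostname:/ {
         n = split($2, part, "-")
         name = part[1]
         for (i = 2; i <= n - 2; i++) name = name "-" part[i]
         count[name]++
       }
       END { for (d in count) print d, count[d] }' | LC_ALL=C sort
}

# Usage against the demo host (requires the cluster from this section):
#   for i in $(seq 1 1000); do curl -s echo.192.168.3.236.nip.io | grep Hostname; done | tally_hostnames
```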

# 2: Header-based: the typical use cases for splitting traffic by request header are canary releases and A/B testing

We add an annotation to the Canary Ingress from above:

# production-ingress-canary.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production-canary
  labels:
    app: production-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"            # enable canary mode
    nginx.ingress.kubernetes.io/canary-by-header: canary  # evaluated before canary-weight; the weight below only applies when the header rule does not decide
    nginx.ingress.kubernetes.io/canary-weight: "30"
spec:
  ingressClassName: nginx
  rules:
  - host: echo.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production-canary
            port:
              number: 8080

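# Note that no header value is set here. If the canary header is never, traffic never reaches the Canary version. If it is always, traffic only reaches the Canary version. For any other value this rule is ignored and the next canary strategy applies.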

[root@k-m-1 production]# kubectl apply -f production-ingress-canary.yaml

ingress.networking.k8s.io/production-canary configured

# Test with never

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: never" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# Test with always

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: always" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

# Neither always nor never: the request falls through to the weight-based rule

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: 123" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# The rule above did not specify a header value; we can also require a specific value

# production-ingress-canary.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production-canary
  labels:
    app: production-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"                     # enable canary mode
    nginx.ingress.kubernetes.io/canary-by-header-value: user-value # only this header value sends traffic to the Canary
    nginx.ingress.kubernetes.io/canary-by-header: canary           # evaluated before the weight below
    nginx.ingress.kubernetes.io/canary-weight: "30"
spec:
  ingressClassName: nginx
  rules:
  - host: echo.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production-canary
            port:
              number: 8080

[root@k-m-1 production]# kubectl apply -f production-ingress-canary.yaml

ingress.networking.k8s.io/production-canary configured

# Try never again

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: never" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# Try always again

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: always" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# Try an arbitrary value again

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: 123" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# Use the configured header value

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -H "canary: user-value" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

# Once a fixed header value is configured, never and always lose their special meaning. Like any other non-matching value, they fall through to the next canary rule (here the 30% weight).

# Cookie-based splitting works much like the request-header annotation. For example, in an A/B test where users from Beijing should see the Canary version, set nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing". The backend then checks each logged-in user. If the user comes from Beijing, it sets the users_from_Beijing cookie to always, which ensures that Beijing users only reach the Canary version. Again, we edit the Canary Ingress from above:

# production-ingress-canary.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: production-canary
  labels:
    app: production-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-cookie: "users_from_Beijing"
    nginx.ingress.kubernetes.io/canary-weight: "30"
spec:
  ingressClassName: nginx
  rules:
  - host: echo.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: production-canary
            port:
              number: 8080

[root@k-m-1 production]# kubectl apply -f production-ingress-canary.yaml

ingress.networking.k8s.io/production-canary configured

# Verify that requests carrying the cookie only reach the Canary version

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -b "users_from_Beijing=always" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

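# With a different cookie, or no cookie at all, the other canary rules apply. (curl -b "xxx" without an = sign treats xxx as a cookie file to read, so the first loop below effectively sends no cookie.)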

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -b "xxx" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-canary-7779b5fd87-ndgnn

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

# never: requests are never routed to the Canary version

[root@k-m-1 production]# for i in $(seq 1 10);do curl -s -b "users_from_Beijing=never" echo.192.168.3.236.nip.io | grep "Hostname"; done

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75

Hostname: production-fbd99746c-42g75
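In the runs above, requests without the cookie still reached the Canary about 30% of the time, because canary-weight: "30" remains in effect for them. To keep non-Beijing users off the Canary entirely, the backend can set the cookie to never for them instead of leaving it unset. Below is a minimal sketch of that back-end decision. The function name canary_cookie_header is hypothetical, and how the user's region is determined is assumed to come from your own login or geo logic.

```shell
#!/usr/bin/env bash
# Hypothetical back-end helper: choose the value of the users_from_Beijing cookie.
# How the region is determined (login profile, GeoIP, ...) is out of scope here.
canary_cookie_header() {
  local region="$1" value
  if [ "$region" = "Beijing" ]; then
    value="always"   # canary-by-cookie: always -> Canary version
  else
    value="never"    # canary-by-cookie: never -> main version, weight rule not consulted
  fi
  printf 'Set-Cookie: users_from_Beijing=%s; Path=/\n' "$value"
}
```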

7.4: HTTPS

To access the application over HTTPS, the controller has to listen on port 443, and a certificate is required. Here we create a self-signed certificate with openssl:

[root@k-m-1 production]# mkdir ssl

[root@k-m-1 production]# cd ssl/

[root@k-m-1 ssl]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginx.192.168.3.236.nip.io"

Generating a RSA private key

..............................................+++++

......................+++++

writing new private key to 'tls.key'

-----

[root@k-m-1 ssl]# ls

tls.crt tls.key

[root@k-m-1 ssl]# kubectl create secret tls nginx-tls --cert=tls.crt --key=tls.key

secret/foo-tls created
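The certificate generated above only sets the subject CN. Clients such as current browsers and Go's crypto/tls (since Go 1.15) ignore the CN and check only the subjectAltName extension. A self-signed certificate meant for more than curl -k should therefore carry a SAN. Below is a variant of the same command, assuming OpenSSL 1.1.1 or newer for -addext. It writes tls.key and tls.crt into the current directory, and they can then be loaded with the same kubectl create secret tls command.

```shell
#!/usr/bin/env bash
set -eu
host="nginx.192.168.3.236.nip.io"

# Self-signed certificate with the hostname in both the CN and the SAN extension
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout tls.key -out tls.crt \
  -subj "/CN=${host}" \
  -addext "subjectAltName=DNS:${host}"

# Show the SAN written into the certificate
openssl x509 -in tls.crt -noout -text | grep -A1 "Subject Alternative Name"
```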

# Now we can create an HTTPS Ingress. It also keeps basic authentication enabled, so the basic-auth Secret referenced by the annotations must already exist.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-with-auth
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    nginx.ingress.kubernetes.io/auth-realm: "Authentication Required"
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - nginx.192.168.3.236.nip.io
    secretName: nginx-tls
  rules:
  - host: nginx.192.168.3.236.nip.io
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx
            port:
              number: 80

[root@k-m-1 ingress]# kubectl get pod,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/nginx-586b477ddc-55wnl 1/1 Running 0 30s

pod/nginx-586b477ddc-ks7pv 1/1 Running 0 30s

pod/nginx-586b477ddc-lrjkb 1/1 Running 0 30s

pod/production-canary-7779b5fd87-ndgnn 1/1 Running 1 (<invalid> ago) 9h

pod/production-fbd99746c-42g75 1/1 Running 3 (<invalid> ago) 23h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d7h

service/nginx ClusterIP 10.96.0.86 <none> 80/TCP 30s

service/production ClusterIP 10.96.2.130 <none> 8080/TCP 23h

service/production-canary ClusterIP 10.96.3.126 <none> 8080/TCP 9h

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/ingress-with-auth nginx nginx.192.168.3.236.nip.io 192.168.3.236 80, 443 30s

ingress.networking.k8s.io/production nginx echo.192.168.3.236.nip.io 192.168.3.236 80 23h

ingress.networking.k8s.io/production-canary nginx echo.192.168.3.236.nip.io 192.168.3.236 80 9h

# The Ingress for nginx.192.168.3.236.nip.io now also serves port 443; let's send a test request

[root@k-m-1 ingress]# curl -u foo:123 -I -k https://nginx.192.168.3.236.nip.io

HTTP/2 200

date: Mon, 03 Jul 2023 11:28:38 GMT

content-type: text/html

content-length: 615

last-modified: Tue, 13 Jun 2023 15:08:10 GMT

etag: "6488865a-267"

accept-ranges: bytes

strict-transport-security: max-age=15724800; includeSubDomains

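# HTTPS access to the service works as well. Note that curl -k skips verification of the untrusted (self-signed) certificate. That covers the basic usage of Ingress; next we move on to more advanced features.

8: Advanced Ingress configuration

So far we have covered the basic scenarios. This section walks through some of the advanced features of Ingress.

8.1: TCP and UDP

The Ingress resource has no direct support for TCP and UDP services. ingress-nginx supports them through the controller flags --tcp-services-configmap and --udp-services-configmap, each pointing to a ConfigMap. In that ConfigMap the key is the external port to use, and the value exposes a service in the format <namespace/service name>:<service port>:[PROXY]:[PROXY]. The port can be given as a port number or a port name. The last two fields are optional and configure the PROXY protocol. Let's expose a MongoDB service to see how this works in practice.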

# mongo-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo
  labels:
    app: mongo
spec:
  selector:
    matchLabels:
      app: mongo
  template:
    metadata:
      labels:
        app: mongo
    spec:
      volumes:
      - name: data
        emptyDir: {}
      containers:
      - name: mongo
        image: mongo:4.0
        ports:
        - name: tcp
          containerPort: 27017
        volumeMounts:
        - name: data
          mountPath: /data/db

# mongo-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: mongo
  labels:
    app: mongo
spec:
  selector:
    app: mongo
  ports:
  - name: tcp
    port: 27017
    targetPort: 27017
  type: ClusterIP

[root@k-m-1 ingress]# kubectl get pod,svc

NAME READY STATUS RESTARTS AGE

pod/mongo-7bf698b468-z6vjd 1/1 Running 0 10h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d18h

service/mongo ClusterIP 10.96.0.130 <none> 27017/TCP 10h

# Normally we would now create an Ingress to expose the service. Since this is a TCP service, we follow the approach described above instead: create a ConfigMap and reference it in the controller's startup arguments.

# tcp-ingress.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-tcp
  namespace: ingress-nginx
data:
  "27017": default/mongo:27017

[root@k-m-1 mongo]# kubectl apply -f tcp-ingress.yaml

configmap/ingress-nginx-tcp created

[root@k-m-1 mongo]# kubectl get cm -n ingress-nginx

NAME DATA AGE

ingress-nginx-controller 1 3d17h

ingress-nginx-tcp 1 33s

kube-root-ca.crt 1 3d17h
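The entry just created, "27017": default/mongo:27017, follows the key/value format described earlier. To make the fields explicit, here is an illustrative parser. The helper name parse_stream_entry is hypothetical, and ingress-nginx ships nothing like it. It only splits the fields: PROXY in the third field enables PROXY-protocol decoding on the listening side, and PROXY in the fourth enables PROXY-protocol encoding towards the upstream.

```shell
#!/usr/bin/env bash
# Illustrative parser for one entry of the tcp/udp services ConfigMap:
#   key   = external port the controller listens on
#   value = <namespace/service name>:<service port>:[PROXY]:[PROXY]
parse_stream_entry() {
  local listen_port="$1" value="$2" svc port decode encode ns name
  IFS=':' read -r svc port decode encode <<< "$value"
  ns="${svc%%/*}"
  name="${svc#*/}"
  # the namespace/ prefix and the port are mandatory
  if [ "$ns" = "$svc" ] || [ -z "$ns" ] || [ -z "$name" ] || [ -z "$port" ]; then
    echo "invalid entry: $value" >&2
    return 1
  fi
  local dec=no enc=no
  [ "$decode" = "PROXY" ] && dec=yes   # 3rd field: accept PROXY protocol from clients
  [ "$encode" = "PROXY" ] && enc=yes   # 4th field: send PROXY protocol to the service
  echo "listen=$listen_port namespace=$ns service=$name port=$port decode_proxy=$dec encode_proxy=$enc"
}
```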

# Next, modify the ingress-nginx controller's startup arguments

...
containers:
- args:
  - /nginx-ingress-controller
  - --election-id=ingress-nginx-leader
  - --controller-class=k8s.io/ingress-nginx
  - --ingress-class=nginx
  - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
  # add this flag
  - --tcp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-tcp
  - --validating-webhook=:8443
  - --validating-webhook-certificate=/usr/local/certificates/cert
  - --validating-webhook-key=/usr/local/certificates/key
...

[root@k-m-1 mongo]# kubectl apply -f ../../ingress-controller/deploy.yaml

namespace/ingress-nginx unchanged

serviceaccount/ingress-nginx unchanged

serviceaccount/ingress-nginx-admission unchanged

role.rbac.authorization.k8s.io/ingress-nginx unchanged

role.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

configmap/ingress-nginx-controller unchanged

service/ingress-nginx-controller unchanged

service/ingress-nginx-controller-admission unchanged

daemonset.apps/ingress-nginx-controller configured

job.batch/ingress-nginx-admission-create unchanged

job.batch/ingress-nginx-admission-patch unchanged

ingressclass.networking.k8s.io/nginx unchanged

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured

# Check the new state

[root@k-m-1 mongo]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-njkj5 0/1 Completed 0 3d17h

ingress-nginx-admission-patch-hpvwj 0/1 Completed 0 3d17h

ingress-nginx-controller-fjj5k 1/1 Running 0 12s

# Check the listening ports. The controller here runs with hostNetwork, so it listens on port 27017 directly on the node

[root@k-m-1 mongo]# netstat -nplt | grep 27017

tcp 0 0 0.0.0.0:27017 0.0.0.0:* LISTEN 642967/nginx: maste

tcp6 0 0 :::27017 :::* LISTEN 642967/nginx: maste

# Test the MongoDB connection. mongosh connects to 127.0.0.1:27017, and the process listening there is nginx, so the connection goes through the ingress-nginx proxy

[root@k-m-1 mongo]# mongosh

Current Mongosh Log ID: 64a34ecaac89be0ce3fcdfb4

Connecting to: mongodb://127.0.0.1:27017/?directConnection=true&serverSelectionTimeoutMS=2000&appName=mongosh+1.10.1

Using MongoDB: 4.0.28

Using Mongosh: 1.10.1

For mongosh info see: https://docs.mongodb.com/mongodb-shell/

To help improve our products, anonymous usage data is collected and sent to MongoDB periodically (https://www.mongodb.com/legal/privacy-policy).

You can opt-out by running the disableTelemetry() command.

------

The server generated these startup warnings when booting

2023-07-03T11:50:01.789+0000:

2023-07-03T11:50:01.789+0000: ** WARNING: Access control is not enabled for the database.

2023-07-03T11:50:01.789+0000: ** Read and write access to data and configuration is unrestricted.

2023-07-03T11:50:01.789+0000:

2023-07-03T11:50:01.789+0000:

2023-07-03T11:50:01.789+0000: ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2023-07-03T11:50:01.789+0000: ** We suggest setting it to 'never'

2023-07-03T11:50:01.789+0000:

------

test> show dbs

admin 32.00 KiB

config 12.00 KiB

local 32.00 KiB

# This confirms that the TCP proxy works

# UDP works the same way; let's try exposing kube-dns on the node

# udp-ingress.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-udp
  namespace: ingress-nginx
data:
  "53": kube-system/kube-dns:53

# Likewise, create this ConfigMap and add the startup argument

[root@k-m-1 dns]# kubectl apply -f udp-ingress.yaml

configmap/ingress-nginx-udp created

[root@k-m-1 dns]# kubectl get cm -n ingress-nginx

NAME DATA AGE

ingress-nginx-controller 1 3d17h

ingress-nginx-tcp 1 23m

ingress-nginx-udp 1 7s

kube-root-ca.crt 1 3d17h

...
containers:
- args:
  - /nginx-ingress-controller
  - --election-id=ingress-nginx-leader
  - --controller-class=k8s.io/ingress-nginx
  - --ingress-class=nginx
  - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
  - --tcp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-tcp
  - --udp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-udp
  - --validating-webhook=:8443
  - --validating-webhook-certificate=/usr/local/certificates/cert
  - --validating-webhook-key=/usr/local/certificates/key
...

[root@k-m-1 dns]# kubectl apply -f ../../ingress-controller/deploy.yaml

namespace/ingress-nginx unchanged

serviceaccount/ingress-nginx unchanged

serviceaccount/ingress-nginx-admission unchanged

role.rbac.authorization.k8s.io/ingress-nginx unchanged

role.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx unchanged

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission unchanged

configmap/ingress-nginx-controller unchanged

service/ingress-nginx-controller unchanged

service/ingress-nginx-controller-admission unchanged

daemonset.apps/ingress-nginx-controller configured

job.batch/ingress-nginx-admission-create unchanged

job.batch/ingress-nginx-admission-patch unchanged

ingressclass.networking.k8s.io/nginx unchanged

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission configured

# Check the listening sockets: nginx now listens on UDP 53, so DNS queries sent to this node's address can resolve in-cluster names

[root@k-m-1 mongo]# netstat -nplu | grep nginx

udp 0 0 0.0.0.0:53 0.0.0.0:* 660477/nginx: maste

udp6 0 0 :::53 :::* 660477/nginx: maste

# Resolve the mongo Service name

[root@k-m-1 mongo]# nslookup mongo.default.svc.cluster.local 192.168.3.236

Server: 192.168.3.236

Address: 192.168.3.236#53

Name: mongo.default.svc.cluster.local

Address: 10.96.0.130

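# The name resolves to the mongo Service. Without this configuration that would not be possible from outside the cluster, but now the Service's FQDN resolves. This is useful in many scenarios, for example local development that needs to reach in-cluster Services. The returned address is a ClusterIP, so the client also needs a route into the Service network.

8.2: Global configuration

Besides customizing individual Ingresses with annotations, we can also configure ingress-nginx globally. The controller's --configmap flag points to a ConfigMap for global settings, and global options are defined directly in that object.

...
containers:
- args:
  - /nginx-ingress-controller
  - --election-id=ingress-nginx-leader
  - --controller-class=k8s.io/ingress-nginx
  - --ingress-class=nginx
  # this is the flag
  - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
  - --tcp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-tcp
  - --udp-services-configmap=$(POD_NAMESPACE)/ingress-nginx-udp
  - --validating-webhook=:8443
  - --validating-webhook-certificate=/usr/local/certificates/cert
  - --validating-webhook-key=/usr/local/certificates/key
...

This ConfigMap already exists, so we can edit it directly: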

[root@k-m-1 mongo]# kubectl get cm -n ingress-nginx

NAME DATA AGE

# this is the ConfigMap

ingress-nginx-controller 1 3d18h

ingress-nginx-tcp 1 62m

ingress-nginx-udp 1 39m

kube-root-ca.crt 1 3d18h

[root@k-m-1 mongo]# kubectl get cm -n ingress-nginx ingress-nginx-controller -oyaml

apiVersion: v1
data:
  allow-snippet-annotations: "true"
kind: ConfigMap
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","data":{"allow-snippet-annotations":"true"},"kind":"ConfigMap","metadata":{"annotations":{},"labels":{"app.kubernetes.io/component":"controller","app.kubernetes.io/instance":"ingress-nginx","app.kubernetes.io/name":"ingress-nginx","app.kubernetes.io/part-of":"ingress-nginx","app.kubernetes.io/version":"1.7.1"},"name":"ingress-nginx-controller","namespace":"ingress-nginx"}}
  creationTimestamp: "2023-06-30T04:56:40Z"
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.7.1
  name: ingress-nginx-controller
  namespace: ingress-nginx
  resourceVersion: "8624"
  uid: c14156bb-cb3a-489d-a4a4-6f59fee91a8c

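By default it contains a single setting, allow-snippet-annotations. It permits the *-snippet annotations (such as configuration-snippet) that inject raw NGINX configuration. If you have tuned NGINX before, you know that some directives belong in the http block rather than in an individual server block. In ingress-nginx such settings go into this ConfigMap. Let's look at some concrete settings:

apiVersion: v1
data:
  allow-snippet-annotations: "true"
  client-header-buffer-size: 32k       # note: keys use hyphens, not the underscores of the NGINX directives
  proxy-body-size: 5m                  # the ConfigMap key for NGINX client_max_body_size
  use-gzip: "true"
  gzip-level: "7"
  large-client-header-buffers: 4 32k
  proxy-connect-timeout: "11"          # integer seconds
  proxy-read-timeout: "12"             # integer seconds
  keep-alive: "75"                     # client keepalive timeout in seconds; connection reuse raises QPS
  keep-alive-requests: "100"
  upstream-keepalive-connections: "10000"
  upstream-keepalive-requests: "100"
  upstream-keepalive-timeout: "60"
  disable-ipv6: "true"
  disable-ipv6-dns: "true"
  max-worker-connections: "65535"
  max-worker-open-files: "10240"
kind: ConfigMap
...

These are only examples; how to tune the controller depends on your actual environment. Let's apply the settings above and look at the effect. After the ConfigMap is edited, the controller reloads NGINX automatically, so we can test right away against a demo: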

[root@k-m-1 ingress]# curl -I nginx.192.168.3.236.nip.io

HTTP/1.1 200 OK

Date: Mon, 03 Jul 2023 23:43:30 GMT

Content-Type: text/html

Content-Length: 615

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Tue, 13 Jun 2023 15:08:10 GMT

ETag: "6488865a-267"

Accept-Ranges: bytes

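# Compared with the earlier responses, there is now a Vary: Accept-Encoding header, a sign that gzip (use-gzip) is in effect. This is what the global configuration is for. All supported options are listed at:

ConfigMap options: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/

We also usually need to tune the nodes that run ingress-nginx, adjusting kernel parameters to suit NGINX's workload. Normally this is done directly on the node (see the official guide https://www.nginx.com/blog/tuning-nginx/). To manage it centrally, we can use an initContainer instead:

initContainers:
- name: init-sysctl
  image: busybox
  securityContext:
    capabilities:
      add:
      - SYS_ADMIN
      drop:
      - ALL
  command:
  - /bin/sh
  - -c
  - |
    mount -o remount rw /proc/sys
    sysctl -w net.core.somaxconn=655350  # listen backlog queue length; size it for your environment
    sysctl -w net.ipv4.tcp_tw_reuse=1
    sysctl -w net.ipv4.ip_local_port_range="1024 65535"
    sysctl -w fs.file-max=1048576
    sysctl -w fs.inotify.max_user_instances=16384
    sysctl -w fs.inotify.max_user_watches=524288
    sysctl -w fs.inotify.max_queued_events=16384
...

Once deployed, the initContainer modifies the node's kernel parameters. This works here because the controller runs with hostNetwork, so the network sysctls it sets apply to the node's network namespace. In production, tune the nodes' kernel parameters accordingly; performance tuning takes considerable experience. For NGINX tuning parameters, see: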

https://cloud.tencent.com/developer/article/1026833

[root@k-m-1 ingress]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-njkj5 0/1 Completed 0 3d22h

ingress-nginx-admission-patch-hpvwj 0/1 Completed 0 3d22h

ingress-nginx-controller-8whvx 1/1 Running 0 89s

[root@k-m-1 ingress]# sysctl -a | grep net.core.somaxconn

net.core.somaxconn = 655350

[root@k-m-1 ingress]# sysctl -a | grep net.ipv4.tcp_tw_reuse

net.ipv4.tcp_tw_reuse = 1

[root@k-m-1 ingress]# sysctl -a | grep net.ipv4.ip_local_port_range

net.ipv4.ip_local_port_range = 1024 65535

[root@k-m-1 ingress]# sysctl -a | grep fs.file-max

fs.file-max = 1048576

[root@k-m-1 ingress]# sysctl -a | grep fs.inotify.max_user_instances

fs.inotify.max_user_instances = 16384

[root@k-m-1 ingress]# sysctl -a | grep fs.inotify.max_user_watches

fs.inotify.max_user_watches = 524288

[root@k-m-1 ingress]# sysctl -a | grep fs.inotify.max_queued_events

fs.inotify.max_queued_events = 16384

# These are the values set by the initContainer. Once they are in place, run a load test to compare performance with and without the tuning.
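Instead of grepping each key by hand, the expected values can be checked in one pass. The helper below has a hypothetical name (verify_sysctl). It reads sysctl -a style "key = value" lines on stdin and compares them with the values the initContainer is supposed to set. Whitespace is normalised because multi-value keys such as ip_local_port_range are printed with a tab.

```shell
#!/usr/bin/env bash
# Compare kernel parameters against expected values.
# stdin: "key = value" lines as printed by `sysctl -a`
# args:  expected settings as key=value
# Prints one OK/DIFF line per key; returns 1 if any key differs or is missing.
verify_sysctl() {
  local current pair key want got rc=0
  current="$(cat)"
  for pair in "$@"; do
    key="${pair%%=*}"
    want="$(printf '%s' "${pair#*=}" | tr -s ' \t' ' ')"
    # sysctl separates key and value with " = "; multi-value keys use tabs
    got="$(printf '%s\n' "$current" | awk -F' = ' -v k="$key" '$1 == k { print $2 }' | tr -s ' \t' ' ')"
    if [ -n "$got" ] && [ "$got" = "$want" ]; then
      echo "OK   $key = $got"
    else
      echo "DIFF $key: want '$want', got '${got:-<missing>}'"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the node, after the controller Pod has restarted:
#   sysctl -a 2>/dev/null | verify_sysctl net.core.somaxconn=655350 net.ipv4.tcp_tw_reuse=1 \
#     "net.ipv4.ip_local_port_range=1024 65535" fs.file-max=1048576
```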

8.3:gRPC

ingress-nginx控制器同样也支持gRPC服务的,gRPC是Google开源的一个高性能RPC通信框架,通过Protocol Buffers作为其IDL,在不同语言开发的平台上使用,同时gRPC基于HTTP/2协议实现,提供了多路复用,头部压缩,流控等特性,极大的提高了客户端与服务端通信效率,gRPC简介在gRPC服务中,客户端应用可以同本地方法一样调用到位于不同服务器上的服务端应用方法,可以更方笔的创建分布式应用和服务,同其他RPC框架一样,gRPC也需要定义一个服务接口,同时指定被远程调用的方法和返回类型,服务端需要实现被定义的接口,同时运行一个gRPC服务器来处理客户端请求

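Here we use the gRPC example application at https://github.com/grpc/grpc-go/blob/master/examples/features/reflection/server/main.go. First, build the application into an image. (The build log below was produced with a variant of this Dockerfile that copies a locally downloaded main.go with COPY instead of fetching it with curl.)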

FROM golang:buster as build
WORKDIR /go/src/greeter-server
# Fetch the raw source file: the github.com/.../blob/... URL returns an HTML page, not Go code
RUN curl -fsSL -o main.go https://raw.githubusercontent.com/grpc/grpc-go/master/examples/features/reflection/server/main.go && \
    go mod init greeter-server && \
    go mod tidy && \
    go build -o /greeter-server main.go

FROM gcr.dockerproxy.com/distroless/base-debian10:latest
COPY --from=build /greeter-server /
EXPOSE 50051
CMD ["/greeter-server"]

[root@k-m-1 grpc]# docker buildx build -t go-grpc-greeter-server:v0.1 .
[+] Building 104.9s (12/12) FINISHED
=> [internal] load build definition from dockerfile 0.0s
=> => transferring dockerfile: 425B 0.0s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for gcr.dockerproxy.com/distroless/base-debian10:latest 4.0s
=> [internal] load metadata for docker.io/library/golang:buster 15.2s
=> [build 1/4] FROM docker.io/library/golang:buster@sha256:7f89be6ec02f7eb4b429e9a43f4ac7d49d2c0cb79a99a28e2d1efe1e7e8a3473 0.0s
=> [internal] load build context 0.0s
=> => transferring context: 29B 0.0s
=> CACHED [stage-1 1/2] FROM gcr.dockerproxy.com/distroless/base-debian10:latest@sha256:101798a3b76599762d3528635113f046 0.0s
=> CACHED [build 2/4] WORKDIR /go/src/greeter-server 0.0s
=> CACHED [build 3/4] COPY main.go /main.go 0.0s
=> [build 4/4] RUN go mod init geeter-server && mv /main.go ./ && go mod tidy && go build -o /greeter-server main.go 88.6s
=> [stage-1 2/2] COPY --from=build /greeter-server / 0.0s
=> exporting to image 0.1s
=> => exporting layers 0.1s
=> => writing image sha256:516f2f1c9a0905a0e3c9ef80c078b5bcac3ca6d18365e936760427ba1319f377 0.0s
=> => naming to docker.io/library/go-grpc-greeter-server:v0.1 0.0s

[root@k-m-1 grpc]# docker images
REPOSITORY               TAG    IMAGE ID       CREATED          SIZE
go-grpc-greeter-server   v0.1   516f2f1c9a09   37 seconds ago   31.3MB

# Next, push the image to your own registry and deploy the application

# grpc-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-grpc-greeter-server
  labels:
    app: go-grpc-greeter-server
spec:
  selector:
    matchLabels:
      app: go-grpc-greeter-server
  template:
    metadata:
      labels:
        app: go-grpc-greeter-server
    spec:
      containers:
      - name: go-grpc-greeter-server
        image: cnych/go-grpc-greeter-server:v0.1  # here I simply use an image someone else already published
        ports:
        - name: grpc
          containerPort: 50051
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
          requests:
            cpu: 50m
            memory: 50Mi

# grpc-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: go-grpc-greeter-server
  labels:
    app: go-grpc-greeter-server
spec:
  selector:
    app: go-grpc-greeter-server
  ports:
  - name: grpc
    port: 50051
    protocol: TCP
    targetPort: 50051
  type: ClusterIP

[root@k-m-1 grpc]# kubectl apply -f .
deployment.apps/go-grpc-greeter-server created
service/go-grpc-greeter-server created
[root@k-m-1 grpc]# kubectl get pod,svc
NAME                                          READY   STATUS    RESTARTS   AGE
pod/go-grpc-greeter-server-79c8bd4d4d-4vrp6   1/1     Running   0          16s

NAME                             TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
service/go-grpc-greeter-server   ClusterIP   10.96.3.34   <none>        50051/TCP   16s
service/kubernetes               ClusterIP   10.96.0.1    <none>        443/TCP     4d3h

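# Next we need to create an Ingress object to expose the gRPC service above. gRPC requires HTTP/2, which the ingress-nginx controller serves only on its HTTPS port, so we need a domain name and a matching SSL certificate. We again use the domain grpc.192.168.3.236.nip.io with a self-signed SSL certificate.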
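Clients negotiate HTTP/2 with the controller during the TLS handshake through ALPN, using the protocol ID "h2". As a rough sketch (not part of the original setup), the Go program below connects to the Ingress host on port 443, trusts only the self-signed grpc.crt generated in the steps that follow, and prints the negotiated protocol. The host and file names come from this section; newTLSConfig is a hypothetical helper. With HTTP/2 enabled on the controller (the ingress-nginx default), "h2" would be the expected result once the Ingress is in place.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
	"net"
	"os"
	"time"
)

// newTLSConfig builds a client TLS config that offers only HTTP/2 ("h2") via ALPN.
// If caPEM is non-nil it becomes the sole trusted root (e.g. the self-signed grpc.crt);
// otherwise the system roots are used.
func newTLSConfig(host string, caPEM []byte) (*tls.Config, error) {
	cfg := &tls.Config{
		ServerName: host, // SNI and hostname verification
		NextProtos: []string{"h2"},
		MinVersion: tls.VersionTLS12,
	}
	if caPEM != nil {
		pool := x509.NewCertPool()
		if !pool.AppendCertsFromPEM(caPEM) {
			return nil, errors.New("no certificates found in CA PEM")
		}
		cfg.RootCAs = pool
	}
	return cfg, nil
}

func main() {
	const host = "grpc.192.168.3.236.nip.io" // Ingress host used in this section
	caPEM, err := os.ReadFile("grpc.crt")
	if err != nil {
		fmt.Println("read grpc.crt:", err)
		return
	}
	cfg, err := newTLSConfig(host, caPEM)
	if err != nil {
		fmt.Println(err)
		return
	}
	dialer := &net.Dialer{Timeout: 5 * time.Second}
	conn, err := tls.DialWithDialer(dialer, "tcp", net.JoinHostPort(host, "443"), cfg)
	if err != nil {
		fmt.Println("TLS handshake failed:", err)
		return
	}
	defer conn.Close()
	fmt.Printf("negotiated protocol: %q\n", conn.ConnectionState().NegotiatedProtocol)
}
```

If the handshake succeeds but the negotiated protocol is empty, the server did not agree to HTTP/2, and gRPC calls through it would fail.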

Generating the SSL certificate

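Forwarding a gRPC service through an Ingress requires an SSL certificate for the corresponding domain so that traffic is carried over TLS. As before, we use OpenSSL to generate a self-signed certificate. The commands below pass -config /etc/pki/tls/openssl.cnf, and that file needs the following sections (in particular the subjectAltName in v3_req):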

[ req ]
#default_bits = 2048
#default_md = sha256
#default_keyfile = privkey.pem
distinguished_name = req_distinguished_name
attributes = req_attributes
req_extensions = v3_req

[ req_distinguished_name ]
countryName = Country Name (2 letter code)
countryName_min = 2
countryName_max = 2
stateOrProvinceName = State or Province Name (full name)
localityName = Locality Name (eg, city)
0.organizationName = Organization Name (eg, company)
organizationalUnitName = Organizational Unit Name (eg, section)
commonName = Common Name (eg, your name or your server\'s hostname)
commonName_max = 64

[ req_attributes ]
challengePassword = A challenge password
challengePassword_min = 4
challengePassword_max = 20

[ v3_req ]
basicConstraints = CA:FALSE
keyUsage = nonRepudiation, digitalSignature, keyEncipherment
subjectAltName = @alt_names

[alt_names]
DNS.1 = grpc.192.168.3.236.nip.io

# Generate the private key and the certificate signing request with the following command

[root@k-m-1 ssl]# openssl req -new -nodes -keyout grpc.key -out grpc.csr -config /etc/pki/tls/openssl.cnf -subj "/C=CN/ST=Beijing/L=Beijing/O=GITLAYZER/OU=TrainService/CN=grpc.192.168.3.236.nip.io"
Generating a RSA private key
.............+++++
...................................................................+++++
writing new private key to 'grpc.key'
-----
[root@k-m-1 ssl]# ls
grpc.csr grpc.key

# Self-sign the request with the following command to produce the certificate

[root@k-m-1 ssl]# openssl x509 -req -days 3650 -in grpc.csr -signkey grpc.key -out grpc.crt -extensions v3_req -extfile /etc/pki/tls/openssl.cnf
Signature ok
subject=C = CN, ST = Beijing, L = Beijing, O = GITLAYZER, OU = TrainService, CN = grpc.192.168.3.236.nip.io
Getting Private key
[root@k-m-1 ssl]# ls
grpc.crt grpc.csr grpc.key

# Then create a TLS secret from the certificate and key

[root@k-m-1 ssl]# kubectl create secret tls grpc-tls --cert=grpc.crt --key=grpc.key
secret/grpc-tls created
[root@k-m-1 ssl]# kubectl get secrets
NAME         TYPE                DATA   AGE
basic-auth   Opaque              1      2d3h
grpc-tls     kubernetes.io/tls   2      6s
nginx-tls    kubernetes.io/tls   2      21h
ssl          kubernetes.io/tls   2      3d15h

# Next, create the Ingress object

# grpc-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-grpc-greeter-server
  labels:
    app: go-grpc-greeter-server
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    # The backend protocol must be set to GRPC here
    nginx.ingress.kubernetes.io/backend-protocol: "GRPC"
spec:
  ingressClassName: nginx
  tls:
  - hosts:
    - grpc.192.168.3.236.nip.io
    secretName: grpc-tls
  rules:
  - host: grpc.192.168.3.236.nip.io