1: Environment overview

| Host | IP | Config | OS |
| --- | --- | --- | --- |
| kubernetes-master | 10.0.0.12 | 2C4G | CentOS 7.9 |
| kubernetes-worker | 10.0.0.13 | 2C2G | CentOS 7.9 |

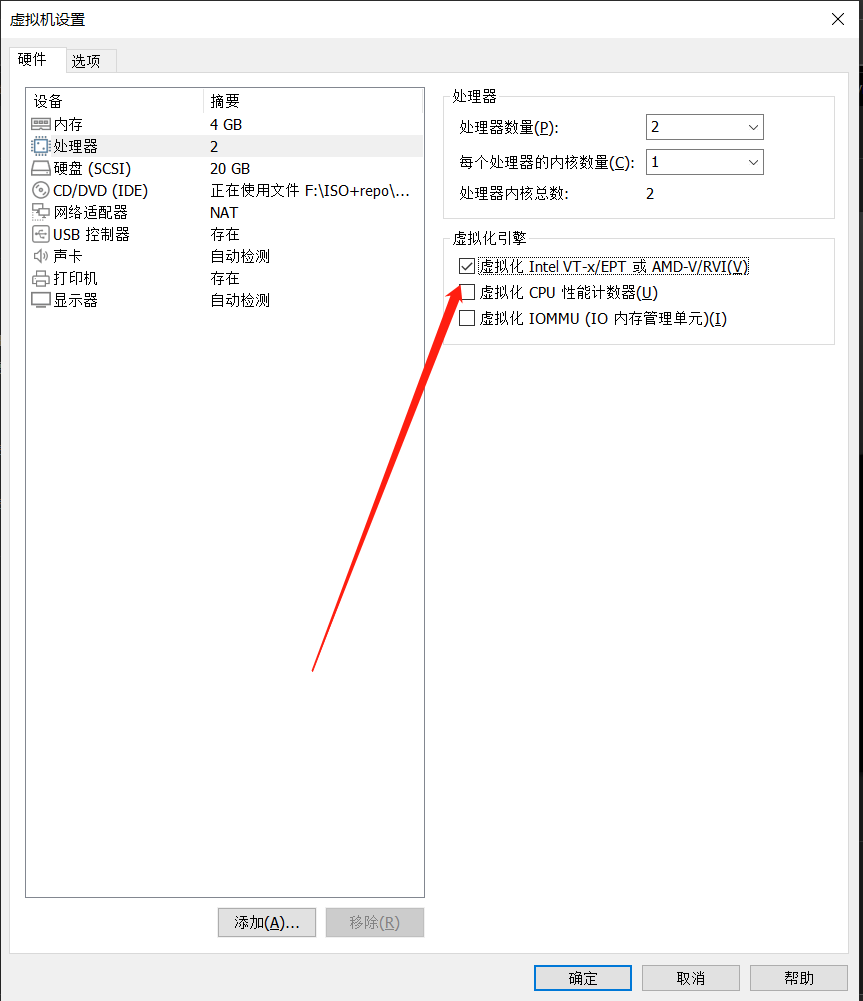

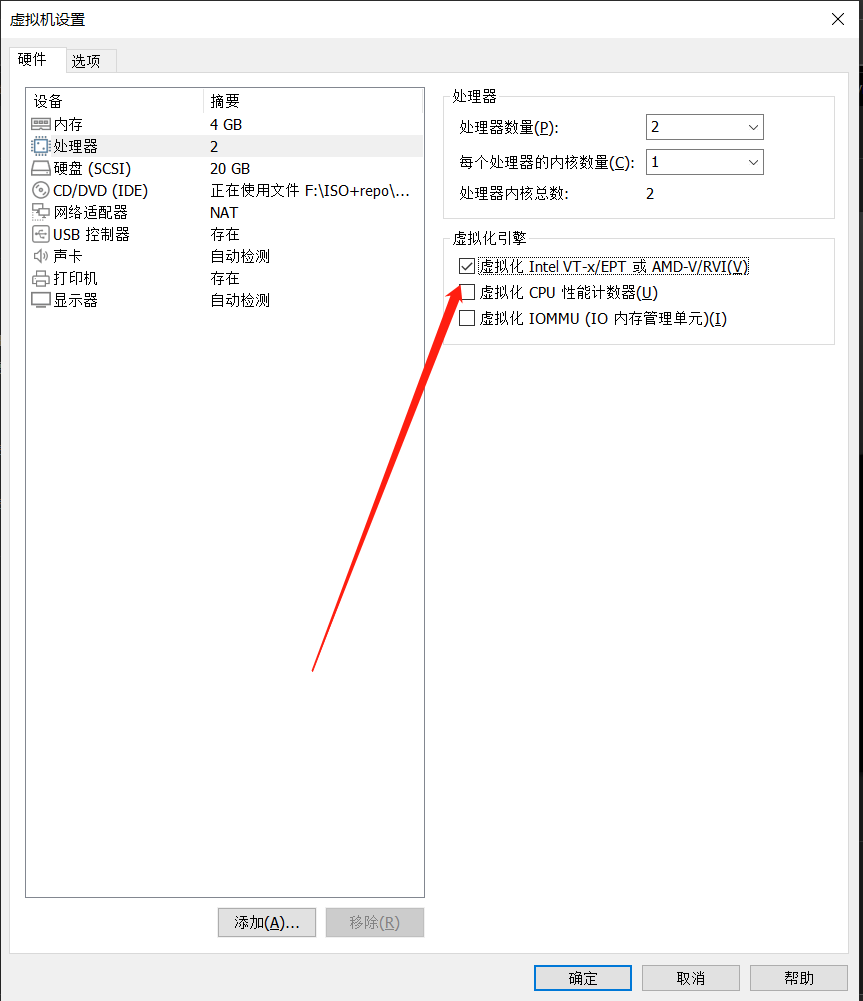

The virtual machines must have CPU virtualization enabled; Kata Containers cannot start its guest VMs without it.

2: Base configuration (apply on all nodes)

1: Set the hostnames

[root@10.0.0.12 ~]# hostnamectl set-hostname kubernetes-master-1

[root@10.0.0.13 ~]# hostnamectl set-hostname kubernetes-worker-1

2: Configure name resolution in /etc/hosts

cat << eof>> /etc/hosts

10.0.0.12 kubernetes-master-1

10.0.0.13 kubernetes-worker-1

eof
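The heredoc above appends unconditionally, so running it a second time duplicates both entries. A minimal sketch of an idempotent variant follows. `add_host_entry` is a hypothetical helper name (not part of the original steps), and the file path is a parameter so the function can be tried against a scratch file before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-style file only when
# NAME does not already appear as a hostname field in that file.
add_host_entry() {
  local file="$1"
  local ip="$2"
  local name="$3"
  # A hostname field is preceded by whitespace and followed by whitespace or end of line.
  if grep -Eq "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Intended usage on each node (run as root):
#   add_host_entry /etc/hosts 10.0.0.12 kubernetes-master-1
#   add_host_entry /etc/hosts 10.0.0.13 kubernetes-worker-1
```

Note that a commented-out line mentioning the hostname also counts as "present" in this sketch; that is a simplification.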

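3: Configure time synchronization

yum install -y ntpdate # install ntpdate

timedatectl set-timezone Asia/Shanghai # set the time zone

ntpdate ntp.aliyun.com # synchronize the clock once

# Add the following line with `crontab -e` to resynchronize every hour

0 */1 * * * /usr/sbin/ntpdate ntp.aliyun.com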
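The hourly crontab entry can also be installed without opening an editor. The sketch below assumes a filter-style approach: `ensure_cron_line` (a hypothetical name) reads the current crontab on standard input and prints it with the entry added only if it is not already there.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read a crontab on stdin and print it back,
# appending ENTRY only when no identical line exists yet.
ensure_cron_line() {
  local entry="$1"
  local current
  current="$(cat)"
  if printf '%s\n' "$current" | grep -Fxq -- "$entry"; then
    printf '%s\n' "$current"
  elif [ -n "$current" ]; then
    printf '%s\n%s\n' "$current" "$entry"
  else
    printf '%s\n' "$entry"
  fi
}

# Intended usage (run as root):
#   crontab -l 2>/dev/null \
#     | ensure_cron_line '0 */1 * * * /usr/sbin/ntpdate ntp.aliyun.com' \
#     | crontab -
```

Because the comparison is an exact fixed-string match on the whole line, running the pipeline repeatedly leaves a single copy of the entry.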

4: Disable the firewall

[root@kubernetes-master-1 ~]# systemctl disable firewalld --now

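5: Disable swap and SELinux

# Disable SELinux for the current boot

[root@kubernetes-master-1 ~]# setenforce 0

# Disable SELinux permanently

[root@kubernetes-master-1 ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Turn swap off for the current boot

[root@kubernetes-master-1 ~]# swapoff -a

# Turn swap off permanently by commenting out the swap entries in /etc/fstab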

[root@kubernetes-master-1 ~]# sed -i 's/.*swap.*/#&/g' /etc/fstab
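The `sed` expression above comments out every line that contains the string "swap" anywhere, and it adds another `#` each time it is run. A narrower sketch, assuming GNU sed and the usual six-field fstab layout, is shown below. `disable_swap_entries` is a hypothetical helper name; it only touches uncommented lines whose third field (the filesystem type) is `swap`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# A line qualifies when it is not already commented and its third
# whitespace-separated field is exactly "swap".
disable_swap_entries() {
  local fstab="$1"
  sed -E -i \
    '/^[[:space:]]*[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/s/^/#/' \
    "$fstab"
}

# Intended usage (run as root, after taking a backup):
#   cp /etc/fstab /etc/fstab.bak && disable_swap_entries /etc/fstab
```

Running the function twice leaves the file unchanged after the first pass, because a commented line no longer matches the pattern.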

6: Load the IPVS modules

[root@kubernetes-master-1 ~]# yum -y install ipset ipvsadm

[root@kubernetes-master-1 ~]# cat << eof>> /etc/sysconfig/modules/ipvs.modules

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

eof

[root@kubernetes-master-1 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack
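The `lsmod | grep` output has to be read by eye. As a rough alternative, the sketch below prints only the modules that are still missing. `missing_modules` is a hypothetical helper; it takes a `/proc/modules`-style listing (module name in the first column) followed by the module names to look for. Modules compiled directly into the kernel never appear in that listing, so they would be reported as missing here.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each wanted module that is absent from a
# /proc/modules-style listing (module name in the first column).
missing_modules() {
  local listing="$1"
  shift
  local mod
  for mod in "$@"; do
    # awk exits 0 only if some line has the module name as its first field.
    awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$listing" \
      || printf '%s\n' "$mod"
  done
}

# Intended usage:
#   missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

An empty result means every listed module is loaded.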

7: Upgrade the kernel

[root@kubernetes-master-1 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-5.el7.elrepo.noarch.rpm

[root@kubernetes-master-1 ~]# yum --enablerepo=elrepo-kernel install -y kernel-lt

[root@kubernetes-master-1 ~]# grub2-set-default 0

[root@kubernetes-master-1 ~]# reboot

[root@kubernetes-master-1 ~]# uname -r

5.4.191-1.el7.elrepo.x86_64

[root@kubernetes-master-1 ~]# modprobe -- nf_conntrack

3: Deploy Containerd

[root@kubernetes-master-1 ~]# cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

# Kubernetes 1.20+ requires the br_netfilter module to be loaded

[root@kubernetes-master-1 ~]# modprobe overlay

[root@kubernetes-master-1 ~]# modprobe br_netfilter

# Configure the kernel parameters

[root@kubernetes-master-1 ~]# cat << eof>>/etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

eof

[root@kubernetes-master-1 ~]# sysctl --system

# Add the repository and install containerd

[root@kubernetes-master-1 ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

[root@kubernetes-master-1 ~]# yum list |grep containerd

[root@kubernetes-master-1 ~]# yum -y install containerd.io

[root@kubernetes-master-1 ~]# mkdir -p /etc/containerd

[root@kubernetes-master-1 ~]# containerd config default > /etc/containerd/config.toml

# Set the cgroup driver to systemd (with the runc v2 shim this is the SystemdCgroup option under runc.options; the legacy systemd_cgroup key only applies to the v1 runtime)

[root@kubernetes-master-1 ~]# sed -ri 's#SystemdCgroup = false#SystemdCgroup = true#' /etc/containerd/config.toml

# Change sandbox_image

[root@kubernetes-master-1 ~]# sed -ri 's#k8s.gcr.io\/pause:3.6#registry.aliyuncs.com\/google_containers\/pause:3.7#' /etc/containerd/config.toml

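4: Deploy Kata

Check whether the current `virtual machine` supports nested virtualization

# Run on the virtual machine; N means nested virtualization is not enabled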

[root@kubernetes-master-1 ~]# cat /sys/module/kvm_intel/parameters/nested

N

# Next, enable nested virtualization

[root@kubernetes-master-1 ~]# modprobe -r kvm-intel

[root@kubernetes-master-1 ~]# modprobe kvm-intel nested=1

[root@kubernetes-master-1 ~]# cat /sys/module/kvm_intel/parameters/nested

Y

# Check whether the virtual machine exposes hardware virtualization (the vmx CPU flag)

[root@kubernetes-master-1 ~]# cat /proc/cpuinfo |grep vmx
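`grep vmx` prints one long flags line per CPU, which is hard to scan. The sketch below reduces that to a single word. `virt_flag` is a hypothetical helper; it accepts a cpuinfo-style file (defaulting to /proc/cpuinfo) and also recognizes `svm`, the flag AMD CPUs use in place of Intel's `vmx`. The steps in this guide use the Intel `kvm-intel` module, so the AMD branch is included only as an assumption about other hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which hardware virtualization flag a
# cpuinfo-style file exposes ("vmx" for Intel VT-x, "svm" for AMD-V).
# Prints "none" and returns 1 when neither flag is present.
virt_flag() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  if grep -qw 'vmx' "$cpuinfo"; then
    echo vmx
  elif grep -qw 'svm' "$cpuinfo"; then
    echo svm
  else
    echo none
    return 1
  fi
}

# Intended usage:
#   virt_flag || echo "enable CPU virtualization for this VM first"
```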

# Configure the Kata repository and install Kata

# Prerequisite: yum-utils must already be installed (it provides yum-config-manager)

[root@kubernetes-master-1 ~]# yum-config-manager --add-repo http://download.opensuse.org/repositories/home:/katacontainers:/releases:/x86_64:/stable-1.11/CentOS_7/home:katacontainers:releases:x86_64:stable-1.11.repo

# Install the packages

[root@kubernetes-master-1 ~]# yum -y install kata-runtime kata-proxy kata-shim --nogpgcheck

# Verify that Kata was installed successfully

[root@kubernetes-master-1 ~]# kata-runtime version

kata-runtime : 1.11.5

commit : 3fc5e06c2e50a265e97aae4b730e21e04969633e

OCI specs: 1.0.1-dev

# Configure containerd so Kata can be selected per workload (runc remains the default runtime)

[root@kubernetes-master-1 ~]# cat /etc/containerd/config.toml

version = 2

root = "/var/lib/containerd"

state = "/run/containerd"

plugin_dir = ""

disabled_plugins = []

required_plugins = []

oom_score = 0

[grpc]

address = "/run/containerd/containerd.sock"

tcp_address = ""

tcp_tls_cert = ""

tcp_tls_key = ""

uid = 0

gid = 0

max_recv_message_size = 16777216

max_send_message_size = 16777216

[ttrpc]

address = ""

uid = 0

gid = 0

[debug]

address = ""

uid = 0

gid = 0

level = ""

[metrics]

address = ""

grpc_histogram = false

[cgroup]

path = ""

[timeouts]

"io.containerd.timeout.shim.cleanup" = "5s"

"io.containerd.timeout.shim.load" = "5s"

"io.containerd.timeout.shim.shutdown" = "3s"

"io.containerd.timeout.task.state" = "2s"

[plugins]

[plugins."io.containerd.gc.v1.scheduler"]

pause_threshold = 0.02

deletion_threshold = 0

mutation_threshold = 100

schedule_delay = "0s"

startup_delay = "100ms"

[plugins."io.containerd.grpc.v1.cri"]

disable_tcp_service = true

stream_server_address = "127.0.0.1"

stream_server_port = "0"

stream_idle_timeout = "4h0m0s"

enable_selinux = false

selinux_category_range = 1024

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7"

stats_collect_period = 10

systemd_cgroup = false

enable_tls_streaming = false

max_container_log_line_size = 16384

disable_cgroup = false

disable_apparmor = false

restrict_oom_score_adj = false

max_concurrent_downloads = 3

disable_proc_mount = false

unset_seccomp_profile = ""

tolerate_missing_hugetlb_controller = true

disable_hugetlb_controller = true

ignore_image_defined_volumes = false

[plugins."io.containerd.grpc.v1.cri".containerd]

snapshotter = "overlayfs"

default_runtime_name = "runc"

no_pivot = false

disable_snapshot_annotations = true

discard_unpacked_layers = false

[plugins."io.containerd.grpc.v1.cri".containerd.default_runtime]

runtime_type = "io.containerd.runtime.v1.linux"

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]

runtime_type = "io.containerd.runtime.v1.linux"

runtime_engine = "/usr/bin/kata-runtime"

runtime_root = ""

privileged_without_host_devices = false

base_runtime_spec = ""

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

runtime_engine = ""

runtime_root = ""

privileged_without_host_devices = false

base_runtime_spec = ""

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata]

runtime_type = "io.containerd.kata.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.katacli]

runtime_type = "io.containerd.runc.v1"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.katacli.options]

NoPivotRoot = false

NoNewKeyring = false

ShimCgroup = ""

IoUid = 0

IoGid = 0

BinaryName = "/usr/bin/kata-runtime"

Root = ""

CriuPath = ""

SystemdCgroup = false

[plugins."io.containerd.grpc.v1.cri".cni]

bin_dir = "/opt/cni/bin"

conf_dir = "/etc/cni/net.d"

max_conf_num = 1

conf_template = ""

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["https://6ze43vnb.mirror.aliyuncs.com"]

[plugins."io.containerd.grpc.v1.cri".image_decryption]

key_model = ""

[plugins."io.containerd.grpc.v1.cri".x509_key_pair_streaming]

tls_cert_file = ""

tls_key_file = ""

[plugins."io.containerd.internal.v1.opt"]

path = "/opt/containerd"

[plugins."io.containerd.internal.v1.restart"]

interval = "10s"

[plugins."io.containerd.metadata.v1.bolt"]

content_sharing_policy = "shared"

[plugins."io.containerd.monitor.v1.cgroups"]

no_prometheus = false

[plugins."io.containerd.runtime.v1.linux"]

shim = "containerd-shim"

runtime = "runc"

runtime_root = ""

no_shim = false

shim_debug = false

[plugins."io.containerd.runtime.v2.task"]

platforms = ["linux/amd64"]

[plugins."io.containerd.service.v1.diff-service"]

default = ["walking"]

[plugins."io.containerd.snapshotter.v1.devmapper"]

root_path = ""

pool_name = ""

base_image_size = ""

async_remove = false

[root@kubernetes-master-1 ~]# systemctl daemon-reload

[root@kubernetes-master-1 ~]# systemctl restart containerd.service

[root@kubernetes-master-1 ~]# systemctl status containerd.service
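After the restart it is worth confirming which runtime handlers the configuration actually declares, since a Kubernetes RuntimeClass refers to a handler by the name used under `containerd.runtimes`. The sketch below extracts those names from the TOML file with `sed`. `list_runtime_handlers` is a hypothetical helper and relies on the table headers being written in the same form as in the configuration shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list the runtime handler names declared as
# [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.<name>]
# in a containerd config file. Nested tables such as "<name>.options"
# are skipped because the name must be followed directly by "]".
list_runtime_handlers() {
  local config="${1:-/etc/containerd/config.toml}"
  sed -nE \
    's/^[[:space:]]*\[plugins\."io\.containerd\.grpc\.v1\.cri"\.containerd\.runtimes\.([A-Za-z0-9_-]+)\][[:space:]]*$/\1/p' \
    "$config"
}

# Intended usage:
#   list_runtime_handlers /etc/containerd/config.toml
```

For the configuration in this section the list should contain `runc`, `kata`, and `katacli`.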

5: Deploy Kubernetes

# Add the package repository

[root@kubernetes-master-1 ~]# cat << eof>> /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

eof

# Install kubeadm, kubectl, and kubelet

[root@kubernetes-master-1 ~]# yum -y install kubeadm-1.24.0-0 kubelet-1.24.0-0 kubectl-1.24.0-0

# Configure crictl

[root@kubernetes-master-1 ~]# cat << EOF >> /etc/crictl.yaml

runtime-endpoint: unix:///var/run/containerd/containerd.sock

image-endpoint: unix:///var/run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# Generate the default configuration, then edit it to match the file shown below

[root@kubernetes-master-1 ~]# kubeadm config print init-defaults > kubeadm-init.yaml

[root@kubernetes-master-1 ~]# cat kubeadm-init.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.12
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: kubernetes-master-1
  taints:
  - effect: "NoSchedule"
    key: "node-role.kubernetes.io/master"
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.24.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 200.1.0.0/16
  podSubnet: 100.1.0.0/16
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd

# List the required images

[root@kubernetes-master-1 ~]# kubeadm config images list --config kubeadm-init.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.24.0

registry.aliyuncs.com/google_containers/pause:3.7

registry.aliyuncs.com/google_containers/etcd:3.5.3-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Pre-pull the images

[root@kubernetes-master-1 ~]# kubeadm config images pull --config kubeadm-init.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.24.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.7

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Initialize the cluster

[root@kubernetes-master-1 ~]# kubeadm init --config=kubeadm-init.yaml

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.12:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:6fe3166a52d72deeb6b90e77c86caa75dce2ee13656f1ce238a25c59af6e2503

# Deploy the network plugin (Calico)

[root@kubernetes-master-1 ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

6: Verify that Kata works

1: Create the RuntimeClass

[root@kubernetes-master-1 ~]# cat kata.yaml

kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: kata-containers
handler: kata

2: Create three Nginx test Deployments to exercise Kata

[root@kubernetes-master-1 ~]# cat nginx-1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-1
  template:
    metadata:
      labels:
        app: nginx-1
    spec:
      runtimeClassName: kata-containers
      containers:
      - name: nginx-1
        image: nginx:alpine
        ports:
        - containerPort: 80

[root@kubernetes-master-1 ~]# cat nginx-2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-2
  template:
    metadata:
      labels:
        app: nginx-2
    spec:
      runtimeClassName: kata-containers
      containers:
      - name: nginx-2
        image: nginx:alpine
        ports:
        - containerPort: 80

[root@kubernetes-master-1 ~]# cat nginx-3.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-3
  annotations:
    io.kubernetes.cri.untrusted-workload: "true"
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-3
  template:
    metadata:
      labels:
        app: nginx-3
    spec:
      containers:
      - name: nginx-3
        image: nginx:alpine
        ports:
        - containerPort: 80

[root@kubernetes-master-1 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-1-6cd7c88fff-x89vv 1/1 Running 0 3m58s

nginx-2-5d89b8b869-dpprr 1/1 Running 0 3m56s

nginx-3-78957bd984-pv4qv 1/1 Running 0 3m52s

# On the worker node, list the containers managed by Kata

[root@kubernetes-worker-1 ~]# kata-runtime list

ID PID STATUS BUNDLE CREATED OWNER

3d847b07ec3fbf228abb90557affd53e90ac2b8483860515a953e5f365e50194 -1 running /run/containerd/io.containerd.runtime.v2.task/k8s.io/3d847b07ec3fbf228abb90557affd53e90ac2b8483860515a953e5f365e50194 2022-07-31T06:03:40.341752158Z #0

912a2806949ff0f46d26fedf0c51664126d7dd9429b888b3a73e186790993daa -1 running /run/containerd/io.containerd.runtime.v2.task/k8s.io/912a2806949ff0f46d26fedf0c51664126d7dd9429b888b3a73e186790993daa 2022-07-31T06:03:40.172305331Z #0

04fdccc4d59256191ab98dbbdf1d432320f640f8e26c7434bdcd48d1950341b1 -1 running /run/containerd/io.containerd.runtime.v2.task/k8s.io/04fdccc4d59256191ab98dbbdf1d432320f640f8e26c7434bdcd48d1950341b1 2022-07-31T06:03:38.209287414Z #0

6cae70f9863b788accb7a128961c0938d0054835eef37aae9990f47aa6f688f9 -1 running /run/containerd/io.containerd.runtime.v2.task/k8s.io/6cae70f9863b788accb7a128961c0938d0054835eef37aae9990f47aa6f688f9 2022-07-31T06:03:38.409381969Z #0

# Compare the kernel version reported inside each pod

[root@kubernetes-master-1 ~]# kubectl exec -it pods/nginx-1-6cd7c88fff-x89vv -- uname -r

5.4.32-11.2.container

[root@kubernetes-master-1 ~]# kubectl exec -it pods/nginx-2-5d89b8b869-dpprr -- uname -r

5.4.32-11.2.container

[root@kubernetes-master-1 ~]# kubectl exec -it pods/nginx-3-78957bd984-pv4qv -- uname -r

5.4.208-1.el7.elrepo.x86_64

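The first two pods clearly report a different kernel from the third. nginx-1 and nginx-2 run on the Kata guest kernel (5.4.32-11.2.container), while nginx-3 reports the node's elrepo kernel. In nginx-3 the untrusted-workload annotation sits on the Deployment's own metadata rather than on the pod template, so it never reaches the pod, and the pod runs under the default runc runtime.

The Kata pods are therefore isolated from the host kernel.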
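The kernel comparison above can be turned into a small check. This is only a heuristic sketch: `classify_runtime` is a hypothetical helper that compares two kernel release strings, on the assumption that a Kata pod boots its own guest kernel while a runc pod shares the kernel of the node it runs on. The host value should come from the node that actually hosts the pod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a node's kernel release with the one a pod reports.
# Equal strings suggest the pod shares the host kernel (runc);
# different strings suggest a separate guest kernel (for example Kata).
classify_runtime() {
  local host_kernel="$1"
  local pod_kernel="$2"
  if [ "$host_kernel" = "$pod_kernel" ]; then
    echo "shared host kernel"
  else
    echo "separate guest kernel"
  fi
}

# Intended usage (pod name taken from the kubectl output above,
# host release taken from `uname -r` on the node running the pod):
#   classify_runtime "5.4.208-1.el7.elrepo.x86_64" \
#     "$(kubectl exec nginx-1-6cd7c88fff-x89vv -- uname -r)"
```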