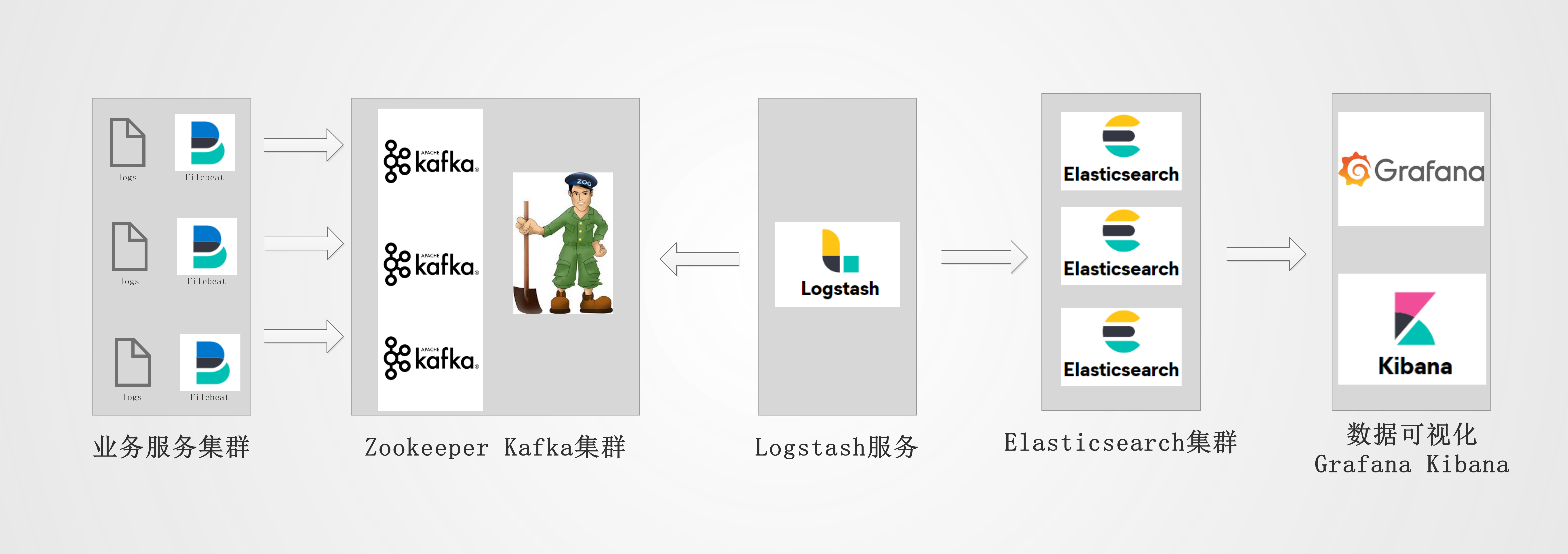

1: Environment

| Host | Roles |
| --- | --- |
| 10.0.0.10 | ElasticSearch_master, kafka + zk-1 |
| 10.0.0.11 | ElasticSearch_node_1, kafka + zk-2 |
| 10.0.0.12 | ElasticSearch_node_2, kafka + zk-3 |
| 10.0.0.13 | logstash, kibana, cerebro |

| Service | Version |
| --- | --- |
| java | 1.8.0_221 |
| elasticsearch | 7.10.1 |
| filebeat | 7.10.1 |
| kibana | 7.10.1 |
| logstash | 7.10.1 |
| cerebro | 0.9.2-1 |
| kafka | 2.12-2.3.0 |
| zookeeper | 3.5.6 |

2: Parameter configuration

Run on the ES nodes (all three)

# Set the hostname (run the matching command on each node)

hostnamectl set-hostname elk_master_1

hostnamectl set-hostname elk_node_1

hostnamectl set-hostname elk_node_2

# Raise the file descriptor and process limits

cat << eof>> /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 65536

* hard nproc 65536

* hard memlock unlimited

* soft memlock unlimited

eof

# Raise the systemd default limits

cat << eof>>/etc/systemd/system.conf

DefaultLimitNOFILE=65536

DefaultLimitNPROC=32000

DefaultLimitMEMLOCK=infinity

eof

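# Tune kernel parameters for ES

cat << eof>>/etc/sysctl.conf

# Minimize swapping

vm.swappiness = 0

# Maximum number of memory map areas per process; ES requires at least 262144

vm.max_map_count = 262144

# Raise the limit on the listen backlog

net.core.somaxconn = 65535

# System-wide maximum number of open file descriptors; 655360 or higher is recommended

fs.file-max = 655360

# Enable IPv4 forwarding

net.ipv4.ip_forward = 1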

eof

# Add the nodes to /etc/hosts

cat << eof>> /etc/hosts

10.0.0.10 elk_master_1

10.0.0.11 elk_node_1

10.0.0.12 elk_node_2

eof

# Reboot the server
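After the reboot it can be worth confirming that the new limits actually took effect. The following is only a minimal sketch: `check_min` is a helper name made up for this guide, and the thresholds are simply the values written to the config files above.

```shell
#!/bin/sh
# check_min NAME ACTUAL MINIMUM
# Prints "OK NAME=ACTUAL" when ACTUAL >= MINIMUM,
# otherwise prints "LOW NAME=ACTUAL (want >= MINIMUM)" and returns 1.
# (check_min is a hypothetical helper, not part of any tool used here.)
check_min() {
  if [ "$2" -ge "$3" ]; then
    echo "OK $1=$2"
  else
    echo "LOW $1=$2 (want >= $3)"
    return 1
  fi
}

# Example usage on a node after the reboot (uncomment to run):
# check_min vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144
# check_min fs.file-max      "$(sysctl -n fs.file-max)"      655360
# check_min nofile           "$(ulimit -n)"                  65536
```

Any line starting with LOW points at a setting that did not apply, which usually means the file was not written or the node was not rebooted.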

3: Deploy Zookeeper

Run on the ES nodes (all three)

# Create the Zookeeper directories

mkdir -p /usr/local/zk/{log,data}

# Download and unpack ZK

wget https://dlcdn.apache.org/zookeeper/zookeeper-3.5.9/apache-zookeeper-3.5.9-bin.tar.gz

tar xf apache-zookeeper-3.5.9-bin.tar.gz

mv apache-zookeeper-3.5.9-bin zookeeper

# Edit the configuration file

[root@elk_master_1 conf]# pwd

/root/zookeeper/conf

[root@elk_master_1 conf]# cat zoo.cfg

tickTime=2000

syncLimit=5

dataDir=/usr/local/zk/data

dataLogDir=/usr/local/zk/log

maxClientCnxns=60

initLimit=10

clientPort=2181

server.1=10.0.0.10:2888:3888

server.2=10.0.0.11:2888:3888

server.3=10.0.0.12:2888:3888

# Write the node ID

# On each of the three nodes, write that node's ID to /usr/local/zk/data/myid

[root@elk_master_1 ~]# echo "1" > /usr/local/zk/data/myid

[root@elk_node_1 ~]# echo "2" > /usr/local/zk/data/myid

[root@elk_node_2 ~]# echo "3" > /usr/local/zk/data/myid
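The number in myid has to match the `server.N` line for that host in zoo.cfg. A small sketch of how the ID could be looked up instead of typed by hand; `myid_for_ip` is a made-up helper and it assumes the `server.N` entries use IPv4 addresses, as they do in this setup.

```shell
#!/bin/sh
# myid_for_ip CFG IP
# Prints N for the line "server.N=IP:port:port" in CFG.
# Prints nothing when IP is not listed.
# (myid_for_ip is a hypothetical helper written for this guide.)
myid_for_ip() {
  awk -F'[.=:]' -v ip="$2" '
    /^server\.[0-9]+=/ {
      # Fields: "server", N, the four IPv4 octets, then the two ports.
      if (($3 "." $4 "." $5 "." $6) == ip) print $2
    }' "$1"
}

# Example usage on elk_node_1 (uncomment to run):
# myid_for_ip /root/zookeeper/conf/zoo.cfg 10.0.0.11 > /usr/local/zk/data/myid
```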

# Install Java

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

# Start the Zookeeper cluster

cd zookeeper/bin/

./zkServer.sh start

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /root/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the cluster status

./zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /root/zookeeper/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

# Set the global environment variables

cat << eof>>/etc/profile

export ZOOKEEPER_INSTALL=/root/zookeeper/

export PATH=\$PATH:\$ZOOKEEPER_INSTALL/bin

eof

# zkServer.sh can now be run from any directory (after logging in again or running source /etc/profile)

4: Deploy Kafka

Run on the ES nodes (all three)

# Download and unpack the Kafka archive

wget https://downloads.apache.org/kafka/2.8.1/kafka_2.13-2.8.1.tgz

tar xf kafka_2.13-2.8.1.tgz

mv kafka_2.13-2.8.1 kafka

# Create the data directory

mkdir -p /usr/local/kafka/data

# Configure Kafka

cd kafka/config/

cat server.properties

# broker.id must be unique for each broker

broker.id=1

listeners=PLAINTEXT://:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/usr/local/kafka/data

num.partitions=3

num.recovery.threads.per.data.dir=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.0.0.10:2181,10.0.0.11:2181,10.0.0.12:2181

zookeeper.connection.timeout.ms=6000

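# The same file with each setting explained

############################# Server Basics #############################

# Broker ID: an integer that must be unique within the cluster

broker.id=1

############################# Socket Server Settings #############################

# Kafka listens on port 9092 by default (clients are told to connect via the hostname by default)

listeners=PLAINTEXT://:9092

# Number of threads handling network requests (default 3)

num.network.threads=3

# Number of threads performing disk I/O (default 8)

num.io.threads=8

# Send buffer size of the socket server (default 100 KB)

socket.send.buffer.bytes=102400

# Receive buffer size of the socket server (default 100 KB)

socket.receive.buffer.bytes=102400

# Maximum size of a single request the socket server accepts (default 100 MB)

socket.request.max.bytes=104857600

############################# Log Basics #############################

# Directory where Kafka stores message data

log.dirs=/usr/local/kafka/data

# Default number of partitions per topic

num.partitions=3

# Threads per data directory used for recovery at startup and flushing at shutdown

num.recovery.threads.per.data.dir=1

############################# Log Flush Policy #############################

# Number of messages to accumulate before forcing a flush to disk

#log.flush.interval.messages=10000

# Maximum time a message may wait before being flushed to disk (1 s)

#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# Hours to keep log data before it is deleted automatically (168 hours = 7 days)

log.retention.hours=168

# Size-based retention: data beyond this size is deleted automatically (1 GB if uncommented; no size limit while commented out)

#log.retention.bytes=1073741824

# Maximum size of a single log segment file (1 GB); a new segment is created once it is exceeded

log.segment.bytes=1073741824

# How often to check whether data is eligible for deletion (300 s)

log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string; for a Zookeeper cluster, separate the hosts with commas

zookeeper.connect=10.0.0.10:2181,10.0.0.11:2181,10.0.0.12:2181

# Timeout for connecting to Zookeeper (6 s)

zookeeper.connection.timeout.ms=6000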

# Start the Kafka cluster

cd kafka/bin/

./kafka-server-start.sh ../config/server.properties # run in the foreground

./kafka-server-start.sh -daemon ../config/server.properties # run in the background

# Test from any node

Create a topic on any node in the cluster, then publish and consume a message to verify the result

./kafka-topics.sh --create --zookeeper 10.0.0.10:2181,10.0.0.11:2181,10.0.0.12:2181 --partitions 3 --replication-factor 1 --topic logs
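The step above only creates the topic; publishing and consuming could look roughly like the sketch below. `join_hosts` and `kafka_smoke_test` are names invented for this guide, and the sketch assumes it is run from kafka/bin/ on a broker node with all three brokers listening on 9092.

```shell
#!/bin/sh
# join_hosts PORT HOST... -> "HOST:PORT,HOST:PORT,..."
# (join_hosts and kafka_smoke_test are hypothetical helpers.)
join_hosts() {
  port=$1
  shift
  out=""
  for h in "$@"; do
    out="${out:+$out,}$h:$port"
  done
  printf '%s\n' "$out"
}

# kafka_smoke_test: publish one message to the "logs" topic and read one back.
# Assumes the current directory is kafka/bin/ and the cluster is running.
kafka_smoke_test() {
  brokers=$(join_hosts 9092 10.0.0.10 10.0.0.11 10.0.0.12)
  echo "hello from $(hostname)" |
    ./kafka-console-producer.sh --bootstrap-server "$brokers" --topic logs
  ./kafka-console-consumer.sh --bootstrap-server "$brokers" --topic logs \
    --from-beginning --max-messages 1
}
```

The same helper also builds the Zookeeper connection string: `join_hosts 2181 10.0.0.10 10.0.0.11 10.0.0.12` yields the value used for zookeeper.connect.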

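5: Deploy Elasticsearch

Run on the ES nodes (all three)

# Download Elasticsearch

wget https://pan.cnsre.cn/d/Package/Linux/ELK/elasticsearch-7.10.1-x86_64.rpm # also available from the official site; I used someone else's mirror here

# Install ES

rpm -ivh elasticsearch-7.10.1-x86_64.rpm

# Back up the configuration file

cd /etc/elasticsearch/

cp elasticsearch.yml elasticsearch.yml.bak

# Create the directories

mkdir -p /usr/local/es/{data,snapshot,logs}

# Edit the configuration file (/etc/elasticsearch/elasticsearch.yml)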

# Cluster name

cluster.name: elk-cluster

# Node name; must be unique per node

node.name: elk-1

# Data path

path.data: /usr/local/es/data

# Snapshot repository path

path.repo: /usr/local/es/snapshot

# Log path

path.logs: /usr/local/es/logs

# IP address ES binds to; adjust for your own machine

network.host: 0.0.0.0

# HTTP port

http.port: 9200

# Discovery seed hosts and initial master-eligible nodes; entries given by name must match node.name

discovery.seed_hosts: ["10.0.0.10", "10.0.0.11", "10.0.0.12"]

cluster.initial_master_nodes: ["10.0.0.10", "10.0.0.11", "10.0.0.12"]

# Allow cross-origin requests

http.cors.enabled: true

# "*" allows every origin

http.cors.allow-origin: "*"

# Request headers allowed for cross-origin requests

http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

# Lock the process memory in RAM so it cannot be swapped out; must be true in production

bootstrap.memory_lock: true

# System call filter check; disabled here

bootstrap.system_call_filter: false

# xpack settings

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /etc/elasticsearch/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /etc/elasticsearch/elastic-certificates.p12

# Condensed version

cluster.name: elk-cluster

node.name: elk-1 # must be unique per node

path.data: /usr/local/es/data

path.repo: /usr/local/es/snapshot

path.logs: /usr/local/es/logs

network.host: 0.0.0.0

http.port: 9200

discovery.seed_hosts: ["10.0.0.10", "10.0.0.11", "10.0.0.12"]

cluster.initial_master_nodes: ["10.0.0.10", "10.0.0.11", "10.0.0.12"]

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-headers: Authorization,X-Requested-With,Content-Length,Content-Type

bootstrap.memory_lock: true

bootstrap.system_call_filter: false

xpack.security.enabled: false # security is left disabled from here down

xpack.security.transport.ssl.enabled: false

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /etc/elasticsearch/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /etc/elasticsearch/elastic-certificates.p12

# Adjust the JVM heap

[root@elk_master_1 elasticsearch]# cat jvm.options | grep 1g

-Xms1g

-Xmx1g

Change the 1g values on lines 22-23 of jvm.options to half of the server's memory
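The "half of the memory" rule can be worked out per node with a few lines of shell. This is a sketch under assumptions: `es_heap_mb` is a made-up helper, and the 31 GB cap is not from this guide but reflects the common advice to keep the heap below roughly 32 GB.

```shell
#!/bin/sh
# es_heap_mb TOTAL_MB
# Prints half of TOTAL_MB, capped at 31744 MB (31 GB).
# The cap is an assumption, see the note above; es_heap_mb is a
# hypothetical helper written for this guide.
es_heap_mb() {
  half=$(( $1 / 2 ))
  if [ "$half" -gt 31744 ]; then
    half=31744
  fi
  echo "$half"
}

# Example usage on a node (MemTotal in /proc/meminfo is reported in kB):
# total_mb=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
# heap=$(es_heap_mb "$total_mb")
# echo "-Xms${heap}m"
# echo "-Xmx${heap}m"
```

The two printed lines are what would replace -Xms1g and -Xmx1g; both values should stay equal.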

# Set ownership

chown -R elasticsearch:elasticsearch /usr/local/es/

# Start the service

# Start all three nodes and enable them at boot

systemctl start elasticsearch.service

systemctl enable elasticsearch.service
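Once all three nodes are started, the cluster health API shows whether they actually joined. A minimal sketch; `health_status` is a made-up helper that pulls the `status` field out with sed so that no JSON tool needs to be installed.

```shell
#!/bin/sh
# health_status: read a _cluster/health JSON document on stdin and print
# the value of its "status" field (green, yellow or red).
# (health_status is a hypothetical helper written for this guide.)
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Example usage once the nodes are up (uncomment to run):
# curl -s 'http://10.0.0.10:9200/_cluster/health?pretty' | health_status
# With xpack security enabled, add: -u elastic:<password>
```

The full JSON response also reports number_of_nodes, which should be 3 for this setup.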

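# Use xpack for security authentication

# xpack security features

1: TLS. Encrypts communication.

2: File and native realms. Used to create and manage users.

3: Role-based access control. Controls user access to cluster APIs and indices.

4: Security for Kibana Spaces, which also allows multi-tenancy in Kibana.

# I have not enabled authentication here, but the steps are as follows

# Run on any one machine

/usr/share/elasticsearch/bin/elasticsearch-certutil ca

/usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12

# Press Enter through both commands; the keys do not need an extra password

# Once created, the certificates are in the ES home directory (/usr/share/elasticsearch) by default.

# Copy the certificates to /etc/elasticsearch and set their ownership.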

ll /usr/share/elasticsearch/elastic-*

-rw------- 1 root root 3451 May 29 14:38 /usr/share/elasticsearch/elastic-certificates.p12

-rw------- 1 root root 2527 May 29 14:38 /usr/share/elasticsearch/elastic-stack-ca.p12

cp /usr/share/elasticsearch/elastic-* /etc/elasticsearch/

chown elasticsearch:elasticsearch /etc/elasticsearch/elastic-*

# Then copy the certificates to the other nodes

scp /etc/elasticsearch/elastic-certificates.p12 root@elk_node_1:/etc/elasticsearch/

scp /etc/elasticsearch/elastic-stack-ca.p12 root@elk_node_1:/etc/elasticsearch/

scp /etc/elasticsearch/elastic-certificates.p12 root@elk_node_2:/etc/elasticsearch/

scp /etc/elasticsearch/elastic-stack-ca.p12 root@elk_node_2:/etc/elasticsearch/

# The xpack settings above can now be enabled

---

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /etc/elasticsearch/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /etc/elasticsearch/elastic-certificates.p12

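# If the restart fails, check the owner of the copied certificates

# Set passwords for the built-in accounts

ES ships with several built-in accounts for managing the other integrated components: apm_system, beats_system, elastic, kibana, logstash_system and remote_monitoring_user. Their passwords must be set before first use.

/usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

# Everything is set to devopsdu here; never do this in production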

[root@elk_master_1 elasticsearch]# /usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]:

Reenter password for [elastic]:

Enter password for [apm_system]:

Reenter password for [apm_system]:

Enter password for [kibana_system]:

Reenter password for [kibana_system]:

Enter password for [logstash_system]:

Reenter password for [logstash_system]:

Enter password for [beats_system]:

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]:

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

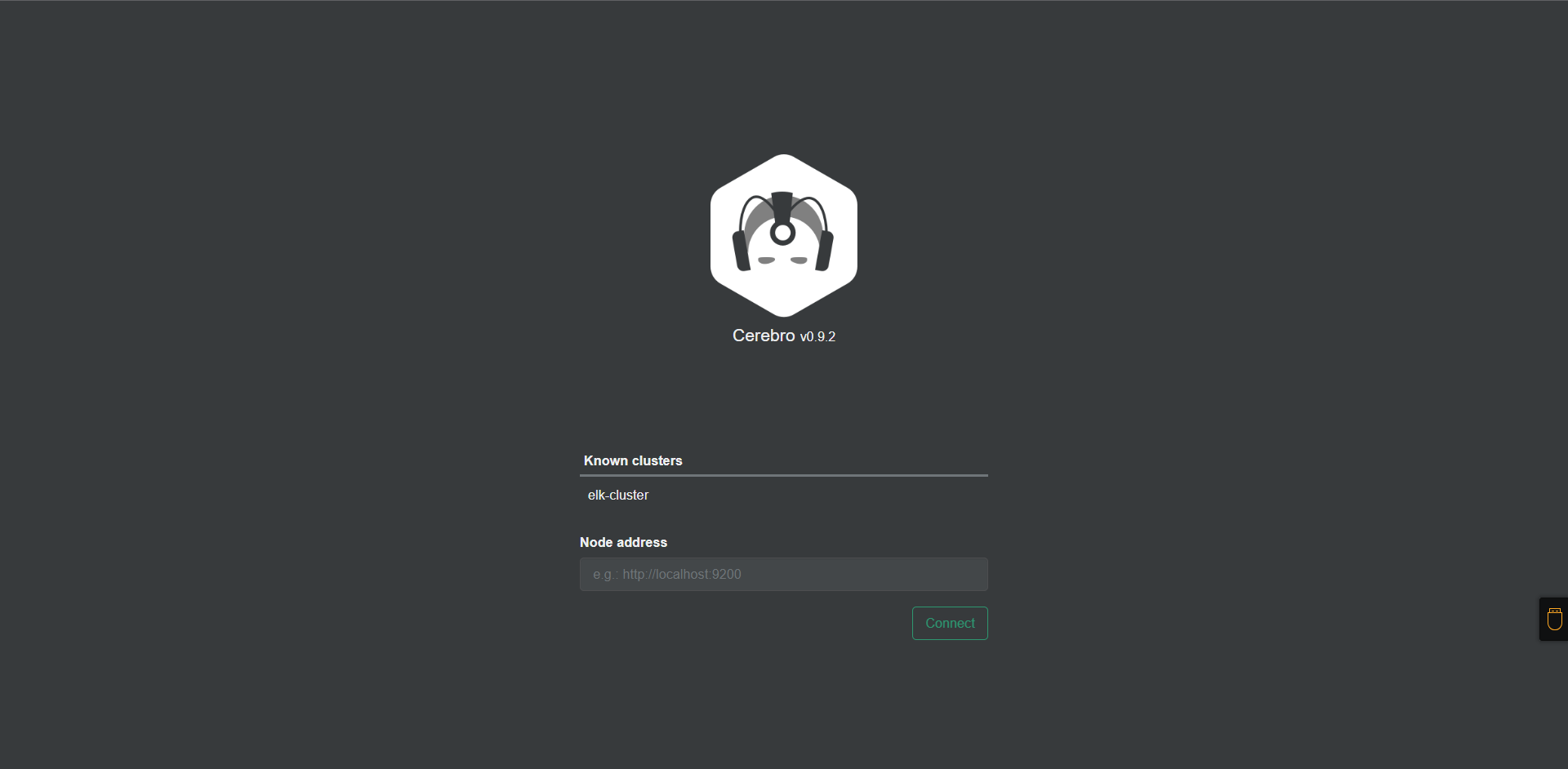

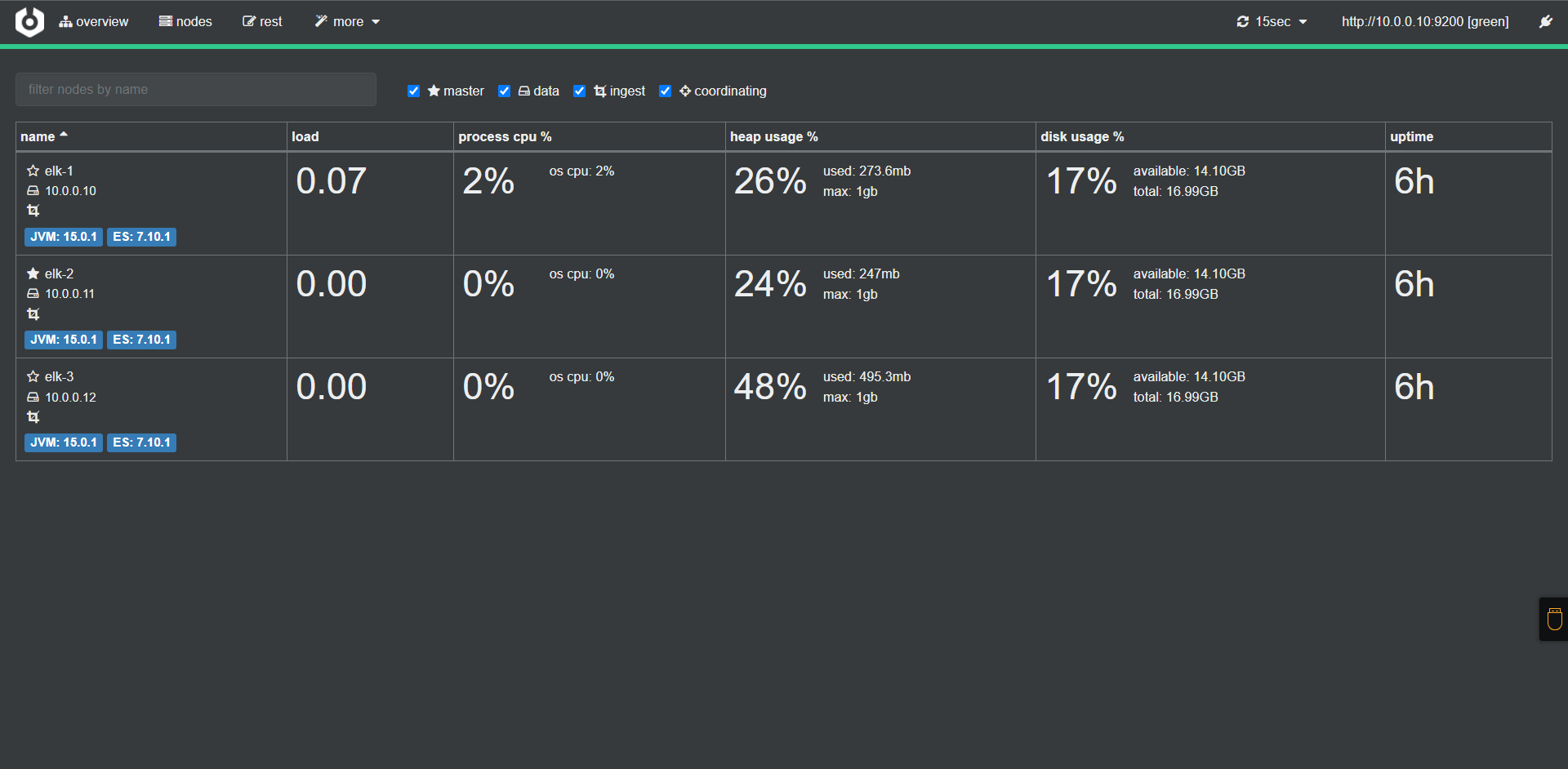

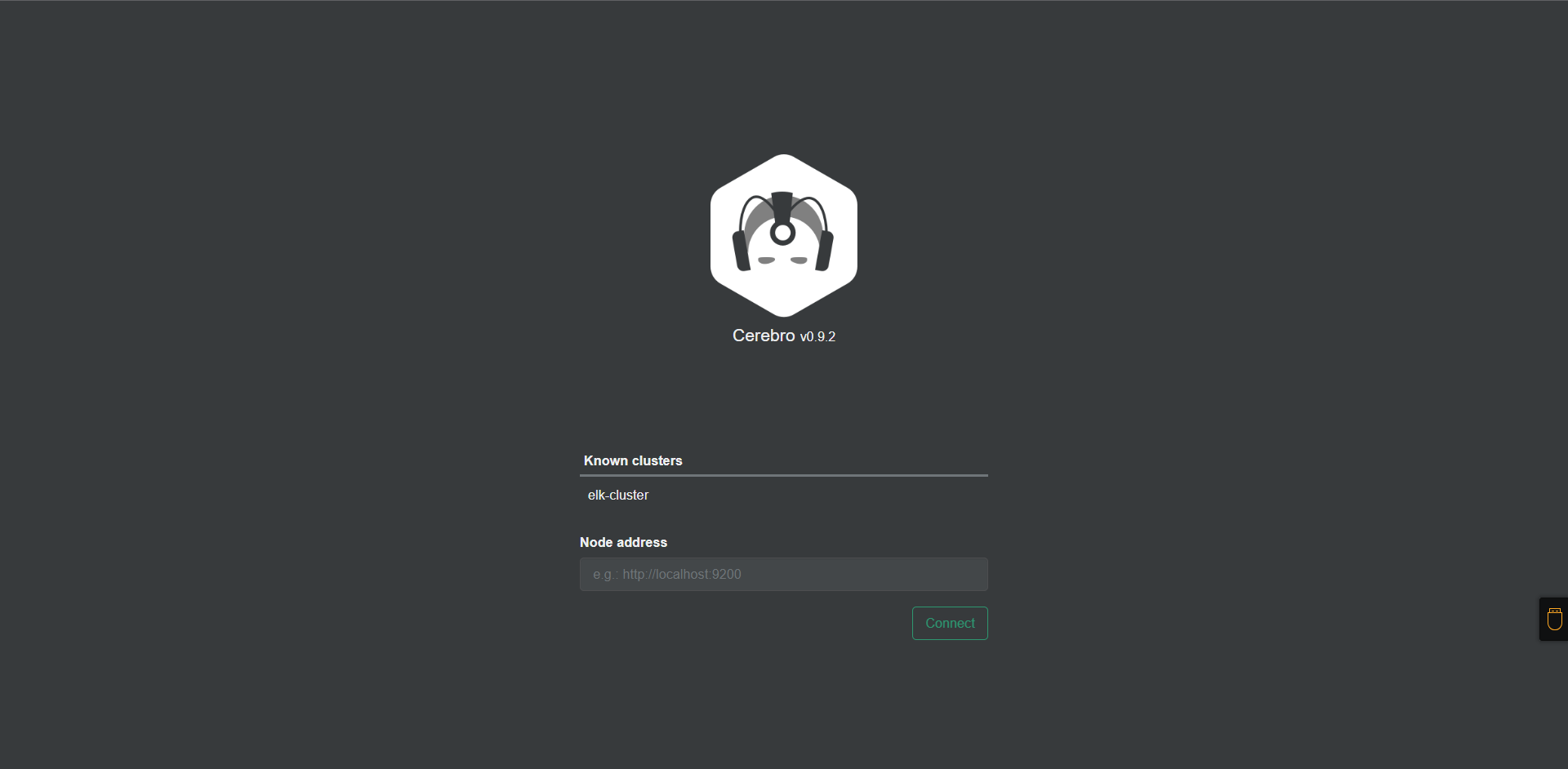

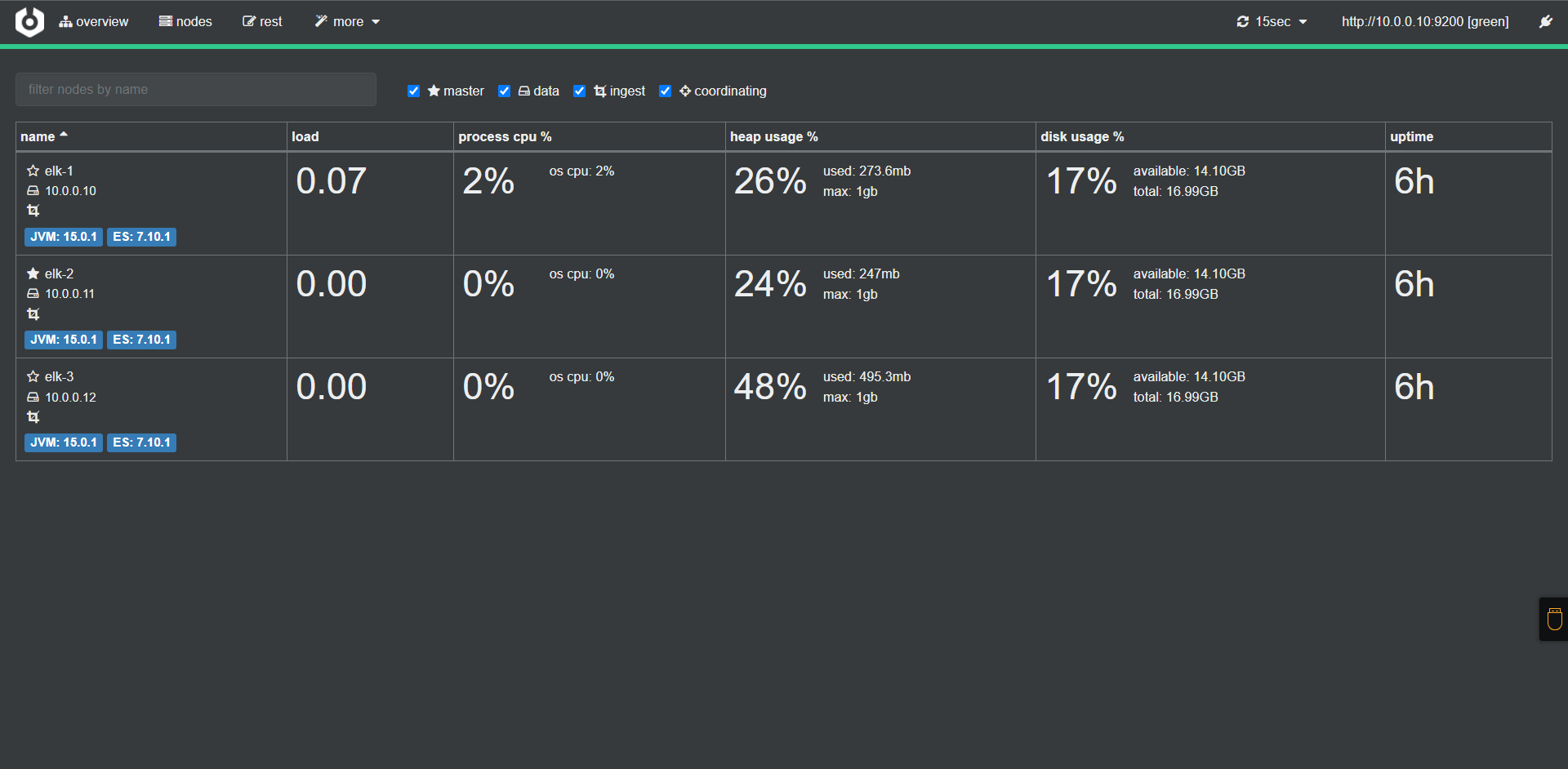

6: Deploy Cerebro

# Download and install (on the Kibana host)

wget https://pan.cnsre.cn/d/Package/Linux/ELK/cerebro-0.9.2-1.noarch.rpm

rpm -ivh cerebro-0.9.2-1.noarch.rpm

# Edit the configuration file

# Edit /etc/cerebro/application.conf

# Find the matching settings and change them as follows

data.path: "/var/lib/cerebro/cerebro.db"

# data.path = "./cerebro.db"

hosts = [

{

host = "http://10.0.0.10:9200"

name = "elk-cluster"

auth = {

username = "elastic"

password = "devopsdu"

}

}

]

# Install Java

yum install -y java-1.8.0-openjdk java-1.8.0-openjdk-devel

# Start

systemctl start cerebro && systemctl enable cerebro

netstat -nplt | grep 9000

# Test access

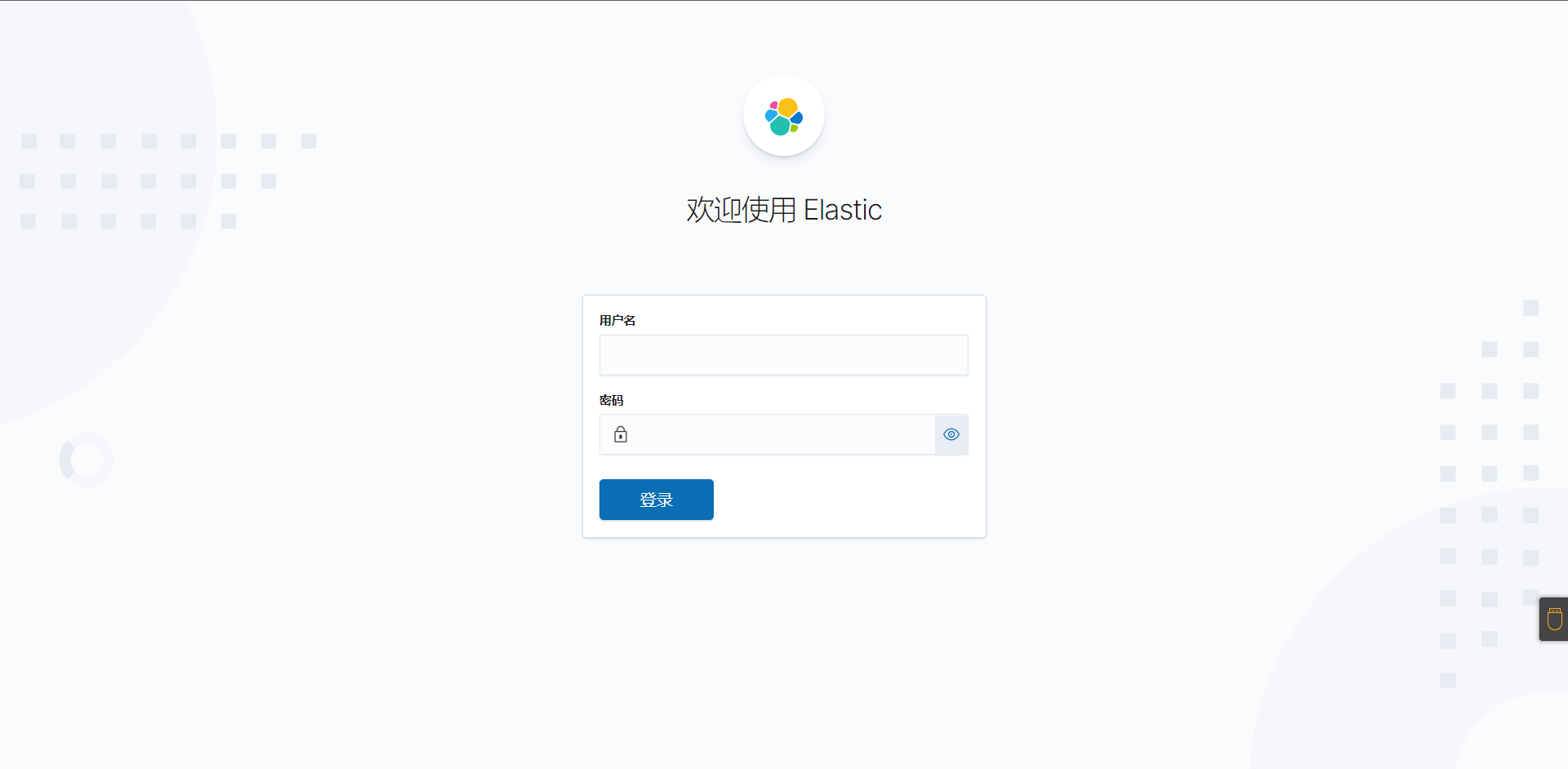

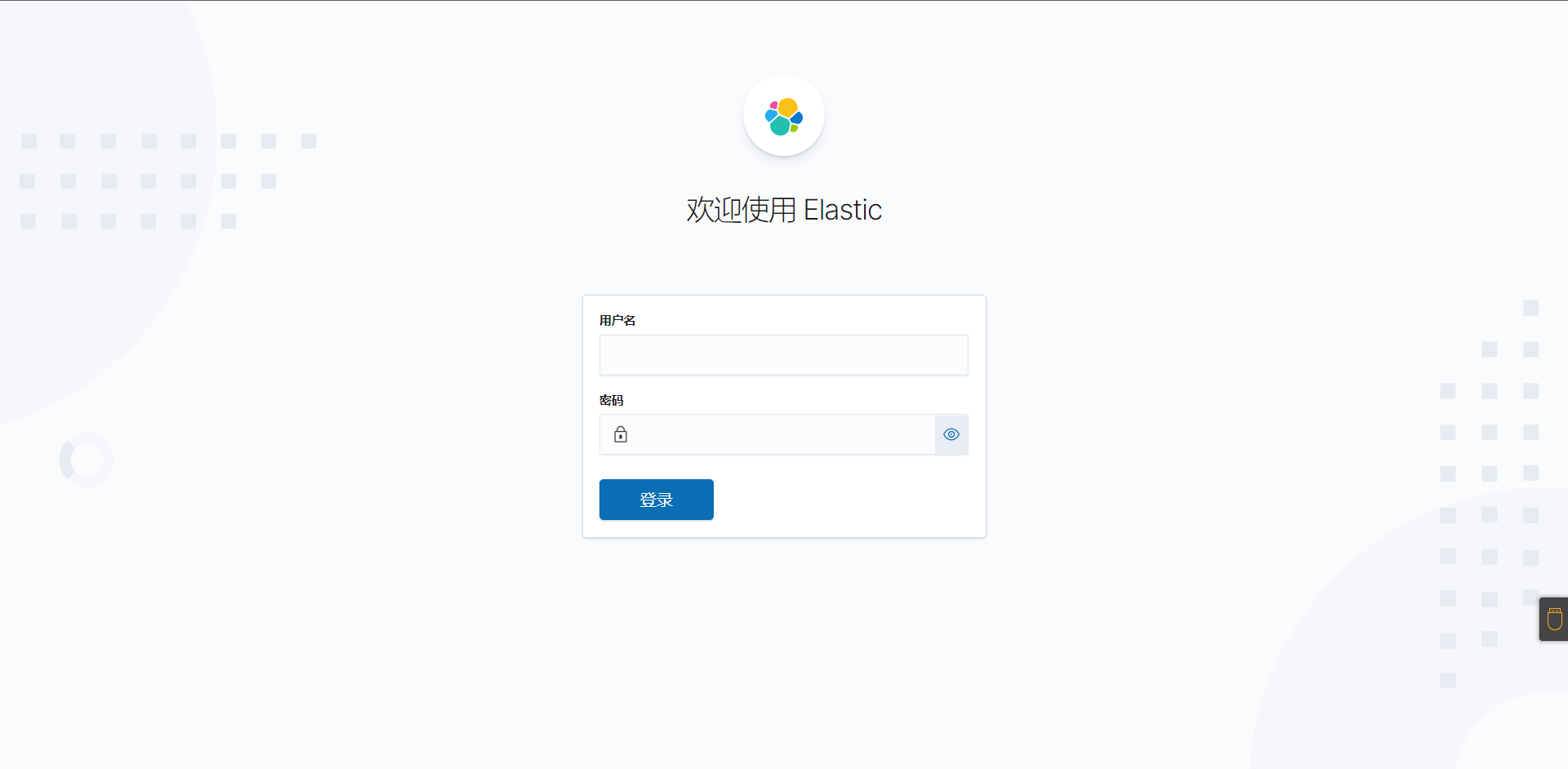

9: Deploy Kibana

Install on the Kibana node

# Download and install

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.10.1-x86_64.rpm

rpm -ivh kibana-7.10.1-x86_64.rpm

# Back up and edit the configuration file

cd /etc/kibana/

cp kibana.yml kibana.yml.bak

[root@kibana kibana]# cat kibana.yml

server.port: 5601

server.host: 0.0.0.0

elasticsearch.hosts: ["http://10.0.0.10:9200/","http://10.0.0.11:9200/","http://10.0.0.12:9200/"] # ES hosts

elasticsearch.username: "elastic" # the account configured during the ES setup

elasticsearch.password: "devopsdu" # the password configured for it

i18n.locale: "zh-CN"

# Start the service

systemctl start kibana.service

systemctl enable kibana.service

# Check the service

netstat -nplt | grep 5601

# Test access

Log in with the elastic account set up earlier

Account: elastic

Password: devopsdu

10: Deploy filebeat

Deploy on the Kibana node

# Download filebeat

wget https://pan.cnsre.cn/d/Package/Linux/ELK/filebeat-7.10.1-x86_64.rpm

rpm -ivh filebeat-7.10.1-x86_64.rpm

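# Back up and edit the configuration

cd /etc/filebeat/

cp filebeat.yml filebeat.yml.bak

# Edit the configuration

max_procs: 1 # limit the number of CPU cores Filebeat may use, so it does not take too many resources from the business workload

queue.mem.events: 256 # number of events the in-memory queue holds while waiting to be sent (default 4096)

queue.mem.flush.min_events: 128 # must be smaller than queue.mem.events; raising it improves throughput (default 2048)

filebeat.inputs: # plural: more than one input can be defined

- type: log # input type

  enabled: true # enable this input

  paths:

    - /var/log/nginx/access.log # collect the nginx access log

  json.keys_under_root: true # default false; when true, the decoded JSON keys are placed at the top level of the event

  json.overwrite_keys: true # let the keys from the custom JSON format overwrite the default keys

  max_bytes: 20480 # size limit for a single log line; setting one is recommended (default 10 MB; queue.mem.events * max_bytes is part of the memory footprint)

  fields: # extra fields

    source: nginx-access # custom source field, used to build the ES index name (keep it lowercase; uppercase does not seem to work)

- type: log # input type

  enabled: true # enable this input

  paths:

    - /var/log/nginx/error.log # collect the nginx error log

  json.keys_under_root: true # default false; when true, the decoded JSON keys are placed at the top level of the event

  json.overwrite_keys: true # let the keys from the custom JSON format overwrite the default keys

  max_bytes: 20480 # size limit for a single log line; setting one is recommended (default 10 MB; queue.mem.events * max_bytes is part of the memory footprint)

  fields: # extra fields

    source: nginx-error # custom source field, used to build the ES index name (keep it lowercase; uppercase does not seem to work)

setup.ilm.enabled: false # ILM must be disabled to use custom ES index names

output.kafka: # send to Kafka

  enabled: true # whether this output is enabled

  hosts: ["10.0.0.10:9092","10.0.0.11:9092","10.0.0.12:9092"] # Kafka broker list

  topic: 'logstash-%{[fields.source]}' # Kafka creates this topic; Logstash (which can filter and modify events) then passes the data on to ES, where it is used for the index name

  partition.hash:

    reachable_only: true # only send to reachable partitions

  compression: gzip # compression

  max_message_bytes: 1000000 # maximum event size in bytes (default 1000000); should be less than or equal to the broker's message.max.bytes

  required_acks: 1 # Kafka ack level

  worker: 1 # maximum concurrency of the Kafka output

  bulk_max_size: 2048 # maximum number of events sent to Kafka in one request

logging.to_files: true # write all logs to files (default true); files are rotated automatically when they reach the size limit

close_older: 30m # close the handle of a monitored file that has not been updated within this period (default 1h)

force_close_files: false # close a file when its name changes; recommended true only on Windows

close_inactive: 1m # how long after the last new log line the file handle is closed (default 5 minutes); 1 minute releases handles sooner

close_timeout: 3h # force the file handle closed if shipping has still not finished after 3h; the key setting for handles that would otherwise never be released

clean_inactive: 72h # recommended as well; the default 0 means never clean up, so the state of harvested files stays in the registry forever, and after a while the registry grows large and may cause problems

ignore_older: 70h # once clean_inactive is set, ignore_older must be set too, with ignore_older < clean_inactive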

# Start the service

systemctl start filebeat.service && systemctl enable filebeat.service
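Because both inputs set json.keys_under_root, Filebeat expects every log line to be a JSON object. To try the pipeline before nginx is producing such lines, a test line could be generated as sketched below. `sample_access_line` is a made-up helper; the field names `ip` and `Request time` are taken from the Logstash filter in section 11, and the address is just an example value.

```shell
#!/bin/sh
# sample_access_line IP REQUEST_TIME
# Prints one JSON log line carrying the two fields the Logstash filter reads.
# (sample_access_line is a hypothetical helper; a real nginx log_format
# would normally carry more fields than these two.)
sample_access_line() {
  printf '{"ip":"%s","Request time":"%s"}\n' "$1" "$2"
}

# Example usage on the Filebeat host (uncomment to run):
# filebeat test config -c /etc/filebeat/filebeat.yml
# sample_access_line 203.0.113.7 0.123 >> /var/log/nginx/access.log
```

With the config above, such a line should end up in the Kafka topic logstash-nginx-access.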

11: Deploy logstash

Deploy on the Kibana node

# Download and install

wget https://pan.cnsre.cn/d/Package/Linux/ELK/logstash-7.10.1-x86_64.rpm

rpm -ivh logstash-7.10.1-x86_64.rpm

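# Back up and edit the configuration

cd /etc/logstash/

cp logstash.yml logstash.yml.bak

# Edit the configuration file

[root@kibana logstash]# cat logstash.yml

http.host: "0.0.0.0"

# Maximum number of events a worker collects before running filters and outputs; larger values are usually more efficient but use more memory

pipeline.batch.size: 3000

# How long (in ms) to wait for more events before dispatching an undersized batch to the filters and outputs

pipeline.batch.delay: 200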

# Add a pipeline config file that reads logs from Kafka (/etc/logstash/conf.d/get-kafka-logs.conf)

# Annotated version; replace the addresses and password with your own

input { # input stage

  kafka { # consume data from Kafka

    bootstrap_servers => ["10.0.11.172:9092,10.0.21.117:9092,10.0.11.208:9092"]

    codec => "json" # data format

    #topics => ["3in1-topi"] # read an explicit list of Kafka topics

    topics_pattern => "logstash-.*" # match topics by regular expression

    consumer_threads => 3 # number of consumer threads

    decorate_events => true # add Kafka metadata such as topic and message size to the event, under [@metadata][kafka]

    auto_offset_reset => "latest" # when there is no committed offset, start from the latest offset

    #group_id => "logstash-node" # consumer group ID; Logstash instances sharing a group_id form one consumer group

    #client_id => "logstash1" # client ID

    fetch_max_wait_ms => "1000" # longest time the server blocks before answering a fetch request when there is not enough data to satisfy fetch_min_bytes

  }

}

filter {

  # For non-business fields, drop the event when there is no traceId

  #if ([message] =~ "traceId=null") { # filter stage; shown only as an example, adapt it to your own business needs

  #  drop {}

  #}

  mutate {

    convert => ["Request time", "float"]

  }

  if [ip] != "-" {

    geoip {

      source => "ip"

      target => "geoip"

      # database => "/usr/share/GeoIP/GeoIPCity.dat"

      add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

      add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

    }

    mutate {

      convert => [ "[geoip][coordinates]", "float"]

    }

  }

}

output { # output stage

  elasticsearch { # send from Logstash to ES

    hosts => ["10.0.11.172:9200","10.0.21.117:9200","10.0.11.208:9200"]

    index => "logstash-%{[fields][source]}-%{+YYYY-MM-dd}" # built from a field in the event

    #index => "%{[@metadata][kafka][topic]}-%{+YYYY-MM-dd}" # alternative: build the index from the Kafka topic and the date

    user => "elastic"

    password => "123"

  }

  #stdout {

  #  codec => rubydebug

  #}

}

# The same pipeline without the comments, using this environment's addresses

input {

  kafka {

    bootstrap_servers => ["10.0.0.10:9092,10.0.0.11:9092,10.0.0.12:9092"]

    codec => "json"

    topics_pattern => "logstash-.*"

    consumer_threads => 3

    decorate_events => true

    auto_offset_reset => "latest"

    fetch_max_wait_ms => "1000"

  }

}

filter {

  mutate {

    convert => ["Request time", "float"]

  }

  if [ip] != "-" {

    geoip {

      source => "ip"

      target => "geoip"

      add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

      add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

    }

    mutate {

      convert => [ "[geoip][coordinates]", "float"]

    }

  }

}

output {

  elasticsearch {

    hosts => ["10.0.0.10:9200","10.0.0.11:9200","10.0.0.12:9200"]

    index => "logstash-%{[fields][source]}-%{+YYYY-MM-dd}"

    user => "elastic"

    password => "devopsdu"

  }

}

# Test receiving logs

/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/get-kafka-logs.conf

# Log in to Kibana and create the index pattern

# Choose Management > Index Patterns > Create index pattern
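When choosing the index pattern it helps to know what the index names will look like. The Logstash output builds them from the `source` field set in Filebeat plus the event date (taken from @timestamp, which is in UTC). A tiny sketch of that naming; `es_index_name` is a made-up helper that only mirrors the pattern used in the output block.

```shell
#!/bin/sh
# es_index_name SOURCE DATE -> logstash-SOURCE-DATE
# Mirrors index => "logstash-%{[fields][source]}-%{+YYYY-MM-dd}".
# (es_index_name is a hypothetical helper written for this guide.)
es_index_name() {
  printf 'logstash-%s-%s\n' "$1" "$2"
}

# Example usage (uncomment to run):
# es_index_name nginx-access "$(date -u +%Y-%m-%d)"
# curl -s -u elastic:<password> 'http://10.0.0.10:9200/_cat/indices/logstash-*?v'
```

So a Kibana index pattern of logstash-nginx-access-* would cover the access logs, and logstash-* would cover both inputs.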
