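1: Introduction

In production environments, clusters eventually run short of disk space or lose nodes to hardware failure. Because OSDs are where Ceph actually stores data, knowing how to add and remove them is essential.

As data volume grows, the OSDs will eventually need more capacity. There are two ways to expand: scaling out and scaling up.

1: Scale out (horizontal expansion): add more nodes to the cluster.

2: Scale up (vertical expansion): add more disks to existing nodes to increase storage capacity.

2: Hands-on

2.1: Scaling out

Scaling out simply means adding a new machine to the Ceph cluster, so this walkthrough moves quickly.

The new node is named ceph-4.

# NTP server (synchronizes with Aliyun's NTP time servers)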

[root@virtual_host ~]# yum install -y ntp

[root@virtual_host ~]# systemctl start ntpd

[root@virtual_host ~]# systemctl enable ntpd

Created symlink from /etc/systemd/system/multi-user.target.wants/ntpd.service to /usr/lib/systemd/system/ntpd.service.

[root@virtual_host ~]# timedatectl set-timezone Asia/Shanghai

[root@virtual_host ~]# timedatectl set-local-rtc 0

[root@virtual_host ~]# systemctl restart rsyslog

[root@virtual_host ~]# systemctl restart crond

On the NTP clients (agents), change the server address to point at the NTP server:

[root@virtual_host ~]# cat /etc/ntp.conf | grep server | grep -v "^#"

server 10.0.0.10 iburst

[root@virtual_host ~]# ntpq -pn

remote refid st t when poll reach delay offset jitter

==============================================================================

* 10.0.0.10 .INIT. 16 u - 64 0 0.000 0.000 0.000

# The same steps apply on every Ceph node

Add a scheduled sync job on the NTP client nodes:

[root@virtual_host ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate 10.0.0.10

# Update /etc/hosts on all nodes to include the new machine

10.0.0.10 ceph-1

10.0.0.11 ceph-2

10.0.0.12 ceph-3

10.0.0.13 ceph-4
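
Since every node needs the same hosts entries, it can help to add them idempotently so that re-running the step does not create duplicates. The helper below is a minimal sketch; the function name `add_host_entry` is hypothetical and not part of any Ceph tooling.

```shell
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME is already mapped.
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # Skip the append if a non-comment line already lists this hostname.
    if [ -f "$file" ] && awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (run as root on each node):
#   add_host_entry 10.0.0.13 ceph-4
```

Running it twice with the same arguments leaves a single entry in the file.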

# Set the hostname

[root@virtual_host ~]# hostnamectl set-hostname ceph-4

# Disable the firewall and SELinux

[root@ceph-4 ~]# systemctl stop firewalld

[root@ceph-4 ~]# systemctl disable firewalld

[root@ceph-4 ~]# iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

[root@ceph-4 ~]# iptables -P FORWARD ACCEPT

[root@ceph-4 ~]# setenforce 0

[root@ceph-4 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Configure the package repositories

[root@ceph-4 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@ceph-4 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@ceph-4 ~]# wget -O /etc/yum.repos.d/ceph.repo http://down.i4t.com/ceph/ceph.repo

[root@ceph-4 ~]# yum clean all && yum makecache

# Install Ceph

[root@ceph-4 ~]# yum install -y ceph vim wget

# Set up passwordless SSH from ceph-1 to ceph-4

[root@ceph-1 ceph-deploy]# ssh-copy-id root@ceph-4

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'ceph-4 (10.0.0.13)' can't be established.

ECDSA key fingerprint is SHA256:2ysKwIOq1nrOh1CXWJyKU/DupX/wPD1PQeJeNHYQaC8.

ECDSA key fingerprint is MD5:7c:ae:c3:90:f6:6b:06:b1:46:1c:9d:81:7d:cc:2b:9d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@ceph-4's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@ceph-4'"

and check to make sure that only the key(s) you wanted were added.

# On the mon node (ceph-1), push the configuration file and create the OSD on the new node

[root@ceph-1 ceph-deploy]# cd /root/ceph-deploy/

[root@ceph-1 ceph-deploy]# ceph-deploy --overwrite-conf config push ceph-4

[root@ceph-1 ceph-deploy]# ceph-deploy osd create --data /dev/sdb ceph-4

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.06857 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.00980 host ceph-4

6 hdd 0.00980 osd.6 up 1.00000 1.00000

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_WARN

Degraded data redundancy: 24/1380 objects degraded (1.739%), 3 pgs degraded

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 16h)

mgr: ceph-1(active, since 16h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 7 osds: 7 up (since 57s), 7 in (since 57s) # the first three nodes have two disks each, so adding ceph-4's disk makes 7

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 460 objects, 867 MiB

usage: 9.9 GiB used, 60 GiB / 70 GiB avail

pgs: 24/1380 objects degraded (1.739%)

9/1380 objects misplaced (0.652%)

412 active+clean

3 active+recovery_wait+undersized+degraded+remapped

1 active+recovering+undersized+remapped

io:

recovery: 4.0 MiB/s, 2 objects/s

progress:

Rebalancing after osd.6 marked in

[============================..]

# Scaling out is now complete
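
Right after a new OSD is added the cluster reports HEALTH_WARN while data is recovered and rebalanced. Rather than re-running `ceph -s` by hand, a script can poll `ceph health` until it returns HEALTH_OK. This is a minimal sketch; the function name, timeout, and polling interval are assumptions chosen for illustration.

```shell
# wait_for_health_ok [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
# Polls `ceph health` until it reports HEALTH_OK or the timeout expires.
wait_for_health_ok() {
    local timeout="${1:-600}" interval="${2:-10}" waited=0 status
    while [ "$waited" -le "$timeout" ]; do
        status=$(ceph health 2>/dev/null)
        case "$status" in
            HEALTH_OK*)
                echo "cluster healthy after ${waited}s"
                return 0
                ;;
        esac
        echo "waiting: ${status:-no response}"
        sleep "$interval"
        waited=$((waited + interval))
    done
    echo "timed out after ${timeout}s" >&2
    return 1
}

# Example: wait up to 30 minutes, checking every 15 seconds.
#   wait_for_health_ok 1800 15
```

Note that HEALTH_WARN can have causes unrelated to rebalancing, so a timeout here only means the cluster needs a closer look.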

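2.2: Scaling up

Scaling up just means adding a new disk. Here I add a single 10 GB disk to the ceph-4 server by hot-adding it in the hypervisor, which should bring the OSD count to 8.

Rescan the SCSI buses so the kernel detects the disk: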

[root@ceph-4 ~]# echo "- - -" >/sys/class/scsi_host/host0/scan

[root@ceph-4 ~]# echo "- - -" >/sys/class/scsi_host/host1/scan

[root@ceph-4 ~]# echo "- - -" >/sys/class/scsi_host/host2/scan

[root@ceph-4 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm

sdb 8:16 0 10G 0 disk

└─ceph--d1007d7d--b140--4aa5--bf5d--9d7f1a111b3c-osd--block--568f45a6--cf64--4af0--89e1--e375228dfbda 253:2 0 10G 0 lvm

sdc 8:32 0 10G 0 disk

sr0 11:0 1 1024M 0 rom

sdc now shows up.
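
The number of SCSI hosts differs from machine to machine, so the rescan can be written as a loop over every `hostN` entry instead of naming host0 through host2 explicitly. A minimal sketch follows; the function name and the optional base-directory argument are hypothetical, added only so the loop can be tried against a scratch directory.

```shell
# rescan_scsi_hosts [BASE_DIR]
# Writes "- - -" (all channels, targets, LUNs) to every host*/scan file.
rescan_scsi_hosts() {
    local base="${1:-/sys/class/scsi_host}" scan
    for scan in "$base"/host*/scan; do
        [ -e "$scan" ] || continue   # the glob matched nothing
        echo "- - -" > "$scan"
    done
}

# Example (as root), followed by lsblk to confirm the new disk:
#   rescan_scsi_hosts && lsblk
```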

If the new disk contains existing data or partitions, it can be wiped with the commands below.

[root@ceph-4 ~]# fdisk -l /dev/sdc

Disk /dev/sdc: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@ceph-1 ceph-deploy]# ceph-deploy disk zap ceph-4 /dev/sdc

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy disk zap ceph-4 /dev/sdc

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f083f211440>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-4

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f083f45f938>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/sdc']

[ceph_deploy.osd][DEBUG ] zapping /dev/sdc on ceph-4

[ceph-4][DEBUG ] connected to host: ceph-4

[ceph-4][DEBUG ] detect platform information from remote host

[ceph-4][DEBUG ] detect machine type

[ceph-4][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.9.2009 Core

[ceph-4][DEBUG ] zeroing last few blocks of device

[ceph-4][DEBUG ] find the location of an executable

[ceph-4][INFO ] Running command: /usr/sbin/ceph-volume lvm zap /dev/sdc

[ceph-4][WARNIN] --> Zapping: /dev/sdc

[ceph-4][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-4][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdc bs=1M count=10 conv=fsync

[ceph-4][WARNIN] stderr: 10+0 records in

[ceph-4][WARNIN] 10+0 records out

[ceph-4][WARNIN] 10485760 bytes (10 MB) copied

[ceph-4][WARNIN] stderr: , 0.0217859 s, 481 MB/s

[ceph-4][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdc>

# In effect, this zeroes the first 10 MB of the disk with dd, which wipes the partition table

# Add the OSD

[root@ceph-1 ceph-deploy]# ceph-deploy osd create ceph-4 --data /dev/sdc

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_WARN

Degraded data redundancy: 153/1380 objects degraded (11.087%), 33 pgs degraded

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 16h)

mgr: ceph-1(active, since 16h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 8 osds: 8 up (since 29s), 8 in (since 29s); 9 remapped pgs # the OSD count has gone up

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 460 objects, 867 MiB

usage: 11 GiB used, 69 GiB / 80 GiB avail # eight 10 GB disks, so 80 GiB in total

pgs: 153/1380 objects degraded (11.087%)

9/1380 objects misplaced (0.652%)

377 active+clean

22 active+recovery_wait+degraded

11 active+recovery_wait+undersized+degraded+remapped

3 active+recovery_wait

2 active+recovering

1 active+recovering+undersized+remapped

io:

recovery: 11 MiB/s, 7 objects/s

progress:

Rebalancing after osd.7 marked in

[=======================.......]

# Check with ceph osd tree

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07837 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.01959 host ceph-4

6 hdd 0.00980 osd.6 up 1.00000 1.00000

7 hdd 0.00980 osd.7 up 1.00000 1.00000
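
To confirm at a glance that each host carries the expected number of OSDs, the plain-text output of `ceph osd tree` can be summarized with awk. This is a rough sketch that assumes the column layout shown above; the function name `osds_per_host` is hypothetical.

```shell
# osds_per_host: reads `ceph osd tree` text on stdin and prints "HOST COUNT"
# for each host bucket, in the order the hosts appear.
osds_per_host() {
    awk '
        $3 == "host" {
            host = $4
            if (!(host in n)) { order[++k] = host; n[host] = 0 }
            next
        }
        host != "" {
            # An OSD row contains a field such as osd.6 somewhere on the line.
            for (i = 1; i <= NF; i++)
                if ($i ~ /^osd\.[0-9]+$/) { n[host]++; break }
        }
        END { for (j = 1; j <= k; j++) print order[j], n[order[j]] }
    '
}

# Example:
#   ceph osd tree | osds_per_host
```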

3: Data redistribution

How data redistribution works

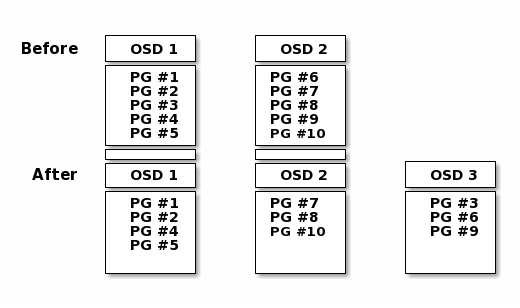

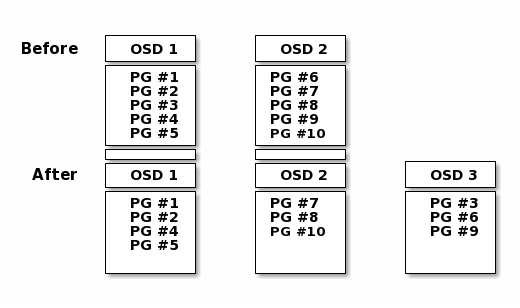

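When an OSD is added to a Ceph storage cluster, the cluster map is updated with the new OSD. Because the cluster map is an input to the placement calculation (see how PG IDs are calculated), changing it changes where objects are placed. The figure illustrates rebalancing (rather crudely, since the effect is much smaller on large clusters): some, but not all, PGs migrate from the existing OSDs (OSD 1 and OSD 2) to the new OSD (OSD 3). Even during rebalancing, many placement groups keep their original configuration, and each OSD gains some free capacity, so the new OSD sees no load spike once rebalancing completes.

PGs store objects. Because tracking and moving objects individually would be too expensive, Ceph rebalances the cluster by migrating whole PGs.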
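
The point that objects map to PGs, and PGs (not individual objects) map to OSDs, can be illustrated with a toy calculation. The sketch below is NOT Ceph's algorithm: it uses `cksum` and a plain modulo as stand-ins for Ceph's own hash and stable-mod step, and the function name is hypothetical. What it shows is only that an object's PG depends on the object name and the pool's PG count, not on how many OSDs exist.

```shell
# pg_for_object NAME PG_NUM
# Toy stand-in for object-to-PG hashing (Ceph's real hash differs).
pg_for_object() {
    local hash
    hash=$(printf '%s' "$1" | cksum | awk '{ print $1 }')
    echo $(( hash % $2 ))
}

# The result is the same before and after OSDs are added, because the OSD
# count is not an input. Only the PG-to-OSD mapping (CRUSH) changes:
#   pg_for_object devopsdu 416
```

On a real cluster, `ceph osd map <pool> <object>` prints the PG and the OSDs an object currently maps to, which is a convenient way to compare placement before and after an expansion.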

# Write a file into the CephFS file system, then run the Ceph health check to see the PG synchronization state

[root@ceph-1 devopsdu]# dd if=/dev/zero of=devopsdu bs=1M count=4096

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 77s)

mgr: ceph-1(active, since 16h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 8 osds: 8 up (since 6m), 8 in (since 6m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 758 objects, 2.0 GiB

usage: 14 GiB used, 66 GiB / 80 GiB avail

pgs: 416 active+clean

io:

client: 52 MiB/s wr, 0 op/s rd, 48 op/s wr

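# Once PG data synchronization finishes, the cluster health returns to HEALTH_OK

# Tip: rebalancing competes with client I/O and can slow normal writes. When upgrading or replacing OSD nodes, work on one node at a time, or temporarily pause rebalancing

Rebalancing traffic and client traffic can be placed on two separate NICs, and a dual-network setup is recommended in production. cluster_network carries the OSD replication, recovery, and rebalancing traffic, while public_network is the network that Ceph clients connect on. Splitting the two reduces the impact of rebalancing.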
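
As a rough illustration of the dual-network setup, the helper below prints the two `[global]` options for a given pair of subnets. The option names `public_network` and `cluster_network` come from the text above; the function name and both example subnets are assumptions (10.0.0.0/24 is inferred from the node addresses in this article, and 192.168.100.0/24 is purely hypothetical).

```shell
# render_network_conf PUBLIC_CIDR CLUSTER_CIDR
# Prints a ceph.conf fragment that separates client and replication traffic.
render_network_conf() {
    printf '[global]\npublic_network = %s\ncluster_network = %s\n' "$1" "$2"
}

# Example:
#   render_network_conf 10.0.0.0/24 192.168.100.0/24
```

The two lines would be merged into the existing `[global]` section of ceph.conf in the ceph-deploy directory and pushed to the nodes with `ceph-deploy --overwrite-conf config push`, as done earlier for ceph-4. Daemons generally need a restart to pick up a network change.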

[root@ceph-1 ceph-deploy]# ceph osd set norebalance

norebalance is set

[root@ceph-1 ceph-deploy]# ceph osd set nobackfill

nobackfill is set

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_WARN

nobackfill,norebalance flag(s) set

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 6m)

mgr: ceph-1(active, since 16h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 8 osds: 8 up (since 11m), 8 in (since 11m)

flags nobackfill,norebalance

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 2.79k objects, 10 GiB

usage: 38 GiB used, 42 GiB / 80 GiB avail

pgs: 416 active+clean

# With norebalance and nobackfill set, Ceph pauses rebalancing, so client I/O can proceed normally

To resume rebalancing, unset the flags:

[root@ceph-1 ceph-deploy]# ceph osd unset norebalance

norebalance is unset

[root@ceph-1 ceph-deploy]# ceph osd unset nobackfill

nobackfill is unset

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 6m)

mgr: ceph-1(active, since 16h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 8 osds: 8 up (since 12m), 8 in (since 12m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 2.79k objects, 10 GiB

usage: 38 GiB used, 42 GiB / 80 GiB avail

pgs: 416 active+clean
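
A common risk with these flags is forgetting to unset them after maintenance. One way to guard against that is a wrapper that sets both flags, runs a command, and always unsets them afterwards. This is a minimal sketch built from the same four `ceph osd set/unset` commands shown above; the function name is hypothetical.

```shell
# with_rebalance_paused COMMAND [ARGS...]
# Sets norebalance and nobackfill, runs COMMAND, then unsets both flags
# regardless of whether COMMAND succeeded. Returns COMMAND's exit status.
with_rebalance_paused() {
    local rc
    ceph osd set norebalance || return 1
    ceph osd set nobackfill || { ceph osd unset norebalance; return 1; }
    "$@"
    rc=$?
    ceph osd unset nobackfill
    ceph osd unset norebalance
    return "$rc"
}

# Example: add an OSD while rebalancing is paused (run from the
# ceph-deploy directory on ceph-1).
#   with_rebalance_paused ceph-deploy osd create ceph-4 --data /dev/sdc
```

Data movement starts once the flags are unset, so the wrapper defers the rebalancing load rather than avoiding it.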

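4: Removing OSDs (scaling in)

At some point an OSD server may be affected by external factors, such as a disk replacement or a node going down, and its OSDs have to be removed from the cluster manually.

Current OSD state: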

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07837 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.01959 host ceph-4

6 hdd 0.00980 osd.6 up 1.00000 1.00000

7 hdd 0.00980 osd.7 up 1.00000 1.00000

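The cluster currently has 4 nodes, each with 2 OSDs. Suppose ceph-4 fails, whether from a software or a hardware fault, and has to be removed from the cluster.

ceph osd perf shows the latency of each OSD. If a disk shows unusually high latency in production, it can be removed manually.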

[root@ceph-1 ceph-deploy]# ceph osd perf

osd commit_latency(ms) apply_latency(ms)

7 0 0

6 0 0

5 0 0

4 0 0

0 0 0

1 0 0

2 0 0

3 0 0
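
To pick out slow disks from this output automatically, the rows can be filtered by a latency threshold. The sketch below assumes the three-column layout shown above; the function name and the default threshold of 50 ms are arbitrary choices for illustration, not recommended values.

```shell
# slow_osds [THRESHOLD_MS]
# Reads `ceph osd perf` text on stdin and prints OSDs whose commit or apply
# latency exceeds the threshold (default 50 ms).
slow_osds() {
    awk -v t="${1:-50}" '
        $1 ~ /^[0-9]+$/ && ($2 + 0 > t + 0 || $3 + 0 > t + 0) { print "osd." $1 }
    '
}

# Example:
#   ceph osd perf | slow_osds 100
```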

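If a failed OSD comes back online after some time, its PGs go through the following steps:

1: The failed OSD comes online and registers with the Monitor. Before coming online, it reads the PGLog persisted on its device.

2: The Monitor recognizes the OSD's old ID and keeps using the previous PG assignment. The PGs that became Degraded when the OSD went offline are notified that the OSD has rejoined.

3: There are now two cases. In both, the PG marks itself Peering and temporarily stops handling requests.

3.1: The failed OSD holds the Primary PG. As the authority for this data, it sends a PG metadata query to every Replica node of that PG. While the failed OSD was offline, a Replica node had taken over as Primary and maintained the authoritative PGLog, and it replies to the query. By comparing the metadata and PG version information in the replies, the Primary PG finds that it is behind, so it merges the newer entries to rebuild the authoritative PGLog and builds a missing list that marks the stale data.

3.2: The failed OSD holds a Replica PG. After coming online, it receives a query from the Primary PG and sends back its stale metadata and PGLog. The Primary PG compares them, finds that the replica is behind, and builds a missing list from the PGLog.

4: The PG starts accepting I/O again, but the failed node still holds stale data. A Primary PG on the failed node issues Pull requests to fetch the latest data from the Replica nodes, while a Replica PG on the failed node receives Push requests from the Primary PGs on other OSDs to restore its data.

5: When recovery completes, the PG marks itself Clean.

# Step 3 is the only phase in which the PG does not handle requests, and it usually completes within 1 s to keep unavailability short. There are still other costs: during recovery the failed OSD maintains the missing list, and if an I/O touches data on that list, the PG recovers that data ahead of the queue by pulling it from the Replica PG first. This adds roughly a few tens of milliseconds of latency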

First, simulate a failure of ceph-4. A node can fail in many ways; here I simply shut ceph-4 down.

Check the Ceph status:

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07837 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.01959 host ceph-4

6 hdd 0.00980 osd.6 down 1.00000 1.00000

7 hdd 0.00980 osd.7 down 1.00000 1.00000

The OSDs on ceph-4 are now in the down state.
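
On a larger cluster the down OSDs are harder to spot by eye. A small filter over the `ceph osd tree` text can list them; this sketch assumes the STATUS column directly follows the OSD name, as in the output above, and the function name is hypothetical.

```shell
# down_osds: reads `ceph osd tree` text on stdin and prints the name of
# every OSD whose status column is "down".
down_osds() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i ~ /^osd\.[0-9]+$/ && $(i + 1) == "down") print $i
    }'
}

# Example:
#   ceph osd tree | down_osds
```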

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_WARN

2 osds down

1 host (2 osds) down

Degraded data redundancy: 2158/8358 objects degraded (25.820%), 106 pgs degraded

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 21m)

mgr: ceph-1(active, since 17h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 8 osds: 6 up (since 39s), 8 in (since 26m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 2.79k objects, 10 GiB

usage: 38 GiB used, 42 GiB / 80 GiB avail

pgs: 2158/8358 objects degraded (25.820%)

233 active+undersized

106 active+undersized+degraded

77 active+clean

# About 2158 of the 8358 object copies are degraded
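
For monitoring or scripting, the degraded figures can be pulled out of the health text and the percentage recomputed. The sketch below parses the "N/M objects degraded" phrase shown above; the function name is hypothetical, and the exact wording of the health message may differ between Ceph releases.

```shell
# degraded_ratio: reads `ceph -s` (or `ceph health`) text on stdin and prints
# "DEGRADED TOTAL PERCENT" for the first "N/M objects degraded" it finds.
degraded_ratio() {
    awk 'match($0, /[0-9]+\/[0-9]+ objects degraded/) {
        split(substr($0, RSTART, RLENGTH), f, "[/ ]")
        if (f[2] > 0)
            printf "%d %d %.2f\n", f[1], f[2], 100 * f[1] / f[2]
        exit
    }'
}

# Example:
#   ceph -s | degraded_ratio
```

For the status above this yields `2158 8358 25.82`, matching the 25.820% that Ceph reports.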

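ceph -s shows that the failed OSDs are on ceph-4: osd.6 and osd.7. The next step is to mark them out with ceph osd out, which sets their reweight to 0. In the output below the OSDs had already been marked out by the time the command was run, so the REWEIGHT column already shows 0.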

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07837 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.01959 host ceph-4

6 hdd 0.00980 osd.6 down 0 1.00000

7 hdd 0.00980 osd.7 down 0 1.00000

[root@ceph-1 ceph-deploy]# ceph osd out osd.6

osd.6 is already out.

[root@ceph-1 ceph-deploy]# ceph osd out osd.7

osd.7 is already out.

# With a reweight of 0, Ceph no longer maps data to these OSDs

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.07837 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0.01959 host ceph-4

6 hdd 0.00980 osd.6 down 0 1.00000

7 hdd 0.00980 osd.7 down 0 1.00000

# The REWEIGHT column is now 0

Remove the CRUSH map entries. By default, ceph osd out does not remove the OSD from the CRUSH map.

[root@ceph-1 ceph-deploy]# ceph osd crush dump | head

{

"devices": [

{

"id": 0,

"name": "osd.0",

"class": "hdd"

},

{

"id": 1,

"name": "osd.1",

Remove the OSDs' records from the CRUSH map:

[root@ceph-1 ceph-deploy]# ceph osd crush rm osd.6

removed item id 6 name 'osd.6' from crush map

[root@ceph-1 ceph-deploy]# ceph osd crush rm osd.7

removed item id 7 name 'osd.7' from crush map

# The OSDs now carry no CRUSH weight, but they are still registered in the cluster

[root@ceph-1 ceph-deploy]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.05878 root default

-3 0.01959 host ceph-1

0 hdd 0.00980 osd.0 up 1.00000 1.00000

3 hdd 0.00980 osd.3 up 1.00000 1.00000

-5 0.01959 host ceph-2

1 hdd 0.00980 osd.1 up 1.00000 1.00000

4 hdd 0.00980 osd.4 up 1.00000 1.00000

-7 0.01959 host ceph-3

2 hdd 0.00980 osd.2 up 1.00000 1.00000

5 hdd 0.00980 osd.5 up 1.00000 1.00000

-9 0 host ceph-4

6 0 osd.6 down 0 1.00000

7 0 osd.7 down 0 1.00000

# They no longer hold data, but their entries still exist

Remove the failed OSDs from the cluster:

[root@ceph-1 ceph-deploy]# ceph osd rm osd.6

removed osd.6

[root@ceph-1 ceph-deploy]# ceph osd rm osd.7

removed osd.7

[root@ceph-1 ceph-deploy]# ceph -s | grep osd

osd: 6 osds: 6 up (since 93s), 6 in (since 19m)

Delete the OSD keys from auth

# ceph -s no longer counts the removed OSDs, but their keys are still present in the auth database

# Delete them with the commands below

# List the auth entries

[root@ceph-1 ceph-deploy]# ceph auth list|grep osd

installed auth entries:

caps: [osd] allow rwx

caps: [osd] allow rwx

caps: [osd] allow rwx

osd.0

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.1

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.2

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.3

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.4

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.5

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.6

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

osd.7

caps: [mgr] allow profile osd

caps: [mon] allow profile osd

caps: [osd] allow *

caps: [osd] allow *

client.bootstrap-osd

caps: [mon] allow profile bootstrap-osd

caps: [osd] allow rwx

caps: [osd] allow *

caps: [osd] allow *

caps: [osd] allow *

# Delete the keys for osd.6 and osd.7

[root@ceph-1 ceph-deploy]# ceph auth rm osd.6

updated

[root@ceph-1 ceph-deploy]# ceph auth rm osd.7

updated

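# Take care to delete only the keys of the removed OSDs, not those of OSDs still in service

# The cluster state has recovered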

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 5m)

mgr: ceph-1(active, since 17h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 6 osds: 6 up (since 5m), 6 in (since 23m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 2.79k objects, 10 GiB

usage: 36 GiB used, 24 GiB / 60 GiB avail

pgs: 416 active+clean
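
The removal sequence used in this section (out, crush rm, osd rm, auth rm) can be collected into one helper so that no step is forgotten. This is a minimal sketch that runs the same four commands in the same order and stops at the first failure; the function name `remove_osd` is hypothetical.

```shell
# remove_osd ID
# Runs the four removal steps from this section for osd.ID, in order,
# stopping at the first command that fails.
remove_osd() {
    local id="$1"
    case "$id" in
        ''|*[!0-9]*)
            echo "usage: remove_osd <numeric-osd-id>" >&2
            return 2
            ;;
    esac
    ceph osd out "osd.$id" &&
    ceph osd crush rm "osd.$id" &&
    ceph osd rm "osd.$id" &&
    ceph auth rm "osd.$id"
}

# Example, for the two failed OSDs on ceph-4:
#   remove_osd 6 && remove_osd 7
```

On Luminous and later releases, `ceph osd purge <id> --yes-i-really-mean-it` is meant to combine the CRUSH, OSD map, and auth removal in a single command. If the OSD daemon is still running, it should be stopped on its host before the OSD is removed.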
