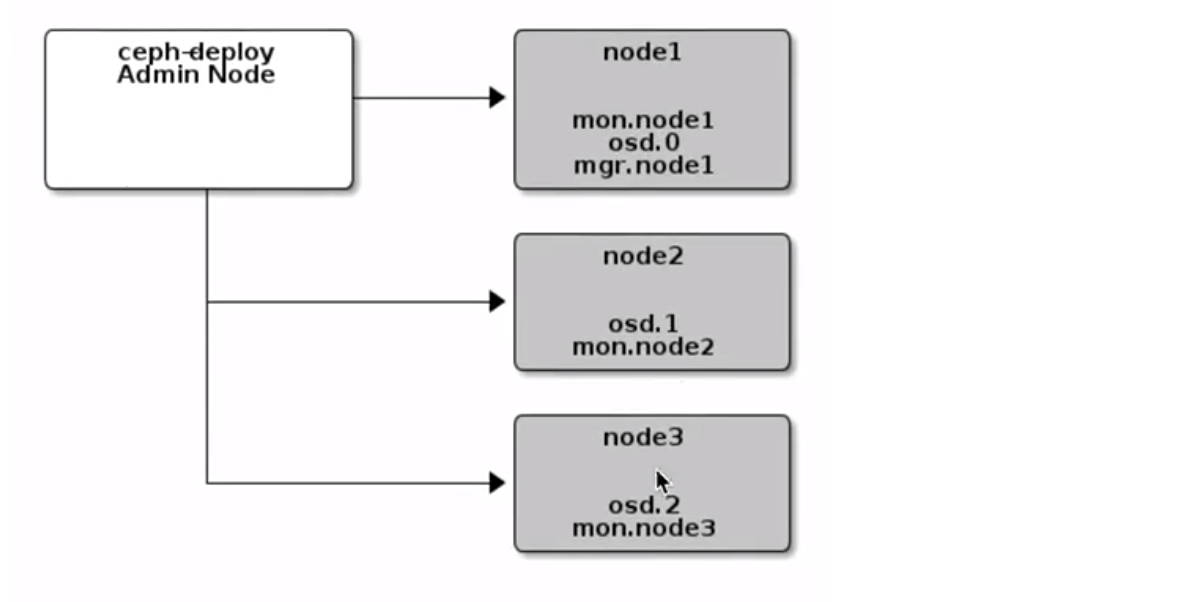

1: Environment

| IP | Components |
| --- | --- |
| 10.0.0.10 | Ceph, NTP |
| 10.0.0.11 | Ceph |
| 10.0.0.12 | Ceph |

2: Configuration

1: Set the hostnames and /etc/hosts (run on all hosts)

[root@virtual_host ~]# hostnamectl set-hostname ceph-1 # on 10.0.0.10

[root@virtual_host ~]# hostnamectl set-hostname ceph-2 # on 10.0.0.11

[root@virtual_host ~]# hostnamectl set-hostname ceph-3 # on 10.0.0.12

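# The hostnames must match the entries in /etc/hosts. This is mandatory: otherwise the monitor cannot be initialized, and later steps run into problems as well!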

[root@ceph-1 ~]# cat << eof>> /etc/hosts

10.0.0.10 ceph-1

10.0.0.11 ceph-2

10.0.0.12 ceph-3

eof
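Because a hostname mismatch breaks monitor initialization, it can be worth checking the hosts file before going further. Below is a minimal Python sketch, assuming the node list from the environment table above; parse_hosts, check_hosts and EXPECTED are hypothetical names for illustration, not part of Ceph or ceph-deploy.

```python
# Hypothetical helpers for checking /etc/hosts-style text; not part of Ceph.

# Expected node names and IPs, taken from the environment table
EXPECTED = {"ceph-1": "10.0.0.10", "ceph-2": "10.0.0.11", "ceph-3": "10.0.0.12"}


def parse_hosts(text):
    """Map each hostname to the IP of the first line that lists it."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # keep the first entry for each name
    return mapping


def check_hosts(text, expected=EXPECTED):
    """Return a list of problems; an empty list means every node maps correctly."""
    mapping = parse_hosts(text)
    problems = []
    for name, ip in expected.items():
        actual = mapping.get(name)
        if actual is None:
            problems.append(f"{name}: missing")
        elif actual != ip:
            problems.append(f"{name}: {actual} (expected {ip})")
    return problems
```

On a node, check_hosts(open("/etc/hosts").read()) should return an empty list once the three entries above are in place.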

2: Configure NTP (the NTP server only needs to be set up on the first node)

[root@ceph-1 ~]# yum install -y ntp # ntp must be installed on all hosts

[root@ceph-1 ~]# systemctl start ntpd

[root@ceph-1 ~]# systemctl enable ntpd

[root@ceph-1 ~]# timedatectl set-timezone Asia/Shanghai # (run on all hosts)

[root@ceph-1 ~]# timedatectl set-local-rtc 0 # (run on all hosts)

[root@ceph-1 ~]# systemctl restart rsyslog

[root@ceph-1 ~]# systemctl restart crond

# The NTP server now synchronizes with upstream servers on the Internet (once synchronized, the selected peer is marked with * in front of its IP)

[root@ceph-1 ~]# ntpq -pn

remote refid st t when poll reach delay offset jitter

==============================================================================

*202.118.1.130 .PTP. 1 u 49 64 7 43.350 1.544 8.065

# NTP agents (the other nodes sync their time from the NTP server)

[root@ceph-2 ~]# cat /etc/ntp.conf | grep server | grep -v "^#" # (on all NTP agents) ntp.conf should point only at the NTP server

server 10.0.0.10 iburst

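[root@ceph-2 ~]# systemctl restart ntpd # (on all NTP agents)

[root@ceph-2 ~]# systemctl enable ntpd # (on all NTP agents)

# On every NTP agent node, add a cron job that syncs periodically (note: ntpdate refuses to run while ntpd holds UDP port 123, so either rely on ntpd alone or use ntpdate -u)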

[root@ceph-2 ~]# crontab -l

*/5 * * * * /usr/sbin/ntpdate 10.0.0.10

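# After the NTP server is set up, set the timezone and hardware clock on all nodes (the timedatectl commands above), then restart rsyslog and crond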

[root@ceph-2 ~]# systemctl restart rsyslog

[root@ceph-2 ~]# systemctl restart crond

3: Set up passwordless SSH from ceph-1

[root@ceph-1 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:YFp54WLJ3CFpx7pxjYz+1qB+pn/hhFBf+6JgFh4d+7k root@ceph-1

The key's randomart image is:

+---[RSA 2048]----+

| .oo |

| oo*+o. . |

| .@*+= + . |

| ==+* = . |

| .. *S+ . o |

| o B o + . |

| = * o o |

| . = = E |

| .o*.. |

+----[SHA256]-----+

[root@ceph-1 ~]# for i in ceph-1 ceph-2 ceph-3;do ssh-copy-id root@$i;done

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'ceph-1 (10.0.0.10)' can't be established.

ECDSA key fingerprint is SHA256:2ysKwIOq1nrOh1CXWJyKU/DupX/wPD1PQeJeNHYQaC8.

ECDSA key fingerprint is MD5:7c:ae:c3:90:f6:6b:06:b1:46:1c:9d:81:7d:cc:2b:9d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@ceph-1's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@ceph-1'"

and check to make sure that only the key(s) you wanted were added.

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'ceph-2 (10.0.0.11)' can't be established.

ECDSA key fingerprint is SHA256:2ysKwIOq1nrOh1CXWJyKU/DupX/wPD1PQeJeNHYQaC8.

ECDSA key fingerprint is MD5:7c:ae:c3:90:f6:6b:06:b1:46:1c:9d:81:7d:cc:2b:9d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@ceph-2's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@ceph-2'"

and check to make sure that only the key(s) you wanted were added.

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'ceph-3 (10.0.0.12)' can't be established.

ECDSA key fingerprint is SHA256:2ysKwIOq1nrOh1CXWJyKU/DupX/wPD1PQeJeNHYQaC8.

ECDSA key fingerprint is MD5:7c:ae:c3:90:f6:6b:06:b1:46:1c:9d:81:7d:cc:2b:9d.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@ceph-3's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'root@ceph-3'"

and check to make sure that only the key(s) you wanted were added.

4: Disable the firewall and SELinux (run on all nodes)

[root@ceph-1 ~]# systemctl stop firewalld

[root@ceph-1 ~]# systemctl disable firewalld

[root@ceph-1 ~]# iptables -F && iptables -X && iptables -F -t nat && iptables -X -t nat

[root@ceph-1 ~]# iptables -P FORWARD ACCEPT

[root@ceph-1 ~]# setenforce 0

[root@ceph-1 ~]# sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

5: Configure the Ceph yum repositories (run on all nodes)

[root@ceph-1 ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@ceph-1 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

[root@ceph-1 ~]# wget -O /etc/yum.repos.d/ceph.repo http://down.i4t.com/ceph/ceph.repo

[root@ceph-1 ~]# yum clean all

[root@ceph-1 ~]# yum makecache

# Once everything above is done, rebooting all nodes is recommended

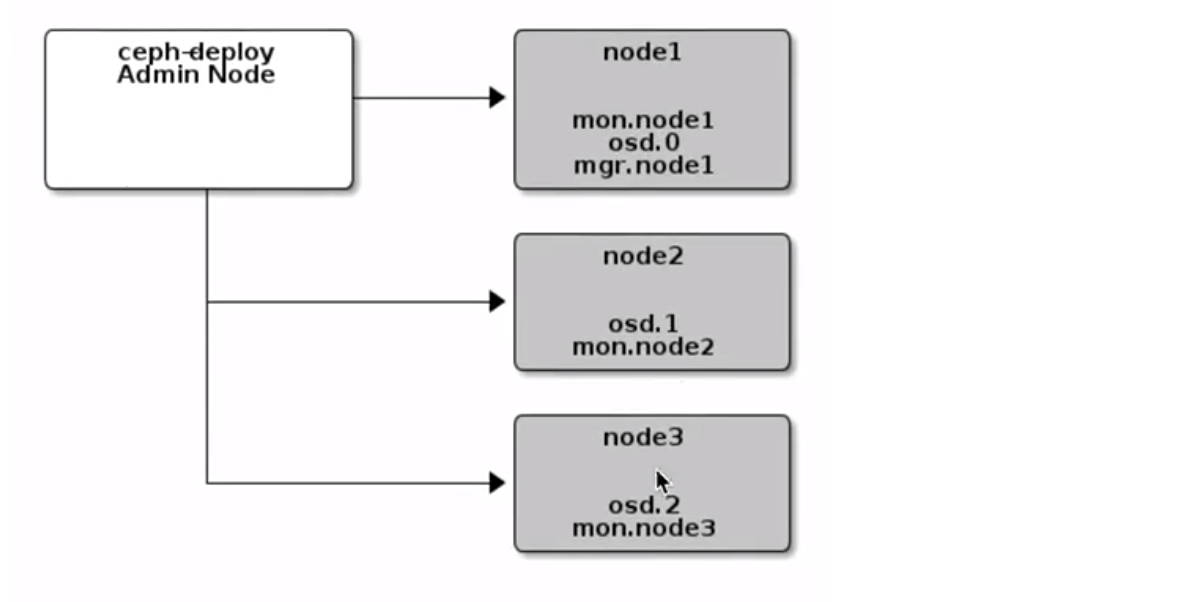

3: Deployment

1: Install the dependencies and the deployment tool ceph-deploy on the deploy node ceph-1

[root@ceph-1 ~]# yum install -y python-setuptools

[root@ceph-1 ~]# yum install -y ceph-deploy

# Check the version

[root@ceph-1 ~]# ceph-deploy --version

2.0.1

2: Deploy Ceph

Start with a single monitor. First create a configuration directory for Ceph; the following commands must be run from this directory

[root@ceph-1 ~]# mkdir ceph-deploy

[root@ceph-1 ~]# cd ceph-deploy/

[root@ceph-1 ceph-deploy]# pwd

/root/ceph-deploy

# Create the monitor

[root@ceph-1 ceph-deploy]# ceph-deploy new ceph-1 --public-network 10.0.0.0/8

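# ceph-1 is the node that will run the monitor

# Parameters

--public-network: the public network, used by clients and monitors to reach the cluster

--cluster-network: the internal cluster network, used for OSD replication and heartbeat traffic

# Specifying --public-network is recommended; without it, adding more monitors later fails with an error

# When the command finishes, several files have been generated in /root/ceph-deploy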

[root@ceph-1 ceph-deploy]# ls

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring

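(the Ceph configuration file, the ceph-deploy log file, and the monitor keyring used for authentication)

# Edit ceph.conf as needed; for example, the networks given when creating the cluster can also be set here

Add the monitor clock-drift settings: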

[root@ceph-1 ceph-deploy]# echo "mon clock drift allowed = 2" >>/root/ceph-deploy/ceph.conf

[root@ceph-1 ceph-deploy]# echo "mon clock drift warn backoff = 30" >>/root/ceph-deploy/ceph.conf

[root@ceph-1 ceph-deploy]# cat /root/ceph-deploy/ceph.conf

[global]

fsid = fb39bc5c-ac88-4b06-a580-5df5611ee976

public_network = 10.0.0.0/8

mon_initial_members = ceph-1

mon_host = 10.0.0.10

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

mon clock drift allowed = 2

mon clock drift warn backoff = 30
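The echo >> approach works only because [global] is currently the last section of ceph.conf; once other sections such as [client.rgw.ceph-1] exist, appended lines would land in the wrong section. A hedged alternative is to edit the file with Python's standard configparser. This is a sketch, not a Ceph tool: set_ceph_options is a hypothetical helper, and configparser does not preserve comments when it rewrites the file.

```python
import configparser


def set_ceph_options(path, section, options):
    """Set key/value pairs in one section of a ceph.conf-style INI file, in place."""
    config = configparser.ConfigParser(interpolation=None)  # no %-interpolation
    config.optionxform = str  # keep option names exactly as written
    config.read(path)
    if not config.has_section(section):
        config.add_section(section)
    for key, value in options.items():
        config.set(section, key, str(value))
    with open(path, "w") as f:
        config.write(f)  # comments in the original file are not preserved
```

Usage on the deploy node would look like set_ceph_options("/root/ceph-deploy/ceph.conf", "global", {"mon clock drift allowed": 2, "mon clock drift warn backoff": 30}), followed by the usual config push.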

Install the Ceph packages on all nodes

[root@ceph-1 ceph-deploy]# yum install -y ceph ceph-mon ceph-mgr ceph-radosgw ceph-mds

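# Alternatively, if network access is not a concern, use the officially recommended installation method below. It reconfigures the yum repositories for you, so it is not recommended here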

ceph-deploy install ceph-1 ceph-2 ceph-3

Next, initialize the monitor

# Run this from the ceph-deploy directory created earlier

[root@ceph-1 ceph-deploy]# ceph-deploy mon create-initial

# The newly generated files are now visible in /root/ceph-deploy

[root@ceph-1 ceph-deploy]# ls

ceph.bootstrap-mds.keyring ceph.bootstrap-osd.keyring ceph.client.admin.keyring ceph-deploy-ceph.log

ceph.bootstrap-mgr.keyring ceph.bootstrap-rgw.keyring ceph.conf ceph.mon.keyring

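# The ceph.bootstrap-* files are the bootstrap keyrings for the osd, mds, mgr and rgw daemons

Copy the generated files to all nodes (afterwards ceph -s works on every node)

# Distribute the configuration file and the admin keyring to each node

[root@ceph-1 ceph-deploy]# ceph-deploy admin ceph-1 ceph-2 ceph-3

# Disable insecure global_id reclaim (this clears the "mons are allowing insecure global_id reclaim" health warning on recent Nautilus releases)

[root@ceph-1 ceph-deploy]# ceph config set mon auth_allow_insecure_global_id_reclaim false

Now ceph -s shows that the cluster has been initialized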

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-1 (age 3m) # one monitor

mgr: no daemons active # no mgr has been created yet

osd: 0 osds: 0 up, 0 in # no OSDs yet either

data: # no pools have been added yet

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

# cluster.health shows HEALTH_OK

Create the manager daemon (mainly used for monitoring)

# Only ceph-1 is used as the manager daemon node for now

[root@ceph-1 ceph-deploy]# ceph-deploy mgr create ceph-1

# Running ceph -s again shows the newly added mgr

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-1 (age 5m)

mgr: ceph-1(active, since 13s)

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

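4: Deploy OSDs

An OSD is the daemon that stores data and returns it in response to client requests; a Ceph cluster usually has many OSDs.

# These are virtual machines, so a disk can be hot-added and then detected by rescanning the SCSI hosts (run on all nodes)

# Rescan the disks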

[root@ceph-1 ~]# echo "- - -" > /sys/class/scsi_host/host0/scan

[root@ceph-1 ~]# echo "- - -" > /sys/class/scsi_host/host1/scan

[root@ceph-1 ~]# echo "- - -" > /sys/class/scsi_host/host2/scan

[root@ceph-1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

---

sdb 8:16 0 10G 0 disk # sdb is the newly added 10 GB disk

# Create one OSD on each of the three nodes

[root@ceph-1 ~]# cd /root/ceph-deploy/

[root@ceph-1 ~]# ceph-deploy osd create ceph-1 --data /dev/sdb

[root@ceph-1 ~]# ceph-deploy osd create ceph-2 --data /dev/sdb

[root@ceph-1 ~]# ceph-deploy osd create ceph-3 --data /dev/sdb

# ceph -s now shows 3 OSDs

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-1 (age 30m)

mgr: ceph-1(active, since 25m)

osd: 3 osds: 3 up (since 15s), 3 in (since 15s) # all three OSDs are up and in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 27 GiB / 30 GiB avail

pgs:

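# Note: only a HEALTH_OK status means the cluster is healthy and in sync

Other commands for checking OSD status:

1:ceph osd tree

2:ceph osd df

3:ceph osd ls

The cluster now has 3 OSDs with a total raw capacity of 30 GiB.

# If ceph.conf needs to change later, edit it only on ceph-1 and push it to the other nodes with the following command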

[root@ceph-1 ~]# ceph-deploy --overwrite-conf config push ceph-1 ceph-2 ceph-3

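5: Scale out the cluster

Next, make the monitors highly available.

Expanding the monitors

The monitors maintain the cluster maps (including the OSD map), so they need to be highly available as well. An odd number of monitors is recommended; three nodes are used here.

Once three monitors are running, they automatically elect a leader and provide high availability.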
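The reason for odd monitor counts is majority quorum: the monitors can only operate while a strict majority of them agree. The short calculation below is plain arithmetic, not a Ceph API, and shows that 4 monitors tolerate no more failures than 3.

```python
def quorum_size(n_mons):
    """Smallest strict majority of n monitors."""
    return n_mons // 2 + 1


def tolerated_failures(n_mons):
    """How many monitors can fail while a majority can still form."""
    return n_mons - quorum_size(n_mons)


for n in range(1, 6):
    print(f"{n} mons: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 monitors raises the quorum size without adding fault tolerance, which is why 3 or 5 are the usual choices.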

# Add monitors (run on ceph-1)

[root@ceph-1 ceph-deploy]# cd /root/ceph-deploy/

[root@ceph-1 ceph-deploy]# ceph-deploy mon add ceph-2 --address 10.0.0.11

[root@ceph-1 ceph-deploy]# ceph-deploy mon add ceph-3 --address 10.0.0.12

# Verify

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 3s) # all three monitors are now listed

mgr: ceph-1(active, since 32m)

osd: 3 osds: 3 up (since 7m), 3 in (since 7m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 27 GiB / 30 GiB avail

pgs:

Ceph's own commands also show the monitor election status and quorum details

[root@ceph-1 ceph-deploy]# ceph quorum_status --format json-pretty

{

"election_epoch": 12,

"quorum": [

0,

1,

2

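],

"quorum_names": [

"ceph-1", # monitors currently in quorum

"ceph-2",

"ceph-3"

],

"quorum_leader_name": "ceph-1", # current leader

"quorum_age": 47,

"monmap": {

"epoch": 3, # monmap epoch: the monitor map's version number, incremented on each change (not the number of monitors)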

"fsid": "fb39bc5c-ac88-4b06-a580-5df5611ee976",

"modified": "2022-05-21 04:53:41.282706",

"created": "2022-05-21 04:16:13.898587",

"min_mon_release": 14,

"min_mon_release_name": "nautilus",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "ceph-1",

"public_addrs": {

"addrvec": [

{

"type": "v2", # each monitor's addresses are listed below

"addr": "10.0.0.10:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.10:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.10:6789/0",

"public_addr": "10.0.0.10:6789/0"

},

{

"rank": 1,

"name": "ceph-2",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.0.0.11:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.11:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.11:6789/0",

"public_addr": "10.0.0.11:6789/0"

},

{

"rank": 2,

"name": "ceph-3",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "10.0.0.12:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "10.0.0.12:6789",

"nonce": 0

}

]

},

"addr": "10.0.0.12:6789/0",

"public_addr": "10.0.0.12:6789/0"

}

]

}

}
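The # annotations above are explanatory only; the real command prints plain JSON. For scripts, a compact summary is easier to consume. A minimal sketch, assuming the JSON fields shown above (quorum_names, quorum_leader_name, monmap.mons, monmap.epoch); summarize_quorum is a hypothetical helper name.

```python
import json


def summarize_quorum(raw_json):
    """Summarize the output of `ceph quorum_status --format json`."""
    status = json.loads(raw_json)
    in_quorum = set(status["quorum_names"])
    all_mons = [m["name"] for m in status["monmap"]["mons"]]
    return {
        "leader": status["quorum_leader_name"],
        "in_quorum": sorted(in_quorum),
        # monitors present in the monmap but missing from the quorum
        "out_of_quorum": [name for name in all_mons if name not in in_quorum],
        "monmap_epoch": status["monmap"]["epoch"],
    }
```

The raw input could come from subprocess.run(["ceph", "quorum_status", "--format", "json"], capture_output=True, text=True, check=True).stdout; a non-empty out_of_quorum list points at a monitor that needs attention.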

The dump subcommand shows more detail about the monitors

[root@ceph-1 ceph-deploy]# ceph mon dump

epoch 3

fsid fb39bc5c-ac88-4b06-a580-5df5611ee976

last_changed 2022-05-21 04:53:41.282706

created 2022-05-21 04:16:13.898587

min_mon_release 14 (nautilus)

0: [v2:10.0.0.10:3300/0,v1:10.0.0.10:6789/0] mon.ceph-1

1: [v2:10.0.0.11:3300/0,v1:10.0.0.11:6789/0] mon.ceph-2

2: [v2:10.0.0.12:3300/0,v1:10.0.0.12:6789/0] mon.ceph-3

dumped monmap epoch 3

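# Run ceph mon -h for more monitor commands

6: Deploy additional manager daemons

# Expanding the manager daemons

# The main job of ceph-mgr is to expose cluster metrics to external systems

Only one mgr in the cluster is active; the others are standby. A standby takes over and becomes active only when the active one fails.

# Expanding mgr works much like expanding the monitors

[root@ceph-1 ceph-deploy]# ceph-deploy mgr create ceph-2 ceph-3 # the arguments after create are node names

# Verify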

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 5m)

mgr: ceph-1(active, since 37m), standbys: ceph-2, ceph-3 # active/standby status

osd: 3 osds: 3 up (since 12m), 3 in (since 12m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 27 GiB / 30 GiB avail

pgs:

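# The new mgr daemons have been added: only ceph-1 is active, the others are standbys

7: RBD block storage

Block storage is a type of data storage used in storage area networks. Data is stored as blocks within volumes, and the volumes are attached to nodes. It can give applications larger capacity with higher reliability and performance.

The RBD protocol is the Ceph Block Device. Besides reliability and performance, RBD supports full and incremental snapshots, thin provisioning, copy-on-write clones, and in-memory caching.

Ceph RBD currently supports images of up to 16 EB. An image can be mapped directly as a disk to bare-metal servers, virtual machines or other hosts. KVM and Xen fully support RBD, and VMware and other cloud vendors support it as well.

RBD data write flow

1: Create a pool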

[root@ceph-1 ceph-deploy]# ceph osd pool create devopsdu 64 64

pool 'devopsdu' created

# devopsdu is the pool name

# pg_num is 64 (pg_num and pgp_num should be equal)

# pgp_num is 64

# No replica count is given, so the default of 3 replicas is used
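The 64 used here is the author's choice. Older Ceph documentation gave a rule of thumb for the total number of PGs in a cluster: (number of OSDs × 100) / replica count, rounded up to a power of two; on Nautilus the pg_autoscaler module can also manage this. The sketch below only evaluates that formula, so treat its result as a starting point; suggested_pg_total is a hypothetical helper name.

```python
def suggested_pg_total(num_osds, pool_size, pgs_per_osd=100):
    """Rule-of-thumb total PG count: (OSDs * target PGs per OSD) / replicas,
    rounded up to the next power of two."""
    raw = num_osds * pgs_per_osd / pool_size
    power = 1
    while power < raw:
        power *= 2
    return power
```

For this cluster, suggested_pg_total(3, 3) gives 128 PGs in total, to be shared among all pools.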

# List pools

[root@ceph-1 ceph-deploy]# ceph osd lspools

1 devopsdu

# Query individual pool settings with the following commands

[root@ceph-1 ceph-deploy]# ceph osd pool get devopsdu pg_num

pg_num: 64

[root@ceph-1 ceph-deploy]# ceph osd pool get devopsdu pgp_num

pgp_num: 64

# Check the replica count

[root@ceph-1 ceph-deploy]# ceph osd pool get devopsdu size

size: 3

# These defaults can be changed; for example, change the pool's replica count

[root@ceph-1 ceph-deploy]# ceph osd pool set devopsdu size 2

set pool 1 size to 2

[root@ceph-1 ceph-deploy]# ceph osd pool get devopsdu size

size: 2

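Creating and mapping RBD images

# Before creating an image, adjust the enabled features

# The RBD client in the CentOS 7 kernel (3.10) does not support most RBD features; only layering is reliably supported, so the other features must be disabled

1:layering: layering (clone) support

2:striping: striping v2 support

3:exclusive-lock: exclusive locking

4:object-map: object map (depends on exclusive-lock)

5:fast-diff: fast diff calculation (depends on object-map)

6:deep-flatten: flattening of snapshots

7:journaling: journaling of IO operations (depends on exclusive-lock)

Unsupported features can be disabled either per image with commands, or by adding rbd_default_features = 1 to ceph.conf to set the default features (the value is the integer sum of the bit codes of the enabled features; 1 means layering only).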

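The feature bit codes are listed below. For example, to enable layering and striping, set rbd_default_features = 3 (1 + 2).

| Feature | Bit code |
| --- | --- |
| layering | 1 |
| striping | 2 |
| exclusive-lock | 4 |
| object-map | 8 |
| fast-diff | 16 |
| deep-flatten | 32 |
| journaling | 64 |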
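The arithmetic behind rbd_default_features can be sketched as below: each enabled feature contributes its bit, and the dependency rules from the feature list are checked first. This is an illustrative helper, not part of the rbd CLI; the journaling bit (64) follows the Ceph RBD documentation, and features_value and features_from_value are hypothetical names.

```python
# RBD feature bits (journaling = 64 per the Ceph RBD documentation)
FEATURE_BITS = {
    "layering": 1,
    "striping": 2,
    "exclusive-lock": 4,
    "object-map": 8,
    "fast-diff": 16,
    "deep-flatten": 32,
    "journaling": 64,
}

# feature -> feature it depends on (from the list above)
DEPENDS_ON = {
    "object-map": "exclusive-lock",
    "fast-diff": "object-map",
    "journaling": "exclusive-lock",
}


def features_value(names):
    """Return the rbd_default_features integer for a list of feature names."""
    for name in names:
        dep = DEPENDS_ON.get(name)
        if dep and dep not in names:
            raise ValueError(f"{name} requires {dep}")
    return sum(FEATURE_BITS[name] for name in set(names))


def features_from_value(value):
    """Decode an rbd_default_features integer back into feature names."""
    return [name for name, bit in FEATURE_BITS.items() if value & bit]
```

For instance, features_value(["layering", "striping"]) gives the 3 from the example above, and features_from_value(61) decodes to layering, exclusive-lock, object-map, fast-diff and deep-flatten.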

Disable the features by default (ceph.conf)

[root@ceph-1 ceph-deploy]# cd /root/ceph-deploy

[root@ceph-1 ceph-deploy]# echo "rbd_default_features = 1" >>ceph.conf

[root@ceph-1 ceph-deploy]# ceph-deploy --overwrite-conf config push ceph-1 ceph-2 ceph-3

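# Alternatively, disable features manually after an image is created. This only affects that image: images created later still get the default features.

# Disable them one at a time, in reverse dependency order

rbd feature disable xxx/xxx-rbd.img deep-flatten

rbd feature disable xxx/xxx-rbd.img fast-diff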

rbd feature disable xxx/xxx-rbd.img object-map

rbd feature disable xxx/xxx-rbd.img exclusive-lock

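RBD images are created with the rbd command

# This demo runs on ceph-1. Creating an image requires a key, which can be given with -k (keyring); the admin keyring is used by default here, so none is specified

[root@ceph-1 ~]# rbd create -p devopsdu --image devopsdu-rbd.img --size 5

# -p pool name

# --image image name (the name of the block device in Ceph)

# --size image size (the default unit is MB, so --size 5 creates a 5 MiB image; use a suffix such as 5G for GiB)

# The image can also be created with a short form

# that omits -p and --image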

[root@ceph-1 ~]# rbd create devopsdu/devopsdu-rbd.img --size 5G

# List images

[root@ceph-1 ~]# rbd -p devopsdu ls

devopsdu-rbd.img

# Delete an image; rm accepts the short form as well as -p and --image

[root@ceph-1 ~]# rbd rm devopsdu/devopsdu-rbd.img

Removing image: 100% complete...done.

[root@ceph-1 ~]# rbd -p devopsdu ls

# Show image details with info (the short form works too)

[root@ceph-1 ~]# rbd info devopsdu/devopsdu-rbd.img

rbd image 'devopsdu-rbd.img':

size 5 MiB in 2 objects # image size and number of backing objects

order 22 (4 MiB objects) # object size: 2^22 bytes = 4 MiB

snapshot_count: 0

id: 11f4a0ee931

block_name_prefix: rbd_data.11f4a0ee931 # prefix of the data object names, built from the image id

format: 2

features: layering # with rbd_default_features = 1 set earlier, only layering is enabled

op_features:

flags:

create_timestamp: Sat May 21 05:15:52 2022

access_timestamp: Sat May 21 05:15:52 2022

modify_timestamp: Sat May 21 05:15:52 2022
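The "in N objects" figure follows from the order field: the image is striped into objects of 2^order bytes, so the count is the image size divided by the object size, rounded up. RBD images are thin-provisioned, so this is the number of objects a fully written image would use; objects are only created in RADOS when written. A small sketch with a hypothetical helper name:

```python
def rbd_object_count(size_bytes, order=22):
    """Objects backing a fully written RBD image; each object is 2**order bytes
    (order 22 -> 4 MiB)."""
    object_size = 1 << order
    return -(-size_bytes // object_size)  # integer ceiling division


MiB = 1 << 20
GiB = 1 << 30
```

This reproduces the rbd info output in this article: rbd_object_count(5 * MiB) is 2, rbd_object_count(5 * GiB) is 1280, and rbd_object_count(10 * GiB) is 2560.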

# Equivalent long form

[root@ceph-1 ~]# rbd info -p devopsdu --image devopsdu-rbd.img

rbd image 'devopsdu-rbd.img':

size 5 MiB in 2 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 11f4a0ee931

block_name_prefix: rbd_data.11f4a0ee931

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat May 21 05:15:52 2022

access_timestamp: Sat May 21 05:15:52 2022

modify_timestamp: Sat May 21 05:15:52 2022

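# Map and mount the image

# Now map the image (partitioning is not recommended: growing a partitioned device later is cumbersome and risks data loss; if one device is not enough, use several RBD images instead)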

[root@ceph-1 ~]# rbd map devopsdu/devopsdu-rbd.img

/dev/rbd0

# devopsdu is the pool name (it can also be given with -p)

# devopsdu-rbd.img is the image name

# The block device has been created; it can also be listed with

[root@ceph-1 ~]# rbd device ls

id pool namespace image snap device

0 devopsdu devopsdu-rbd.img - /dev/rbd0

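# The output shows the pool name, image name and device

# fdisk shows it too: the block device behaves like a newly installed disk and can be used once a filesystem is created and mounted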

[root@ceph-1 ~]# fdisk -l

---

Disk /dev/rbd0: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

# Create the filesystem directly, without partitioning

[root@ceph-1 ~]# mkfs.xfs /dev/rbd0

Discarding blocks...Done.

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=163840 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=1310720, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

# Now mount it and use it

[root@ceph-1 ~]# mkdir /mnt/devopsdu

[root@ceph-1 ~]# mount /dev/rbd0 /mnt/devopsdu/

[root@ceph-1 ~]# df -Th

---

/dev/rbd0 xfs 5.0G 33M 5.0G 1% /mnt/devopsdu

[root@ceph-1 ~]# cd /mnt/devopsdu/

[root@ceph-1 devopsdu]# echo "devopsdu" >devopsdu

[root@ceph-1 devopsdu]# cat devopsdu

devopsdu

Growing an RBD image

# The image is currently 5 GB; here it is grown to 10 GB without losing data

[root@ceph-1 devopsdu]# rbd -p devopsdu ls

devopsdu-rbd.img

[root@ceph-1 devopsdu]# rbd info devopsdu/devopsdu-rbd.img

rbd image 'devopsdu-rbd.img':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 121ea5f61967

block_name_prefix: rbd_data.121ea5f61967

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat May 21 05:18:34 2022

access_timestamp: Sat May 21 05:18:34 2022

modify_timestamp: Sat May 21 05:18:34 2022

[root@ceph-1 devopsdu]# df -Th

---

/dev/rbd0 xfs 5.0G 33M 5.0G 1% /mnt/devopsdu

# Grow the image with rbd resize

[root@ceph-1 devopsdu]# rbd resize devopsdu/devopsdu-rbd.img --size 10G

Resizing image: 100% complete...done.

# devopsdu is the pool name

# devopsdu-rbd.img is the image name

# --size is the new image size

Check the size of devopsdu-rbd.img again

[root@ceph-1 devopsdu]# rbd info devopsdu/devopsdu-rbd.img

rbd image 'devopsdu-rbd.img':

size 10 GiB in 2560 objects

order 22 (4 MiB objects)

snapshot_count: 0

id: 121ea5f61967

block_name_prefix: rbd_data.121ea5f61967

format: 2

features: layering

op_features:

flags:

create_timestamp: Sat May 21 05:18:34 2022

access_timestamp: Sat May 21 05:18:34 2022

modify_timestamp: Sat May 21 05:18:34 2022

# The device has grown, but the filesystem has not

[root@ceph-1 devopsdu]# fdisk -l

---

Disk /dev/rbd0: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

[root@ceph-1 devopsdu]# df -Th

---

/dev/rbd0 xfs 5.0G 33M 5.0G 1% /mnt/devopsdu

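# Next, grow the filesystem

About resize2fs:

resize2fs resizes ext2/ext3/ext4 filesystems. It can grow or shrink an unmounted filesystem; if the filesystem is mounted, it can grow it, provided the kernel supports online resizing.

It is available on Red Hat, RHEL, Ubuntu, CentOS, SUSE, openSUSE and Fedora.

For an ext2/3/4 filesystem, just run resize2fs on the device:

[root@ceph-1 devopsdu]# resize2fs /dev/rbd0

# The filesystem here is XFS, which resize2fs cannot handle; grow XFS with xfs_growfs instead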

[root@ceph-1 devopsdu]# xfs_growfs /dev/rbd0

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=163840 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0 spinodes=0

data = bsize=4096 blocks=1310720, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

data blocks changed from 1310720 to 2621440

[root@ceph-1 devopsdu]# df -Th

---

/dev/rbd0 xfs 10G 33M 10G 1% /mnt/devopsdu

# The data created earlier is still in the mount directory

[root@ceph-1 devopsdu]# cd /mnt/devopsdu/

[root@ceph-1 devopsdu]# ls

devopsdu

[root@ceph-1 devopsdu]# cat devopsdu

devopsdu

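# Growing storage generally involves three layers: 1. the underlying storage (rbd resize), 2. the disk partition (e.g. an MBR partition), 3. the Linux filesystem. Skipping the partition layer is why partitioning RBD block devices is not recommended.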
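The filesystem step depends on the filesystem type, which is easy to get wrong when scripting. A minimal sketch that picks the grow command for a mounted filesystem; grow_command is a hypothetical helper, and it assumes the type is looked up separately (for example with findmnt -n -o FSTYPE /mnt/devopsdu). xfs_growfs is given the mount point here because that is the argument its manual documents.

```python
def grow_command(fstype, device, mountpoint):
    """Return the argv that grows a mounted filesystem after `rbd resize`."""
    if fstype == "xfs":
        # xfs_growfs works on a mounted XFS filesystem, addressed by mount point
        return ["xfs_growfs", mountpoint]
    if fstype in ("ext2", "ext3", "ext4"):
        # resize2fs takes the block device; online growth needs kernel support
        return ["resize2fs", device]
    raise ValueError(f"unsupported filesystem type: {fstype}")
```

The returned list can be passed to subprocess.run(..., check=True) on the node where the image is mapped.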

Handling Ceph health warnings:

# After data has been written to the OSDs, ceph -s shows a warning that needs to be handled

[root@ceph-1 devopsdu]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_WARN

application not enabled on 1 pool(s)

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 7h)

mgr: ceph-1(active, since 8h), standbys: ceph-2, ceph-3

osd: 3 osds: 3 up (since 7h), 3 in (since 7h)

data:

pools: 1 pools, 64 pgs

objects: 175 objects, 611 MiB

usage: 4.7 GiB used, 25 GiB / 30 GiB avail

pgs: 64 active+clean

io:

client: 50 MiB/s wr, 0 op/s rd, 45 op/s wr

# After creating a pool, you must specify the application that will use it (Ceph block device, Ceph object gateway, or Ceph file system); otherwise the cluster health reports HEALTH_WARN

# ceph health detail shows the details

[root@ceph-1 devopsdu]# ceph health detail

HEALTH_WARN application not enabled on 1 pool(s)

POOL_APP_NOT_ENABLED application not enabled on 1 pool(s)

application not enabled on pool 'devopsdu'

use 'ceph osd pool application enable <pool-name> <app-name>', where <app-name> is 'cephfs', 'rbd', 'rgw', or freeform for custom applications.

# Tag the devopsdu pool with the rbd application

[root@ceph-1 devopsdu]# ceph osd pool application enable devopsdu rbd

enabled application 'rbd' on pool 'devopsdu'

[root@ceph-1 devopsdu]# ceph health detail

HEALTH_OK

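# Initializing the pool with rbd pool init <pool-name> right after creating it avoids this warning

rbd pool init tags the pool with the rbd application, so the health check does not complain.

After this, the following command shows that the pool's application is rbd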

[root@ceph-1 devopsdu]# ceph osd pool application get devopsdu

{

"rbd": {}

}

With the application warning resolved, check the health again

[root@ceph-1 devopsdu]# ceph health detail

HEALTH_OK

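[root@ceph-1 devopsdu]# ceph crash ls-new # no new crash reports here; if any are listed, note their IDs

# If new crash reports are listed, archive them to clear the corresponding warning:

Option 1 (archive a single report)

[root@ceph-1 devopsdu]# ceph crash archive <ID>

Option 2 (archive all new crash reports)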

[root@ceph-1 devopsdu]# ceph crash archive-all

[root@ceph-1 devopsdu]# ceph crash ls-new

[root@ceph-1 devopsdu]# ceph -s

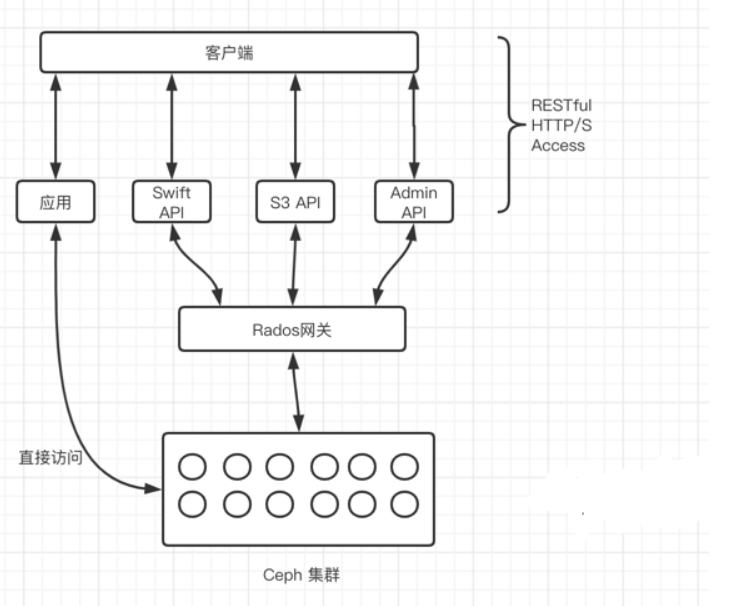

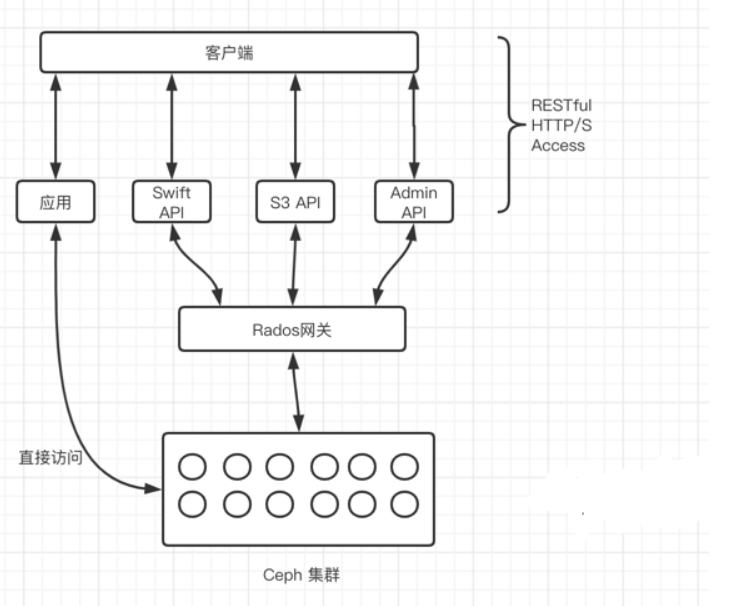

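8: RGW object storage

What is object storage?

Think of it as a huge storage space whose data can be accessed through an API at any time and from anywhere. Alibaba Cloud OSS, Qiniu Cloud Storage, Baidu Netdisk and private cloud drives are all examples of object storage.

Ceph is a distributed object storage system. Through its object gateway, the RADOS gateway (radosgw), it provides an object storage interface. The RADOS gateway uses librgw (the RADOS gateway library) and librados to let applications connect to the Ceph object store. Through its RESTful API, Ceph offers one of the most stable multi-tenant object storage solutions available.

The RADOS gateway provides RESTful interfaces that let applications store data in a Ceph cluster. It offers:

1: Swift compatibility: object storage compatible with the OpenStack Swift API

2: S3 compatibility: object storage compatible with the Amazon S3 API

3: Admin API: also called the management or native API; applications can use it directly to obtain access to and manage the storage system

In addition, the object gateway supports:

4: User authentication

5: Usage analysis

6: Multipart upload (objects are split, uploaded in parts and reassembled)

7: Multi-site deployment and replication

Deploying the RGW gateway

Using Ceph object storage requires the object storage gateway (RADOSGW).

The ceph-radosgw package was installed earlier; verify it here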

[root@ceph-1 devopsdu]# rpm -qa | grep ceph-radosgw

ceph-radosgw-14.2.22-0.el7.x86_64

# If it is missing, install it with yum install ceph-radosgw

# Deploy the object storage gateway

ceph-1 is used as the gateway here

[root@ceph-1 ceph-deploy]# ceph-deploy rgw create ceph-1

# Verify

[root@ceph-1 ceph-deploy]# ceph -s | grep rgw

rgw: 1 daemon active (ceph-1)

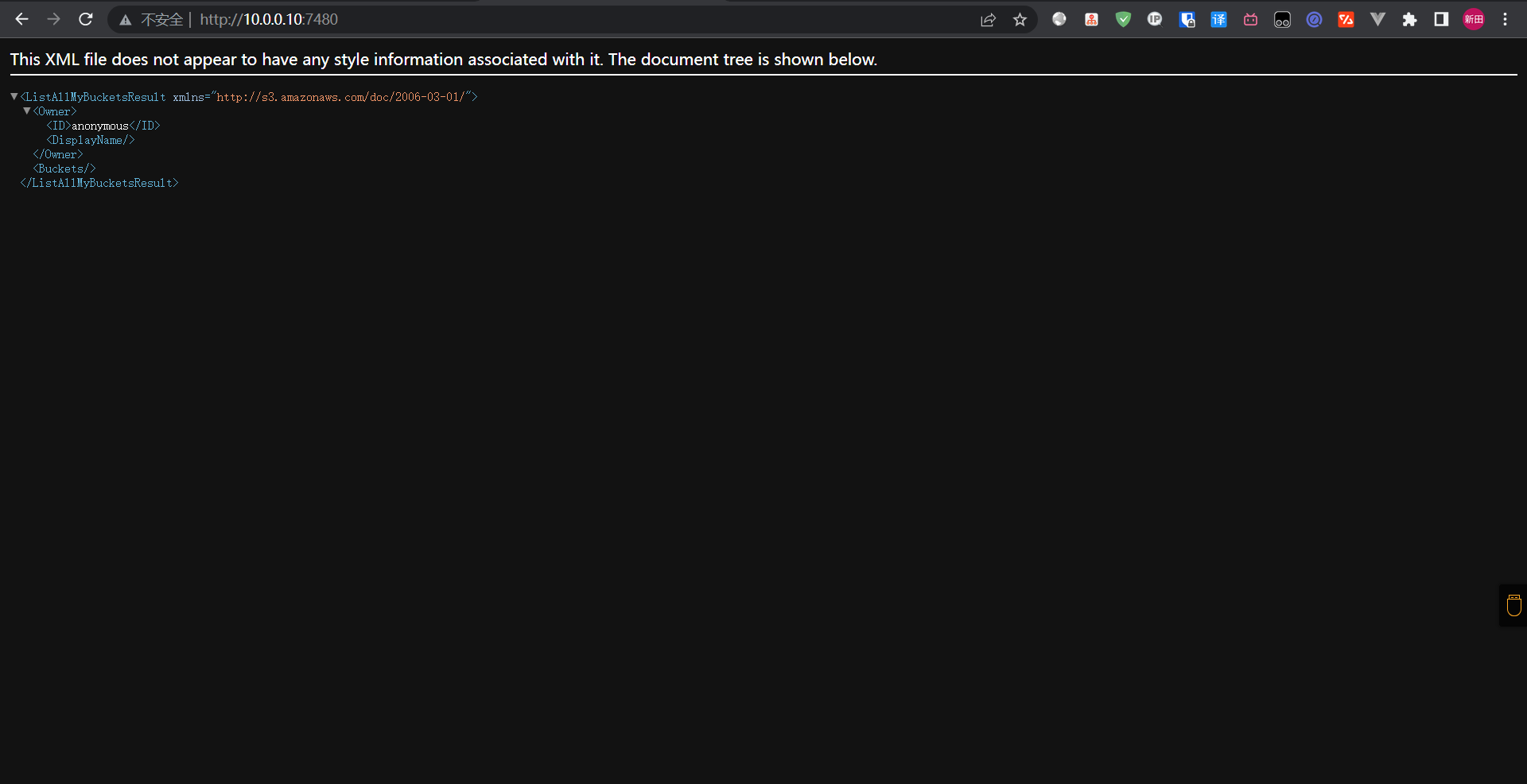

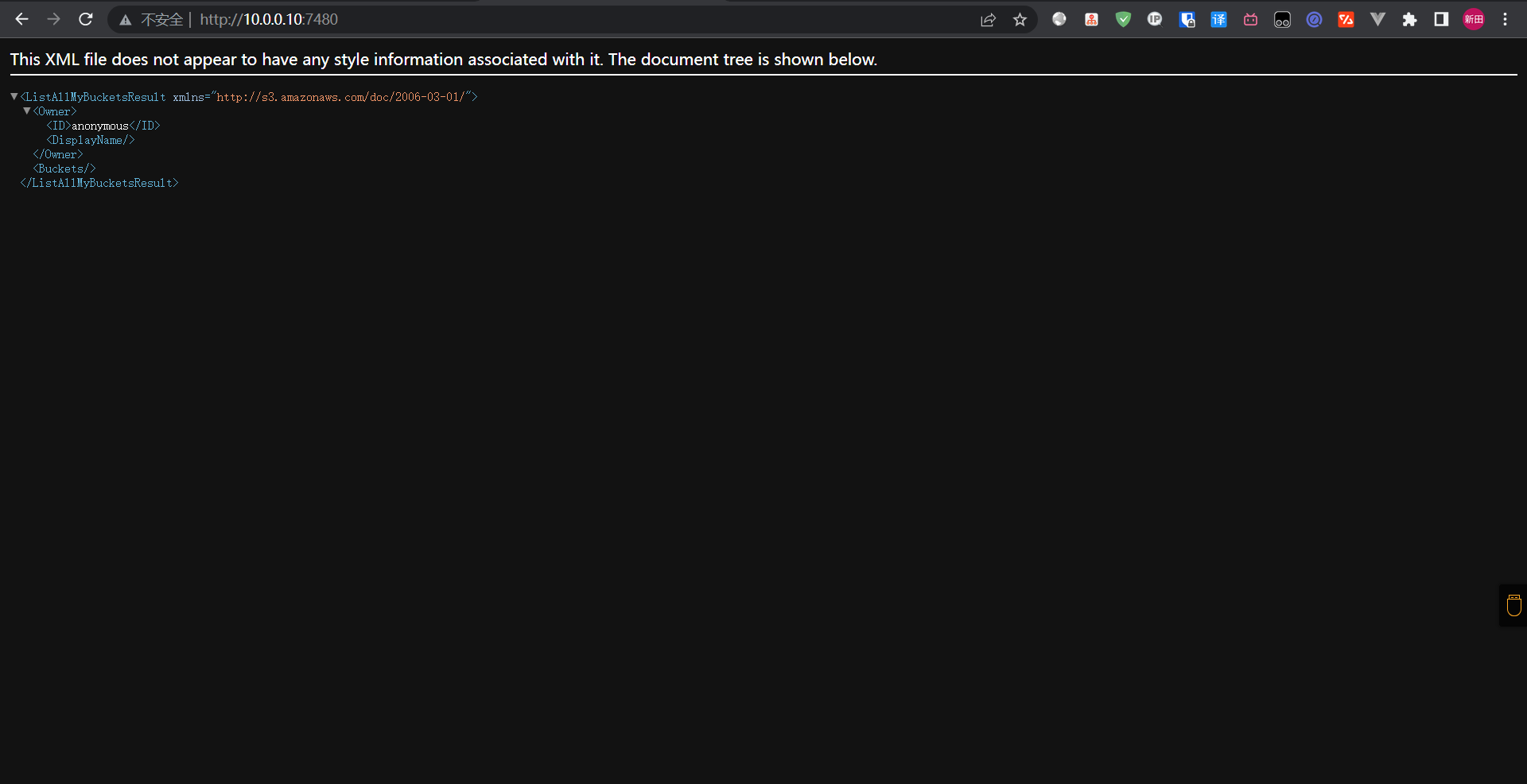

# radosgw listens on port 7480

[root@ceph-1 ceph-deploy]# netstat -nplt | grep 7480

tcp 0 0 0.0.0.0:7480 0.0.0.0:* LISTEN 16990/radosgw

tcp6 0 0 :::7480 :::* LISTEN 16990/radosgw

The gateway is now deployed.

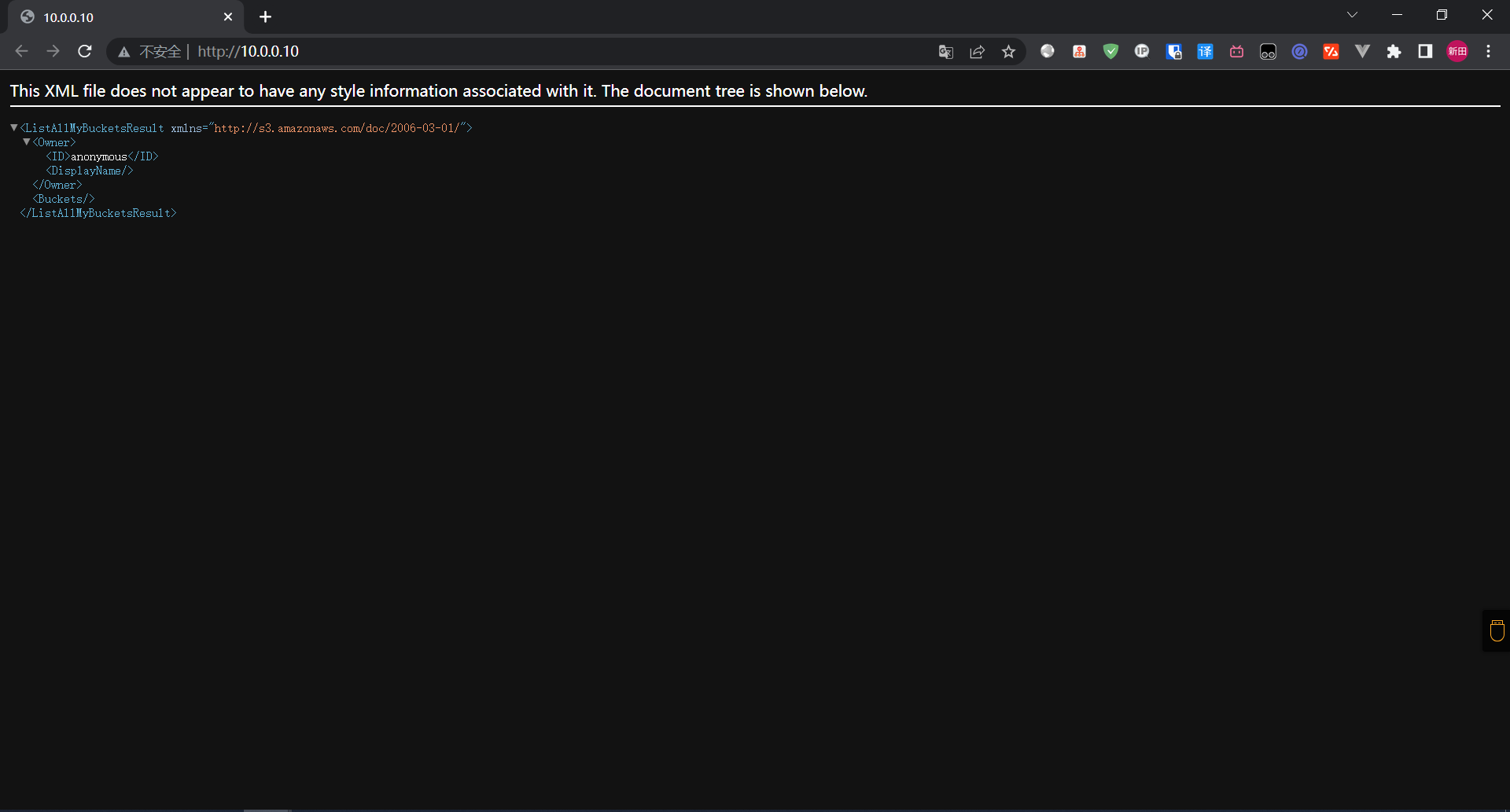

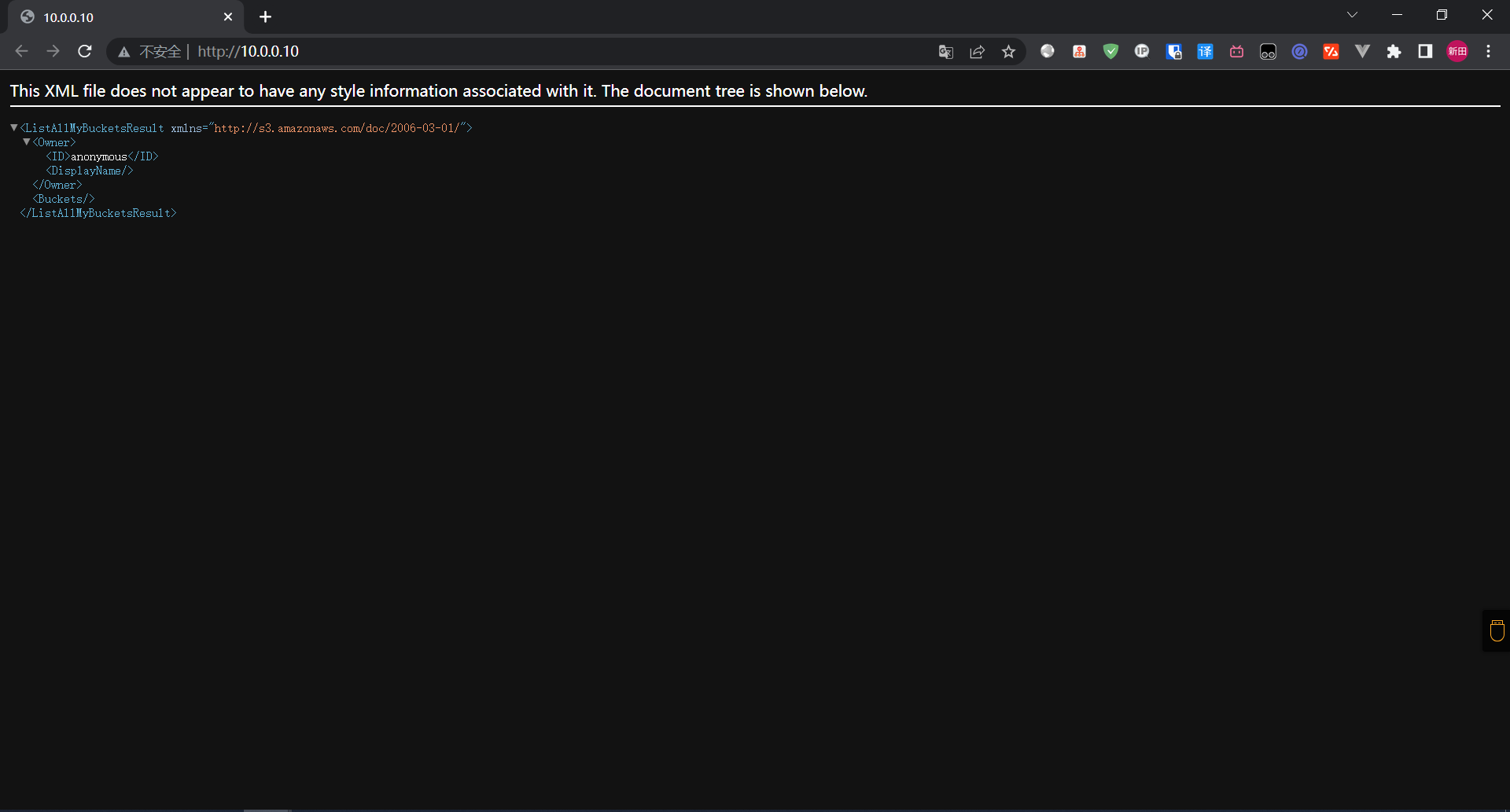

Next, change the port from the default 7480 to 80 by adding the following section to ceph.conf

[root@ceph-1 ceph-deploy]# cat /root/ceph-deploy/ceph.conf

----

[client.rgw.ceph-1]

rgw_frontends = "civetweb port=80"

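# The section name is client.rgw.[hostname]; make sure to use the gateway's hostname

# Also make sure to edit the right file (here /root/ceph-deploy/ceph.conf)

After editing, push the configuration so that all cluster hosts get it

[root@ceph-1 ceph-deploy]# ceph-deploy --overwrite-conf config push ceph-1 ceph-2 ceph-3

# Strictly, only the RGW gateway node needs it, but pushing it to every node keeps the configuration consistent

Pushing only copies the file (much like scp) and does not apply it, so RGW must be restarted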

[root@ceph-1 ceph-deploy]# systemctl restart ceph-radosgw.target

[root@ceph-1 ceph-deploy]# netstat -nplt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 17845/radosgw

---

To serve HTTPS instead of HTTP, add parameters to the same ceph.conf section

# For example

[client.rgw.ceph-1]

rgw_frontends = civetweb port=443s ssl_certificate=/etc/ceph/keyandcert.pem

Calling the object storage gateway

First create an S3 user, then use its keys to access the object store

[root@ceph-1 ceph-deploy]# cd /root/ceph-deploy/

[root@ceph-1 ceph-deploy]# radosgw-admin user create --uid ceph-s3-user --display-name "Ceph S3 User Devopsdu"

{

"user_id": "ceph-s3-user", # the uid

"display_name": "Ceph S3 User Devopsdu", # display name

"email": "",

"suspended": 0,

"max_buckets": 1000, # default quota: up to 1000 buckets

"subusers": [],

"keys": [

{

"user": "ceph-s3-user",

"access_key": "ZTYPHT3WFT77QYGFMLYU",

# These keys authenticate later requests to radosgw; if you lose them, run radosgw-admin user info --uid ceph-s3-user

"secret_key": "f5N1T13yRwKXFkcbXCs7j5bsIRnC0gRsyNtNITsl"

}

],

"swift_keys": [],

"caps": [],

"op_mask": "read, write, delete",

"default_placement": "",

"default_storage_class": "",

"placement_tags": [],

"bucket_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"user_quota": {

"enabled": false,

"check_on_raw": false,

"max_size": -1,

"max_size_kb": 0,

"max_objects": -1

},

"temp_url_keys": [],

"type": "rgw",

"mfa_ids": []

}

# --uid sets the user ID

# --display-name sets the display name

With the keys in hand, access RGW through the S3 API

[root@ceph-1 ceph-deploy]# yum install python-boto

# Write a Python script (s3_client.py)

import boto
import boto.s3.connection

# Replace these with the access_key and secret_key of the user created above
access_key = 'ZTYPHT3WFT77QYGFMLYU'
secret_key = 'f5N1T13yRwKXFkcbXCs7j5bsIRnC0gRsyNtNITsl'

conn = boto.connect_s3(
    aws_access_key_id=access_key,
    aws_secret_access_key=secret_key,
    host="10.0.0.10",  # the RGW node
    port=80,  # the port set in rgw_frontends
    is_secure=False,  # plain HTTP
    calling_format=boto.s3.connection.OrdinaryCallingFormat(),  # path-style bucket URLs
)

# Create a bucket, then list every bucket owned by this user
bucket = conn.create_bucket('ceph-s3-bucket')
for b in conn.get_all_buckets():
    print(b.name)

Run the script

PS E:\Pycharm Professional\kubemanager> python .\s3_client.py

ceph-s3-bucket

# Check the pools

[root@ceph-1 ceph-deploy]# ceph osd lspools

1 devopsdu

2 .rgw.root

3 default.rgw.control

4 default.rgw.meta

5 default.rgw.log

6 default.rgw.buckets.index

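A default.rgw.buckets.index pool, which holds the bucket indexes, has been created

# Command-line access

# An SDK is less convenient for day-to-day operations, where the command line is preferred, so next use a command-line client

# s3cmd is used here

[root@ceph-1 ceph-deploy]# yum install -y s3cmd

# s3cmd needs a few configuration parameters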

[root@ceph-1 ceph-deploy]# s3cmd --configure

Enter new values or accept defaults in brackets with Enter.

Refer to user manual for detailed description of all options.

Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.

Access Key: ZTYPHT3WFT77QYGFMLYU # the access key

Secret Key: f5N1T13yRwKXFkcbXCs7j5bsIRnC0gRsyNtNITsl # the secret key

Default Region [US]:

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.

S3 Endpoint [s3.amazonaws.com]: 10.0.0.10:80 # the RGW address

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used

if the target S3 system supports dns based buckets.

DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: 10.0.0.10:80/%(bucket)s # path-style bucket URL

Encryption password is used to protect your files from reading

by unauthorized persons while in transfer to S3

Encryption password: # no password

Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3

servers is protected from 3rd party eavesdropping. This method is

slower than plain HTTP, and can only be proxied with Python 2.7 or newer

Use HTTPS protocol [Yes]: no # HTTPS is not enabled

On some networks all internet access must go through a HTTP proxy.

Try setting it here if you can't connect to S3 directly

HTTP Proxy server name: # no proxy

New settings:

Access Key: ZTYPHT3WFT77QYGFMLYU

Secret Key: f5N1T13yRwKXFkcbXCs7j5bsIRnC0gRsyNtNITsl

Default Region: US

S3 Endpoint: 10.0.0.10:80

DNS-style bucket+hostname:port template for accessing a bucket: 10.0.0.10:80/%(bucket)s

Encryption password: passwd

Path to GPG program: /usr/bin/gpg

Use HTTPS protocol: False

HTTP Proxy server name:

HTTP Proxy server port: 0

Test access with supplied credentials? [Y/n] y # test access with these credentials

Please wait, attempting to list all buckets...

Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...

Success. Encryption and decryption worked fine :-)

Save settings? [y/N] y # save the configuration

Configuration saved to '/root/.s3cfg'

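# Set signature_v2 to True

[root@ceph-1 ceph-deploy]# sed -i 's/signature_v2 = False/signature_v2 = True/g' /root/.s3cfg

s3cmd supports two authentication (signature) schemes, v2 and v4. s3cmd 2.x uses v4 by default, while 1.x uses v2. The two differ: v2 signs a string built from the request with the secret key, while v4 also derives its signing key from further information such as the date and region; in both cases the signature is sent in the HTTP request headers. s3cmd 2.x can be switched to v2 by adding signature_v2 = True to .s3cfg, so adding this option is the quickest fix for the resulting 403 errors.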
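To make the v2 scheme concrete, here is a minimal sketch of how an AWS Signature Version 2 is computed: an HMAC-SHA1 over a newline-joined string of the method, Content-MD5, Content-Type, Date, the x-amz-* headers and the resource path, base64-encoded and sent as "Authorization: AWS access_key:signature". It simplifies header canonicalization (repeated headers are not folded) and is meant for illustration only, not as a replacement for s3cmd or boto; sign_v2 and authorization_header are hypothetical names.

```python
import base64
import hashlib
import hmac


def sign_v2(secret_key, method, resource, date, content_md5="", content_type="", amz_headers=None):
    """Compute a simplified AWS Signature Version 2 for an S3 REST request."""
    amz_headers = amz_headers or {}
    # x-amz-* headers: lowercase names, sorted, one "name:value\n" line each
    canonical_amz = "".join(
        f"{k.lower()}:{v.strip()}\n"
        for k, v in sorted(amz_headers.items(), key=lambda kv: kv[0].lower())
    )
    string_to_sign = f"{method}\n{content_md5}\n{content_type}\n{date}\n{canonical_amz}{resource}"
    digest = hmac.new(secret_key.encode(), string_to_sign.encode(), hashlib.sha1).digest()
    return base64.b64encode(digest).decode()


def authorization_header(access_key, signature):
    """Authorization header value used with Signature V2."""
    return f"AWS {access_key}:{signature}"
```

A v4 signature instead signs a canonical request with a key derived in several HMAC steps from the secret key, date, region and service, which is where a region mismatch between the client and RGW can cause 403 errors.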

Using s3cmd

# List buckets

[root@ceph-1 ceph-deploy]# s3cmd ls

2022-05-21 05:33 s3://ceph-s3-bucket

---

# Create a bucket

[root@ceph-1 ceph-deploy]# s3cmd mb s3://ceph-s3-devopsdu

Bucket 's3://ceph-s3-devopsdu/' created

[root@ceph-1 ceph-deploy]# s3cmd ls

2022-05-21 05:33 s3://ceph-s3-bucket

2022-05-21 05:41 s3://ceph-s3-devopsdu

# List the contents of s3://ceph-s3-devopsdu

[root@ceph-1 ceph-deploy]# s3cmd ls s3://ceph-s3-devopsdu

# (empty)

# Upload with s3cmd

# Upload the local ./devopsdu directory to s3://ceph-s3-devopsdu/devopsdu/

# --recursive uploads recursively

[root@ceph-1 ~]# s3cmd put ./devopsdu s3://ceph-s3-devopsdu/devopsdu/ --recursive

# Verify

[root@ceph-1 ~]# s3cmd ls s3://ceph-s3-devopsdu

DIR s3://ceph-s3-devopsdu/devopsdu/

[root@ceph-1 ~]# s3cmd ls s3://ceph-s3-devopsdu/devopsdu/devopsdu/

2022-05-21 06:54 894317568 s3://ceph-s3-devopsdu/devopsdu/devopsdu/bitnami-lampstack-7.4.14-7-r52-linux-debian-10-x86_64-nami.ova

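# If put fails with ERROR: S3 error: 416 (InvalidRange), this usually means RGW could not create a pool because the cluster would exceed the per-OSD placement group limit:

1: Add mon_max_pg_per_osd = 1000 to ceph.conf (and push it to all nodes), or add more OSDs

2: Restart ceph-mon (systemctl restart ceph-mon.target)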
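The limit in question is checked by the monitor when a pool is created: the projected number of PG replicas (pg_num × size, summed over all pools) divided by the number of OSDs must not exceed mon_max_pg_per_osd, and radosgw surfaces the resulting error as 416 InvalidRange. The sketch below reproduces that arithmetic under stated assumptions: the 250 default is assumed to be the Nautilus value (check it with ceph config get mon mon_max_pg_per_osd), the helper names are hypothetical, and the pool sizes in the usage note are illustrative, not taken from this cluster.

```python
def pg_replicas_per_osd(pools, num_osds):
    """Average number of PG replicas per OSD.

    pools: iterable of (pg_num, size) pairs, one per pool.
    """
    total = sum(pg_num * size for pg_num, size in pools)
    return total / num_osds


def can_create_pool(pools, num_osds, new_pg_num, new_size, max_per_osd=250):
    """Would adding a pool stay within mon_max_pg_per_osd? (250 is an assumed default.)"""
    projected = pg_replicas_per_osd(list(pools) + [(new_pg_num, new_size)], num_osds)
    return projected <= max_per_osd
```

With a hypothetical layout of one 64-PG pool at size 2 plus six 32-PG pools at size 3 on 3 OSDs, adding another 32-PG pool at size 3 gives about 267 PG replicas per OSD and would be rejected, while the same pools on 6 OSDs would fit, which matches the two remedies above.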

# Download with s3cmd

[root@ceph-1 /]# cd /opt/

[root@ceph-1 opt]# ls

[root@ceph-1 opt]# s3cmd get s3://ceph-s3-devopsdu/ --recursive

[root@ceph-1 opt]# ll devopsdu/*

total 873360

-rw-r--r-- 1 root root 894317568 May 21 06:54 bitnami-lampstack-7.4.14-7-r52-linux-debian-10-x86_64-nami.ova

# Delete with s3cmd

[root@ceph-1 opt]# s3cmd del s3://ceph-s3-devopsdu/devopsdu/bitnami-lampstack-7.4.14-7-r52-linux-debian-10-x86_64-nami.ova

delete: 's3://ceph-s3-devopsdu/devopsdu/bitnami-lampstack-7.4.14-7-r52-linux-debian-10-x86_64-nami.ova'

[root@ceph-1 opt]# s3cmd ls s3://ceph-s3-devopsdu/devopsdu/devopsdu

DIR s3://ceph-s3-devopsdu/devopsdu/devopsdu/

# To delete a whole directory, add --recursive

[root@ceph-1 opt]# s3cmd del s3://ceph-s3-devopsdu/devopsdu/ --recursive

[root@ceph-1 opt]# s3cmd ls s3://ceph-s3-devopsdu/

The data ultimately ends up in the RGW pools

[root@ceph-1 opt]# cd /root/ceph-deploy/

[root@ceph-1 ceph-deploy]# ceph osd lspools

1 devopsdu

2 .rgw.root

3 default.rgw.control

4 default.rgw.meta

5 default.rgw.log

6 default.rgw.buckets.index

7 default.rgw.buckets.non-ec

8 default.rgw.buckets.data

[root@ceph-1 ceph-deploy]# rados -p default.rgw.buckets.data ls

[root@ceph-1 ceph-deploy]# rados -p default.rgw.buckets.index ls

.dir.5e5f41d1-6135-459e-9e37-13ff82b26552.5070.3

.dir.5e5f41d1-6135-459e-9e37-13ff82b26552.5070.1

.dir.5e5f41d1-6135-459e-9e37-13ff82b26552.5070.2

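# index holds the bucket indexes, data holds the object data

9: CephFS file system

Why CephFS?

An RBD image cannot safely be shared by multiple hosts writing at the same time, so when many clients need to write to the same storage, the CephFS file system is needed.

Deploy the MDS daemons for high availability

[root@ceph-1 ceph-deploy]# ceph-deploy mds create ceph-1 ceph-2 ceph-3

# ceph-1, ceph-2 and ceph-3 all join the cluster as MDS daemons

Three MDS daemons are now running, all in the standby state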

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 10h)

mgr: ceph-1(active, since 10h), standbys: ceph-2, ceph-3

mds: 3 up:standby # the three MDS daemons

osd: 6 osds: 6 up (since 26m), 6 in (since 26m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 8 pools, 288 pgs

objects: 666 objects, 1.7 GiB

usage: 11 GiB used, 49 GiB / 60 GiB avail

pgs: 288 active+clean

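# There is no file system yet, so all three MDS daemons are up but waiting in standby

# Create pools

A Ceph file system needs at least two RADOS pools: one for data and one for metadata.

1: Use a higher replication level for the metadata pool, because losing any data in it can make the whole file system inaccessible

2: Use low-latency storage such as SSDs for the metadata pool, because it directly affects the latency of file system operations as seen by clients

3: The data pool used when creating the file system is the default data pool. It stores all inode backtrace information, which is used for hard link management and disaster recovery. Therefore every inode created in CephFS has at least one object in the default data pool.

# Create the pools: one for data and one for metadata

# Create a pool named cephfs_data with 64 PGs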

[root@ceph-1 ceph-deploy]# ceph osd pool create cephfs_data 64 64

pool 'cephfs_data' created

[root@ceph-1 ceph-deploy]# ceph osd pool create cephfs_metadata 64 64

pool 'cephfs_metadata' created

[root@ceph-1 ceph-deploy]# ceph osd pool ls

---

cephfs_data

cephfs_metadata

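# The metadata pool usually holds at most a few GB of data, so a small PG count is recommended; 64 or 128 is common even in large clusters

# Create the file system

Next, create the file system and associate it with the two pools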

[root@ceph-1 ceph-deploy]# ceph fs new cephfs_devopsdu cephfs_metadata cephfs_data

new fs with metadata pool 10 and data pool 9

# cephfs_devopsdu is the file system name

# cephfs_metadata is the metadata pool

# cephfs_data is the data pool

After creation, check it with

[root@ceph-1 ceph-deploy]# ceph fs ls

name: cephfs_devopsdu, metadata pool: cephfs_metadata, data pools: [cephfs_data ]

# Now one MDS is active and the other two are standby

[root@ceph-1 ceph-deploy]# ceph -s

cluster:

id: fb39bc5c-ac88-4b06-a580-5df5611ee976

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 10h)

mgr: ceph-1(active, since 11h), standbys: ceph-2, ceph-3

mds: cephfs_devopsdu:1 {0=ceph-1=up:active} 2 up:standby

osd: 6 osds: 6 up (since 31m), 6 in (since 31m)

rgw: 1 daemon active (ceph-1)

task status:

data:

pools: 10 pools, 416 pgs

objects: 688 objects, 1.7 GiB

usage: 11 GiB used, 49 GiB / 60 GiB avail

pgs: 416 active+clean

Using CephFS

# Mount with the kernel driver

# Create a mount point

[root@ceph-1 ceph-deploy]# mkdir /devopsdu

# Mount it

[root@ceph-1 ceph-deploy]# mount -t ceph ceph-1:6789:/ /devopsdu -o name=admin

[root@ceph-1 ceph-deploy]# df

---

10.0.0.10:6789:/ 15781888 0 15781888 0% /devopsdu

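# ceph-1 is the monitor node (resolved to 10.0.0.10), / is the CephFS path to mount, /devopsdu is the local mount point, and name=admin mounts with the admin user's credentials

# The size shown is the space available to the CephFS data pool (roughly the cluster's free space divided by the replica count), not a dedicated allocation

After mounting, the kernel has loaded the ceph module automatically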

[root@ceph-1 ceph-deploy]# lsmod | grep ceph

ceph 363016 1

libceph 306750 2 rbd,ceph

dns_resolver 13140 1 libceph

libcrc32c 12644 2 xfs,libceph

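# The kernel client performs somewhat better, but some environments lack kernel support, in which case the user-space FUSE client can be used

Mounting with FUSE in user space

User-space mounting uses the ceph-fuse client, which must be installed separately

[root@ceph-1 ceph-deploy]# yum install ceph-fuse -y

# Create a local mount point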

[root@ceph-1 ceph-deploy]# mkdir /mnt/devopsdu_fuse

[root@ceph-1 ceph-deploy]# ceph-fuse -n client.admin -m 10.0.0.10:6789 /mnt/devopsdu_fuse/

ceph-fuse[20601]: starting ceph client

2022-05-21 16:13:35.279 7f4a51e23f80 -1 init, newargv = 0x5632d094e370 newargc=9

ceph-fuse[20601]: starting fuse

[root@ceph-1 ceph-deploy]# df -Th

---

10.0.0.10:6789:/ ceph 16G 0 16G 0% /devopsdu

ceph-fuse fuse.ceph-fuse 16G 0 16G 0% /mnt/devopsdu_fuse

1: client.admin is the default admin user

2: 10.0.0.10:6789 is the monitor address (several can be given, comma-separated)

# If no monitor is specified, the monitors in /etc/ceph/ceph.conf are used
