Sensitive-word detection and handling in Python

Option 1: detection with jieba word segmentation

Define the word lists

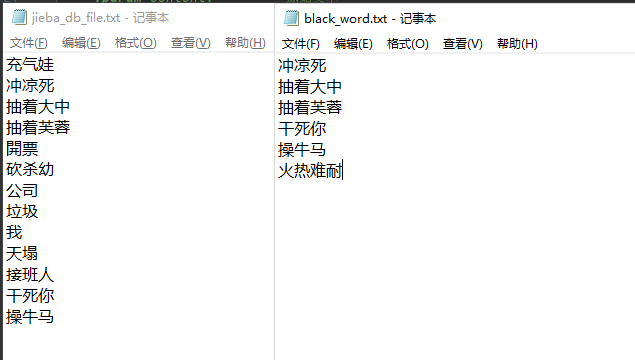

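1. Sensitive-word list: black_word.txt

2. jieba segmentation dictionary: jieba_db_file.txt (it must contain every entry of the sensitive-word list, so that jieba keeps each sensitive word as a single token instead of splitting it into smaller pieces)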
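Because the jieba dictionary has to include every sensitive word, it helps to keep the two files in sync automatically. This is a minimal sketch that assumes both files are UTF-8 with one entry per line. `merge_into_jieba_dict` is a hypothetical helper, not part of jieba:

```python
from pathlib import Path


def merge_into_jieba_dict(black_word_file, jieba_dict_file):
    """Append to jieba_dict_file every sensitive word it does not contain yet.

    Returns the list of words that were appended.
    """
    dict_path = Path(jieba_dict_file)
    # Sensitive-word file: one word per line; blank lines are skipped
    black_words = [
        line.strip()
        for line in Path(black_word_file).read_text(encoding="utf-8").splitlines()
        if line.strip()
    ]
    # jieba user-dict lines look like "word [freq] [tag]"; the word is the first field
    text = dict_path.read_text(encoding="utf-8") if dict_path.exists() else ""
    existing = {line.split()[0] for line in text.splitlines() if line.split()}

    missing = []
    for word in black_words:
        if word not in existing:
            existing.add(word)  # also drops duplicates inside the sensitive-word file
            missing.append(word)

    if missing:
        # Start on a fresh line if the dictionary file lacks a trailing newline
        prefix = "" if not text or text.endswith("\n") else "\n"
        with dict_path.open("a", encoding="utf-8") as f:
            # Word only: jieba works out a frequency itself when it is omitted
            f.write(prefix + "\n".join(missing) + "\n")
    return missing
```

Alternatively, `jieba.add_word(word)` registers a word at runtime without touching the dictionary file.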

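(For simplicity I just use text files here. These word lists can also be stored in a database. For a business, adding, deleting, and editing entries through an admin web page is usually more convenient for the operations team.)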
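The next example covers the database option mentioned above. It is a minimal sketch using the standard-library `sqlite3` module. The `black_word` table and the helper names are hypothetical, and a real deployment would more likely use its own database behind the admin page:

```python
import sqlite3


def open_word_db(path=":memory:"):
    """Open (or create) a database holding one sensitive word per row."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS black_word (word TEXT PRIMARY KEY)")
    return conn


def add_words(conn, words):
    # "with conn" commits on success; INSERT OR IGNORE skips words already stored
    with conn:
        conn.executemany(
            "INSERT OR IGNORE INTO black_word (word) VALUES (?)", [(w,) for w in words]
        )


def remove_word(conn, word):
    with conn:
        conn.execute("DELETE FROM black_word WHERE word = ?", (word,))


def load_black_words(conn):
    """Return all stored words as a set, ready for fast membership tests."""
    return {row[0] for row in conn.execute("SELECT word FROM black_word")}
```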

Code example

```python
import os

import jieba

black_word_list = list()


def load_word(file_path):
    # FIXME: where possible, cache the sensitive-word list in a cache database
    # instead of process memory to reduce resource usage
    assert os.path.isfile(file_path), "load_word fail. [{}] file not exist!".format(file_path)
    with open(file_path, 'r', encoding='utf-8') as wf:
        _lines = wf.readlines()
    tmp_list = [w.strip() for w in _lines if w.strip() != '']
    return tmp_list


def set_jieba_db_cache(jieba_db_file):
    # Load the custom dictionary so jieba keeps sensitive words as single tokens
    jieba.load_userdict(jieba_db_file)


def algorithm_word_jieba_cut(content, replace_word="***"):
    """
    Sensitive-word detection algorithm based on jieba word segmentation.

    :param content: original text
    :param replace_word: string that replaces sensitive words
    :return: (list of detected sensitive words, processed text)
    """
    global black_word_list
    filter_word_list = []
    content_word_list = jieba.cut(content)  # segment the text with jieba
    tmp_rnt_content = ''
    last_not_black = True  # whether the previous token was NOT a sensitive word
    for word in content_word_list:
        # TODO: if black_word_list becomes large, resource usage matters; consider
        # fetching it from a cache database in fixed-size chunks and matching chunk by chunk
        if word in black_word_list:  # compare against the sensitive-word list
            print("black_word = {}".format(word))
            filter_word_list.append(word)
            # A run of consecutive sensitive tokens collapses into one replacement
            if last_not_black:
                tmp_rnt_content += replace_word
            last_not_black = False
        else:
            tmp_rnt_content += word
            last_not_black = True
    return list(set(filter_word_list)), tmp_rnt_content


def _init():
    global black_word_list
    # Set up the jieba dictionary
    jieba_db_file = r"G:\info\jieba_db_file.txt"
    set_jieba_db_cache(jieba_db_file)
    # Load the sensitive-word list
    file_path = r"G:\info\black_word.txt"
    black_word_list = load_word(file_path)


def main():
    # Initialize the word lists
    _init()
    # Run sensitive-word detection on a sample text (Chinese text containing profanity)
    content_str = "今天非常生气,我想要干死你都,操牛马的。"
    black_words, replace_content = algorithm_word_jieba_cut(content_str, replace_word="***")
    print(black_words)
    print(replace_content)


if __name__ == '__main__':
    main()
```
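The matching loop above only needs an iterable of tokens, so its logic can be checked without jieba or the word files. The sketch below assumes the tokens are already segmented; the hand-written token lists stand in for `jieba.cut` output, and `mask_tokens` is a hypothetical name. It swaps the list lookup for a `set`, which gives O(1) average membership instead of scanning the whole list for every token. Note that segmentation-based matching can miss a sensitive word whenever jieba does not emit it as a whole token.

```python
def mask_tokens(tokens, black_words, replace_word="***"):
    """Replace sensitive tokens; consecutive sensitive tokens become one replace_word.

    Returns (detected words in first-seen order, processed text).
    """
    black_set = set(black_words)  # O(1) average lookup instead of scanning a list
    hits = []
    out = []
    prev_black = False  # was the previous token sensitive?
    for tok in tokens:
        if tok in black_set:
            hits.append(tok)
            if not prev_black:  # only the first token of a run emits the replacement
                out.append(replace_word)
            prev_black = True
        else:
            out.append(tok)
            prev_black = False
    # dict.fromkeys removes duplicates while keeping order (list(set(...)) does not)
    return list(dict.fromkeys(hits)), "".join(out)
```

With jieba loaded, the call would be `mask_tokens(jieba.cut(content_str), black_word_list)`.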

Option 2: detection with a trie (prefix tree)

Define the sensitive-word list

```python
['badword1', 'badword2', 'sensitive']  # sensitive-word list
```

Code example

```python
class TrieNode:
    def __init__(self):
        self.children = {}  # char -> TrieNode
        self.is_end_of_word = False  # True if a sensitive word ends at this node


class Trie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, word):
        node = self.root
        for char in word:
            if char not in node.children:
                node.children[char] = TrieNode()
            node = node.children[char]
        node.is_end_of_word = True


def build_trie(filter_word_list):
    trie = Trie()
    for word in filter_word_list:
        trie.insert(word)
    return trie


def replace_sensitive_words_with_trie(content, trie, replacement='***'):
    result = []
    i = 0
    matched_words = []  # List to store matched sensitive words
    while i < len(content):
        j = i
        node = trie.root
        start_index = None
        match_length = 0
        current_match = ''  # Track the current matching word
        # Try to find a match in the trie; keep walking so the longest match wins
        while j < len(content) and content[j] in node.children:
            current_match += content[j]
            node = node.children[content[j]]
            if node.is_end_of_word:
                start_index = i
                match_length = j - i + 1
            j += 1
        if start_index is not None:
            # If a match was found, add the replacement string to the result
            result.append(replacement)
            matched_words.append(current_match[:match_length])  # Add matched word to list
            i = start_index + match_length
        else:
            # If no match was found, add the current character to the result
            result.append(content[i])
            i += 1
    return ''.join(result), '; '.join(matched_words)


if __name__ == "__main__":
    filter_word_list = ['badword1', 'badword2', 'sensitive']  # sensitive-word list
    content = "This is a badly formed sentence with badword1 inside."  # input sentence
    trie = build_trie(filter_word_list)
    sanitized_content, matched_words = replace_sensitive_words_with_trie(content, trie)
    print(sanitized_content)  # This is a badly formed sentence with *** inside.
    print(matched_words)      # badword1
```
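Because the inner loop keeps walking after the first `is_end_of_word` hit, overlapping entries such as `bad` and `badword1` resolve to the longest one. For highlighting or logging, it can be more useful to report where each match sits than to replace it. The following is a compact sketch of that variant. It uses nested dicts in place of `TrieNode`; `find_spans`, `build` and the `END` sentinel are illustrative names:

```python
END = object()  # sentinel key marking "a word ends here"


def build(words):
    """Nested-dict trie: each level maps a character to the next level."""
    root = {}
    for w in words:
        node = root
        for ch in w:
            node = node.setdefault(ch, {})
        node[END] = True
    return root


def find_spans(content, words):
    """Return (start, end, word) for each leftmost-longest, non-overlapping match."""
    root = build(words)
    spans = []
    i = 0
    while i < len(content):
        node, j, last_end = root, i, None
        while j < len(content) and content[j] in node:
            node = node[content[j]]
            j += 1
            if END in node:
                last_end = j  # remember the longest match found so far
        if last_end is None:
            i += 1
        else:
            spans.append((i, last_end, content[i:last_end]))
            i = last_end  # resume scanning after the match
    return spans
```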

Option 3:

Sensitive-word filtering:

github: https://github.com/observerss/textfilter/blob/master/filter.py

```python
>>> f = DFAFilter()
>>> f.add("sexy")
>>> f.filter("hello sexy baby")
hello **** baby
```
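Unlike Option 2, this output masks each character of the match, so the text keeps its length. To get the same `hello **** baby` behaviour without the repository, one standard-library option is a regular-expression alternation. This swaps in a different technique: it is not a DFA and not the repository's code. `mask_each_char` is a hypothetical name. Sorting the words longest-first makes the alternation prefer the longest entry at each position. For very large lexicons, one huge alternation tends to scale less predictably than the trie/DFA approaches.

```python
import re


def mask_each_char(text, words, repl="*"):
    """Replace every character of each matched word with repl (length-preserving)."""
    # Drop empty entries and duplicates; longest first so "badword1" beats "bad"
    words = sorted({w for w in words if w}, key=len, reverse=True)
    if not words:
        return text
    pattern = re.compile("|".join(re.escape(w) for w in words))
    return pattern.sub(lambda m: repl * len(m.group()), text)
```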

Sensitive-word lexicons:

github:https://github.com/konsheng/Sensitive-lexicon/tree/main/Vocabulary
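The lexicon repository stores its word lists as separate text files. The sketch below merges a local copy of such a folder into one set, ready for `build_trie` or for the jieba dictionary. It assumes UTF-8 files with one word per line, so check the actual file format before relying on it. `load_lexicon_dir` is a hypothetical helper:

```python
from pathlib import Path


def load_lexicon_dir(directory, pattern="*.txt"):
    """Merge every word file in directory (one word per line) into one set."""
    words = set()
    for path in sorted(Path(directory).glob(pattern)):
        # utf-8-sig also strips a leading byte-order mark if a file has one
        for line in path.read_text(encoding="utf-8-sig").splitlines():
            word = line.strip()
            if word:
                words.add(word)
    return words
```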
