RocketMQ Source Code Analysis: Message Consumption

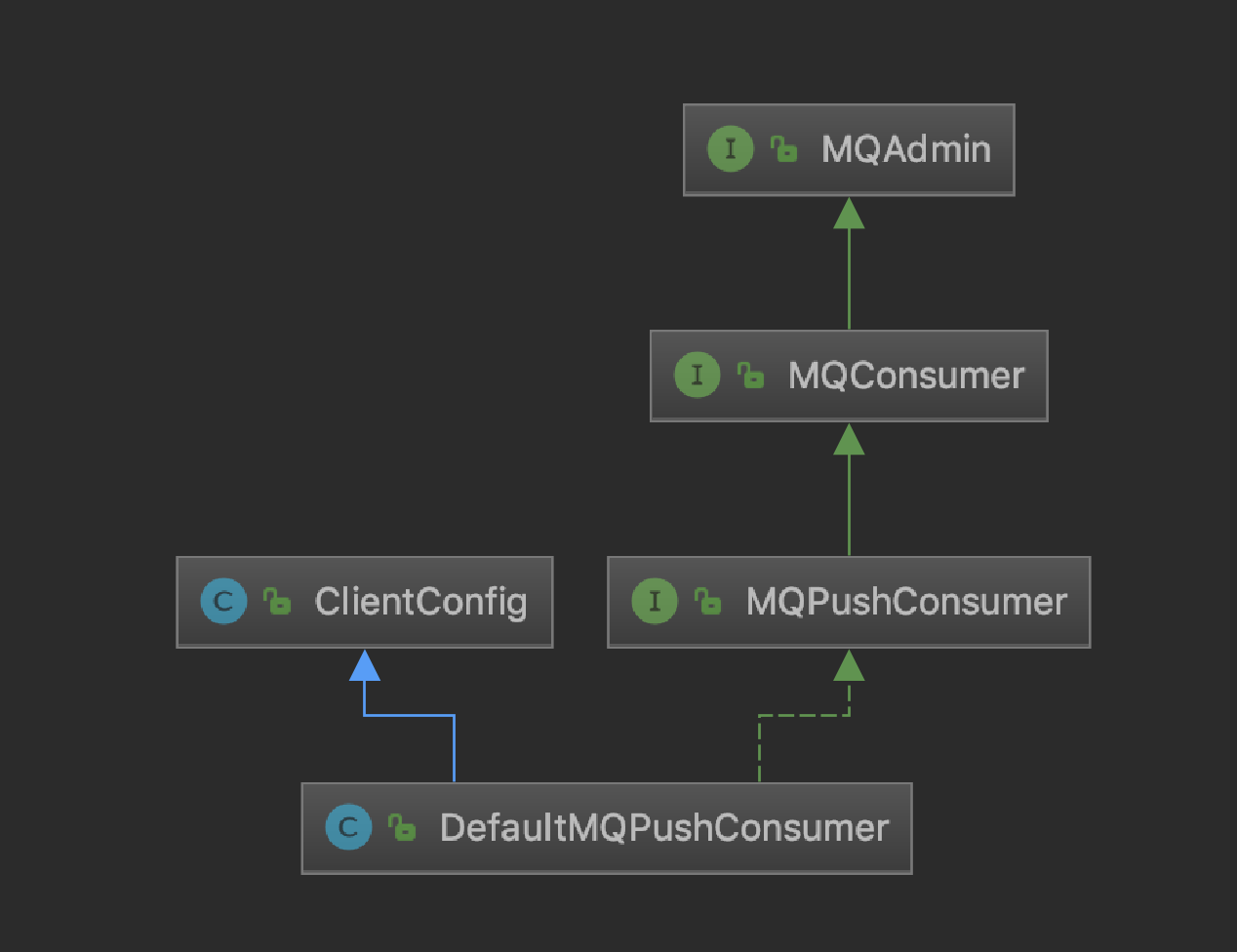

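1. Consumer-related classes

2. Consumer startup

3. Pulling messages

4. Consuming messages

5. Consume queue load balancing

6. Consume progress management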

After reading the code many times, I decided to write things down and sort out the overall structure and the implementation details.

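RocketMQ offers two ways to consume messages: pull mode and push mode (which is built on top of pull mode). Most business scenarios use push mode. In short, if you have no special requirements, use push; it delivers messages in near real time.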

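So when is pull mode appropriate? For example, under high concurrency the consumer may become the bottleneck; with pull mode the consumer fetches messages at a pace that matches its own consumption. Push mode also applies flow control while pulling: if the current ProcessQueue holds more than 1000 unconsumed messages, or the span between its maximum and minimum offsets exceeds 2000, flow control kicks in and the next pull is delayed by 50 ms. In practice, however, machines differ in performance, so these thresholds are hard to tune.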

This article analyzes push mode in clustering mode.

Consumer-related classes

- MQConsumer

sendMessageBack(): if consumption fails, the message is sent back to the broker and consumed again after a delay.

fetchSubscribeMessageQueues(topic): fetches the Set of MessageQueue for the topic from the consumer-side cache.

- MQPushConsumer

registerMessageListener(): registers the message listener; there are two kinds, concurrent and orderly.

subscribe(): subscribes to a topic, optionally with a filter expression subExpression (TAG or SQL92). Class-based filtering is to be removed in version 5.0.0 and is not recommended.

- DefaultMQPushConsumer

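Let's first look at the important parameters of DefaultMQPushConsumer. They are hard to remember on a first read, but their purpose becomes clear after reading more of the code. The class holds a DefaultMQPushConsumerImpl object, which implements most of the consumption logic.

// Consumer group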

private String consumerGroup;

// Message model: clustering by default; broadcasting is the alternative

private MessageModel messageModel = MessageModel.CLUSTERING;

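// Where to start consuming when no consume progress can be found on the broker

// 1. CONSUME_FROM_LAST_OFFSET: start from the maximum offset

// 2. CONSUME_FROM_FIRST_OFFSET: start from the minimum offset

// 3. CONSUME_FROM_TIMESTAMP: start from the timestamp configured via consumeTimestamp (by default a point shortly before the consumer starts)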

private ConsumeFromWhere consumeFromWhere = ConsumeFromWhere.CONSUME_FROM_LAST_OFFSET;

// Message queue allocation (load balancing) strategy in clustering mode

private AllocateMessageQueueStrategy allocateMessageQueueStrategy;

// Subscriptions: topic -> filter expression

private Map<String /* topic */, String /* sub expression */> subscription = new HashMap<String, String>();

// Message listener

private MessageListener messageListener;

// Consume progress (offset) store

private OffsetStore offsetStore;

// Minimum number of consume threads

private int consumeThreadMin = 20;

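// Maximum number of consume threads. The consume thread pool uses an unbounded queue, so this value never takes effect (see how a thread pool grows beyond its core size)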

private int consumeThreadMax = 64;

// Threshold for dynamically adjusting the number of threads

private long adjustThreadPoolNumsThreshold = 100000;

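// Flow control for concurrent consumption: before pulling, the span between the maximum and minimum offsets in the process queue is checked and must not exceed 2000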

private int consumeConcurrentlyMaxSpan = 2000;

// Interval between pull tasks in push mode

private long pullInterval = 0;

// Number of messages the consumer actually hands to the listener per call; this is not the number pulled from the broker

private int consumeMessageBatchMaxSize = 1;

// Number of messages pulled from the broker per request

private int pullBatchSize = 32;

// Whether to update the subscription on every pull

private boolean postSubscriptionWhenPull = false;

// Maximum number of consume retries

private int maxReconsumeTimes = -1;

// Delay before the messages are submitted to the consumer's thread pool again, 1s by default

private long suspendCurrentQueueTimeMillis = 1000;

// Consume timeout, 15 minutes

private long consumeTimeout = 15;
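The remark on consumeThreadMax relies on a general property of java.util.concurrent.ThreadPoolExecutor: threads beyond the core size are only created when the work queue rejects a task, which an unbounded LinkedBlockingQueue never does. The following is a minimal JDK-only sketch of that behaviour; the class UnboundedQueuePoolDemo and its method are hypothetical and not part of RocketMQ.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical demo class, not part of RocketMQ.
final class UnboundedQueuePoolDemo {

    // Submits `tasks` blocking tasks to a pool backed by an unbounded queue and
    // returns the largest number of threads the pool ever had.
    static int largestPoolSize(int core, int max, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
            core, max, 60, TimeUnit.SECONDS, new LinkedBlockingQueue<Runnable>());
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await(); // keep the worker busy so later tasks have to queue
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int largest = pool.getLargestPoolSize();
        release.countDown();
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return largest;
    }

    public static void main(String[] args) {
        // core = 2, max = 8, 50 tasks: the extra tasks wait in the queue
        System.out.println(largestPoolSize(2, 8, 50)); // prints 2
    }
}
```

With core = 2 and max = 8 the pool never grows past two threads, which is why raising consumeThreadMax alone should not be expected to add consume threads.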

Consumer startup

The entry point is the DefaultMQPushConsumerImpl#start() method:

public synchronized void start() throws MQClientException {

switch (this.serviceState) {

case CREATE_JUST:

...

this.serviceState = ServiceState.START_FAILED;

// 1. Check the configuration

this.checkConfig();

// 2. Process the subscriptions: convert Map<String /* topic */, String /* subExpression */> into Map<String, SubscriptionData>. In clustering mode a retry topic is also created for the consumer group.

this.copySubscription();

Step into DefaultMQPushConsumerImpl#copySubscription():

// 2.1. Get the subscriptions from defaultMQPushConsumer

// The subscription data was put there by the subscribe() method

Map<String, String> sub = this.defaultMQPushConsumer.getSubscription();

if (sub != null) {

for (final Map.Entry<String, String> entry : sub.entrySet()) {

final String topic = entry.getKey();

final String subString = entry.getValue();

SubscriptionData subscriptionData = FilterAPI.buildSubscriptionData(this.defaultMQPushConsumer.getConsumerGroup(),

topic, subString);

// 2.2. Save the assembled subscription data into rebalanceImpl

this.rebalanceImpl.getSubscriptionInner().put(topic, subscriptionData);

}

}

if (null == this.messageListenerInner) {

this.messageListenerInner = this.defaultMQPushConsumer.getMessageListener();

}

switch (this.defaultMQPushConsumer.getMessageModel()) {

case BROADCASTING:

break;

case CLUSTERING:

// 2.3. In clustering mode, subscribe to the group's retry topic, named %RETRY% + consumerGroup

final String retryTopic = MixAll.getRetryTopic(this.defaultMQPushConsumer.getConsumerGroup());

SubscriptionData subscriptionData = FilterAPI.buildSubscriptionData(this.defaultMQPushConsumer.getConsumerGroup(),

retryTopic, SubscriptionData.SUB_ALL);

this.rebalanceImpl.getSubscriptionInner().put(retryTopic, subscriptionData);

···

Back to DefaultMQPushConsumerImpl#start():

// 3. Create the MQClientInstance. Within one JVM it is shared by consumers and producers. MQClientManager maintains a factoryTable (a ConcurrentMap) that maps clientId to MQClientInstance

this.mQClientFactory = MQClientManager.getInstance().getAndCreateMQClientInstance(this.defaultMQPushConsumer, this.rpcHook);

// 4. Set up load balancing

this.rebalanceImpl.setConsumerGroup(this.defaultMQPushConsumer.getConsumerGroup());

this.rebalanceImpl.setMessageModel(this.defaultMQPushConsumer.getMessageModel());

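// 5. Queue allocation strategy. The default spreads the queues evenly across consumers; the author's advice is not to rely on the default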

this.rebalanceImpl.setAllocateMessageQueueStrategy(this.defaultMQPushConsumer.getAllocateMessageQueueStrategy());

this.rebalanceImpl.setmQClientFactory(this.mQClientFactory);

// 6. pullAPIWrapper wraps the pull API: it sends pull requests and processes the pulled results

this.pullAPIWrapper = new PullAPIWrapper(

mQClientFactory,

this.defaultMQPushConsumer.getConsumerGroup(), isUnitMode());

this.pullAPIWrapper.registerFilterMessageHook(filterMessageHookList);

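Next comes the consume progress handling, still in DefaultMQPushConsumerImpl#start():

// 7. Consume progress store: clustering mode uses the remote store RemoteBrokerOffsetStore, broadcasting mode uses the local store LocalFileOffsetStore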

if (this.defaultMQPushConsumer.getOffsetStore() != null) {

this.offsetStore = this.defaultMQPushConsumer.getOffsetStore();

} else {

switch (this.defaultMQPushConsumer.getMessageModel()) {

case BROADCASTING:

this.offsetStore = new LocalFileOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

case CLUSTERING:

this.offsetStore = new RemoteBrokerOffsetStore(this.mQClientFactory, this.defaultMQPushConsumer.getConsumerGroup());

break;

default:

break;

}

this.defaultMQPushConsumer.setOffsetStore(this.offsetStore);

}

// 8. Load the consume progress

this.offsetStore.load();

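offsetStore is the object that manages consume progress. Take RemoteBrokerOffsetStore: in push mode the progress is ultimately persisted on the broker, but the consumer also keeps it in memory. The fields of RemoteBrokerOffsetStore: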

public class RemoteBrokerOffsetStore implements OffsetStore {

private final static InternalLogger log = ClientLogger.getLog();

// MQ client instance, shared by the consumers and producers of the same client

private final MQClientInstance mQClientFactory;

// Consumer group

private final String groupName;

// Consume progress (kept in memory)

private ConcurrentMap<MessageQueue, AtomicLong> offsetTable =

new ConcurrentHashMap<MessageQueue, AtomicLong>();

public RemoteBrokerOffsetStore(MQClientInstance mQClientFactory, String groupName) {

this.mQClientFactory = mQClientFactory;

this.groupName = groupName;

}

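RemoteBrokerOffsetStore has an offsetTable field, which stores consume progress in the consumer's memory; consume progress is covered in detail later. The load() call in step 8 is an empty method in RemoteBrokerOffsetStore. Why it is empty remains a todo here (presumably because in clustering mode the progress lives on the broker, so there is nothing local to load). Back to DefaultMQPushConsumerImpl#start():

// 9. Determine whether consumption is orderly or concurrent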

if (this.getMessageListenerInner() instanceof MessageListenerOrderly) {

this.consumeOrderly = true;

this.consumeMessageService =

new ConsumeMessageOrderlyService(this, (MessageListenerOrderly) this.getMessageListenerInner());

} else if (this.getMessageListenerInner() instanceof MessageListenerConcurrently) {

this.consumeOrderly = false;

this.consumeMessageService =

new ConsumeMessageConcurrentlyService(this, (MessageListenerConcurrently) this.getMessageListenerInner());

}

// 10. Start the consume message service

this.consumeMessageService.start();

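MessageListenerInner is simply the MessageListener, which was set when we registered the consume callback. Orderly and concurrent consumption each create a different consumeMessageService, the class that holds the consumption logic. The service is then started; let's look inside it: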

public void start() {

this.cleanExpireMsgExecutors.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

cleanExpireMsg();

}

}, this.defaultMQPushConsumer.getConsumeTimeout(), this.defaultMQPushConsumer.getConsumeTimeout(), TimeUnit.MINUTES);

}

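consumeMessageService.start() starts a task that cleans up expired messages. The expiry time is 15 minutes; the cleanup runs every 15 minutes, with an initial delay of 15 minutes. Back to DefaultMQPushConsumerImpl#start():

// 11. Register the consumer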

boolean registerOK = mQClientFactory.registerConsumer(this.defaultMQPushConsumer.getConsumerGroup(), this);

if (!registerOK) {

this.serviceState = ServiceState.CREATE_JUST;

this.consumeMessageService.shutdown();

throw new MQClientException("The consumer group[" + this.defaultMQPushConsumer.getConsumerGroup()

+ "] has been created before, specify another name please." + FAQUrl.suggestTodo(FAQUrl.GROUP_NAME_DUPLICATE_URL),

null);

}

Step into mQClientFactory.registerConsumer(); mQClientFactory is the MQClientInstance created in step 3:

public boolean registerConsumer(final String group, final MQConsumerInner consumer) {

if (null == group || null == consumer) {

return false;

}

MQConsumerInner prev = this.consumerTable.putIfAbsent(group, consumer);

if (prev != null) {

log.warn("the consumer group[" + group + "] exist already.");

return false;

}

return true;

}

registerConsumer only puts the consumer into the consumerTable of MQClientInstance; it does not register anything with the NameServer. Back to DefaultMQPushConsumerImpl#start():

// 12. Start the MQClientInstance

mQClientFactory.start();

log.info("the consumer [{}] start OK.", this.defaultMQPushConsumer.getConsumerGroup());

this.serviceState = ServiceState.RUNNING;

break;

The MQClientInstance is started. Let's see what its start method does:

public void start() throws MQClientException {

synchronized (this) {

switch (this.serviceState) {

case CREATE_JUST:

this.serviceState = ServiceState.START_FAILED;

// If not specified,looking address from name server

if (null == this.clientConfig.getNamesrvAddr()) {

this.mQClientAPIImpl.fetchNameServerAddr();

}

// Start request-response channel

this.mQClientAPIImpl.start();

//12.1 Scheduled tasks

this.startScheduledTask();

//12.2 Start pull service

this.pullMessageService.start();

//12.3 Start rebalance service

this.rebalanceService.start();

//12.4 Start push service

this.defaultMQProducer.getDefaultMQProducerImpl().start(false);

log.info("the client factory [{}] start OK", this.clientId);

this.serviceState = ServiceState.RUNNING;

break;

12.1 starts the scheduled tasks. Let's see which ones:

private void startScheduledTask() {

// Try to fetch the NameServer address every 2 minutes

if (null == this.clientConfig.getNamesrvAddr()) {

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

MQClientInstance.this.mQClientAPIImpl.fetchNameServerAddr();

} catch (Exception e) {

log.error("ScheduledTask fetchNameServerAddr exception", e);

}

}

}, 1000 * 10, 1000 * 60 * 2, TimeUnit.MILLISECONDS);

}

// Try to update the topic route info every 30s

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

MQClientInstance.this.updateTopicRouteInfoFromNameServer();

} catch (Exception e) {

log.error("ScheduledTask updateTopicRouteInfoFromNameServer exception", e);

}

}

}, 10, this.clientConfig.getPollNameServerInterval(), TimeUnit.MILLISECONDS);

// Clean up offline brokers and send heartbeats to all brokers every 30s

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

MQClientInstance.this.cleanOfflineBroker();

MQClientInstance.this.sendHeartbeatToAllBrokerWithLock();

} catch (Exception e) {

log.error("ScheduledTask sendHeartbeatToAllBroker exception", e);

}

}

}, 1000, this.clientConfig.getHeartbeatBrokerInterval(), TimeUnit.MILLISECONDS);

// Persist the consume offsets every 5s by default

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

MQClientInstance.this.persistAllConsumerOffset();

} catch (Exception e) {

log.error("ScheduledTask persistAllConsumerOffset exception", e);

}

}

}, 1000 * 10, this.clientConfig.getPersistConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

// Check every 1 minute whether the thread pool needs adjusting (note TimeUnit.MINUTES below)

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

MQClientInstance.this.adjustThreadPool();

} catch (Exception e) {

log.error("ScheduledTask adjustThreadPool exception", e);

}

}

}, 1, 1, TimeUnit.MINUTES);

}

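Every 2 minutes: try to fetch the NameServer address

Every 30s: try to update the topic route info

Every 30s: send heartbeats to the brokers

Every 5s by default: persist the consume offsets

Every 1 minute: check whether the thread pool needs adjusting

todo: these scheduled tasks are analyzed later.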

12.2 starts the message pull service.

12.3 starts the rebalance service.

Now back to DefaultMQPushConsumerImpl#start():

// 13. Update the topic route and subscribe info

this.updateTopicSubscribeInfoWhenSubscriptionChanged();

// 14. Check the client against the brokers

this.mQClientFactory.checkClientInBroker();

// 15. Send heartbeats

this.mQClientFactory.sendHeartbeatToAllBrokerWithLock();

// 16. Rebalance

this.mQClientFactory.rebalanceImmediately();

Step 15 sends a heartbeat to all brokers.

Step 16 triggers the first rebalance.

At this point the consumer has finished starting.

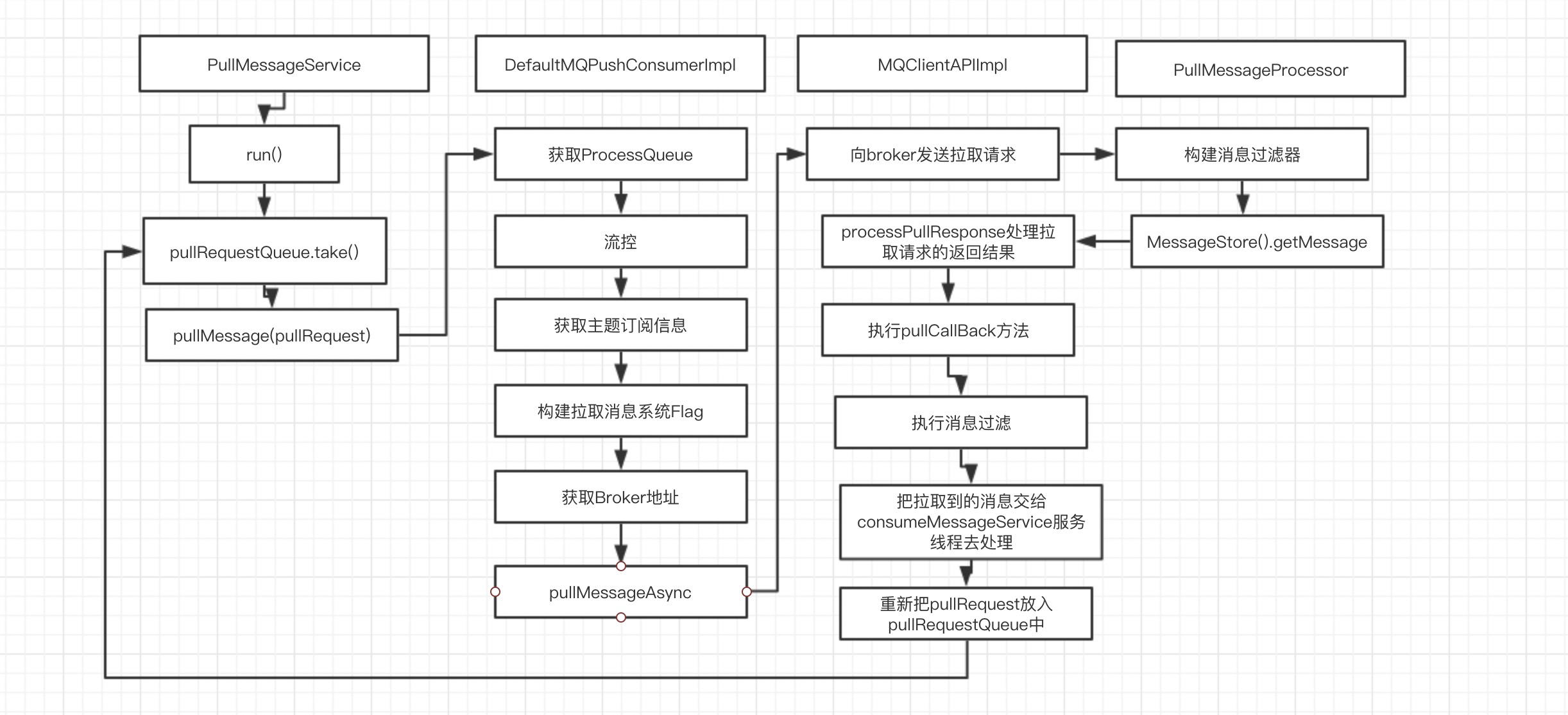

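Pulling messages

This section analyzes the message pull code for push mode in clustering mode.

A consumer group has several consumers, and a topic has several consume queues, so how are the queues distributed among the consumers? Recall from the startup code that org.apache.rocketmq.client.impl.factory.MQClientInstance#start starts the pullMessageService thread. Its job is to pull messages. Here is its run method: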

@Override

public void run() {

while (!this.isStopped()) {

try {

// Take a pullRequest from the LinkedBlockingQueue

PullRequest pullRequest = this.pullRequestQueue.take();

this.pullMessage(pullRequest);

} catch (InterruptedException ignored) {

} catch (Exception e) {

log.error("Pull Message Service Run Method exception", e);

}

}

A pullRequest is taken from pullRequestQueue. If the queue is empty, the thread blocks until a pullRequest is put in. So where do pullRequests get put in? There are two methods:

public void executePullRequestLater(final PullRequest pullRequest, final long timeDelay) {

if (!isStopped()) {

this.scheduledExecutorService.schedule(new Runnable() {

@Override

public void run() {

PullMessageService.this.executePullRequestImmediately(pullRequest);

}

}, timeDelay, TimeUnit.MILLISECONDS);

} else {

log.warn("PullMessageServiceScheduledThread has shutdown");

}

}

public void executePullRequestImmediately(final PullRequest pullRequest) {

try {

this.pullRequestQueue.put(pullRequest);

} catch (InterruptedException e) {

log.error("executePullRequestImmediately pullRequestQueue.put", e);

}

}

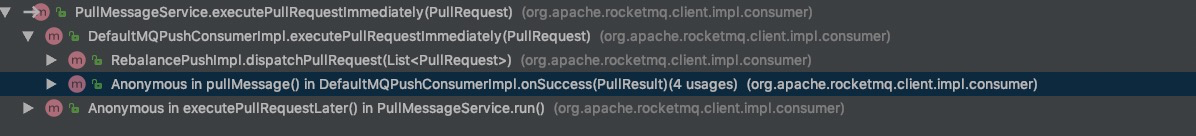

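executePullRequestImmediately enqueues the pullRequest right away, executePullRequestLater enqueues it after a delay. The delayed variant is used in exceptional situations. When is the immediate variant used? Look at the callers of this method:

There are three call sites. Leaving aside the one from the delayed variant, two remain:

- The first is in RebalancePushImpl#dispatchPullRequest, which belongs to message queue load balancing.

- The second is after a pull completes, when the pullRequest is put back into pullRequestQueue.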
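The interplay between the blocking take and the two enqueue paths can be reduced to a small JDK-only model. This is a sketch under simplifying assumptions: PullLoopSketch and its method names are hypothetical, a String stands in for PullRequest, and the real service loops on take() in its own thread.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

// Hypothetical, simplified model of the pull request queue; not RocketMQ code.
final class PullLoopSketch {
    private final BlockingQueue<String> pullRequestQueue = new LinkedBlockingQueue<>();
    private final ScheduledExecutorService scheduler =
        Executors.newSingleThreadScheduledExecutor();

    // Immediate path: the request becomes visible to the pull thread at once.
    void executeImmediately(String request) {
        pullRequestQueue.offer(request);
    }

    // Delayed path: used for back-off (flow control, errors); enqueue after delayMillis.
    void executeLater(String request, long delayMillis) {
        scheduler.schedule(() -> executeImmediately(request), delayMillis, TimeUnit.MILLISECONDS);
    }

    // Waits up to timeoutMillis for the next request; returns null if none arrives.
    String takeNext(long timeoutMillis) {
        try {
            return pullRequestQueue.poll(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    void shutdown() {
        scheduler.shutdownNow();
    }

    public static void main(String[] args) {
        PullLoopSketch service = new PullLoopSketch();
        service.executeLater("queue-1", 50);   // throttled request
        service.executeImmediately("queue-0"); // normal request
        System.out.println(service.takeNext(1000)); // queue-0
        System.out.println(service.takeNext(1000)); // queue-1, once the delay has passed
        service.shutdown();
    }
}
```

Because every pull ends by re-enqueuing its own request, one request per message queue keeps circulating through this loop for as long as the queue is assigned to the consumer.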

Let's see what the PullRequest class contains:

public class PullRequest {

private String consumerGroup;

private MessageQueue messageQueue;

private ProcessQueue processQueue;

private long nextOffset;

private boolean lockedFirst = false;

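1. consumerGroup: the consumer group

2. messageQueue: the message queue to consume

3. processQueue: the object that holds the pulled messages

4. nextOffset: the offset for the next pull

Once a pullRequest is taken from pullRequestQueue, this.pullMessage(pullRequest) is executed: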

private void pullMessage(final PullRequest pullRequest) {

final MQConsumerInner consumer = this.mQClientFactory.selectConsumer(pullRequest.getConsumerGroup());

if (consumer != null) {

DefaultMQPushConsumerImpl impl = (DefaultMQPushConsumerImpl) consumer;

impl.pullMessage(pullRequest);

Here the consumer is cast directly to DefaultMQPushConsumerImpl. Why? Apparently this pull service only serves push mode. What about pull mode? That is a TODO to revisit. Now step into DefaultMQPushConsumerImpl.pullMessage, the key method of the whole pull process:

public void pullMessage(final PullRequest pullRequest) {

// 1. Get the ProcessQueue

final ProcessQueue processQueue = pullRequest.getProcessQueue();

// 2. If dropped == true, return

if (processQueue.isDropped()) {

log.info("the pull request[{}] is dropped.", pullRequest.toString());

return;

}

// 3. Update the last pull timestamp of this queue

pullRequest.getProcessQueue().setLastPullTimestamp(System.currentTimeMillis());

try {

// 4. If the consumer's service state is not ServiceState.RUNNING, retry after a delay (3s by default)

this.makeSureStateOK();

} catch (MQClientException e) {

log.warn("pullMessage exception, consumer state not ok", e);

// 4.1 Analyzed above: enqueue the pullRequest after a delay

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_EXCEPTION);

return;

}

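// 5. If the consumer is paused, retry after a delay (PULL_TIME_DELAY_MILLS_WHEN_SUSPEND). I have not found any internal caller that pauses the consumer; it may be reserved for future use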

if (this.isPause()) {

log.warn("consumer was paused, execute pull request later. instanceName={}, group={}", this.defaultMQPushConsumer.getInstanceName(), this.defaultMQPushConsumer.getConsumerGroup());

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_SUSPEND);

return;

}

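A side note: state flags such as stopped or paused appear in many places. They are usually declared volatile and serve as a way for threads to communicate.

// 6. Pulling is subject to flow control: it is triggered when the number of unconsumed messages in processQueue exceeds the threshold (1000 by default)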

long cachedMessageCount = processQueue.getMsgCount().get();

long cachedMessageSizeInMiB = processQueue.getMsgSize().get() / (1024 * 1024);

if (cachedMessageCount > this.defaultMQPushConsumer.getPullThresholdForQueue()) {

// The PullRequest is put back into the LinkedBlockingQueue after 50ms; a warning is logged once every 1000 occurrences

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message count exceeds the threshold {}, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

// 7. Flow control is also triggered when the total body size of unconsumed messages in processQueue exceeds the threshold (100 MiB by default)

if (cachedMessageSizeInMiB > this.defaultMQPushConsumer.getPullThresholdSizeForQueue()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueFlowControlTimes++ % 1000) == 0) {

log.warn(

"the cached message size exceeds the threshold {} MiB, so do flow control, minOffset={}, maxOffset={}, count={}, size={} MiB, pullRequest={}, flowControlTimes={}",

this.defaultMQPushConsumer.getPullThresholdSizeForQueue(), processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), cachedMessageCount, cachedMessageSizeInMiB, pullRequest, queueFlowControlTimes);

}

return;

}

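// 8. For non-orderly consumption, check the span of processQueue, i.e. the difference between the largest and smallest message offsets; if it exceeds the default of 2000, flow control is triggered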

if (!this.consumeOrderly) {

if (processQueue.getMaxSpan() > this.defaultMQPushConsumer.getConsumeConcurrentlyMaxSpan()) {

this.executePullRequestLater(pullRequest, PULL_TIME_DELAY_MILLS_WHEN_FLOW_CONTROL);

if ((queueMaxSpanFlowControlTimes++ % 1000) == 0) {

log.warn(

"the queue's messages, span too long, so do flow control, minOffset={}, maxOffset={}, maxSpan={}, pullRequest={}, flowControlTimes={}",

processQueue.getMsgTreeMap().firstKey(), processQueue.getMsgTreeMap().lastKey(), processQueue.getMaxSpan(),

pullRequest, queueMaxSpanFlowControlTimes);

}

return;

}

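Steps 6, 7 and 8 above are all flow control checks. They keep the consumer from being overloaded with too many unconsumed messages.

// 9. Get the subscription data of the topic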
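The three checks can be condensed into one decision function. The sketch below is a simplified, hypothetical restatement: FlowControlSketch is not a RocketMQ class, the thresholds are the defaults quoted in this article, and the span is passed in as minimum and maximum offsets rather than read from a ProcessQueue.

```java
// Hypothetical condensation of the three flow-control checks; not RocketMQ code.
final class FlowControlSketch {
    enum Decision { PULL, THROTTLE_COUNT, THROTTLE_SIZE, THROTTLE_SPAN }

    static final int PULL_THRESHOLD_FOR_QUEUE = 1000;      // cached messages
    static final int PULL_THRESHOLD_SIZE_FOR_QUEUE = 100;  // cached size in MiB
    static final int CONSUME_CONCURRENTLY_MAX_SPAN = 2000; // offset span

    static Decision check(long cachedCount, long cachedBytes,
                          long minOffset, long maxOffset, boolean consumeOrderly) {
        if (cachedCount > PULL_THRESHOLD_FOR_QUEUE) {
            return Decision.THROTTLE_COUNT;
        }
        long cachedMiB = cachedBytes / (1024 * 1024);
        if (cachedMiB > PULL_THRESHOLD_SIZE_FOR_QUEUE) {
            return Decision.THROTTLE_SIZE;
        }
        // The span check only applies to concurrent (non-orderly) consumption.
        if (!consumeOrderly && maxOffset - minOffset > CONSUME_CONCURRENTLY_MAX_SPAN) {
            return Decision.THROTTLE_SPAN;
        }
        return Decision.PULL;
    }

    public static void main(String[] args) {
        System.out.println(check(1001, 0, 0, 0, false));    // THROTTLE_COUNT
        System.out.println(check(10, 0, 100, 2101, false)); // THROTTLE_SPAN
        System.out.println(check(10, 0, 100, 2101, true));  // PULL
    }
}
```

Any THROTTLE result corresponds to re-enqueuing the pull request after 50 ms instead of contacting the broker.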

final SubscriptionData subscriptionData = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());

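The topic is taken from the pullRequest's messageQueue, and the SubscriptionData is looked up in rebalanceImpl by topic. It is needed because the broker has to know which topic to pull from and which tags to filter on.

// 10. Create the callback, which is invoked once the pull from the broker has completed

PullCallback pullCallback = new PullCallback() {

The callback is analyzed later; skip it for now.

// 11. In clustering mode, read the consume offset of the MessageQueue from memory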

boolean commitOffsetEnable = false;

long commitOffsetValue = 0L;

if (MessageModel.CLUSTERING == this.defaultMQPushConsumer.getMessageModel()) {

commitOffsetValue = this.offsetStore.readOffset(pullRequest.getMessageQueue(), ReadOffsetType.READ_FROM_MEMORY);

if (commitOffsetValue > 0) {

commitOffsetEnable = true;

}

}

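The consume offset of the MessageQueue is read from memory. Why from memory, if clustering mode stores consume progress on the broker? Let's keep this question for the section on consume progress.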

String subExpression = null;

boolean classFilter = false;

// 12. The SubscriptionData is fetched a second time here although it was already fetched above; this seems unnecessary

SubscriptionData sd = this.rebalanceImpl.getSubscriptionInner().get(pullRequest.getMessageQueue().getTopic());

if (sd != null) {

if (this.defaultMQPushConsumer.isPostSubscriptionWhenPull() && !sd.isClassFilterMode()) {

// Filter expression

subExpression = sd.getSubString();

}

// Whether class filter mode is used; it is no longer recommended and is to be dropped after version 5.0

classFilter = sd.isClassFilterMode();

}

// 13. Build the system flag for the pull: whether commitOffset, suspend, subExpression and classFilter are enabled

int sysFlag = PullSysFlag.buildSysFlag(

commitOffsetEnable, // commitOffset

true, // suspend

subExpression != null, // subscription

classFilter // class filter

);

// 14. Call the pull API to pull messages

this.pullAPIWrapper.pullKernelImpl(

pullRequest.getMessageQueue(), // the message queue to pull from

subExpression, // subscription expression, as in subscribe(topicName, "expression")

subscriptionData.getExpressionType(),

subscriptionData.getSubVersion(), // subscription version

pullRequest.getNextOffset(), // offset to pull from

this.defaultMQPushConsumer.getPullBatchSize(), // how many messages to pull from the broker

sysFlag, // system flags: FLAG_COMMIT_OFFSET FLAG_SUSPEND FLAG_SUBSCRIPTION FLAG_CLASS_FILTER

commitOffsetValue, // current consume offset of this message queue (from memory)

BROKER_SUSPEND_MAX_TIME_MILLIS, // how long the broker may suspend the request, in milliseconds, 15s by default

CONSUMER_TIMEOUT_MILLIS_WHEN_SUSPEND, // request timeout, 30s by default

CommunicationMode.ASYNC, // asynchronous invocation

pullCallback // pull 回调

);
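The sysFlag built in step 13 is a bit field: each of the four booleans occupies one bit of an int. The sketch below illustrates the technique. PullSysFlagSketch is a hypothetical stand-in, and the bit positions are an assumption that follows the order of the flags listed in the comment above.

```java
// Hypothetical stand-in for the flag packing; bit positions are assumed.
final class PullSysFlagSketch {
    static final int FLAG_COMMIT_OFFSET = 0x1;      // bit 0
    static final int FLAG_SUSPEND = 0x1 << 1;       // bit 1
    static final int FLAG_SUBSCRIPTION = 0x1 << 2;  // bit 2
    static final int FLAG_CLASS_FILTER = 0x1 << 3;  // bit 3

    // Packs the four switches into a single int.
    static int build(boolean commitOffset, boolean suspend,
                     boolean subscription, boolean classFilter) {
        int flag = 0;
        if (commitOffset) flag |= FLAG_COMMIT_OFFSET;
        if (suspend) flag |= FLAG_SUSPEND;
        if (subscription) flag |= FLAG_SUBSCRIPTION;
        if (classFilter) flag |= FLAG_CLASS_FILTER;
        return flag;
    }

    // Tests whether a given flag bit is set.
    static boolean has(int sysFlag, int flag) {
        return (sysFlag & flag) == flag;
    }

    public static void main(String[] args) {
        int sysFlag = build(true, true, false, false);
        System.out.println(sysFlag);                         // 3
        System.out.println(has(sysFlag, FLAG_SUSPEND));      // true
        System.out.println(has(sysFlag, FLAG_CLASS_FILTER)); // false
    }
}
```

Packing the switches this way lets the request header carry them in one integer field, and the receiving side can test each bit independently.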

Step into PullAPIWrapper#pullKernelImpl to see how the pull is implemented:

// 15. Look up the broker

FindBrokerResult findBrokerResult =

this.mQClientFactory.findBrokerAddressInSubscribe(mq.getBrokerName(),

this.recalculatePullFromWhichNode(mq), false);

// 16. If no matching broker is found, refresh the route info from the NameServer and look again

if (null == findBrokerResult) {

this.mQClientFactory.updateTopicRouteInfoFromNameServer(mq.getTopic());

findBrokerResult =

this.mQClientFactory.findBrokerAddressInSubscribe(mq.getBrokerName(),

this.recalculatePullFromWhichNode(mq), false);

}

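The broker is looked up in mQClientFactory by brokerName and brokerId, and finally MQClientAPIImpl#pullMessage is called to pull messages from the broker. MQClientAPIImpl wraps the network communication APIs. To find the broker-side entry point for pull requests, search for RequestCode.PULL_MESSAGE; this leads to PullMessageProcessor#processRequest: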

final GetMessageResult getMessageResult =

this.brokerController.getMessageStore().getMessage(requestHeader.getConsumerGroup(), requestHeader.getTopic(),

requestHeader.getQueueId(), requestHeader.getQueueOffset(), requestHeader.getMaxMsgNums(), messageFilter);

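This method calls MessageStore.getMessage() to fetch the messages. Its parameters are:

String group: consumer group name

String topic: topic name

int queueId: queue ID, i.e. the ID of the ConsumeQueue

long offset: offset to pull from

int maxMsgNums: maximum number of messages to pull

MessageFilter messageFilter: message filter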

GetMessageStatus status = GetMessageStatus.NO_MESSAGE_IN_QUEUE;

// Queue offset to look up

long nextBeginOffset = offset;

// Minimum offset of the current queue

long minOffset = 0;

// Maximum offset of the current queue

long maxOffset = 0;

GetMessageResult getResult = new GetMessageResult();

// Current maximum offset of the commitLog

final long maxOffsetPy = this.commitLog.getMaxOffset();

// Find the consumeQueue by topic and queueId

ConsumeQueue consumeQueue = findConsumeQueue(topic, queueId);

minOffset = consumeQueue.getMinOffsetInQueue();

maxOffset = consumeQueue.getMaxOffsetInQueue();

// The current queue has no messages

if (maxOffset == 0) {

status = GetMessageStatus.NO_MESSAGE_IN_QUEUE;

nextBeginOffset = nextOffsetCorrection(offset, 0);

} else if (offset < minOffset) {

status = GetMessageStatus.OFFSET_TOO_SMALL;

nextBeginOffset = nextOffsetCorrection(offset, minOffset);

} else if (offset == maxOffset) {

status = GetMessageStatus.OFFSET_OVERFLOW_ONE;

nextBeginOffset = nextOffsetCorrection(offset, offset);

} else if (offset > maxOffset) {

status = GetMessageStatus.OFFSET_OVERFLOW_BADLY;

if (0 == minOffset) {

nextBeginOffset = nextOffsetCorrection(offset, minOffset);

} else {

nextBeginOffset = nextOffsetCorrection(offset, maxOffset);

}

}

- maxOffset == 0

- offset < minOffset

- offset == maxOffset

- offset > maxOffset

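All four of these cases are abnormal. Only minOffset <= offset < maxOffset is the normal case (offset == maxOffset is already handled above as OFFSET_OVERFLOW_ONE). After the data has been read from the commitLog, the result is returned: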

getResult.setStatus(status);

getResult.setNextBeginOffset(nextBeginOffset);

getResult.setMaxOffset(maxOffset);

getResult.setMinOffset(minOffset);
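The offset checks in getMessage() amount to classifying the requested offset against the queue's range. The following sketch restates that if/else chain as a pure function. OffsetCheckSketch and its IN_RANGE value are hypothetical additions for illustration; the other names mirror the GetMessageStatus values quoted in this article.

```java
// Hypothetical restatement of the offset range checks; not RocketMQ code.
final class OffsetCheckSketch {
    enum Status {
        NO_MESSAGE_IN_QUEUE, OFFSET_TOO_SMALL, OFFSET_OVERFLOW_ONE,
        OFFSET_OVERFLOW_BADLY, IN_RANGE
    }

    // Same branch order as the quoted code: empty queue, too small,
    // exactly at the end, beyond the end, otherwise readable.
    static Status classify(long offset, long minOffset, long maxOffset) {
        if (maxOffset == 0) {
            return Status.NO_MESSAGE_IN_QUEUE;
        } else if (offset < minOffset) {
            return Status.OFFSET_TOO_SMALL;
        } else if (offset == maxOffset) {
            return Status.OFFSET_OVERFLOW_ONE;
        } else if (offset > maxOffset) {
            return Status.OFFSET_OVERFLOW_BADLY;
        }
        return Status.IN_RANGE; // minOffset <= offset < maxOffset
    }

    public static void main(String[] args) {
        System.out.println(classify(5, 0, 0));   // NO_MESSAGE_IN_QUEUE
        System.out.println(classify(1, 3, 10));  // OFFSET_TOO_SMALL
        System.out.println(classify(10, 3, 10)); // OFFSET_OVERFLOW_ONE
        System.out.println(classify(11, 3, 10)); // OFFSET_OVERFLOW_BADLY
        System.out.println(classify(3, 3, 10));  // IN_RANGE
    }
}
```

OFFSET_OVERFLOW_ONE is the common idle case: the consumer has caught up with the queue and is asking for the next message that has not been written yet.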

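The minOffset and maxOffset set on the result are the minimum and maximum offsets of the broker-side consumeQueue. The messages to consume have now been read from the commitLog; back to PullMessageProcessor#processRequest: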

if (getMessageResult.isSuggestPullingFromSlave()) {

responseHeader.setSuggestWhichBrokerId(subscriptionGroupConfig.getWhichBrokerWhenConsumeSlowly());

} else {

responseHeader.setSuggestWhichBrokerId(MixAll.MASTER_ID);

}

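If the result suggests pulling from a slave, the response header carries the brokerId to use for the next pull; otherwise it points to the master. In addition, if the commit-offset flag is set and the current node is the master, the consume progress is updated. Consume progress is analyzed separately later.

With the broker-side handling done, we return to the consumer and step into MQClientAPIImpl#processPullResponse: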

PullStatus pullStatus = PullStatus.NO_NEW_MSG;

switch (response.getCode()) {

case ResponseCode.SUCCESS:

pullStatus = PullStatus.FOUND;

break;

case ResponseCode.PULL_NOT_FOUND:

pullStatus = PullStatus.NO_NEW_MSG;

break;

case ResponseCode.PULL_RETRY_IMMEDIATELY:

pullStatus = PullStatus.NO_MATCHED_MSG;

break;

case ResponseCode.PULL_OFFSET_MOVED:

pullStatus = PullStatus.OFFSET_ILLEGAL;

break;

default:

throw new MQBrokerException(response.getCode(), response.getRemark());

}

The pull result is derived from the response code returned by the broker, and a PullResultExt is assembled:

return new PullResultExt(pullStatus, responseHeader.getNextBeginOffset(), responseHeader.getMinOffset(),

responseHeader.getMaxOffset(), null, responseHeader.getSuggestWhichBrokerId(), response.getBody());

Now pullCallback.onSuccess(pullResult) is called. Step into pullCallback:

pullResult = DefaultMQPushConsumerImpl.this.pullAPIWrapper.processPullResult(pullRequest.getMessageQueue(), pullResult,

subscriptionData);

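pullAPIWrapper.processPullResult decodes the pulled data into individual messages, applies tag-based filtering, runs the hooks, and fills MsgFoundList. From here on we follow the normal path, i.e. the case where messages were found: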

long prevRequestOffset = pullRequest.getNextOffset();

pullRequest.setNextOffset(pullResult.getNextBeginOffset());

...

if (pullResult.getMsgFoundList() == null || pullResult.getMsgFoundList().isEmpty()) {

DefaultMQPushConsumerImpl.this.executePullRequestImmediately(pullRequest);

}

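First the offset for the next pull is updated. If MsgFoundList is empty, the next pull is triggered immediately. Why can it be empty? Because of tag filtering: the broker only checks the hashcode of the tag. Why was it designed this way? Another open question: how can the messages filtered out at this point still be consumed by other consumers, given that the broker has already committed the consume progress?

boolean dispatchToConsume = processQueue.putMessage(pullResult.getMsgFoundList());

// Submit the messages to the consume message service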
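One likely reason for the two-stage filter is that a consume queue entry stores only a fixed-size hash of the tag, so the broker can filter cheaply without reading message bodies, while hash collisions are resolved on the consumer by comparing the actual tag string. The sketch below illustrates the idea with plain Java strings; TagFilterSketch and its methods are hypothetical, and String.hashCode() is used only as an example hash.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration of hash-based pre-filtering plus exact matching.
final class TagFilterSketch {

    // Broker-side stage: keeps every tag whose hash equals the subscribed tag's hash.
    static List<String> filterByHash(List<String> tags, String subscribedTag) {
        List<String> kept = new ArrayList<>();
        for (String tag : tags) {
            if (tag.hashCode() == subscribedTag.hashCode()) {
                kept.add(tag);
            }
        }
        return kept;
    }

    // Consumer-side stage: keeps only exact tag matches.
    static List<String> filterByValue(List<String> tags, String subscribedTag) {
        List<String> kept = new ArrayList<>();
        for (String tag : tags) {
            if (tag.equals(subscribedTag)) {
                kept.add(tag);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        // "Aa" and "BB" have the same String.hashCode() (2112), "CC" does not.
        List<String> stored = Arrays.asList("Aa", "BB", "CC");
        List<String> fromBroker = filterByHash(stored, "Aa");
        System.out.println(fromBroker);                      // [Aa, BB]
        System.out.println(filterByValue(fromBroker, "Aa")); // [Aa]
    }
}
```

If a pull returns only colliding tags, the second stage can filter everything out, which is one way MsgFoundList ends up empty even though the broker reported messages.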

DefaultMQPushConsumerImpl.this.consumeMessageService.submitConsumeRequest(

pullResult.getMsgFoundList(),

processQueue,

pullRequest.getMessageQueue(),

dispatchToConsume);

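msgFoundList is stored in processQueue, backed by msgTreeMap; processQueue uses a read-write lock, which is analyzed later. The pulled messages are then submitted to consumeMessageService, which is the entry point for consuming them. This completes one pull. Afterwards the pullRequest is put back into pullRequestQueue and the next pull begins. The flow chart below shows the whole pull process.

Consuming messages

Once messages have been pulled, the consumer has to consume them. This is mainly the job of consumeMessageService. Let's look at its constructor first: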

public ConsumeMessageConcurrentlyService(DefaultMQPushConsumerImpl defaultMQPushConsumerImpl,

MessageListenerConcurrently messageListener) {

this.defaultMQPushConsumerImpl = defaultMQPushConsumerImpl;

this.messageListener = messageListener;

this.defaultMQPushConsumer = this.defaultMQPushConsumerImpl.getDefaultMQPushConsumer();

this.consumerGroup = this.defaultMQPushConsumer.getConsumerGroup();

this.consumeRequestQueue = new LinkedBlockingQueue<Runnable>();

this.consumeExecutor = new ThreadPoolExecutor(

// Core pool size: consumeThreadMin

this.defaultMQPushConsumer.getConsumeThreadMin(),

// Maximum pool size: consumeThreadMax

this.defaultMQPushConsumer.getConsumeThreadMax(),

1000 * 60,

TimeUnit.MILLISECONDS,

this.consumeRequestQueue,

// Thread name prefix in the pool: ConsumeMessageThread_

new ThreadFactoryImpl("ConsumeMessageThread_"));

this.scheduledExecutorService = Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("ConsumeMessageScheduledThread_"));

this.cleanExpireMsgExecutors = Executors.newSingleThreadScheduledExecutor(new ThreadFactoryImpl("CleanExpireMsgScheduledThread_"));

}

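The constructor creates a thread pool named consumeExecutor. In concurrent mode this pool is what actually runs the message consumption. Let's return to the entry point of consumption, the call in the callback mentioned above:

// Submit the messages to the consume message service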

DefaultMQPushConsumerImpl.this.consumeMessageService.submitConsumeRequest(

pullResult.getMsgFoundList(),

processQueue,

pullRequest.getMessageQueue(),

dispatchToConsume);

The pulled messages (32 by default) are submitted to consumeMessageService. Step into submitConsumeRequest:

final int consumeBatchSize = this.defaultMQPushConsumer.getConsumeMessageBatchMaxSize();

if (msgs.size() <= consumeBatchSize) {

ConsumeRequest consumeRequest = new ConsumeRequest(msgs, processQueue, messageQueue);

try {

// consumeExecutor: the consumer's consume thread pool

this.consumeExecutor.submit(consumeRequest);

} catch (RejectedExecutionException e) {

this.submitConsumeRequestLater(consumeRequest);

}

}

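Step 1: get the consume batch size. I have always found the name of this field a little ambiguous: it controls how many messages the consumer processes per call, not how many are pulled from the broker.

Step 2: check whether the number of messages pulled from the broker is greater than consumeBatchSize. consumeBatchSize is usually set to 1, which is also the default, so let's go straight to the else branch: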

for (int total = 0; total < msgs.size(); ) {

List<MessageExt> msgThis = new ArrayList<MessageExt>(consumeBatchSize);

for (int i = 0; i < consumeBatchSize; i++, total++) {

if (total < msgs.size()) {

msgThis.add(msgs.get(total));

} else {

break;

}

}

ConsumeRequest consumeRequest = new ConsumeRequest(msgThis, processQueue, messageQueue);

try {

this.consumeExecutor.submit(consumeRequest);

} catch (RejectedExecutionException e) {

for (; total < msgs.size(); total++) {

msgThis.add(msgs.get(total));

}

this.submitConsumeRequestLater(consumeRequest);

}

}
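The loop above is a plain partitioning of the pulled list into chunks of at most consumeBatchSize. A minimal, generic sketch of that step follows; BatchPartitionSketch is a hypothetical helper, and integers stand in for messages.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper showing the partitioning step in isolation.
final class BatchPartitionSketch {

    // Splits msgs into consecutive batches of at most batchSize elements.
    static <T> List<List<T>> partition(List<T> msgs, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < msgs.size(); start += batchSize) {
            int end = Math.min(start + batchSize, msgs.size());
            batches.add(new ArrayList<>(msgs.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> pulled = Arrays.asList(1, 2, 3, 4, 5);
        System.out.println(partition(pulled, 2)); // [[1, 2], [3, 4], [5]]
        System.out.println(partition(pulled, 1).size()); // 5
    }
}
```

With the defaults (pullBatchSize = 32, consumeMessageBatchMaxSize = 1) one pull therefore becomes up to 32 separate consume tasks, each carrying a single message.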

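submitConsumeRequest thus splits the messages into groups of consumeBatchSize, wraps each group in a ConsumeRequest, and submits it to the consumeExecutor thread pool. Here is the run method of ConsumeRequest: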

public void run() {

if (this.processQueue.isDropped()) {

log.info("the message queue not be able to consume, because it's dropped. group={} {}", ConsumeMessageConcurrentlyService.this.consumerGroup, this.messageQueue);

return;

}

Step 1: check the dropped flag of processQueue. The flag is set during rebalancing and decides whether messages pulled for this processQueue should still be consumed.

MessageListenerConcurrently listener = ConsumeMessageConcurrentlyService.this.messageListener;

ConsumeConcurrentlyContext context = new ConsumeConcurrentlyContext(messageQueue);

ConsumeConcurrentlyStatus status = null;

Step 2: get the message listener defined by the business application.

ConsumeMessageContext consumeMessageContext = null;

if (ConsumeMessageConcurrentlyService.this.defaultMQPushConsumerImpl.hasHook()) {

consumeMessageContext = new ConsumeMessageContext();

consumeMessageContext.setConsumerGroup(defaultMQPushConsumer.getConsumerGroup());

consumeMessageContext.setProps(new HashMap<String, String>());

consumeMessageContext.setMq(messageQueue);

consumeMessageContext.setMsgList(msgs);

consumeMessageContext.setSuccess(false);

ConsumeMessageConcurrentlyService.this.defaultMQPushConsumerImpl.executeHookBefore(consumeMessageContext);

}

Step 3: if hooks are registered, run their before method.

// Reset the retry topic, consume the messages, and obtain the consume result of this batch:

long beginTimestamp = System.currentTimeMillis();

boolean hasException = false;

ConsumeReturnType returnType = ConsumeReturnType.SUCCESS;

try {

ConsumeMessageConcurrentlyService.this.resetRetryTopic(msgs);

if (msgs != null && !msgs.isEmpty()) {

for (MessageExt msg : msgs) {

MessageAccessor.setConsumeStartTimeStamp(msg, String.valueOf(System.currentTimeMillis()));

}

}

status = listener.consumeMessage(Collections.unmodifiableList(msgs), context);

} catch (Throwable e) {

log.warn("consumeMessage exception: {} Group: {} Msgs: {} MQ: {}",

RemotingHelper.exceptionSimpleDesc(e),

ConsumeMessageConcurrentlyService.this.consumerGroup,

msgs,

messageQueue);

hasException = true;

}

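Step 4: call resetRetryTopic to handle the retry topic of the messages.

Step 5: call listener.consumeMessage, where the business application actually consumes the messages. On success it returns CONSUME_SUCCESS; if something went wrong and a retry is wanted, it returns RECONSUME_LATER.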

if (ConsumeMessageConcurrentlyService.this.defaultMQPushConsumerImpl.hasHook()) {

consumeMessageContext.setStatus(status.toString());

consumeMessageContext.setSuccess(ConsumeConcurrentlyStatus.CONSUME_SUCCESS == status);

ConsumeMessageConcurrentlyService.this.defaultMQPushConsumerImpl.executeHookAfter(consumeMessageContext);

}

Step 6: run the hooks' after method.

// Process the consume result

if (!processQueue.isDropped()) {

ConsumeMessageConcurrentlyService.this.processConsumeResult(status, context, this);

} else {

log.warn("processQueue is dropped without process consume result. messageQueue={}, msgs={}", messageQueue, msgs);

}

Step 7: processConsumeResult handles the consume result. Step into processConsumeResult:

int ackIndex = context.getAckIndex();

if (consumeRequest.getMsgs().isEmpty())

return;

switch (status) {

case CONSUME_SUCCESS:

if (ackIndex >= consumeRequest.getMsgs().size()) {

ackIndex = consumeRequest.getMsgs().size() - 1;

}

int ok = ackIndex + 1;

int failed = consumeRequest.getMsgs().size() - ok;

this.getConsumerStatsManager().incConsumeOKTPS(consumerGroup, consumeRequest.getMessageQueue().getTopic(), ok);

this.getConsumerStatsManager().incConsumeFailedTPS(consumerGroup, consumeRequest.getMessageQueue().getTopic(), failed);

break;

case RECONSUME_LATER:

ackIndex = -1;

this.getConsumerStatsManager().incConsumeFailedTPS(consumerGroup, consumeRequest.getMessageQueue().getTopic(),

consumeRequest.getMsgs().size());

break;

default:

break;

}

Step 8: ackIndex is read from the context, where it is initialized to Integer.MAX_VALUE. On success ackIndex becomes (number of messages - 1); on failure it becomes -1.

case CLUSTERING:

// Clustering mode
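The effect of ackIndex is easiest to see as "the index of the first message that has to be sent back". The sketch below reproduces only that arithmetic; AckIndexSketch is hypothetical, and it assumes, as in the code quoted above, that messages from ackIndex + 1 to the end of the batch are the ones treated as failed.

```java
// Hypothetical reduction of the ackIndex arithmetic; not RocketMQ code.
final class AckIndexSketch {
    static final int DEFAULT_ACK_INDEX = Integer.MAX_VALUE;

    // Returns the index of the first message to send back to the broker.
    // A return value equal to msgCount means nothing has to be sent back.
    static int firstIndexToSendBack(boolean consumeSuccess, int ackIndex, int msgCount) {
        if (consumeSuccess) {
            if (ackIndex >= msgCount) {
                ackIndex = msgCount - 1; // default case: the whole batch succeeded
            }
        } else {
            ackIndex = -1; // RECONSUME_LATER: the whole batch failed
        }
        return ackIndex + 1;
    }

    public static void main(String[] args) {
        System.out.println(firstIndexToSendBack(true, DEFAULT_ACK_INDEX, 3));  // 3
        System.out.println(firstIndexToSendBack(false, DEFAULT_ACK_INDEX, 3)); // 0
        // The listener acknowledged only the first message of the batch:
        System.out.println(firstIndexToSendBack(true, 0, 3));                  // 1
    }
}
```

The third call shows why the field exists at all: a listener that processes a batch can set ackIndex on the context to acknowledge only a prefix of it.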

List<MessageExt> msgBackFailed = new ArrayList<MessageExt>(consumeRequest.getMsgs().size());

for (int i = ackIndex + 1; i < consumeRequest.getMsgs().size(); i++) {

MessageExt msg = consumeRequest.getMsgs().get(i);

// Send the message back to the broker

boolean result = this.sendMessageBack(msg, context);

if (!result) {

msg.setReconsumeTimes(msg.getReconsumeTimes() + 1);

msgBackFailed.add(msg);

}

}

if (!msgBackFailed.isEmpty()) {

consumeRequest.getMsgs().removeAll(msgBackFailed);

// Messages that could not be sent back were removed from this request above; submit them to be consumed again locally later

this.submitConsumeRequestLater(msgBackFailed, consumeRequest.getProcessQueue(), consumeRequest.getMessageQueue());

}

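Step 9: in cluster mode, every message after ackIndex is passed to sendMessageBack. When ackIndex is -1 that is the whole batch. This send-back is the consumer's ACK, so in RocketMQ only failed messages are explicitly ACKed. sendMessageBack sends the failed message back to the broker, and the broker relies on the delayed-message mechanism to schedule a retry according to how many times the message has already been retried. For each retry the broker creates a new message instead of reusing the old one, and once the retry limit is reached the message goes to the dead-letter queue. In my own work I pull the messages that are about to enter the dead-letter queue, store them in MongoDB as JSON, show them in a UI, and offer a retry function there to handle them. If the send-back itself fails, the message is consumed again locally after a 5s delay.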
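The split between "sent back to the broker" and "send-back failed, retry locally" can be sketched as follows. This is a simplified model under stated assumptions: messages are plain strings, SendBackSketch is a hypothetical class, and the sendMessageBack predicate stands in for the real network call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

final class SendBackSketch {
    /**
     * Tries to send back every message after ackIndex.
     * Messages whose send-back failed are removed from the batch (so their
     * offsets are not committed) and returned for a delayed local retry.
     */
    static List<String> sendBackFailures(List<String> batch, int ackIndex,
                                         Predicate<String> sendMessageBack) {
        List<String> msgBackFailed = new ArrayList<>();
        for (int i = ackIndex + 1; i < batch.size(); i++) {
            String msg = batch.get(i);
            if (!sendMessageBack.test(msg)) {
                msgBackFailed.add(msg);
            }
        }
        batch.removeAll(msgBackFailed);
        return msgBackFailed;
    }
}
```

Only the messages left in the batch go on to the offset update in the next step. The returned list corresponds to what submitConsumeRequestLater receives.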

long offset = consumeRequest.getProcessQueue().removeMessage(consumeRequest.getMsgs());

if (offset >= 0 && !consumeRequest.getProcessQueue().isDropped()) {

this.defaultMQPushConsumerImpl.getOffsetStore().updateOffset(consumeRequest.getMessageQueue(), offset, true);

}

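Step 10: the consume progress is updated whether the batch succeeded or failed. The messages in the request are first removed from processQueue, and removeMessage returns an offset. Note that this offset is the smallest key left in processQueue's msgTreeMap. Why? As I understand it, there is little choice: progress is advanced by offset, but messages are consumed by a thread pool, so a message with a larger offset may finish before one with a smaller offset. Progress can therefore only advance to the smallest offset that is still unconsumed, which means some messages may be consumed again after the consumer restarts.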
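A small model makes the "smallest remaining offset" rule concrete. ProcessQueueSketch is a hypothetical stand-in for ProcessQueue. The assumption that an emptied map yields the largest seen offset plus one is part of this sketch, not something taken from the text above.

```java
import java.util.List;
import java.util.TreeMap;

final class ProcessQueueSketch {
    // offset -> message body, ordered by offset like msgTreeMap
    private final TreeMap<Long, String> msgTreeMap = new TreeMap<>();
    private long queueOffsetMax = -1L;

    void put(long offset, String body) {
        msgTreeMap.put(offset, body);
        queueOffsetMax = Math.max(queueOffsetMax, offset);
    }

    /** Removes consumed offsets and returns the offset to commit, or -1 if nothing is tracked. */
    long removeMessage(List<Long> consumedOffsets) {
        if (msgTreeMap.isEmpty()) {
            return -1L;
        }
        long result = queueOffsetMax + 1; // assumption: everything consumed -> next offset
        for (Long offset : consumedOffsets) {
            msgTreeMap.remove(offset);
        }
        if (!msgTreeMap.isEmpty()) {
            result = msgTreeMap.firstKey(); // smallest offset still being processed
        }
        return result;
    }
}
```

Suppose offsets 100 to 104 are in flight and 103 and 104 finish first. The committed offset stays at 100. If the consumer restarts at that point, 103 and 104 are pulled and consumed again, which is why listeners should be idempotent.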

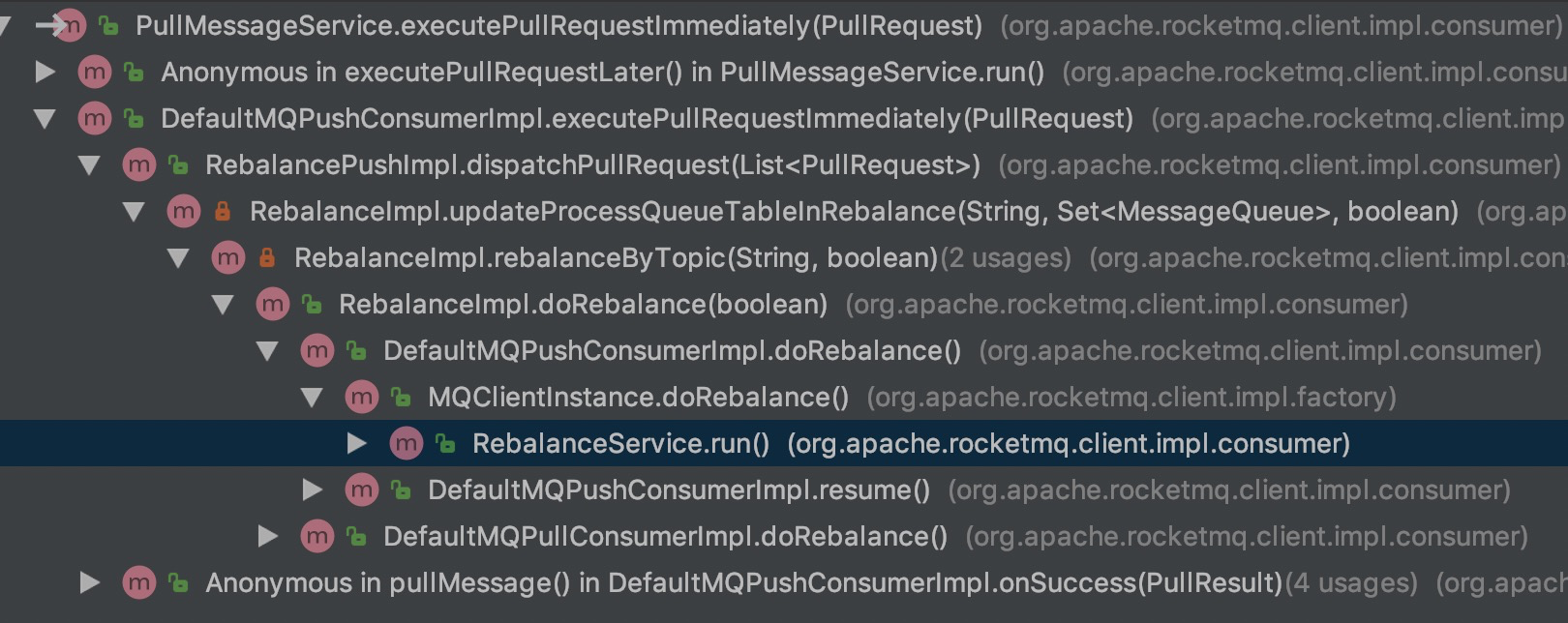

Consume queue load balancing

As seen earlier, when pullMessageService starts it takes pull tasks from pullRequestQueue. On first startup, however, pullRequestQueue is empty, so where are the pullRequest objects created and put into pullRequestQueue?

The call chain shows that this is triggered when RebalanceService starts. RebalanceService is a field of MQClientInstance and starts together with it. Starting from its run method:

public void run() {

while (!this.isStopped()) {

this.waitForRunning(waitInterval);

this.mqClientFactory.doRebalance();

}

}

A rebalance runs every 20s.

public void doRebalance() {

for (Map.Entry<String, MQConsumerInner> entry : this.consumerTable.entrySet()) {

MQConsumerInner impl = entry.getValue();

if (impl != null) {

try {

impl.doRebalance();

For each MQConsumerInner, its doRebalance method is called:

public void doRebalance(final boolean isOrder) {

Map<String, SubscriptionData> subTable = this.getSubscriptionInner();

if (subTable != null) {

for (final Map.Entry<String, SubscriptionData> entry : subTable.entrySet()) {

final String topic = entry.getKey();

try {

this.rebalanceByTopic(topic, isOrder);

} catch (Throwable e) {

if (!topic.startsWith(MixAll.RETRY_GROUP_TOPIC_PREFIX)) {

log.warn("rebalanceByTopic Exception", e);

}

}

}

}

this.truncateMessageQueueNotMyTopic();

}

Every subscribed topic is iterated, and the message queues under each topic are rebalanced. Now look at rebalanceByTopic, analyzed here for cluster mode:

// Get all message queues of this topic from topicSubscribeInfoTable

Set<MessageQueue> mqSet = this.topicSubscribeInfoTable.get(topic);

Step 1: get all MessageQueue instances of the topic. A MessageQueue records topic, brokerName and queueId.

List<String> cidAll = this.mQClientFactory.findConsumerIdList(topic, consumerGroup);

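Step 2: get the ids of all consumers in the current consumerGroup from a broker. Which broker? A consumer sends heartbeats to every broker when it starts, so each broker knows all consumers in the group and any broker serving the topic can answer. If either cidAll or mqSet is empty, this round of rebalancing is aborted.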

// Sort the topic's message queues

Collections.sort(mqAll);

// Sort the consumer ids

Collections.sort(cidAll);

// Work out which message queues the current consumer id (mqClient.clientId) should pull from

AllocateMessageQueueStrategy strategy = this.allocateMessageQueueStrategy;

List<MessageQueue> allocateResult = null;

try {

allocateResult = strategy.allocate(

this.consumerGroup,

this.mQClientFactory.getClientId(),

mqAll,

cidAll);

} catch (Throwable e) {

log.error("AllocateMessageQueueStrategy.allocate Exception. allocateMessageQueueStrategyName={}", strategy.getName(),

e);

return;

}

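Step 3: sort mqAll (the queues from mqSet, copied into a list) and cidAll, so that every consumer in the group sees the same order before allocating. The strategy then assigns message queues to the current clientId. AllocateMessageQueueStrategy is the allocation algorithm interface. The averaging strategy (AllocateMessageQueueAveragely) and the circular averaging strategy (AllocateMessageQueueAveragelyByCircle) are the recommended choices.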
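To show why both lists are sorted first, here is a minimal sketch of an averaging allocation. It is not the code of AllocateMessageQueueAveragely: AverageAllocateSketch is a hypothetical class, queues are reduced to integer ids, and the arithmetic is one straightforward way to hand out contiguous blocks.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class AverageAllocateSketch {
    /** Returns the queue ids assigned to currentCid (contiguous blocks, extras go to the first consumers). */
    static List<Integer> allocate(String currentCid, List<Integer> queueIds, List<String> consumerIds) {
        // Every consumer runs this locally; sorting gives all of them the same view.
        List<Integer> mqAll = new ArrayList<>(queueIds);
        List<String> cidAll = new ArrayList<>(consumerIds);
        Collections.sort(mqAll);
        Collections.sort(cidAll);

        List<Integer> result = new ArrayList<>();
        int index = cidAll.indexOf(currentCid);
        if (index < 0 || mqAll.isEmpty()) {
            return result;
        }
        int base = mqAll.size() / cidAll.size();   // queues every consumer gets
        int extra = mqAll.size() % cidAll.size();  // first 'extra' consumers get one more
        int size = base + (index < extra ? 1 : 0);
        int start = index * base + Math.min(index, extra);
        for (int i = 0; i < size; i++) {
            result.add(mqAll.get(start + i));
        }
        return result;
    }
}
```

With 8 queues and consumers c0, c1, c2, the blocks are [0, 1, 2], [3, 4, 5] and [6, 7]. Because each consumer computes only its own block, the blocks are disjoint only if all consumers start from identically ordered lists.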

boolean changed = this.updateProcessQueueTableInRebalance(topic, allocateResultSet, isOrder);

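This updates the process queues for the topic and reports whether the set of assigned message queues changed compared with before the rebalance. There are two ways it can change:

- First, a MessageQueue that was assigned before is no longer assigned. Its consume progress must be persisted, and the dropped flag of the corresponding processQueue is set to true to stop consuming it.

- Second, a MessageQueue that was not assigned before is newly assigned. A new pullRequest must be created for it and handed to pullMessageService so that pulling starts.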
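The two cases above amount to a set difference in each direction. A minimal sketch, assuming queues are identified by plain strings and using the hypothetical class RebalanceDiffSketch:

```java
import java.util.HashSet;
import java.util.Set;

final class RebalanceDiffSketch {
    /** Queues held before but not allocated now: persist progress, mark dropped. */
    static Set<String> removed(Set<String> current, Set<String> allocated) {
        Set<String> result = new HashSet<>(current);
        result.removeAll(allocated);
        return result;
    }

    /** Queues allocated now but not held before: create a pull request for each. */
    static Set<String> added(Set<String> current, Set<String> allocated) {
        Set<String> result = new HashSet<>(allocated);
        result.removeAll(current);
        return result;
    }

    /** The assignment changed if either difference is non-empty. */
    static boolean changed(Set<String> current, Set<String> allocated) {
        return !removed(current, allocated).isEmpty() || !added(current, allocated).isEmpty();
    }
}
```

For example, going from {q0, q1} to {q1, q2} drops q0 and adds q2, while q1 keeps its existing process queue and continues to be consumed without interruption.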

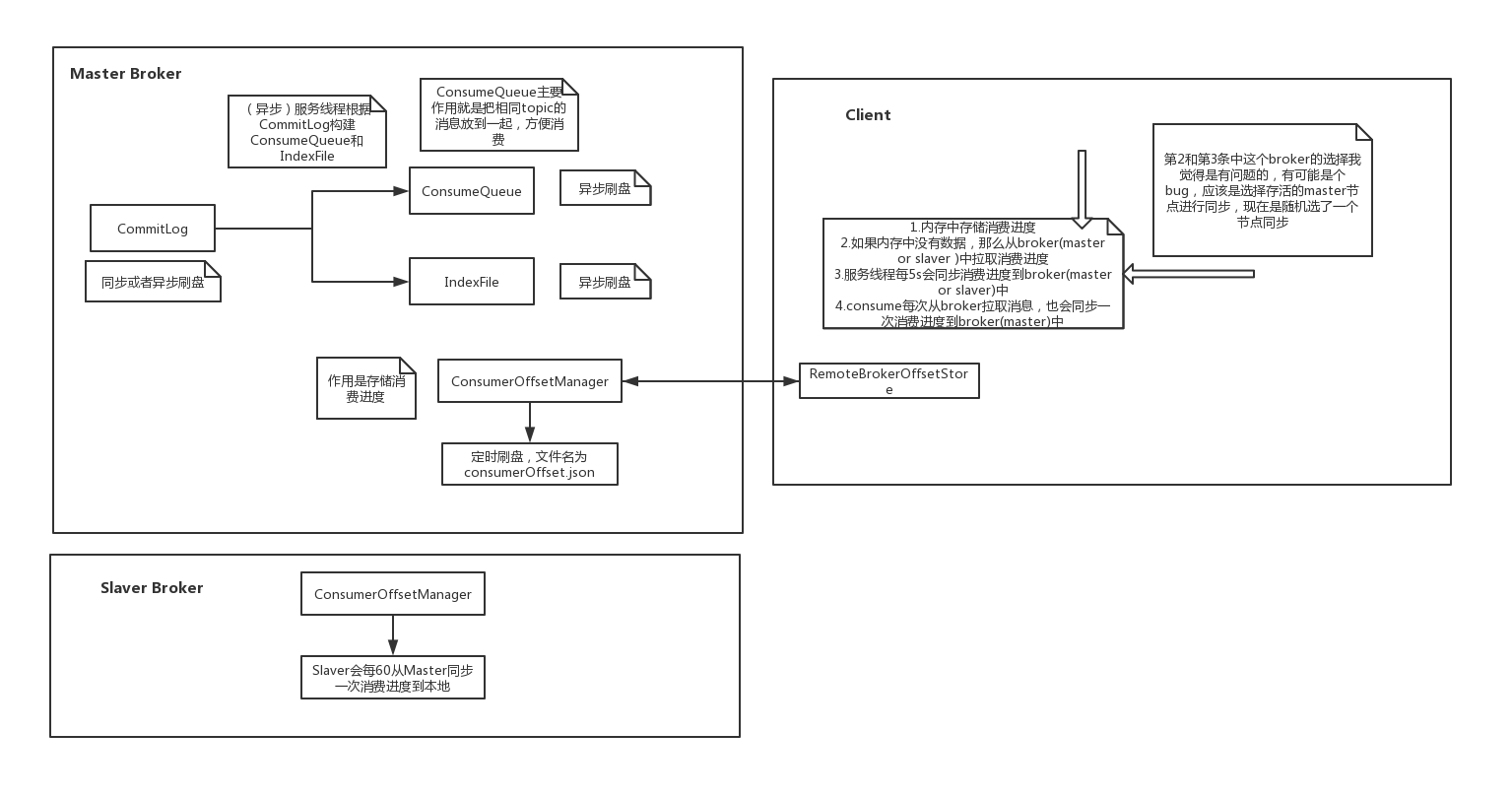

Consume progress management

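In cluster mode the consume progress has to be stored on the broker, so that every consumer can still read it after message queues are rebalanced. The interface for storing consume progress is OffsetStore, and DefaultMQPushConsumerImpl holds an instance of it: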

public interface OffsetStore {

void load() throws MQClientException;

void updateOffset(final MessageQueue mq, final long offset, final boolean increaseOnly);

long readOffset(final MessageQueue mq, final ReadOffsetType type);

void persistAll(final Set<MessageQueue> mqs);

void persist(final MessageQueue mq);

void removeOffset(MessageQueue mq);

Map<MessageQueue, Long> cloneOffsetTable(String topic);

void updateConsumeOffsetToBroker(MessageQueue mq, long offset, boolean isOneway);

}

OffsetStore provides methods to read, update, persist and remove consume progress. In cluster mode the implementation is RemoteBrokerOffsetStore. First, the fields of RemoteBrokerOffsetStore:

// MQ client instance, shared by the consumers and producers of the same client

private final MQClientInstance mQClientFactory;

// Consumer group

private final String groupName;

// Consume progress, kept in memory

private ConcurrentMap<MessageQueue, AtomicLong> offsetTable =

new ConcurrentHashMap<MessageQueue, AtomicLong>();

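There are three main fields, and offsetTable keeps the consume progress in memory. But wasn't the progress supposed to be stored on the broker? Why is it in memory too? Let's follow how progress gets stored. After messages are consumed, updateOffset is called. Here is what it does: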

// Update the consume progress

@Override

public void updateOffset(MessageQueue mq, long offset, boolean increaseOnly) {

if (mq != null) {

AtomicLong offsetOld = this.offsetTable.get(mq);

// No offset is stored for this mq yet: put the given offset into the in-memory map

if (null == offsetOld) {

offsetOld = this.offsetTable.putIfAbsent(mq, new AtomicLong(offset));

}

// offsetOld is non-null when an entry already existed, or when another thread inserted one first (the same queue is being consumed concurrently)

if (null != offsetOld) {

// Update according to increaseOnly; offsetOld is a local reference to the AtomicLong held in offsetTable, so the table sees the change

if (increaseOnly) {

MixAll.compareAndIncreaseOnly(offsetOld, offset);

} else {

offsetOld.set(offset);

}

}

}

}

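This step only stores the offset in offsetTable. So when does the offset reach the broker? MQClientInstance runs a scheduled task that persists consume progress every 5s by calling persistAll, which in the end calls updateConsumeOffsetToBroker to save the progress of every queue to the broker. On the broker side, the logic that saves consume progress looks like this: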
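Before moving to the broker code, the increase-only update used by updateOffset can be sketched with a compare-and-set loop. This is an illustration under stated assumptions, not the body of MixAll.compareAndIncreaseOnly: OffsetTableSketch is a hypothetical class, queues are keyed by string, and readOffset returning -1 for an unknown queue is a choice made for this sketch.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.atomic.AtomicLong;

final class OffsetTableSketch {
    private final ConcurrentMap<String, AtomicLong> offsetTable = new ConcurrentHashMap<>();

    /** Raises target to value if value is larger; never lowers it. */
    static boolean compareAndIncreaseOnly(AtomicLong target, long value) {
        long prev = target.get();
        while (value > prev) {
            if (target.compareAndSet(prev, value)) {
                return true;
            }
            prev = target.get(); // another thread won the race; re-check against its value
        }
        return false;
    }

    void updateOffset(String mq, long offset, boolean increaseOnly) {
        AtomicLong old = offsetTable.putIfAbsent(mq, new AtomicLong(offset));
        if (old != null) {
            if (increaseOnly) {
                compareAndIncreaseOnly(old, offset);
            } else {
                old.set(offset);
            }
        }
    }

    long readOffset(String mq) {
        AtomicLong value = offsetTable.get(mq);
        return value == null ? -1L : value.get();
    }
}
```

With increaseOnly set to true, a late update carrying an older offset from a slow consume thread cannot move the stored progress backwards.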

private RemotingCommand updateConsumerOffset(ChannelHandlerContext ctx, RemotingCommand request)

throws RemotingCommandException {

···

this.brokerController.getConsumerOffsetManager().commitOffset(RemotingHelper.parseChannelRemoteAddr(ctx.channel()), requestHeader.getConsumerGroup(),

requestHeader.getTopic(), requestHeader.getQueueId(), requestHeader.getCommitOffset());

···

}

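ConsumerOffsetManager manages offsets on the broker side, and the progress ends up in its offsetTable. So on the broker the progress is again held in memory, which means the broker must have a scheduled task that persists it. In BrokerController we find: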

this.scheduledExecutorService.scheduleAtFixedRate(new Runnable() {

@Override

public void run() {

try {

BrokerController.this.consumerOffsetManager.persist();

} catch (Throwable e) {

log.error("schedule persist consumerOffset error.", e);

}

}

}, 1000 * 10, this.brokerConfig.getFlushConsumerOffsetInterval(), TimeUnit.MILLISECONDS);

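The first persist runs 10s after startup (the 1000 * 10 argument is the initial delay), and it then repeats every flushConsumerOffsetInterval milliseconds, which is 5s by default as far as I know. The progress is written to a file named consumerOffset.json. To summarize how consume progress is saved: after consuming, the consumer updates its in-memory offsetTable; a scheduled task in MQClientInstance reports those offsets to the broker every 5s; the broker keeps them in the in-memory offsetTable of ConsumerOffsetManager; and a scheduled task on the broker periodically persists them to consumerOffset.json.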

References:

- 《RocketMQ技术内幕:RocketMQ架构设计与实现》

- RocketMQ official documentation

- RocketMQ community talks