Cloud Native Infrastructure, Chapter 1 (1)

What Is Cloud Native Infrastructure?

Infrastructure is all the software and hardware that support applications. This includes data centers, operating systems, deployment pipelines, configuration management, and any system or software needed to support the life cycle of applications.

A great deal of time and money has been spent on infrastructure. Through years of evolving the technology and refining practices, some companies have been able to run infrastructure and applications at massive scale and with renowned agility. Running infrastructure efficiently accelerates business by enabling faster iteration and shorter times to market.

Cloud native infrastructure is a requirement to effectively run cloud native applications. Without the right design and practices to manage infrastructure, even the best cloud native application can go to waste. Immense scale is not a prerequisite to follow the practices laid out in this book, but if you want to reap the rewards of the cloud, you should heed the experience of those who have pioneered these patterns.

Before we explore how to build infrastructure designed to run applications in the cloud, we need to understand how we got where we are. First, we’ll discuss the benefits of adopting cloud native practices. Next, we’ll look at a brief history of infrastructure and then discuss features of the next stage, called “cloud native,” and how it relates to your applications, the platform where they run, and your business.

Once you understand the problem, we’ll show you the solution and how to implement it.

Cloud Native Benefits

The benefits of adopting the patterns in this book are numerous. They are modeled after successful companies such as Google, Netflix, and Amazon. The patterns alone did not guarantee their success, but they provided the scalability and agility these companies needed to succeed.

By choosing to run your infrastructure in a public cloud, you are able to produce value faster and focus on your business objectives. Building only what you need to create your product, and consuming services from other providers, keeps your lead time small and agility high. Some people may be hesitant because of “vendor lock-in,” but the worst kind of lock-in is the one you build yourself. See Appendix B for more information about different types of lock-in and what you should do about them.

Consuming services also lets you build a customized platform with the services you need (sometimes called Services as a Platform [SaaP]). When you use cloud-hosted services, you do not need expertise in operating every service your applications require. This dramatically improves your ability to change and adds value to your business.

When you are unable to consume services, you should build applications to manage infrastructure. When you do so, the bottleneck for scale no longer depends on how many servers can be managed per operations engineer. Instead, you can approach scaling your infrastructure the same way as scaling your applications. In other words, if you are able to run applications that can scale, you can scale your infrastructure with applications.
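One way to picture an application that manages infrastructure is a function that compares the number of servers you want with the servers you have and works out the difference. The following is a minimal sketch under stated assumptions: the name `plan_changes` and the server names are hypothetical, and the function only computes a plan, whereas a real tool would go on to call a provider's API.

```python
def plan_changes(desired: int, running: list[str]) -> dict:
    """Return the actions needed to move from the running servers to the desired count.

    Hypothetical helper for illustration; it only computes a plan and does
    not talk to any real infrastructure.
    """
    if desired < 0:
        raise ValueError("desired must be non-negative")
    surplus = len(running) - desired
    if surplus > 0:
        # Too many servers: mark the last ones in the list for deletion.
        return {"create": 0, "delete": running[-surplus:]}
    # Too few (or exactly enough) servers: create the shortfall.
    return {"create": -surplus, "delete": []}


if __name__ == "__main__":
    print(plan_changes(3, ["web-1"]))
    print(plan_changes(1, ["web-1", "web-2", "web-3"]))
```

Because the plan is computed by software, the same code handles three servers or three thousand, which is the sense in which the bottleneck stops being the number of servers per operations engineer.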

The same benefits apply to making infrastructure that is resilient and easy to debug. You can gain insight into your infrastructure by using the same tools you use to manage your business applications.

Cloud native practices can also bridge the gap between traditional engineering roles (a common goal of DevOps). Systems engineers will be able to learn best practices from applications, and application engineers can take ownership of the infrastructure where their applications run.

Cloud native infrastructure is not a solution for every problem, and it is your responsibility to know if it is the right solution for your environment (see Chapter 2). However, its success is evident in the companies that created the practices and the many other companies that have adopted the tools that promote these patterns. See Appendix C for one example.

Before we dive into the solution, we need to understand how these patterns evolved from the problems that created them.

Servers

At the beginning of the internet, web infrastructure got its start with physical servers. Servers are big, noisy, and expensive, and they require a lot of power and people to keep them running. They are cared for extensively and kept running as long as possible. Compared to cloud infrastructure, they are also more difficult to purchase and prepare for an application to run on them.

Once you buy one, it’s yours to keep, for better or worse. Servers fit into the well-established capital expenditure cost of business. The longer you can keep a physical server running, the more value you will get from the money spent. It is always important to do proper capacity planning and make sure you get the best return on investment.

Physical servers are great because they’re powerful and can be configured however you want. They have a relatively low failure rate and are engineered to avoid failures with redundant power supplies, fans, and RAID controllers. They also last a long time. Businesses can squeeze extra value out of hardware they purchase through extended warranties and replacement parts.

However, physical servers lead to waste. Not only are the servers never fully utilized, but they also come with a lot of overhead. It’s difficult to run multiple applications on the same server. Software conflicts, network routing, and user access all become more complicated when a server is maximally utilized with multiple applications.

Hardware virtualization promised to solve some of these problems.

Virtualization

Virtualization emulates a physical server’s hardware in software. A virtual server can be created on demand, is entirely programmable in software, and never wears out so long as you can emulate the hardware.

Using a hypervisor increases these benefits because you can run multiple virtual machines (VMs) on a physical server. It also allows applications to be portable because you can move a VM from one physical server to another.

One problem with running your own virtualization platform, however, is that VMs still require hardware to run. Companies still need to have all the people and processes required to run physical servers, but now capacity planning becomes harder because they have to account for VM overhead too. At least, that was the case until the public cloud.

Infrastructure as a Service

Infrastructure as a Service (IaaS) is one of the many offerings of a cloud provider. It provides raw networking, storage, and compute that customers can consume as needed. It also includes support services such as identity and access management (IAM), provisioning, and inventory systems.

IaaS allows companies to get rid of all of their hardware and to rent VMs or physical servers from someone else. This frees up a lot of people resources and gets rid of processes that were needed for purchasing, maintenance, and, in some cases, capacity planning.

IaaS fundamentally changed infrastructure’s relationship with businesses. Instead of being a capital expenditure that pays off over time, infrastructure becomes an operational expense of running your business. Businesses can pay for their infrastructure the same way they pay for electricity and people’s time. With billing based on consumption, the sooner you get rid of infrastructure, the smaller your operational costs will be.
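The effect of consumption billing can be illustrated with simple arithmetic. The sketch below uses made-up numbers: the rate of 10 cents per server-hour, the 730-hour month, and the function name `consumption_cost_cents` are all assumptions chosen for illustration, not any provider's actual pricing.

```python
def consumption_cost_cents(rate_cents_per_hour: int, hours_used: int) -> int:
    """Cost under consumption billing: you pay only for the hours a server exists."""
    return rate_cents_per_hour * hours_used


RATE = 10          # hypothetical price: 10 cents per server-hour
FULL_MONTH = 730   # approximate number of hours in a month
SHORT_JOB = 200    # the server is deleted after 200 hours

full = consumption_cost_cents(RATE, FULL_MONTH)
short = consumption_cost_cents(RATE, SHORT_JOB)
print(full, short, full - short)  # 7300 2000 5300
```

Under these assumed numbers, deleting the server after 200 hours saves 5,300 cents for the month. A purchased server offers no such saving, because its price is paid whether or not it is in use.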

Hosted infrastructure providers also exposed consumable HTTP application programming interfaces (APIs) that let customers create and manage infrastructure on demand. Instead of raising a purchase order and waiting for physical items to ship, engineers can make an API call, and a server will be created. The server can be deleted and discarded just as easily.
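To make "an API call" concrete, here is a minimal sketch of what such requests might look like. The endpoint `https://cloud.example.com/v1`, the `name` and `size` fields, and both function names are hypothetical; every real provider defines its own URLs, fields, and authentication. The code only builds the requests and does not send them.

```python
import json
import urllib.request

# Hypothetical endpoint; real providers each define their own URLs and fields.
API_BASE = "https://cloud.example.com/v1"


def create_server_request(name: str, size: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for a new server."""
    body = json.dumps({"name": name, "size": size}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/servers",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def delete_server_request(server_id: str) -> urllib.request.Request:
    """Build (but do not send) a DELETE request that discards a server."""
    return urllib.request.Request(f"{API_BASE}/servers/{server_id}", method="DELETE")


req = create_server_request("web-1", "small")
print(req.get_method(), req.full_url)
# Sending it would be a single call: urllib.request.urlopen(req)
```

Creating and deleting a server are symmetrical, one request each, which is what makes it practical to treat servers as disposable.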

Running your infrastructure in a cloud does not make your infrastructure cloud native. IaaS still requires infrastructure management. Outside of purchasing and managing physical resources, you can (and many companies do) treat IaaS identically to the traditional infrastructure they used to buy and rack in their own data centers.

Even without “racking and stacking,” there are still plenty of operating systems, monitoring software, and support tools to manage. Automation tools have helped reduce the time it takes to get an application running, but ingrained processes can often get in the way of reaping the full benefit of IaaS.

Platform as a Service

Just as IaaS hides physical servers from VM consumers, platform as a service (PaaS) hides operating systems from applications. Developers write application code and define the application dependencies, and it is the platform’s responsibility to create the necessary infrastructure to run, manage, and expose it. Unlike IaaS, which still requires infrastructure management, in a PaaS the infrastructure is managed by the platform provider.

It turns out that PaaS limitations required developers to write their applications differently so that they could be managed effectively by the platform. Applications had to include features that allowed them to be managed by the platform without access to the underlying operating system. Engineers could no longer rely on SSHing to a server and reading log files on disk. The application’s life cycle and management were now controlled by the PaaS, and engineers and applications needed to adapt.
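One common adaptation is for an application to write its logs to standard output and leave collection to the platform, instead of writing files that someone must log in to read. The following is a minimal sketch of that idea using Python's standard `logging` module; the helper name `make_logger`, the logger name `orders`, and the message format are assumptions for illustration.

```python
import logging
import sys


def make_logger(name: str, stream=sys.stdout) -> logging.Logger:
    """Return a logger that writes to a stream instead of a file on disk."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers if called twice
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(name)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False  # keep output on this handler only
    return logger


log = make_logger("orders")
log.info("order accepted")
```

The application no longer needs to know where its logs end up, so the platform can move or replace it without anyone needing access to the underlying server.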

With these limitations came great benefits. Application development cycles were reduced because engineers did not need to spend time managing infrastructure. Applications that embraced running on a platform were the beginning of what we now call “cloud native applications.” They exploited the platform limitations in their code and in many cases changed how applications are written today.

12-Factor Applications

Heroku was one of the early pioneers to offer a publicly consumable PaaS. Through many years of expanding its own platform, the company was able to identify patterns that helped applications run better in its environment. Heroku defines 12 main factors that a developer should try to implement.

The 12 factors are about making developers efficient by separating code logic from data; automating as much as possible; having distinct build, ship, and run stages; and declaring all the application’s dependencies.
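As one illustration of separating code from data, an application can read its settings from environment variables rather than hardcoding them. This is a minimal sketch, not a complete treatment of the 12 factors: the `Config` class, the `load_config` helper, and the variable names `DATABASE_URL` and `PORT` are assumptions chosen for the example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    database_url: str
    port: int


def load_config(env=os.environ) -> Config:
    """Read settings from environment variables rather than hardcoding them."""
    return Config(
        database_url=env["DATABASE_URL"],   # required; raises KeyError if missing
        port=int(env.get("PORT", "8080")),  # optional, with a default
    )


# A plain dict stands in for the real environment in this example.
cfg = load_config({"DATABASE_URL": "postgres://db.example.com/app", "PORT": "5000"})
print(cfg.port)  # 5000
```

The same code can then run unchanged in development, staging, and production, with only the environment differing between them.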

If you consume all your infrastructure through a PaaS provider, congratulations, you already have many of the benefits of cloud native infrastructure. This includes platforms such as Google App Engine, AWS Lambda, and Azure Cloud Services. Any successful cloud native infrastructure will expose a self-service platform to application engineers to deploy and manage their code.

However, many PaaS platforms are not enough for everything a business needs. They often limit language runtimes, libraries, and features to meet their promise of abstracting away the infrastructure from the application. Public PaaS providers will also limit which services can integrate with the applications and where those applications can run.

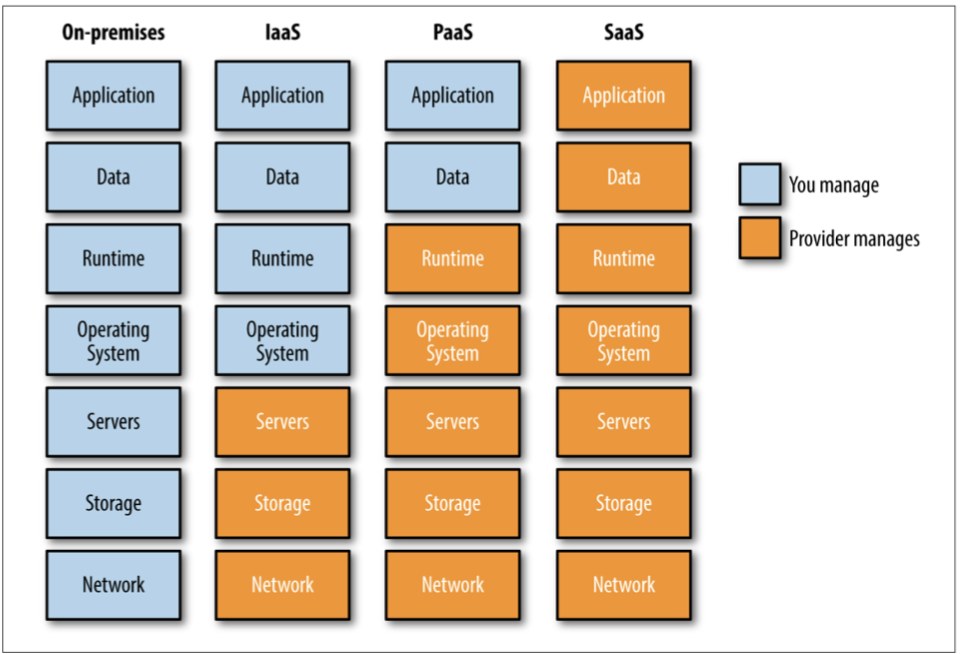

Public platforms trade application flexibility to make infrastructure somebody else’s problem. Figure 1-1 is a visual representation of the components you will need to manage if you run your own data center, create infrastructure in an IaaS, run your applications on a PaaS, or consume applications through software as a service (SaaS).

The fewer infrastructure components you are required to run, the better; but running all your applications in a public PaaS provider may not be an option.