Installing and Deploying Kubernetes, Episode 2 (Part 2)

Chapter 4: The Kubernetes service-exposure plugin, Traefik

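- K8S DNS lets services be discovered automatically "inside" the cluster. How, then, can a service be used and accessed from "outside" the K8S cluster?

- Use a NodePort-type Service

- Note: in this setup kube-proxy's ipvs mode cannot be used; only the iptables mode works

- Use an Ingress resource

- Note: an Ingress can only route and expose layer-7 applications, specifically the HTTP and HTTPS protocols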

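- Ingress is one of the standard resource types of the K8S API and also a core resource. It is essentially a set of rules, based on domain names and URL paths, that forward user requests to specified Service resources

- It can forward request traffic from outside the cluster to the inside, which is how "service exposure" is achieved

- An Ingress controller is a plugin that listens on a socket on behalf of Ingress resources and routes traffic according to the Ingress rule-matching mechanism

- Put plainly, there is nothing mysterious about Ingress: a controller such as Ingress-nginx is just nginx plus some Go code

- Commonly used Ingress controller implementations

- Ingress-nginx

- HAProxy

- Traefik

- …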

1. Exposing a service with a NodePort-type Service

Note: exposing a service this way requires kube-proxy's proxy mode to be iptables

1. Change proxy-mode to iptables, with rr (round-robin) as the scheduler

[root@shkf6-243 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.6.0.0/16 \
  --hostname-override shkf6-243.host.com \
  --proxy-mode=iptables \
  --ipvs-scheduler=rr \
  --kubeconfig ./conf/kube-proxy.kubeconfig

[root@shkf6-244 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.6.0.0/16 \
  --hostname-override shkf6-243.host.com \
  --proxy-mode=iptables \
  --ipvs-scheduler=rr \
  --kubeconfig ./conf/kube-proxy.kubeconfig

Note: the listing from shkf6-244 shows --hostname-override shkf6-243.host.com; this flag normally carries the node's own name (shkf6-244.host.com on that host).

2. Make iptables mode take effect

[root@shkf6-243 ~]# supervisorctl restart kube-proxy-6-243
kube-proxy-6-243: stopped
kube-proxy-6-243: started
[root@shkf6-243 ~]# ps -ef|grep kube-proxy
root 26694 12008 0 10:25 ? 00:00:00 /bin/sh /opt/kubernetes/server/bin/kube-proxy.sh
root 26695 26694 0 10:25 ? 00:00:00 ./kube-proxy --cluster-cidr 172.6.0.0/16 --hostname-override shkf6-243.host.com --proxy-mode=iptables --ipvs-scheduler=rr --kubeconfig ./conf/kube-proxy.kubeconfig
root 26905 13466 0 10:26 pts/0 00:00:00 grep --color=auto kube-proxy

[root@shkf6-244 ~]# supervisorctl restart kube-proxy-6-244
kube-proxy-6-244: stopped
kube-proxy-6-244: started
[root@shkf6-244 ~]# ps -ef|grep kube-proxy
root 25998 11030 0 10:22 ? 00:00:00 /bin/sh /opt/kubernetes/server/bin/kube-proxy.sh
root 25999 25998 0 10:22 ? 00:00:00 ./kube-proxy --cluster-cidr 172.6.0.0/16 --hostname-override shkf6-243.host.com --proxy-mode=iptables --ipvs-scheduler=rr --kubeconfig ./conf/kube-proxy.kubeconfig

[root@shkf6-243 ~]# tail -fn 11 /data/logs/kubernetes/kube-proxy/proxy.stdout.log
[root@shkf6-244 ~]# tail -fn 11 /data/logs/kubernetes/kube-proxy/proxy.stdout.log

3. Clean up the existing ipvs rules

[root@shkf6-243 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 nq
  -> 192.168.6.243:6443           Masq    1      0          0
  -> 192.168.6.244:6443           Masq    1      0          0
TCP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
TCP  10.96.0.2:9153 nq
  -> 172.6.244.3:9153             Masq    1      0          0
TCP  10.96.3.154:80 nq
  -> 172.6.243.3:80               Masq    1      0          0
UDP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
[root@shkf6-243 ~]# ipvsadm -D -t 10.96.0.1:443
[root@shkf6-243 ~]# ipvsadm -D -t 10.96.0.2:53
[root@shkf6-243 ~]# ipvsadm -D -t 10.96.0.2:9153
[root@shkf6-243 ~]# ipvsadm -D -t 10.96.3.154:80
[root@shkf6-243 ~]# ipvsadm -D -u 10.96.0.2:53
[root@shkf6-243 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn

[root@shkf6-244 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 nq
  -> 192.168.6.243:6443           Masq    1      0          0
  -> 192.168.6.244:6443           Masq    1      1          0
TCP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
TCP  10.96.0.2:9153 nq
  -> 172.6.244.3:9153             Masq    1      0          0
TCP  10.96.3.154:80 nq
  -> 172.6.243.3:80               Masq    1      0          0
UDP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
[root@shkf6-244 ~]# ipvsadm -D -t 10.96.0.1:443
[root@shkf6-244 ~]# ipvsadm -D -t 10.96.0.2:53
[root@shkf6-244 ~]# ipvsadm -D -t 10.96.0.2:9153
[root@shkf6-244 ~]# ipvsadm -D -t 10.96.3.154:80
[root@shkf6-244 ~]# ipvsadm -D -u 10.96.0.2:53
[root@shkf6-244 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
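The per-service deletes above can also be derived from the ipvsadm -Ln listing itself. The following is a minimal sketch, not part of ipvsadm: `ipvs_delete_cmds` is a hypothetical helper name, and it assumes the listing format shown above (virtual services start with TCP or UDP, real servers with "->").

```shell
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print one
# `ipvsadm -D` command per virtual service. Header lines and real-server
# lines (starting with "->") do not match either pattern and are skipped.
ipvs_delete_cmds() {
  awk '
    $1 == "TCP" { print "ipvsadm -D -t " $2 }
    $1 == "UDP" { print "ipvsadm -D -u " $2 }
  '
}
```

On a node this could be used as `ipvsadm -Ln | ipvs_delete_cmds | sh`. When every rule is to be removed anyway, `ipvsadm -C` clears the whole virtual server table in one step.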

1. Modify the Service manifest for nginx-ds

[root@shkf6-243 ~]# cat /root/nginx-ds-svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-ds
  name: nginx-ds
  namespace: default
spec:
  ports:
  - port: 80
    protocol: TCP
    nodePort: 8000
  selector:
    app: nginx-ds
  sessionAffinity: None
  type: NodePort

[root@shkf6-243 ~]# kubectl apply -f nginx-ds-svc.yaml
service/nginx-ds created

2. Recreate the nginx-ds DaemonSet

[root@shkf6-243 ~]# cat nginx-ds.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: nginx-ds
spec:
  template:
    metadata:
      labels:
        app: nginx-ds
    spec:
      containers:
      - name: my-nginx
        image: harbor.od.com/public/nginx:curl
        command: ["nginx","-g","daemon off;"]
        ports:
        - containerPort: 80
[root@shkf6-243 ~]# kubectl apply -f nginx-ds.yaml
daemonset.extensions/nginx-ds created

3. Inspect the Service

[root@shkf6-243 ~]# kubectl get svc nginx-ds
NAME       TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)       AGE
nginx-ds   NodePort   10.96.1.170   <none>        80:8000/TCP   2m20s
[root@shkf6-243 ~]# netstat -lntup|grep 8000
tcp6       0      0 :::8000       :::*        LISTEN      26695/./kube-proxy
[root@shkf6-244 ~]# netstat -lntup|grep 8000
tcp6       0      0 :::8000       :::*        LISTEN      25999/./kube-proxy

What a NodePort really is: a set of iptables rules

[root@shkf6-244 ~]# iptables-save | grep 8000
-A KUBE-FIREWALL -m comment --comment "kubernetes firewall for dropping marked packets" -m mark --mark 0x8000/0x8000 -j DROP
-A KUBE-MARK-DROP -j MARK --set-xmark 0x8000/0x8000
-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-ds:" -m tcp --dport 8000 -j KUBE-MARK-MASQ
-A KUBE-NODEPORTS -p tcp -m comment --comment "default/nginx-ds:" -m tcp --dport 8000 -j KUBE-SVC-T4RQBNWQFKKBCRET
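A plain grep 8000 also matches the unrelated 0x8000 packet-mark rules. As a rough sketch, the filter can be narrowed to the KUBE-NODEPORTS chain and the exact destination port; `nodeport_rules` is a hypothetical helper name, and the rule layout is assumed to match the iptables-save output above.

```shell
# Hypothetical helper: read `iptables-save` output on stdin and keep only the
# KUBE-NODEPORTS rules whose destination port is exactly $1. The trailing
# space in the pattern keeps port 8000 from also matching 80000.
nodeport_rules() {
  grep -E -- "^-A KUBE-NODEPORTS .*--dport $1 "
}
```

For the Service above, `iptables-save | nodeport_rules 8000` would be expected to print only the two KUBE-NODEPORTS lines.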

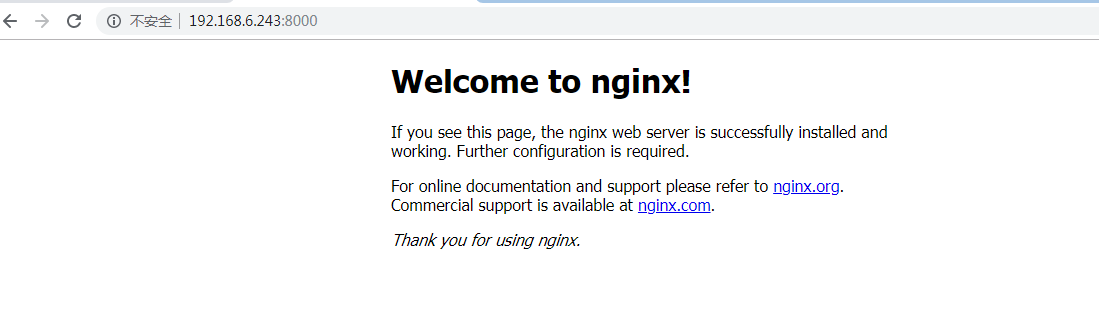

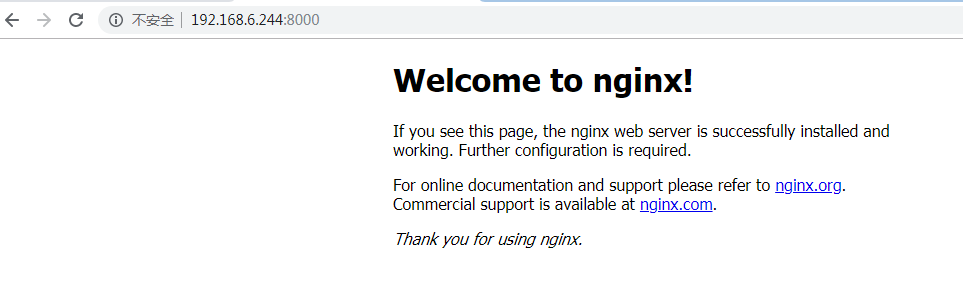

4. Access from a browser

5. Revert to ipvs

Delete the Service and the Pods

[root@shkf6-243 ~]# kubectl delete -f nginx-ds.yaml

daemonset.extensions "nginx-ds" deleted

[root@shkf6-243 ~]# kubectl delete -f nginx-ds-svc.yaml

service "nginx-ds" deleted

[root@shkf6-243 ~]# netstat -lntup|grep 8000

[root@shkf6-243 ~]#

On the compute nodes:

[root@shkf6-243 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.6.0.0/16 \
  --hostname-override shkf6-243.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig
[root@shkf6-243 ~]# supervisorctl restart kube-proxy-6-243
kube-proxy-6-243: stopped
kube-proxy-6-243: started
[root@shkf6-243 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 nq
  -> 192.168.6.243:6443           Masq    1      0          0
  -> 192.168.6.244:6443           Masq    1      0          0
TCP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
TCP  10.96.0.2:9153 nq
  -> 172.6.244.3:9153             Masq    1      0          0
TCP  10.96.3.154:80 nq
  -> 172.6.243.3:80               Masq    1      0          0
UDP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0

[root@shkf6-244 ~]# cat /opt/kubernetes/server/bin/kube-proxy.sh
#!/bin/sh
./kube-proxy \
  --cluster-cidr 172.6.0.0/16 \
  --hostname-override shkf6-243.host.com \
  --proxy-mode=ipvs \
  --ipvs-scheduler=nq \
  --kubeconfig ./conf/kube-proxy.kubeconfig
[root@shkf6-244 ~]# supervisorctl restart kube-proxy-6-244
kube-proxy-6-244: stopped
kube-proxy-6-244: started
[root@shkf6-244 ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 nq
  -> 192.168.6.243:6443           Masq    1      0          0
  -> 192.168.6.244:6443           Masq    1      0          0
TCP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0
TCP  10.96.0.2:9153 nq
  -> 172.6.244.3:9153             Masq    1      0          0
TCP  10.96.3.154:80 nq
  -> 172.6.243.3:80               Masq    1      0          0
UDP  10.96.0.2:53 nq
  -> 172.6.244.3:53               Masq    1      0          0

2. Deploying Traefik (the Ingress controller)

1. Prepare the Traefik image

On the ops host shkf6-245.host.com:

[root@shkf6-245 traefik]# docker pull traefik:v1.7.2-alpine
v1.7.2-alpine: Pulling from library/traefik
4fe2ade4980c: Pull complete
8d9593d002f4: Pull complete
5d09ab10efbd: Pull complete
37b796c58adc: Pull complete
Digest: sha256:cf30141936f73599e1a46355592d08c88d74bd291f05104fe11a8bcce447c044
Status: Downloaded newer image for traefik:v1.7.2-alpine
docker.io/library/traefik:v1.7.2-alpine
[root@shkf6-245 traefik]# docker images|grep traefik
traefik   v1.7.2-alpine   add5fac61ae5   13 months ago   72.4MB
[root@shkf6-245 traefik]# docker tag add5fac61ae5 harbor.od.com/public/traefik:v1.7.2
[root@shkf6-245 traefik]# docker push !$
docker push harbor.od.com/public/traefik:v1.7.2
The push refers to repository [harbor.od.com/public/traefik]
a02beb48577f: Pushed
ca22117205f4: Pushed
3563c211d861: Pushed
df64d3292fd6: Pushed
v1.7.2: digest: sha256:6115155b261707b642341b065cd3fac2b546559ba035d0262650b3b3bbdd10ea size: 1157

2. Prepare the resource manifests

[root@shkf6-245 traefik]# cat /data/k8s-yaml/traefik/rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
- kind: ServiceAccount
  name: traefik-ingress-controller
  namespace: kube-system

[root@shkf6-245 traefik]# cat /data/k8s-yaml/traefik/ds.yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
      - image: harbor.od.com/public/traefik:v1.7.2
        name: traefik-ingress
        ports:
        - name: controller
          containerPort: 80
          hostPort: 81
        - name: admin-web
          containerPort: 8080
        securityContext:
          capabilities:
            drop:
            - ALL
            add:
            - NET_BIND_SERVICE
        args:
        - --api
        - --kubernetes
        - --logLevel=INFO
        - --insecureskipverify=true
        - --kubernetes.endpoint=https://192.168.6.66:7443
        - --accesslog
        - --accesslog.filepath=/var/log/traefik_access.log
        - --traefiklog
        - --traefiklog.filepath=/var/log/traefik.log
        - --metrics.prometheus

[root@shkf6-245 traefik]# cat /data/k8s-yaml/traefik/svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
    - protocol: TCP
      port: 80
      name: controller
    - protocol: TCP
      port: 8080
      name: admin-web

[root@shkf6-245 traefik]# cat /data/k8s-yaml/traefik/ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: traefik.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: traefik-ingress-service
          servicePort: 8080
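Every service exposed through Traefik in this series needs an Ingress of the same shape, differing only in name, host, and backend. The sketch below generates such a manifest; `render_ingress` is a hypothetical helper, and it deliberately reuses the extensions/v1beta1 schema and the kube-system namespace from the manifest above, which is an assumption tied to this cluster's Kubernetes version.

```shell
# Hypothetical helper: print an Ingress manifest in the extensions/v1beta1
# schema used above. Arguments: name, host, backend Service name, Service port.
render_ingress() {
  cat <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: $1
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: $2
    http:
      paths:
      - path: /
        backend:
          serviceName: $3
          servicePort: $4
EOF
}
```

For example, `render_ingress traefik-web-ui traefik.od.com traefik-ingress-service 8080` reproduces the manifest above, and the output could be piped to `kubectl apply -f -`.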

3. Apply them in order

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/rbac.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ds.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/svc.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/traefik/ingress.yaml

3. Resolve the domain name

[root@shkf6-241 ~]# cat /var/named/od.com.zone
$ORIGIN od.com.
$TTL 600 ; 10 minutes
@ IN SOA dns.od.com. dnsadmin.od.com. (
        2019111205 ; serial
        10800      ; refresh (3 hours)
        900        ; retry (15 minutes)
        604800     ; expire (1 week)
        86400      ; minimum (1 day)
        )
        NS dns.od.com.
$TTL 60 ; 1 minute
dns        A    192.168.6.241
harbor     A    192.168.6.245
k8s-yaml   A    192.168.6.245
traefik    A    192.168.6.66
[root@shkf6-241 ~]# named-checkconf
[root@shkf6-241 ~]# systemctl restart named
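Adding a record to the zone goes together with incrementing the SOA serial. A minimal sketch of automating that step follows; `bump_serial` is a hypothetical helper, and it assumes the serial sits on the line tagged "; serial", as in the zone file above.

```shell
# Hypothetical helper: read a zone file on stdin and write it to stdout with
# the first number on the first "; serial" line incremented by one.
bump_serial() {
  awk '
    /; serial/ && !bumped && match($0, /[0-9]+/) {
      n = substr($0, RSTART, RLENGTH) + 1
      $0 = substr($0, 1, RSTART - 1) sprintf("%d", n) substr($0, RSTART + RLENGTH)
      bumped = 1
    }
    { print }
  '
}
```

A possible use is `bump_serial < /var/named/od.com.zone > /tmp/od.com.zone`, followed by reviewing the result before moving it into place and restarting named.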

4. Configure the reverse proxy

[root@shkf6-241 ~]# cat /etc/nginx/conf.d/od.com.conf
upstream default_backend_traefik {
    server 192.168.6.243:81    max_fails=3 fail_timeout=10s;
    server 192.168.6.244:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.od.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
[root@shkf6-241 ~]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@shkf6-241 ~]# nginx -s reload

[root@shkf6-242 ~]# vim /etc/nginx/conf.d/od.com.conf
[root@shkf6-242 ~]# cat /etc/nginx/conf.d/od.com.conf
upstream default_backend_traefik {
    server 192.168.6.243:81    max_fails=3 fail_timeout=10s;
    server 192.168.6.244:81    max_fails=3 fail_timeout=10s;
}
server {
    server_name *.od.com;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}
[root@shkf6-242 ~]# nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
[root@shkf6-242 ~]# nginx -s reload
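Both proxy hosts carry the same upstream block, and it has to list every node that runs the Traefik DaemonSet on host port 81. As an illustrative sketch only, the block can be generated from a node list so the two hosts stay identical; `render_upstream` is a hypothetical helper, and the upstream name and server parameters are copied from the configuration above.

```shell
# Hypothetical helper: print the nginx upstream block used above.
# Arguments: the Traefik host port, followed by one IP address per node.
render_upstream() {
  port="$1"
  shift
  printf 'upstream default_backend_traefik {\n'
  for ip in "$@"; do
    printf '    server %s:%s    max_fails=3 fail_timeout=10s;\n' "$ip" "$port"
  done
  printf '}\n'
}
```

For this cluster the call would be `render_upstream 81 192.168.6.243 192.168.6.244`.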

5. Check

[root@shkf6-244 ~]# kubectl get all -n kube-system
NAME                           READY   STATUS    RESTARTS   AGE
pod/coredns-6b6c4f9648-x5zvz   1/1     Running   0          18h
pod/traefik-ingress-bhhkv      1/1     Running   0          17m
pod/traefik-ingress-mm2ds      1/1     Running   0          17m

NAME                              TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)                  AGE
service/coredns                   ClusterIP   10.96.0.2     <none>        53/UDP,53/TCP,9153/TCP   18h
service/traefik-ingress-service   ClusterIP   10.96.3.175   <none>        80/TCP,8080/TCP          17m

NAME                             DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/traefik-ingress   2         2         2       2            2           <none>          17m

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/coredns   1/1     1            1           18h

NAME                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/coredns-6b6c4f9648   1         1         1       18h

If the pods do not come up, restart docker. The cause is that the NodePort test above changed the firewall rules.

[root@shkf6-243 ~]# systemctl restart docker

[root@shkf6-244 ~]# systemctl restart docker

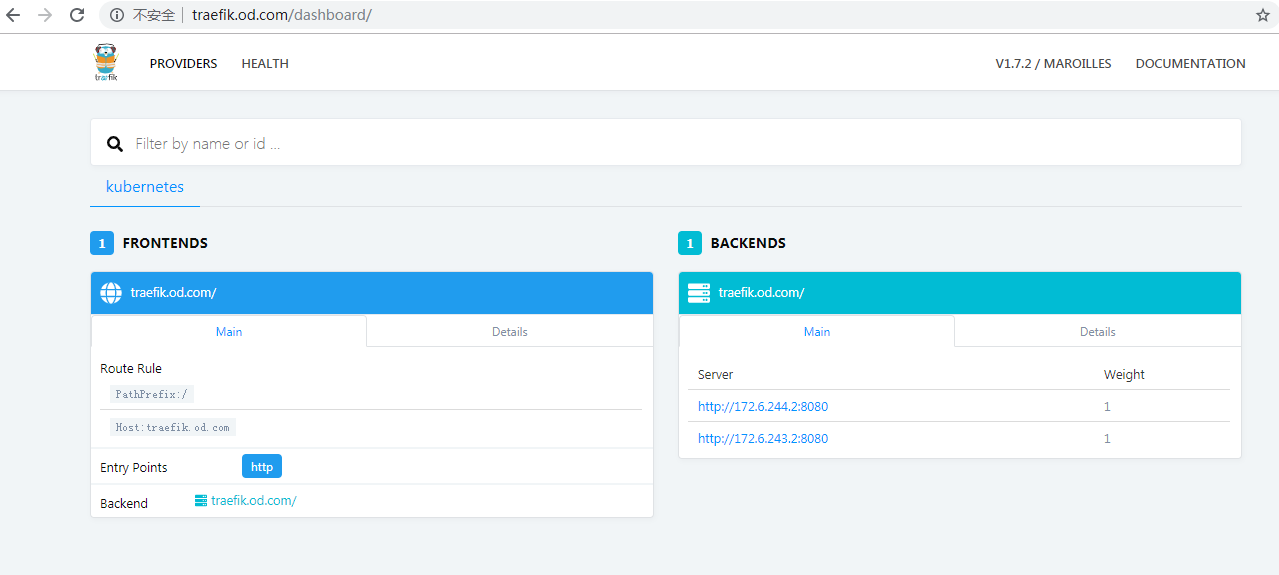

6. Access from a browser

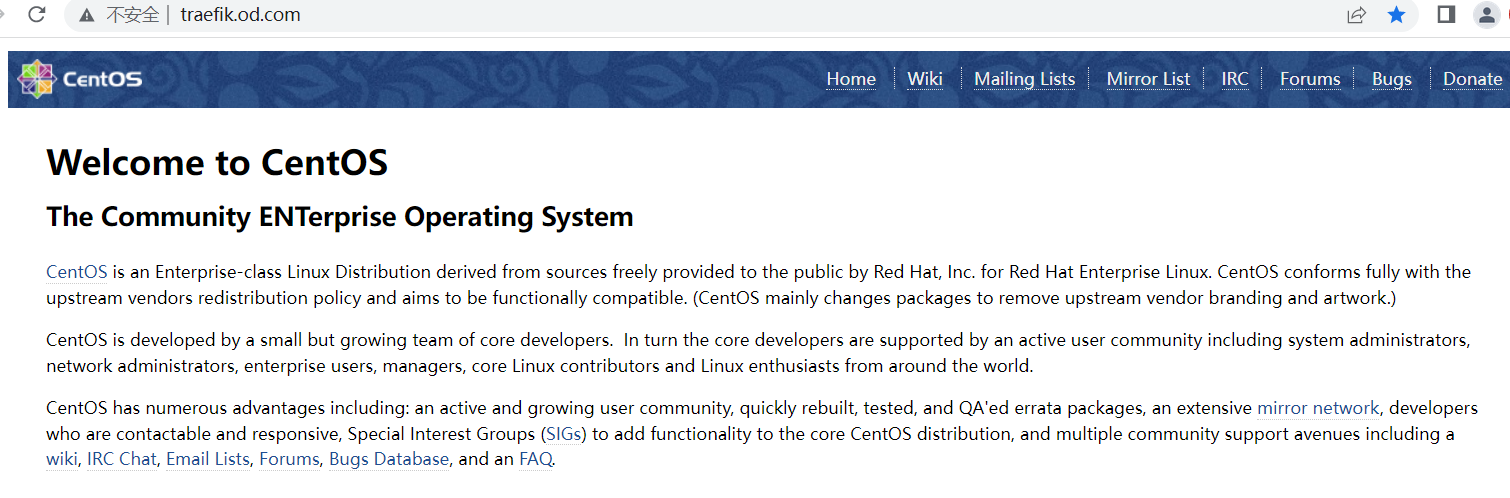

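Note: if the page shows "Welcome to CentOS", as in the figure:

then nginx has not loaded the od.com.conf file in the conf.d directory.

Fix: use include in nginx.conf to load it explicitly.

include can be used in any context, provided the included file is itself syntactically correct. The path may be absolute or relative; a relative path is resolved against the location of nginx.conf, and wildcards are allowed.

# absolute path
include /etc/conf/nginx.conf;
# relative path
include port/89.conf;
# wildcard
include *.conf;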
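Whether a given file is actually loaded can be checked against the configuration dump that `nginx -T` prints, where each file is introduced by a "# configuration file <path>:" line. The helper below is a small sketch under that assumption; `conf_is_loaded` is a hypothetical name.

```shell
# Hypothetical helper: read the output of `nginx -T` on stdin and succeed
# (exit status 0) only if the dump contains the configuration file $1.
conf_is_loaded() {
  grep -qF "# configuration file $1:"
}
```

On the proxy hosts this might be run as `nginx -T 2>/dev/null | conf_is_loaded /etc/nginx/conf.d/od.com.conf && echo loaded`.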

Chapter 5: The K8S GUI resource-management plugin, Dashboard

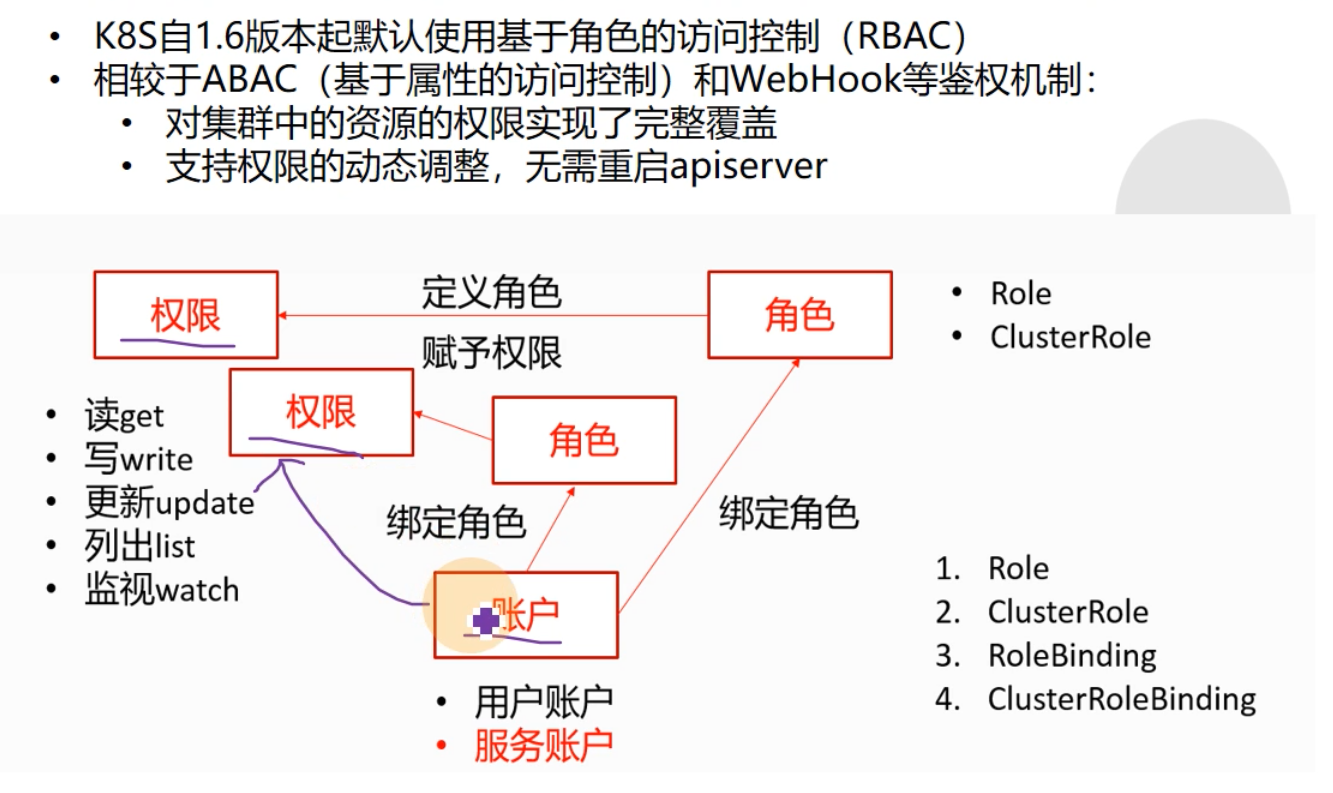

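K8S RBAC authorization: permissions are not granted to users directly. Instead, permissions are granted to roles, and users are bound to roles; that binding is how access is controlled.

1. Deploy kubernetes-dashboard

1. Prepare the dashboard image

On the ops host SHKF6-245.host.com: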

[root@shkf6-245 ~]# docker pull sunrisenan/kubernetes-dashboard-amd64:v1.10.1
[root@shkf6-245 ~]# docker pull sunrisenan/kubernetes-dashboard-amd64:v1.8.3
[root@shkf6-245 ~]# docker images |grep dash
sunrisenan/kubernetes-dashboard-amd64   v1.10.1   f9aed6605b81   11 months ago   122MB
sunrisenan/kubernetes-dashboard-amd64   v1.8.3    0c60bcf89900   21 months ago   102MB
[root@shkf6-245 ~]# docker tag f9aed6605b81 harbor.od.com/public/kubernetes-dashboard-amd64:v1.10.1
[root@shkf6-245 ~]# docker push !$
[root@shkf6-245 ~]# docker tag 0c60bcf89900 harbor.od.com/public/kubernetes-dashboard-amd64:v1.8.3
[root@shkf6-245 ~]# docker push !$

2. Prepare the manifests

On the ops host SHKF6-245.host.com:

- Create the directory

[root@shkf6-245 ~]# mkdir -p /data/k8s-yaml/dashboard && cd /data/k8s-yaml/dashboard

- rbac

[root@shkf6-245 dashboard]# cat rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

- Deployment

[root@shkf6-245 dashboard]# cat dp.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.od.com/public/kubernetes-dashboard-amd64:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

- Service

[root@shkf6-245 dashboard]# cat svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

- ingress

[root@shkf6-245 dashboard]# cat ingress.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.od.com
    http:
      paths:
      - backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

3. Apply them in order

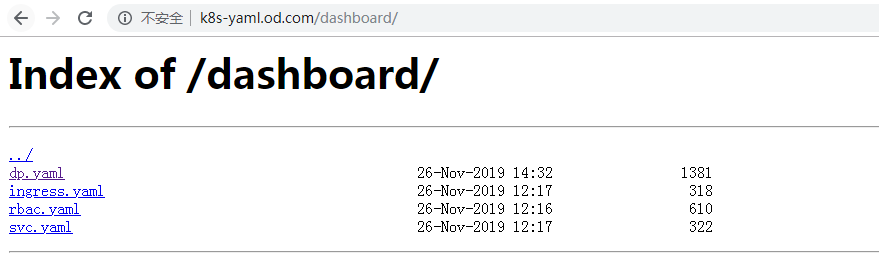

Open http://k8s-yaml.od.com/dashboard/ in a browser and check that the manifest files were created correctly.

On SHKF6-243.host.com:

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/rbac.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/svc.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/ingress.yaml

2. Resolve the domain name

- Add the DNS record

[root@shkf6-241 ~]# cat /var/named/od.com.zone
$ORIGIN od.com.
$TTL 600 ; 10 minutes
@ IN SOA dns.od.com. dnsadmin.od.com. (
        2019111208 ; serial (incremented by 1)
        10800      ; refresh (3 hours)
        900        ; retry (15 minutes)
        604800     ; expire (1 week)
        86400      ; minimum (1 day)
        )
        NS dns.od.com.
$TTL 60 ; 1 minute
dns        A    192.168.6.241
harbor     A    192.168.6.245
k8s-yaml   A    192.168.6.245
traefik    A    192.168.6.66
dashboard  A    192.168.6.66   ; add this record

- Restart named and check

[root@shkf6-241 ~]# systemctl restart named

[root@shkf6-243 ~]# dig dashboard.od.com @10.96.0.2 +short

192.168.6.66

[root@shkf6-243 ~]# dig dashboard.od.com @192.168.6.241 +short

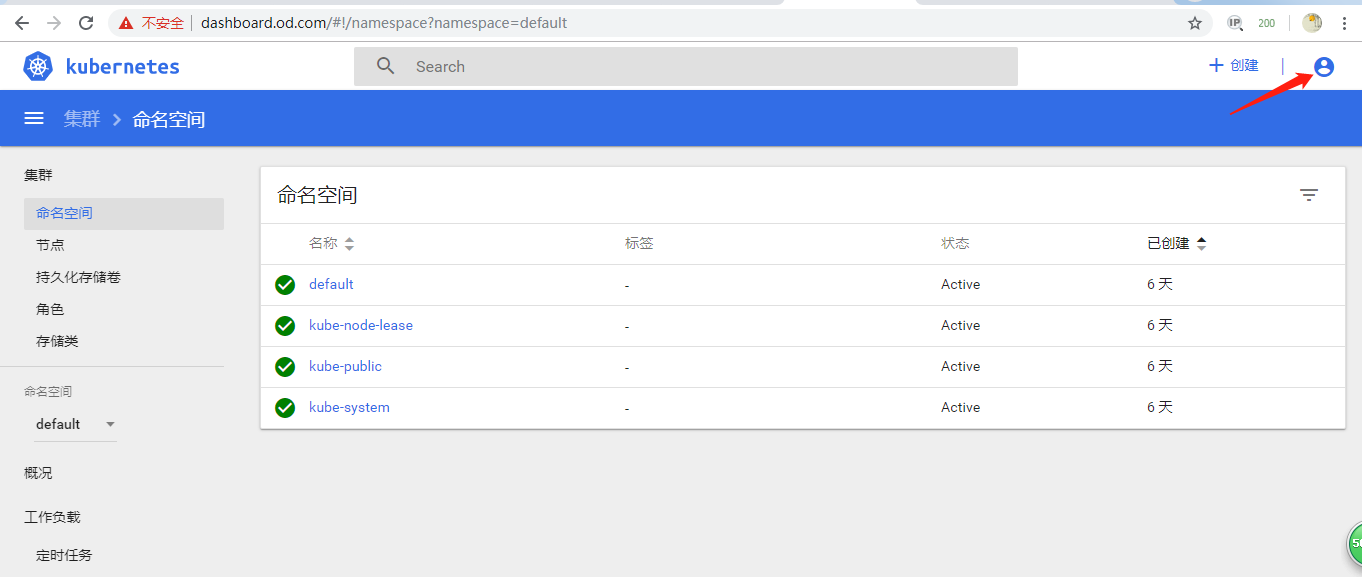

192.168.6.663.浏览器访问

浏览器访问:http://dashboard.od.com

4. Configure authentication

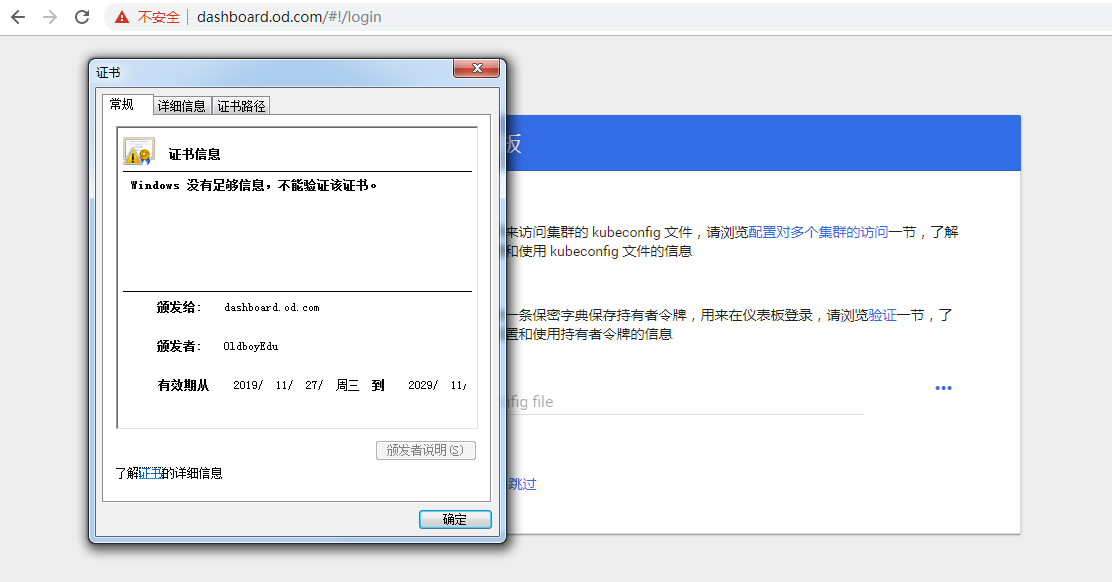

1. Issue the certificate

[root@shkf6-245 certs]# (umask 077; openssl genrsa -out dashboard.od.com.key 2048)
Generating RSA private key, 2048 bit long modulus
............................+++
........+++
e is 65537 (0x10001)
[root@shkf6-245 certs]# openssl req -new -key dashboard.od.com.key -out dashboard.od.com.csr -subj "/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops"
[root@shkf6-245 certs]# openssl x509 -req -in dashboard.od.com.csr -CA ca.pem -CAkey ca-key.pem -CAcreateserial -out dashboard.od.com.crt -days 3650
Signature ok
subject=/CN=dashboard.od.com/C=CN/ST=BJ/L=Beijing/O=OldboyEdu/OU=ops
Getting CA Private Key
[root@shkf6-245 certs]# ll dash*
-rw-r--r-- 1 root root 1196 Nov 27 12:52 dashboard.od.com.crt
-rw-r--r-- 1 root root 1005 Nov 27 12:52 dashboard.od.com.csr
-rw------- 1 root root 1679 Nov 27 12:52 dashboard.od.com.key

2. Check the certificate

[root@shkf6-245 certs]# cfssl-certinfo -cert dashboard.od.com.crt { "subject": { "common_name": "dashboard.od.com", "country": "CN", "organization": "OldboyEdu", "organizational_unit": "ops", "locality": "Beijing", "province": "BJ", "names": [ "dashboard.od.com", "CN", "BJ", "Beijing", "OldboyEdu", "ops" ] }, "issuer": { "common_name": "OldboyEdu", "country": "CN", "organization": "od", "organizational_unit": "ops", "locality": "beijing", "province": "beijing", "names": [ "CN", "beijing", "beijing", "od", "ops", "OldboyEdu" ] }, "serial_number": "11427294234507397728", "not_before": "2019-11-27T04:52:30Z", "not_after": "2029-11-24T04:52:30Z", "sigalg": "SHA256WithRSA", "authority_key_id": "", "subject_key_id": "", "pem": "-----BEGIN CERTIFICATE-----\nMIIDRTCCAi0CCQCeleeP167KYDANBgkqhkiG9w0BAQsFADBgMQswCQYDVQQGEwJD\nTjEQMA4GA1UECBMHYmVpamluZzEQMA4GA1UEBxMHYmVpamluZzELMAkGA1UEChMC\nb2QxDDAKBgNVBAsTA29wczESMBAGA1UEAxMJT2xkYm95RWR1MB4XDTE5MTEyNzA0\nNTIzMFoXDTI5MTEyNDA0NTIzMFowaTEZMBcGA1UEAwwQZGFzaGJvYXJkLm9kLmNv\nbTELMAkGA1UEBhMCQ04xCzAJBgNVBAgMAkJKMRAwDgYDVQQHDAdCZWlqaW5nMRIw\nEAYDVQQKDAlPbGRib3lFZHUxDDAKBgNVBAsMA29wczCCASIwDQYJKoZIhvcNAQEB\nBQADggEPADCCAQoCggEBALeeL9z8V3ysUqrAuT7lEKcF2bi0pSuwoWfFgfBtGmQa\nQtyNaOlyemEexeUOKaIRsNlw0fgcK6HyyLkaMFsVa7q+bpYBPKp4d7lTGU7mKJNG\nNcCU21G8WZYS4jVtd5IYSmmfNkCnzY7l71p1P+sAZNZ7ht3ocNh6jPcHLMpETLUU\nDaKHmT/5iAhxmgcE/V3DUnTawU9PXF2WnICL9xJtmyErBKF5KDIEjC1GVjC/ZLtT\nvEgbH57TYgrp4PeCEAQTtgNbVJuri4awaLpHkNz2iCTNlWpLaLmV1jT1NtChz6iw\n4lDfEgS6YgDh9ZhlB2YvnFSG2eq4tGm3MKorbuMq9S0CAwEAATANBgkqhkiG9w0B\nAQsFAAOCAQEAG6szkJDIvb0ge2R61eMBVe+CWHHSE6X4EOkiQCaCi3cs8h85ES63\nEdQ8/FZorqyZH6nJ/64OjiW1IosXRTFDGMRunqkJezzj9grYzUKfkdGxTv+55IxM\ngtH3P9wM1EeNwdJCpBq9xYaPzZdu0mzmd47BP7nuyrXzkMSecC/d+vrKngEnUXaZ\n9WK3eGnrGPmeW7z5j9uVsimzNlri8i8FNBTGCDx2sgJc16MtYfGhORwN4oVXCHiS\n4A/HVSYMUeR4kGxoX9RUbf8vylRsdEbKQ20M5cbWQAAH5LNig6jERRsuylEh4uJE\nubhEbfhePgZv+mkFQ6tsuIH/5ETSV4v/bg==\n-----END CERTIFICATE-----\n" }

3. Configure nginx

On shkf6-241 and shkf6-242:

- Copy the certificate and key

~]# mkdir /etc/nginx/certs

~]# scp shkf6-245:/opt/certs/dashboard.od.com.crt /etc/nginx/certs/

~]# scp shkf6-245:/opt/certs/dashboard.od.com.key /etc/nginx/certs/

- Configure the virtual host dashboard.od.com.conf to serve over HTTPS

[root@shkf6-241 ~]# cat /etc/nginx/conf.d/dashboard.od.com.conf
server {
    listen       80;
    server_name  dashboard.od.com;

    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen       443 ssl;
    server_name  dashboard.od.com;

    ssl_certificate "certs/dashboard.od.com.crt";
    ssl_certificate_key "certs/dashboard.od.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout  10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;

    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host       $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

- Reload the nginx configuration

~]# nginx -s reload

- Refresh the page and check

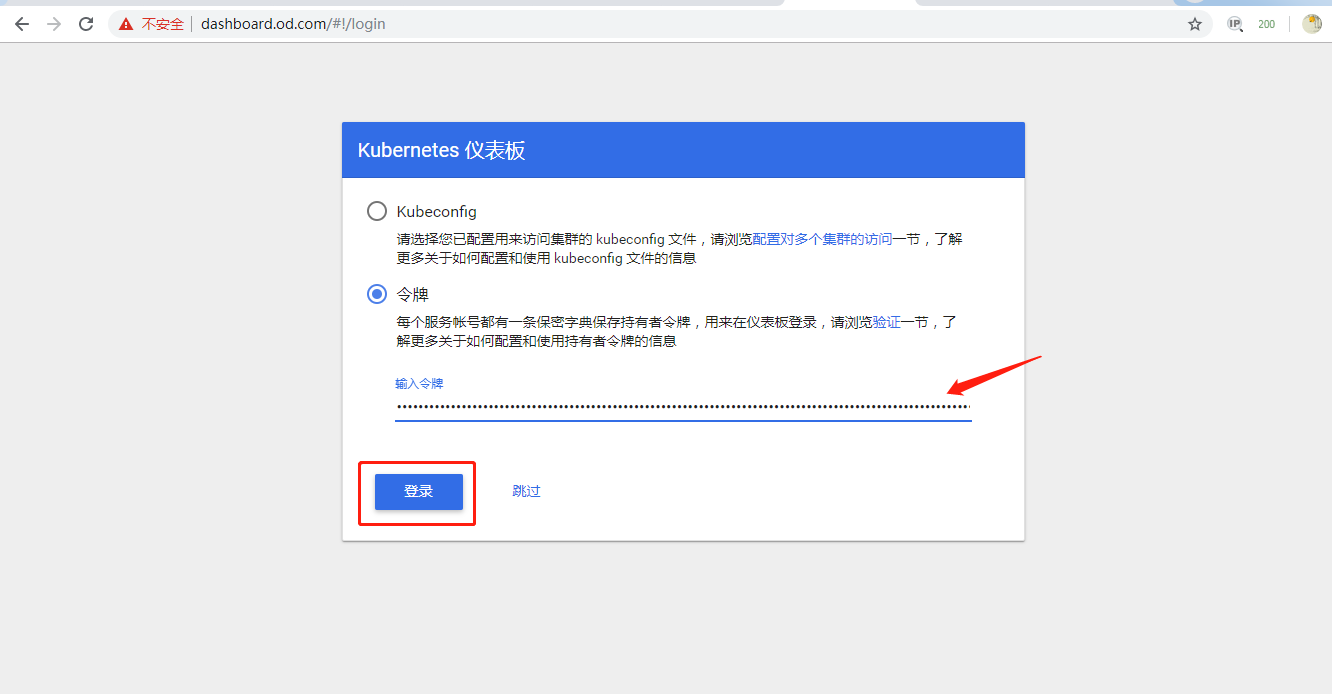

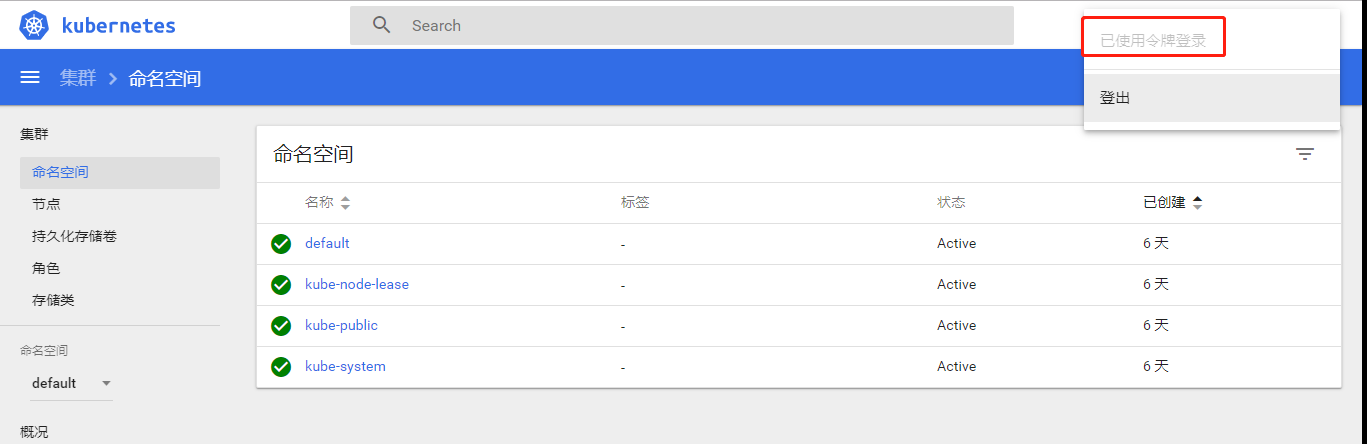

4. Get the kubernetes-dashboard-admin token

[root@shkf6-243 ~]# kubectl get secrets -n kube-system

NAME TYPE DATA AGE

coredns-token-r5s8r kubernetes.io/service-account-token 3 5d19h

default-token-689cg kubernetes.io/service-account-token 3 6d14h

kubernetes-dashboard-admin-token-w46s2 kubernetes.io/service-account-token 3 16h

kubernetes-dashboard-key-holder Opaque 2 14h

traefik-ingress-controller-token-nkfb8 kubernetes.io/service-account-token 3 5d1h

[root@shkf6-243 ~]# kubectl describe secret kubernetes-dashboard-admin-token-w46s2 -n kube-system |tail
Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard-admin
              kubernetes.io/service-account.uid: 11fedd46-3591-4c15-b32d-5818e5aca7d8

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1346 bytes
namespace:  11 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi13NDZzMiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjExZmVkZDQ2LTM1OTEtNGMxNS1iMzJkLTU4MThlNWFjYTdkOCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.HkVak9znUafeh4JTkzGRiH3uXVjcuHMTOsmz58xJy1intMn25ouC04KK7uplkAtd_IsA6FFo-Kkdqc3VKZ5u5xeymL2ccLaLiCXnlxAcVta5CuwyyO4AXeS8ss-BMKCAfeIldnqwJRPX2nzORJap3CTLU0Cswln8x8iXisA_gBuNVjiWzJ6tszMRi7vX1BM6rp6bompWfNR1xzBWifjsq8J4zhRYG9sVi9Ec3_BZUEfIc0ozFF91Jc5qCk2L04y8tHBauVuJo_ecgMdJfCDk7VKVnyF3Z-Fb8cELNugmeDlKYvv06YHPyvdxfdt99l6QpvuEetbMGAhh5hPOd9roVw

5. Verify logging in with the token
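A service-account token is a JWT: three base64url segments separated by dots, with the middle one holding the claims (namespace, service-account name, and so on). As a rough sketch for checking which account a token belongs to before pasting it into the login page, the payload can be decoded locally. `jwt_payload` is a hypothetical helper, and `base64 -d` is assumed to be the GNU coreutils or BusyBox variant.

```shell
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
# base64url uses "-" and "_" and drops padding, so both are restored first.
jwt_payload() {
  payload="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}
```

Decoding only reads the claims; it does not verify the signature, so it says nothing about whether the API server will accept the token.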

6. Upgrade dashboard to v1.10.1

On shkf6-245.host.com:

- Change the image address:

[root@shkf6-245 dashboard]# grep image dp.yaml
        image: harbor.od.com/public/kubernetes-dashboard-amd64:v1.10.1

On shkf6-243.host.com:

- Apply the configuration:

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml

7. The rbac-minimal manifest provided by the dashboard project

dashboard]# cat rbac-minimal.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard
  namespace: kube-system
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

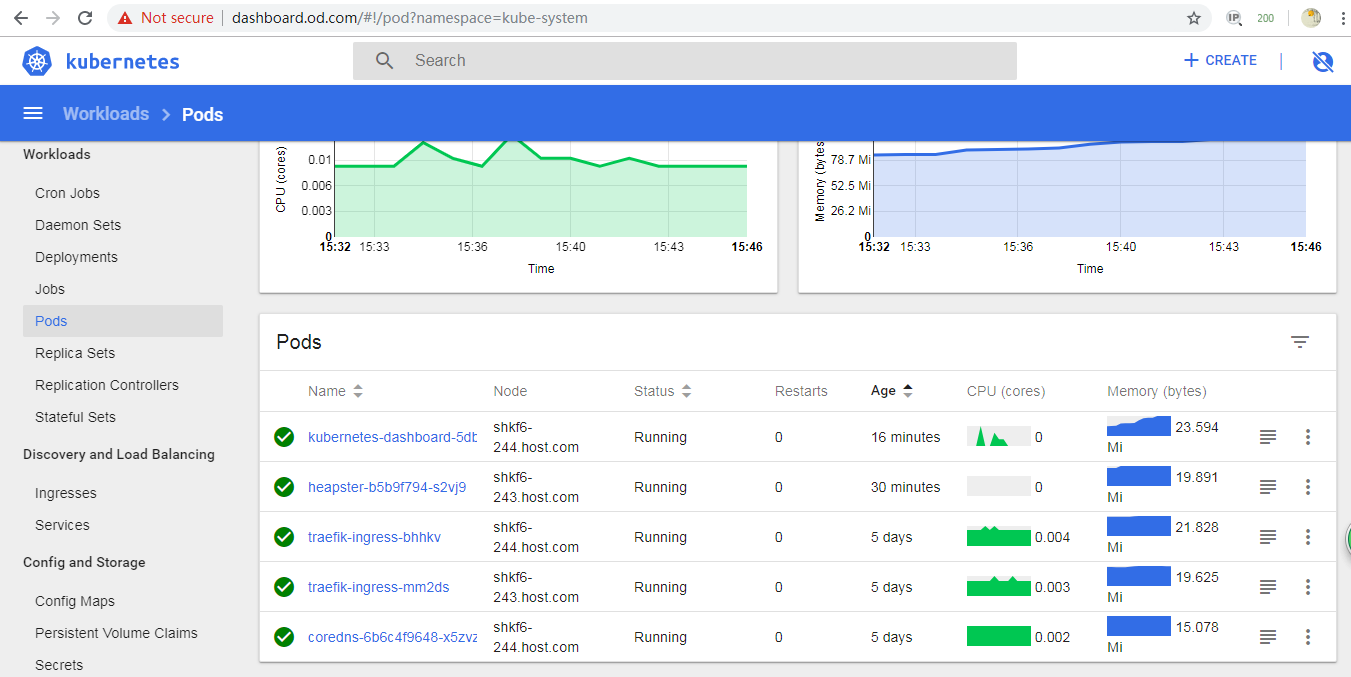

5. Deploy heapster

1. Prepare the heapster image

[root@shkf6-245 ~]# docker pull sunrisenan/heapster:v1.5.4

[root@shkf6-245 ~]# docker images|grep heapster

sunrisenan/heapster v1.5.4 c359b95ad38b 9 months ago 136MB

[root@shkf6-245 ~]# docker tag c359b95ad38b harbor.od.com/public/heapster:v1.5.4

[root@shkf6-245 ~]# docker push !$

2. Prepare the resource manifests

- rbac.yaml

[root@shkf6-245 ~]# cat /data/k8s-yaml/heapster/rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

- Deployment

[root@shkf6-245 ~]# cat /data/k8s-yaml/heapster/dp.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.od.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default

- service

[root@shkf6-245 ~]# cat /data/k8s-yaml/heapster/svc.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

3. Apply the resource manifests

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/heapster/rbac.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/heapster/dp.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/heapster/svc.yaml

4. Restart dashboard (optional)

[root@shkf6-243 ~]# kubectl delete -f http://k8s-yaml.od.com/dashboard/dp.yaml

[root@shkf6-243 ~]# kubectl apply -f http://k8s-yaml.od.com/dashboard/dp.yaml

5. Check

- Check from the host

[root@shkf6-243 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

shkf6-243.host.com 149m 3% 3643Mi 47%

shkf6-244.host.com 130m 3% 3300Mi 42%

[root@shkf6-243 ~]# kubectl top pod -n kube-public

NAME CPU(cores) MEMORY(bytes)

nginx-dp-5dfc689474-dm555 0m 10Mi

- Check in the browser

Chapter 6: Smooth upgrade of K8S

Step 1: See which machine runs fewer pods

[root@shkf6-243 src]# kubectl get nodes
NAME                 STATUS   ROLES         AGE     VERSION
shkf6-243.host.com   Ready    master,node   6d16h   v1.15.2
shkf6-244.host.com   Ready    master,node   6d16h   v1.15.2
[root@shkf6-243 src]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE     IP            NODE                 NOMINATED NODE   READINESS GATES
coredns-6b6c4f9648-x5zvz                1/1     Running   0          5d22h   172.6.244.3   shkf6-244.host.com   <none>           <none>
heapster-b5b9f794-s2vj9                 1/1     Running   0          76m     172.6.243.4   shkf6-243.host.com   <none>           <none>
kubernetes-dashboard-5dbdd9bdd7-qlk52   1/1     Running   0          62m     172.6.244.4   shkf6-244.host.com   <none>           <none>
traefik-ingress-bhhkv                   1/1     Running   0          5d4h    172.6.244.2   shkf6-244.host.com   <none>           <none>
traefik-ingress-mm2ds                   1/1     Running   0          5d4h    172.6.243.2   shkf6-243.host.com   <none>           <none>
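With more than a handful of pods, counting them per node by eye gets error-prone. A minimal sketch of the tally follows; `pods_per_node` is a hypothetical helper, and it assumes the -o wide column layout shown above, where NODE is the seventh whitespace-separated field.

```shell
# Hypothetical helper: read `kubectl get pods -o wide` output on stdin and
# print "<count> <node>" per node, fewest pods first. NR > 1 skips the header.
pods_per_node() {
  awk 'NR > 1 { count[$7]++ } END { for (n in count) print count[n], n }' | sort -n
}
```

It could be run as `kubectl get pods -n kube-system -o wide | pods_per_node`; the first line of output then names the node to upgrade first.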

Step 2: Disable the node on the layer-7 and layer-4 load balancers

Omitted

Step 3: Remove the node

[root@shkf6-243 src]# kubectl delete node shkf6-243.host.com

node "shkf6-243.host.com" deleted

[root@shkf6-243 src]# kubectl get node

NAME STATUS ROLES AGE VERSION

shkf6-244.host.com Ready master,node 6d17h v1.15.2

Step 4: Observe the running Pods

[root@shkf6-243 src]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-6b6c4f9648-x5zvz 1/1 Running 0 5d22h 172.6.244.3 shkf6-244.host.com <none> <none>

heapster-b5b9f794-dlt2z 1/1 Running 0 15s 172.6.244.6 shkf6-244.host.com <none> <none>

kubernetes-dashboard-5dbdd9bdd7-qlk52 1/1 Running 0 64m 172.6.244.4 shkf6-244.host.com <none> <none>

traefik-ingress-bhhkv 1/1 Running 0 5d4h 172.6.244.2 shkf6-244.host.com <none> <none>

Step 5: Check DNS

[root@shkf6-243 src]# dig -t A kubernetes.default.svc.cluster.local @10.96.0.2 +short

10.96.0.1

Step 6: Start the upgrade

[root@shkf6-243 ~]# cd /opt/src/
[root@shkf6-243 src]# wget http://down.sunrisenan.com/k8s/kubernetes/kubernetes-server-linux-amd64-v1.15.4.tar.gz
[root@shkf6-243 src]# tar xf kubernetes-server-linux-amd64-v1.15.4.tar.gz
[root@shkf6-243 src]# mv kubernetes /opt/kubernetes-v1.15.4
[root@shkf6-243 src]# cd /opt/
[root@shkf6-243 opt]# rm -f kubernetes
[root@shkf6-243 opt]# ln -s /opt/kubernetes-v1.15.4 kubernetes
[root@shkf6-243 opt]# cd /opt/kubernetes
[root@shkf6-243 kubernetes]# rm -f kubernetes-src.tar.gz
[root@shkf6-243 kubernetes]# cd server/bin/
[root@shkf6-243 bin]# rm -f *.tar
[root@shkf6-243 bin]# rm -f *tag
[root@shkf6-243 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/cert .
[root@shkf6-243 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/conf .
[root@shkf6-243 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/*.sh .
[root@shkf6-243 bin]# systemctl restart supervisord.service
[root@shkf6-243 bin]# kubectl get node
NAME                 STATUS   ROLES         AGE     VERSION
shkf6-243.host.com   Ready    <none>        16s     v1.15.4
shkf6-244.host.com   Ready    master,node   6d17h   v1.15.2

Upgrade the other node:

[root@shkf6-244 src]# kubectl get node
NAME                 STATUS   ROLES         AGE     VERSION
shkf6-243.host.com   Ready    <none>        95s     v1.15.4
shkf6-244.host.com   Ready    master,node   6d17h   v1.15.2
[root@shkf6-244 src]# kubectl delete node shkf6-244.host.com
node "shkf6-244.host.com" deleted
[root@shkf6-244 src]# kubectl get node
NAME                 STATUS   ROLES    AGE    VERSION
shkf6-243.host.com   Ready    <none>   3m2s   v1.15.4
[root@shkf6-244 src]# kubectl get pods -n kube-system -o wide
NAME                                    READY   STATUS    RESTARTS   AGE     IP            NODE                 NOMINATED NODE   READINESS GATES
coredns-6b6c4f9648-bxqcp                1/1     Running   0          20s     172.6.243.3   shkf6-243.host.com   <none>           <none>
heapster-b5b9f794-hjx74                 1/1     Running   0          20s     172.6.243.4   shkf6-243.host.com   <none>           <none>
kubernetes-dashboard-5dbdd9bdd7-gj6vc   1/1     Running   0          20s     172.6.243.5   shkf6-243.host.com   <none>           <none>
traefik-ingress-4hl97                   1/1     Running   0          3m22s   172.6.243.2   shkf6-243.host.com   <none>           <none>
[root@shkf6-244 src]# dig -t A kubernetes.default.svc.cluster.local @10.96.0.2 +short
10.96.0.1
[root@shkf6-244 src]# wget http://down.sunrisenan.com/k8s/kubernetes/kubernetes-server-linux-amd64-v1.15.4.tar.gz
[root@shkf6-244 src]# tar xf kubernetes-server-linux-amd64-v1.15.4.tar.gz
[root@shkf6-244 src]# mv kubernetes /opt/kubernetes-v1.15.4
[root@shkf6-244 ~]# cd /opt/
[root@shkf6-244 opt]# rm -f kubernetes
[root@shkf6-244 opt]# ln -s /opt/kubernetes-v1.15.4 kubernetes
[root@shkf6-244 opt]# cd kubernetes
[root@shkf6-244 kubernetes]# rm -f kubernetes-src.tar.gz
[root@shkf6-244 kubernetes]# cd server/bin/
[root@shkf6-244 bin]# rm -f *.tar *tag
[root@shkf6-244 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/cert .
[root@shkf6-244 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/conf .
[root@shkf6-244 bin]# cp -r /opt/kubernetes-v1.15.2/server/bin/*.sh .
[root@shkf6-244 bin]# systemctl restart supervisord.service
[root@shkf6-244 kubernetes]# kubectl get node
NAME                 STATUS   ROLES    AGE    VERSION
shkf6-243.host.com   Ready    <none>   16m    v1.15.4
shkf6-244.host.com   Ready    <none>   5m7s   v1.15.4
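The final check, that every node reports the new version, can be scripted for clusters with more than two nodes. This is only a sketch: `nodes_not_at` is a hypothetical helper, and it assumes VERSION is the last column of the kubectl get node output, as in the listings above.

```shell
# Hypothetical helper: read `kubectl get node` output on stdin and print the
# name of every node whose VERSION column differs from $1.
nodes_not_at() {
  awk -v want="$1" 'NR > 1 && $NF != want { print $1 }'
}
```

Run as `kubectl get node | nodes_not_at v1.15.4`, empty output would indicate that the upgrade has reached every node.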

At this point the dashboard is installed and the upgrade test is complete, which means the basic build of the K8S platform is done.