import requests

from bs4 import BeautifulSoup

import time

headers={

#'User-Agent':'Nokia6600/1.0 (3.42.1) SymbianOS/7.0s Series60/2.0 Profile/MIDP-2.0 Configuration/CLDC-1.0'

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/55.0.2883.87 Safari/537.36'

}

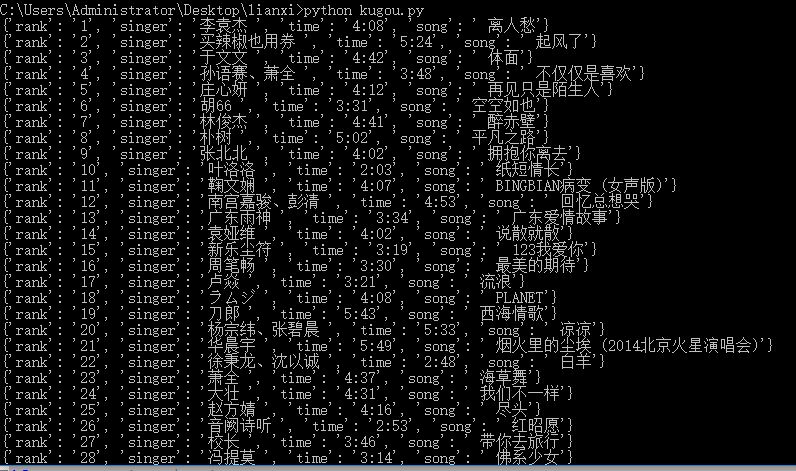

def get_info(url):

res=requests.get(url,headers=headers)

soup=BeautifulSoup(res.text,'lxml')

ranks=soup.select('span.pc_temp_num')

titles=soup.select(' div.pc_temp_songlist > ul > li > a')

times=soup.select('span.pc_temp_tips_r > span')

for rank,title,time in zip(ranks,titles,times):

data={

'rank':rank.get_text().strip(),

'singer':title.get_text().split('-')[0],

'song':title.get_text().split('-')[1],

'time':time.get_text().strip()

}

print(data)

if __name__=='__main__':

urls=['http://www.kugou.com/yy/rank/home/{}-8888.html?from=homepage'.format(str(i)) for i in range(1,4)]

for url in urls:

get_info(url)

time.sleep(1)

![]()

浙公网安备 33010602011771号

浙公网安备 33010602011771号