Person Transfer GAN to Bridge Domain Gap for Person Re-identification

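Note: writing original content takes effort. If you repost this, please credit the original author and source. Thank you for your support!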

Background

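Person re-identification (Person ReID) is the task of taking an image or video of a pedestrian (the probe) and identifying that same person in a library of images or videos (the gallery) captured by a surveillance camera network. It can be viewed as a sub-problem of content-based image retrieval (CBIR).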

Paper title: Person Transfer GAN to Bridge Domain Gap for Person Re-identification

Venue: CVPR 2018

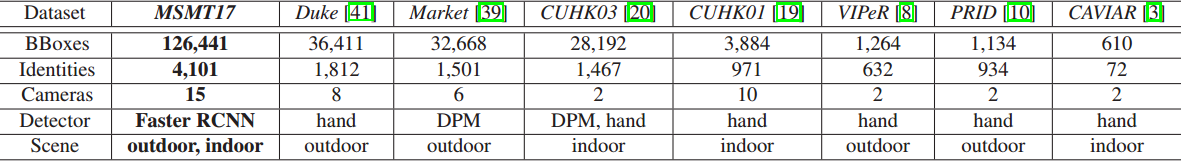

Abstract: Although the performance of person Re-Identification (ReID) has been significantly boosted, many challenging issues in real scenarios have not been fully investigated, e.g., the complex scenes and lighting variations, viewpoint and pose changes, and the large number of identities in a camera network. To facilitate the research towards conquering those issues, this paper contributes a new dataset called MSMT17 with many important features, e.g., 1) the raw videos are taken by a 15-camera network deployed in both indoor and outdoor scenes, 2) the videos cover a long period of time and present complex lighting variations, and 3) it contains currently the largest number of annotated identities, i.e., 4101 identities and 126441 bounding boxes. We also observe that domain gap commonly exists between datasets, which essentially causes severe performance drop when training and testing on different datasets. As a result, available training data cannot be effectively leveraged for new testing domains. To relieve the expensive costs of annotating new training samples, we propose a Person Transfer Generative Adversarial Network (PTGAN) to bridge the domain gap. Comprehensive experiments show that the domain gap could be substantially narrowed down by the PTGAN.

Main Content

MSMT17

Dataset website: http://www.pkuvmc.com

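The paper targets the following shortcomings of existing Person ReID datasets:

- Small data scale

- Limited scene variety

- Short collection time span, so lighting changes are not pronounced

- Unreasonable annotation methods

To address them, the paper releases a new Person ReID dataset, MSMT17. At the time of publication, MSMT17 was the largest Person ReID dataset by data volume: 126441 bounding boxes, 4101 identities, and 15 cameras covering both indoor and outdoor scenes. Bounding boxes are produced with the more advanced Faster RCNN detector.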

Person Transfer GAN (PTGAN)

The Domain Gap Phenomenon

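For example, a model trained on the CUHK03 dataset and tested on the PRID dataset reaches a rank-1 accuracy of only 2.0%. Training and testing a ReID algorithm on different datasets causes a sharp drop in performance, and this drop is widespread. It means that a model trained on existing training data cannot be applied directly to a new dataset, so reducing the impact of domain gap in order to make good use of existing annotated data is well worth studying. The paper proposes the PTGAN model for this purpose.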
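To make the rank-1 metric concrete, here is a minimal sketch of how it could be computed from feature vectors. It assumes Euclidean distance and toy 2-D features; the function names and data are hypothetical, and standard ReID protocols usually add steps (such as excluding same-camera matches) that this sketch leaves out.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(u, v)))

def rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids):
    """Fraction of probes whose nearest gallery entry shares their identity."""
    hits = 0
    for feat, pid in zip(probe_feats, probe_ids):
        # Index of the gallery feature closest to this probe.
        nearest = min(range(len(gallery_feats)),
                      key=lambda i: euclidean(feat, gallery_feats[i]))
        if gallery_ids[nearest] == pid:
            hits += 1
    return hits / len(probe_feats)

# Toy data (hypothetical): two probes, three gallery entries.
probe_feats = [[0.0, 0.0], [1.0, 1.0]]
probe_ids = [1, 2]
gallery_feats = [[0.1, 0.0], [5.0, 5.0], [0.9, 0.2]]
gallery_ids = [1, 2, 3]

print(rank1_accuracy(probe_feats, probe_ids, gallery_feats, gallery_ids))
```

In this toy case the first probe is matched correctly and the second is not, so the script prints 0.5. A domain gap shows up as exactly this kind of mismatch: features learned on one dataset place the wrong identity nearest to the probe on another.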

The causes of domain gap are complex. They may include differences in lighting, image resolution, ethnicity, season, background, and other factors.

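Suppose we are doing Person ReID on dataset B and want to make better use of the training data in an existing dataset A. We can try to transfer the pedestrian images in dataset A to the target dataset B. Because of the domain gap, the transfer algorithm must satisfy two requirements:

- The transferred pedestrian images should have the same style as the images in the target dataset. This minimizes the performance drop caused by the domain gap that comes from inconsistent style.

- The appearance features and identity cues that distinguish one pedestrian from another should remain unchanged after the transfer, because the pedestrian before and after the transfer carries the same label, that is, they should be the same person.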

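Because person transfer is similar to the unpaired image-to-image translation task, the paper builds the Person Transfer GAN model on top of Cycle-GAN, a model that performs well on that task. The loss function \(L_{PTGAN}\) of the PTGAN model is defined as:

\[L_{PTGAN} = L_{Style} + \lambda_1 L_{ID}\]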

where:

\(L_{Style}\): the style loss

\(L_{ID}\): the identity loss

\(\lambda_1\): the parameter for the trade-off between the two losses above

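With the following symbols defined, \(L_{Style}\) can be expressed as below:

\(G\): the style mapping function from dataset A to dataset B

\(\overline{G}\): the style mapping function from dataset B to dataset A

\(D_A\): the style discriminator for dataset A

\(D_B\): the style discriminator for dataset B

\[L_{Style} = L_{GAN}(G, D_B, A, B) + L_{GAN}(\overline{G}, D_A, B, A) + \lambda_2 L_{cyc}(G, \overline{G})\]

Here \(\lambda_2\) is the trade-off weight for the cycle consistency term, and:

\(L_{GAN}\): the standard adversarial loss

\(L_{cyc}\): the cycle consistency loss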
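As an illustration of the cycle consistency term, the sketch below assumes the Cycle-GAN style L1 formulation: an image mapped A to B and back again should match the original, and likewise for B to A. The toy "mapping functions" here are plain brightness shifts on flattened pixel lists, not neural networks, and all names are hypothetical.

```python
def l1_distance(u, v):
    """Sum of absolute pixel differences between two flattened images."""
    return sum(abs(x - y) for x, y in zip(u, v))

def cycle_consistency_loss(G, G_bar, batch_a, batch_b):
    """Batch-mean L1 error of the round trips A->B->A and B->A->B."""
    loss_a = sum(l1_distance(G_bar(G(a)), a) for a in batch_a) / len(batch_a)
    loss_b = sum(l1_distance(G(G_bar(b)), b) for b in batch_b) / len(batch_b)
    return loss_a + loss_b

# Toy stand-ins for the two style mappings (hypothetical).
def G(img):                 # A -> B: brighten by 2
    return [p + 2 for p in img]

def G_bar(img):             # B -> A: darken by 2, an exact inverse of G
    return [p - 2 for p in img]

def G_bar_imperfect(img):   # B -> A: darken by 1, not an exact inverse
    return [p - 1 for p in img]

batch_a = [[1, 2, 3]]
batch_b = [[4, 5, 6]]

print(cycle_consistency_loss(G, G_bar, batch_a, batch_b))            # 0.0
print(cycle_consistency_loss(G, G_bar_imperfect, batch_a, batch_b))  # 6.0
```

When the two mappings invert each other the loss is zero; any residual error after the round trip is penalized, which discourages the generators from discarding image content while changing style.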

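With the following symbols defined, \(L_{ID}\) can be expressed as below:

\(a\) and \(b\): original images from datasets A and B

\(G(a)\) and \(\overline{G}(b)\): transferred images obtained from images a and b

\(M(a)\) and \(M(b)\): foreground masks of images a and b

\[L_{ID} = \mathbb{E}_{a \sim p_{data}(a)}\left[\left\|(G(a) - a) \odot M(a)\right\|_2\right] + \mathbb{E}_{b \sim p_{data}(b)}\left[\left\|(\overline{G}(b) - b) \odot M(b)\right\|_2\right]\]

where \(\odot\) denotes element-wise multiplication, so only foreground (pedestrian) pixels contribute to the loss.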
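The identity loss can be sketched in a few lines. This is a minimal illustration, assuming images and masks are flattened lists of numbers, expectations are approximated by batch means, and the weight `lambda_1` is a hypothetical value chosen only for the example; it is not the authors' implementation.

```python
import math

def masked_l2(transferred, original, mask):
    """L2 norm of (transferred - original), counted only where mask is 1."""
    return math.sqrt(sum(((t - o) * m) ** 2
                         for t, o, m in zip(transferred, original, mask)))

def identity_loss(batch_a, batch_b):
    """Each batch is a list of (original, transferred, mask) triples.

    Returns the mean masked L2 change over batch A plus that over batch B.
    """
    term_a = sum(masked_l2(t, o, m) for o, t, m in batch_a) / len(batch_a)
    term_b = sum(masked_l2(t, o, m) for o, t, m in batch_b) / len(batch_b)
    return term_a + term_b

def ptgan_loss(style_loss, id_loss, lambda_1):
    """Total loss: style term plus weighted identity term."""
    return style_loss + lambda_1 * id_loss

# Toy example: the first two pixels are foreground, the last two background.
a = [0, 0, 0, 0]
G_a = [3, 4, 5, 5]          # background changed freely, foreground changed too
mask_a = [1, 1, 0, 0]

b = [1, 1]
G_bar_b = [1, 1]            # foreground left untouched
mask_b = [1, 1]

l_id = identity_loss([(a, G_a, mask_a)], [(b, G_bar_b, mask_b)])
print(l_id)                                        # 5.0
print(ptgan_loss(2.0, l_id, lambda_1=10))          # 52.0
```

Only the foreground changes (3 and 4) are penalized, giving an L2 norm of 5; the background changes are ignored. This is what lets the transfer restyle the scene while keeping the pedestrian's appearance intact.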

Transfer results

Summary

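- The paper releases MSMT17, a new dataset that is closer to real application scenarios. Because it reflects the complexity of those scenarios, MSMT17 is more challenging and has greater research value.

- The paper proposes the PTGAN model, which reduces the impact of domain gap, and demonstrates its effectiveness through experiments.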