Phase 1, Module 4 (HBase)

HBase Assignment

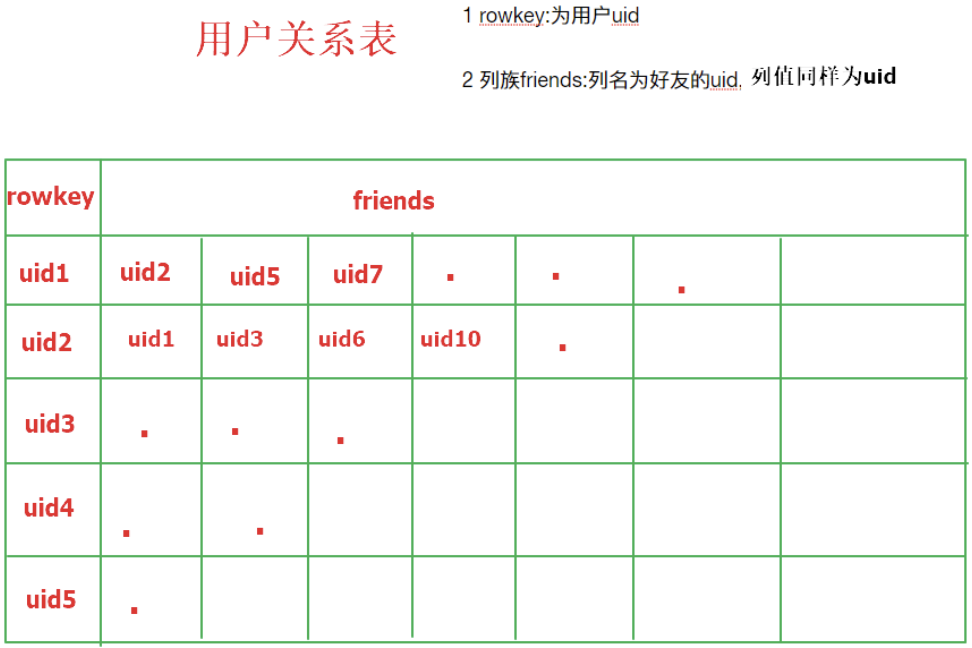

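Social networking sites and social apps store large amounts of user data, along with data about the relationships between users. For example, user A's friend list shows all of A's friends. The HBase table shown in the figure above stores the friend relationships of the currently registered users.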

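Requirements

- Use the HBase API to create a table with the structure shown above.
- Implement friend deletion. Friendship is bidirectional: when one user deletes a friend, that friend must also lose the user.

  For example, if uid1 deletes friend uid2, then uid1 must also be deleted from uid2's friend list.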
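The bidirectional rule can be pinned down with a small in-memory model before touching HBase. This is an illustration only, not the HBase client API: the hypothetical class `FriendDeleteSketch` uses a `Map<String, Set<String>>` in place of the `relation` table, keyed by rowkey, with each set holding the qualifiers of the `friends` family.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical in-memory stand-in for the relation table:
// rowkey (uid) -> qualifiers in the "friends" column family.
class FriendDeleteSketch {
    final Map<String, Set<String>> friends = new HashMap<>();

    // Record a friendship on both rows, since friendship is bidirectional.
    void befriend(String a, String b) {
        friends.computeIfAbsent(a, k -> new TreeSet<>()).add(b);
        friends.computeIfAbsent(b, k -> new TreeSet<>()).add(a);
    }

    // Deleting `friend` from `uid`'s list must also delete `uid` from `friend`'s list.
    void deleteFriend(String uid, String friend) {
        Set<String> own = friends.get(uid);
        if (own != null) own.remove(friend);
        Set<String> other = friends.get(friend);
        if (other != null) other.remove(uid);
    }

    public static void main(String[] args) {
        FriendDeleteSketch t = new FriendDeleteSketch();
        t.befriend("uid1", "uid2");
        t.befriend("uid1", "uid3");
        t.befriend("uid2", "uid3");
        t.deleteFriend("uid1", "uid2");
        System.out.println(t.friends.get("uid1")); // [uid3]
        System.out.println(t.friends.get("uid2")); // [uid3]
    }
}
```

Doing both deletes on the client like this is the simplest option, but in HBase the two rows may sit in different regions, so the two deletes are not atomic. The approach below instead leaves the second delete to a coprocessor.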

Approach

Create the table, load sample data, and delete a friend on the client side (RelationDemo.java):

```java
package com.lagou.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.HColumnDescriptor;
import org.apache.hadoop.hbase.HTableDescriptor;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

/**
 * HBase client for the social friend-relationship table.
 *
 * Table name: relation
 * Rowkey: uid
 * Column family: friends (one column per friend, qualifier = friend's uid)
 */
public class RelationDemo {
    static final TableName TABLE_NAME = TableName.valueOf("relation");
    static final byte[] CF = Bytes.toBytes("friends");

    static Configuration conf = null;
    static Connection conn = null;

    static {
        conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "hadoop01,hadoop02");
        conf.set("hbase.zookeeper.property.clientPort", "2181");
        // Obtain a connection to the HBase cluster from conf
        try {
            conn = ConnectionFactory.createConnection(conf);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Create the relation table with the "friends" column family
    public void createRelationTable() throws IOException {
        try (Admin admin = conn.getAdmin()) {
            HTableDescriptor relation = new HTableDescriptor(TABLE_NAME);
            relation.addFamily(new HColumnDescriptor(CF));
            admin.createTable(relation);
            System.out.println("relation table created!!");
        }
    }

    // Insert some initial data
    public void initRelationData() throws IOException {
        try (Table relation = conn.getTable(TABLE_NAME)) {
            // Row for user uid1
            Put uid1 = new Put(Bytes.toBytes("uid1"));
            uid1.addColumn(CF, Bytes.toBytes("uid2"), Bytes.toBytes("uid2"));
            uid1.addColumn(CF, Bytes.toBytes("uid3"), Bytes.toBytes("uid3"));
            uid1.addColumn(CF, Bytes.toBytes("uid4"), Bytes.toBytes("uid4"));
            // Row for user uid2
            Put uid2 = new Put(Bytes.toBytes("uid2"));
            uid2.addColumn(CF, Bytes.toBytes("uid1"), Bytes.toBytes("uid1"));
            uid2.addColumn(CF, Bytes.toBytes("uid3"), Bytes.toBytes("uid3"));
            uid2.addColumn(CF, Bytes.toBytes("uid4"), Bytes.toBytes("uid4"));
            // Collect the Puts and write them in one batch
            List<Put> puts = new ArrayList<>();
            puts.add(uid1);
            puts.add(uid2);
            relation.put(puts);
            System.out.println("data initialized!!");
        }
    }

    // Delete one friend of a user:
    // 1. delete the friend's column from the user's "friends" family (done here);
    // 2. delete the user's column from the friend's "friends" family
    //    (best done with a coprocessor, see MyProcessor).
    public void deleteFriends(String uid, String friend) throws IOException {
        try (Table relation = conn.getTable(TABLE_NAME)) {
            Delete delete = new Delete(Bytes.toBytes(uid));
            delete.addColumn(CF, Bytes.toBytes(friend));
            relation.delete(delete);
        }
    }

    public static void main(String[] args) throws IOException {
        RelationDemo r = new RelationDemo();
        r.createRelationTable(); // create the table
        r.initRelationData();    // insert initial data
        // Suppose uid1 deletes friend uid2; uncomment to run the delete
        // r.deleteFriends("uid1", "uid2");
        conn.close();
    }
}
```
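Each friend is stored as its own cell: the rowkey is the user, the qualifier in `friends` is the friend's uid, and here the value simply repeats that uid. HBase keeps rows and qualifiers in byte-lexicographic order. The hypothetical `RelationLayoutSketch` below mirrors what `initRelationData` writes, using nested `TreeMap`s in place of the table (not the HBase API), so the six resulting cells can be listed without a cluster.

```java
import java.util.Map;
import java.util.TreeMap;

class RelationLayoutSketch {
    // rowkey -> (qualifier in family "friends" -> value). TreeMap keeps keys sorted,
    // which for these ASCII keys matches HBase's byte-lexicographic order.
    static Map<String, Map<String, String>> initData() {
        Map<String, Map<String, String>> table = new TreeMap<>();
        for (String f : new String[] {"uid2", "uid3", "uid4"}) {
            table.computeIfAbsent("uid1", k -> new TreeMap<>()).put(f, f);
        }
        for (String f : new String[] {"uid1", "uid3", "uid4"}) {
            table.computeIfAbsent("uid2", k -> new TreeMap<>()).put(f, f);
        }
        return table;
    }

    public static void main(String[] args) {
        // One line per cell, e.g. "uid1 friends:uid2 = uid2"
        for (Map.Entry<String, Map<String, String>> row : initData().entrySet()) {
            for (Map.Entry<String, String> cell : row.getValue().entrySet()) {
                System.out.println(row.getKey() + " friends:" + cell.getKey() + " = " + cell.getValue());
            }
        }
    }
}
```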

Use an Observer coprocessor so that each delete triggers a second, mirrored delete (MyProcessor.java):

```java
package com.lagou.hbase;

import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Durability;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTableInterface;
import org.apache.hadoop.hbase.coprocessor.BaseRegionObserver;
import org.apache.hadoop.hbase.coprocessor.ObserverContext;
import org.apache.hadoop.hbase.coprocessor.RegionCoprocessorEnvironment;
import org.apache.hadoop.hbase.regionserver.wal.WALEdit;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;
import java.util.List;
import java.util.Map;

/**
 * Coprocessor: when a friend is deleted, also delete the reverse relationship.
 */
public class MyProcessor extends BaseRegionObserver {
    private static final byte[] CF = Bytes.toBytes("friends");

    // postDelete runs after the delete has been applied, so the exists() check
    // below sees the already-deleted cell and breaks the recursion.
    @Override
    public void postDelete(ObserverContext<RegionCoprocessorEnvironment> e, Delete delete,
                           WALEdit edit, Durability durability) throws IOException {
        try (HTableInterface relation = e.getEnvironment().getTable(TableName.valueOf("relation"))) {
            // All cells of this delete, grouped by column family
            for (Map.Entry<byte[], List<Cell>> entry : delete.getFamilyCellMap().entrySet()) {
                if (!Bytes.equals(entry.getKey(), CF)) {
                    continue; // only the friends family holds relationships
                }
                for (Cell cell : entry.getValue()) {
                    byte[] rowkey = CellUtil.cloneRow(cell);       // the user
                    byte[] column = CellUtil.cloneQualifier(cell); // the removed friend
                    if (column.length == 0) {
                        continue; // family-level delete markers carry no qualifier
                    }
                    // Delete the reverse cell only if it still exists. This check is
                    // required; without it the coprocessor would call itself in a
                    // loop and exhaust resources.
                    boolean exists = relation.exists(new Get(column).addColumn(CF, rowkey));
                    if (exists) {
                        relation.delete(new Delete(column).addColumn(CF, rowkey));
                    }
                }
            }
        }
    }
}
```
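Why the `exists` check stops the recursion can be traced without a cluster. The sketch below is a hypothetical in-memory simulation, not HBase code: `delete` removes a cell and then calls `onPostDelete` (matching the fact that `postDelete` runs after the delete is applied), and `onPostDelete` repeats `MyProcessor`'s swap-and-check logic while counting how often the hook fires.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical simulation of MyProcessor's postDelete logic.
// "table" stands in for the relation table; onPostDelete stands in for the observer hook.
class ObserverRecursionSketch {
    final Map<String, Set<String>> table = new HashMap<>();
    int hookCalls = 0;

    void put(String row, String qualifier) {
        table.computeIfAbsent(row, k -> new TreeSet<>()).add(qualifier);
    }

    boolean exists(String row, String qualifier) {
        Set<String> cols = table.get(row);
        return cols != null && cols.contains(qualifier);
    }

    // Apply the delete first, then fire the "post" hook.
    void delete(String row, String qualifier) {
        Set<String> cols = table.get(row);
        if (cols != null) cols.remove(qualifier);
        onPostDelete(row, qualifier);
    }

    // Swap rowkey and qualifier; only delete if the mirrored cell still exists.
    // Removing this check makes delete/onPostDelete recurse forever.
    void onPostDelete(String rowkey, String column) {
        hookCalls++;
        if (exists(column, rowkey)) {
            delete(column, rowkey);
        }
    }

    public static void main(String[] args) {
        ObserverRecursionSketch s = new ObserverRecursionSketch();
        s.put("uid1", "uid2");
        s.put("uid2", "uid1");
        s.delete("uid1", "uid2");
        System.out.println(s.table + " hookCalls=" + s.hookCalls);
    }
}
```

For `delete("uid1", "uid2")` the hook fires twice: once for the client's delete, which then removes `uid2 -> uid1`, and once for that mirrored delete, where the check finds `uid1 -> uid2` already gone and stops.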

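Package the project as a jar and upload it to HDFS. The target directory /processor must already exist; otherwise `-put` writes the jar to a file named /processor:

```
hadoop fs -mkdir -p /processor
hadoop fs -put /root/jars/myprocessor.jar /processor
```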

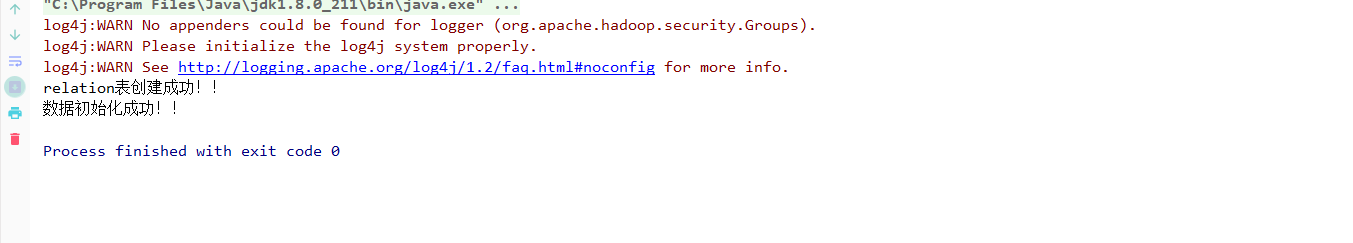

Run the RelationDemo class to create the table and insert the data.

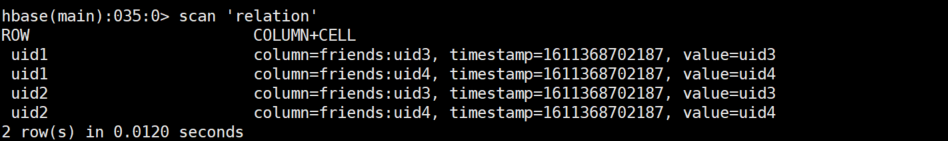

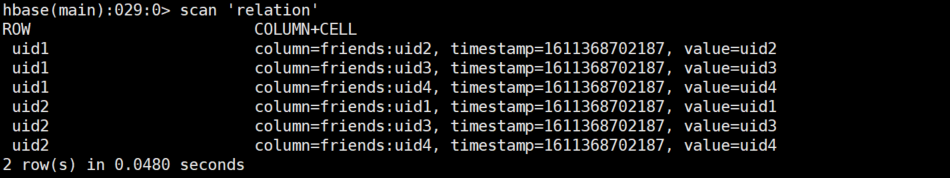

Check the data:

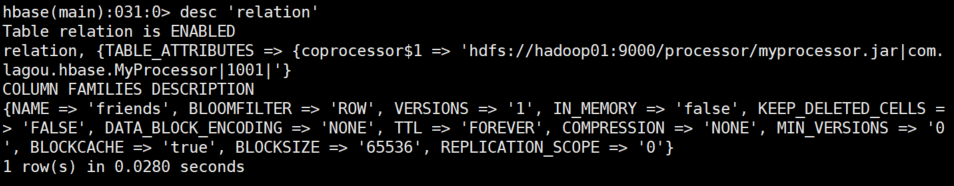

Add the coprocessor to the table:

```
alter 'relation', METHOD => 'table_att', 'Coprocessor' => 'hdfs://hadoop01:9000/processor/myprocessor.jar|com.lagou.hbase.MyProcessor|1001|'
```
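The `Coprocessor` attribute value is a `|`-separated string of four fields: the jar location, the fully qualified observer class, the priority, and optional arguments (empty here, hence the trailing `|`). The helper below is hypothetical and only assembles that string, which makes it easier to check each field before pasting the value into the shell.

```java
class CoprocessorSpecSketch {
    // Hypothetical helper: joins the four fields of a table_att Coprocessor value.
    // Order: jar path | observer class | priority | arguments (may be empty).
    static String spec(String jarPath, String className, int priority, String args) {
        return String.join("|", jarPath, className, Integer.toString(priority), args);
    }

    public static void main(String[] args) {
        // prints hdfs://hadoop01:9000/processor/myprocessor.jar|com.lagou.hbase.MyProcessor|1001|
        System.out.println(spec("hdfs://hadoop01:9000/processor/myprocessor.jar",
                "com.lagou.hbase.MyProcessor", 1001, ""));
    }
}
```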


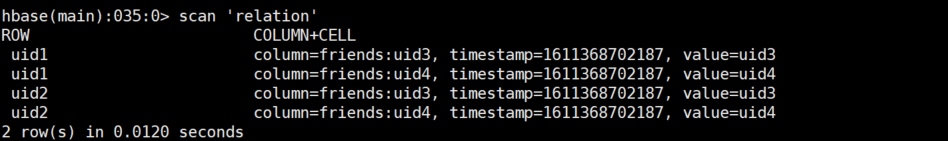

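Run the delete. With the coprocessor loaded, removing friends:uid2 from row uid1 should also remove friends:uid1 from row uid2:

```
delete 'relation','uid1','friends:uid2'
```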