[Deep Learning with PyTorch] Linear Regression

Implementing Linear Regression from Scratch

This version uses only tensors and automatic differentiation.
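As a quick illustration of what automatic differentiation provides, the sketch below compares the gradients computed by `backward()` with the hand-derived gradients of a summed half squared error. The tiny batch `X`, `y` and the names `grad_w_manual`, `grad_b_manual`, and `grads_match` are made up for this example.

```python
import torch

# Tiny hand-made batch (hypothetical values, chosen only for illustration)
X = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
y = torch.tensor([[1.0], [2.0]])

# Parameters that autograd should track
w = torch.zeros((2, 1), requires_grad=True)
b = torch.zeros(1, requires_grad=True)

# Forward pass: linear model and summed half squared error
y_hat = torch.matmul(X, w) + b
loss = ((y_hat - y) ** 2 / 2).sum()

# Backward pass: autograd fills w.grad and b.grad
loss.backward()

# Hand-derived gradients: dL/dw = X^T (y_hat - y), dL/db = sum(y_hat - y)
with torch.no_grad():
    residual = torch.matmul(X, w) + b - y
    grad_w_manual = torch.matmul(X.T, residual)
    grad_b_manual = residual.sum().reshape(1)

grads_match = torch.allclose(w.grad, grad_w_manual) and torch.allclose(b.grad, grad_b_manual)
print(grads_match)
```

If the two agree, the training loop can rely on `backward()` instead of coding the derivatives by hand.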

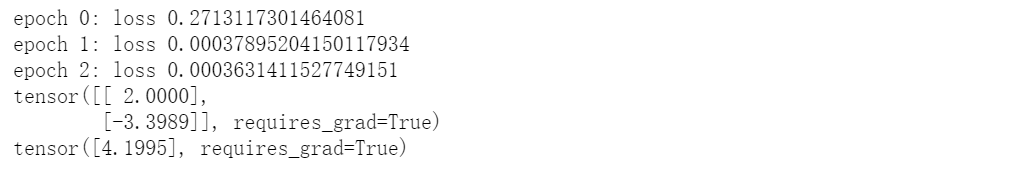

    import random

    import torch


    # Generate a synthetic dataset
    def synthetic_data(w, b, num_examples):
        """Generate y = Xw + b + noise."""
        X = torch.normal(0, 1, (num_examples, len(w)))
        y = torch.matmul(X, w) + b
        y += torch.normal(0, 0.01, y.shape)
        return X, y.reshape((-1, 1))


    true_w = torch.tensor([2, -3.4])
    true_b = 4.2
    features, labels = synthetic_data(true_w, true_b, 1000)


    # Read the dataset in shuffled minibatches
    def data_iter(features, labels, batch_size):
        example_nums = len(features)
        indices = list(range(example_nums))
        random.shuffle(indices)
        for i in range(0, example_nums, batch_size):
            batch_indices = torch.tensor(indices[i:min(i + batch_size, example_nums)])
            yield features[batch_indices], labels[batch_indices]


    # Define the model, loss function, and optimization algorithm
    def lin_reg(X, w, b):
        return torch.matmul(X, w) + b


    def loss_fun(y_hat, y):
        # Summed half squared error; sgd() divides by batch_size to average it
        return ((y_hat - y) ** 2 / 2).sum()


    def sgd(paras, lr, batch_size):
        # Update the parameters in place without recording the update in the graph
        with torch.no_grad():
            for para in paras:
                para -= lr * para.grad / batch_size
                para.grad.zero_()


    # Train the model
    def train(w, b, features, labels, lr, batch_size, epoches):
        for i in range(epoches):
            for X, y in data_iter(features, labels, batch_size):
                y_hat = lin_reg(X, w, b)
                loss = loss_fun(y_hat, y)
                loss.backward()
                sgd([w, b], lr, batch_size)
            # Report the mean loss over the full dataset using the updated parameters
            with torch.no_grad():
                train_loss = loss_fun(lin_reg(features, w, b), labels) / len(features)
            print('epoch {0}: loss {1:f}'.format(i + 1, float(train_loss)))
        return w, b


    w = torch.normal(0, 0.01, size=(2, 1), requires_grad=True)
    b = torch.zeros(1, requires_grad=True)
    w, b = train(w, b, features, labels, 0.03, 10, 3)
    print(w)
    print(b)
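For reference, the computation that `loss_fun` and `sgd` carry out on each minibatch can be written out as below. The symbols are notation introduced here, not taken from the code: $\mathcal{B}$ is the minibatch, $\eta$ is the learning rate `lr`, and $\mathbf{x}^{(i)}$, $y^{(i)}$ are the features and label of example $i$.

```latex
\begin{aligned}
\hat{y}^{(i)} &= \mathbf{w}^\top \mathbf{x}^{(i)} + b \\
L_{\mathcal{B}}(\mathbf{w}, b) &= \sum_{i \in \mathcal{B}} \tfrac{1}{2}\left(\hat{y}^{(i)} - y^{(i)}\right)^2 \\
\nabla_{\mathbf{w}} L_{\mathcal{B}} &= \sum_{i \in \mathcal{B}} \mathbf{x}^{(i)}\left(\hat{y}^{(i)} - y^{(i)}\right), \qquad
\frac{\partial L_{\mathcal{B}}}{\partial b} = \sum_{i \in \mathcal{B}} \left(\hat{y}^{(i)} - y^{(i)}\right) \\
\mathbf{w} &\leftarrow \mathbf{w} - \frac{\eta}{|\mathcal{B}|}\,\nabla_{\mathbf{w}} L_{\mathcal{B}}, \qquad
b \leftarrow b - \frac{\eta}{|\mathcal{B}|}\,\frac{\partial L_{\mathcal{B}}}{\partial b}
\end{aligned}
```

Because the loss is summed over the minibatch, the division by `batch_size` in `sgd` turns each step into an average over the minibatch. The code divides by the `batch_size` argument rather than the actual batch length, so a smaller final batch would be scaled slightly differently. With 1000 examples and a batch size of 10, that case does not arise.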

Concise Implementation of Linear Regression

This version uses the high-level APIs of the deep learning framework.

    import torch
    from torch import nn
    from torch.utils import data


    # Generate a synthetic dataset
    def synthetic_data(w, b, num_examples):
        """Generate y = Xw + b + noise."""
        X = torch.normal(0, 1, (num_examples, len(w)))
        y = torch.matmul(X, w) + b
        y += torch.normal(0, 0.01, y.shape)
        return X, y.reshape((-1, 1))


    true_w = torch.tensor([2, -3.4])
    true_b = 4.2
    features, labels = synthetic_data(true_w, true_b, 1000)


    # Read the dataset
    def load_array(data_arrays, batch_size, is_train=True):
        """Construct a PyTorch data iterator."""
        dataset = data.TensorDataset(*data_arrays)
        return data.DataLoader(dataset, batch_size, shuffle=is_train)


    batch_size = 10
    data_iter = load_array((features, labels), batch_size)

    # Define the model ("nn" is short for neural network).
    # The Sequential class chains multiple layers together. ... Given input data,
    # a Sequential instance passes it to the first layer, then feeds the first
    # layer's output to the second layer as its input, and so on.
    # The first argument of nn.Linear is the input feature dimension and the
    # second is the output feature dimension.
    net = nn.Sequential(nn.Linear(2, 1))

    # Initialize the model parameters
    net[0].weight.data.normal_(0, 0.01)
    net[0].bias.data.fill_(0)

    # Loss function: squared L2 error, averaged over all samples by default
    loss = nn.MSELoss()

    # Optimization algorithm
    trainer = torch.optim.SGD(net.parameters(), lr=0.03)

    # Train the model
    num_epochs = 5
    for epoch in range(num_epochs):
        for X, y in data_iter:
            l = loss(net(X), y)
            trainer.zero_grad()
            l.backward()
            trainer.step()
        # Report the loss over the full dataset after each epoch
        l = loss(net(features), labels)
        print(f'epoch {epoch + 1}, loss {l:f}')

    # Learned parameters live inside the Linear layer
    print(net[0].weight.data)
    print(net[0].bias.data)
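The two implementations do not use exactly the same loss scale. `nn.MSELoss()` averages the squared errors, whereas the from-scratch version sums half squared errors and divides by the batch size inside its optimizer step. The following sketch checks that relation on random tensors; the names `mse_mean`, `mse_sum`, `half_sum`, and `same_scale` are introduced only for this illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Random stand-ins for one minibatch of predictions and labels
y_hat = torch.randn(10, 1)
y = torch.randn(10, 1)
batch_size = y.shape[0]

# Default reduction='mean': average of the squared errors
mse_mean = nn.MSELoss()(y_hat, y)
# reduction='sum': plain sum of the squared errors
mse_sum = nn.MSELoss(reduction='sum')(y_hat, y)
# Loss used in the from-scratch version: sum of half squared errors
half_sum = ((y_hat - y) ** 2 / 2).sum()

# The mean-reduced MSE is twice the averaged half squared error
same_scale = torch.allclose(mse_mean, 2 * half_sum / batch_size)
# The sum-reduced MSE is twice the summed half squared error
sum_matches = torch.allclose(mse_sum, 2 * half_sum)
print(same_scale, sum_matches)
```

One consequence, assuming the same `lr=0.03` in both versions: the gradient step in the concise implementation is twice as large as in the from-scratch one, so the per-epoch loss values of the two runs are not directly comparable.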

