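Preface:

Logstash and Filebeat can both collect logs. Filebeat is lighter and uses fewer resources, while Logstash provides filter plugins that can parse and transform log events. A common architecture is: Filebeat collects the logs and ships them to a message queue such as Redis or Kafka; Logstash then reads from the queue, parses the events with its filters, and stores the results in Elasticsearch.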

1. Pull the Logstash image

sudo docker pull logstash:7.6.0

2. Build the Logstash container with Docker

Create a Logstash container:

sudo docker run -it -d -p 5044:5044 -p 5045:5045 --name logstash1 --net mynetwork logstash:7.6.0

Copy the container's configuration files out to the host so they can be edited:

sudo docker cp logstash1:/usr/share/logstash/config/ /home/xujk/Work/Docker/elasticsearch/logstash

sudo docker cp logstash1:/usr/share/logstash/pipeline/ /home/xujk/Work/Docker/elasticsearch/logstash

sudo docker cp logstash1:/usr/share/logstash/logstash-core/lib/jars/ /web/logstash/

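Remove the first container, since its name and published ports would conflict with the new one (for example with sudo docker rm -f logstash1), then create the Logstash container again with the configuration directories mounted from the host so they are easy to edit:

sudo docker run -d -p 5044:5044 -p 5045:5045 --privileged=true -v /home/xujk/Work/Docker/elasticsearch/logstash/config/:/usr/share/logstash/config/ -v /home/xujk/Work/Docker/elasticsearch/logstash/pipeline/:/usr/share/logstash/pipeline/ --name=logstash1 logstash:7.6.0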

Edit the configuration file: /config/logstash.yml

http.host: "0.0.0.0"

#xpack.monitoring.elasticsearch.hosts: [ "http://elasticsearch:9200" ]

xpack.monitoring.enabled: true

xpack.monitoring.elasticsearch.username: logstash_system

xpack.monitoring.elasticsearch.password: xujingkun

xpack.monitoring.elasticsearch.hosts: [ "http://192.168.231.132:9200" ]


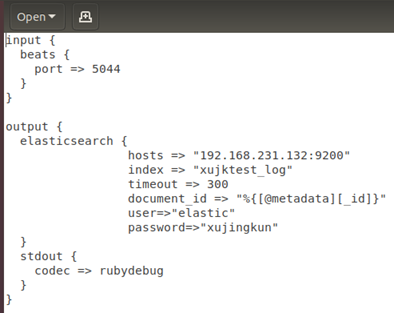

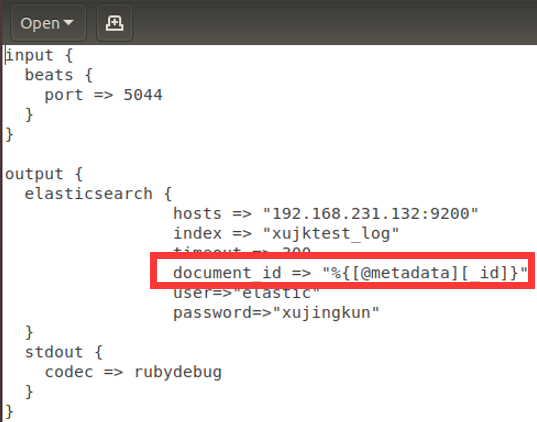

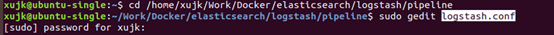

Edit the configuration file: /pipeline/logstash.conf

input {

beats {

port => 5044

}

}

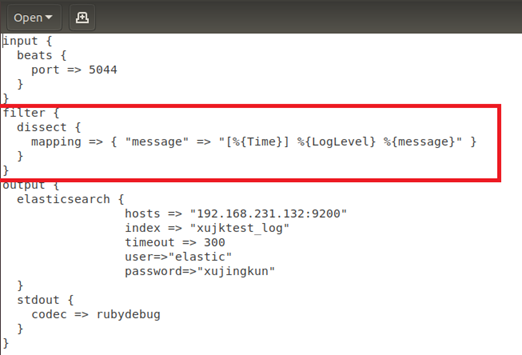

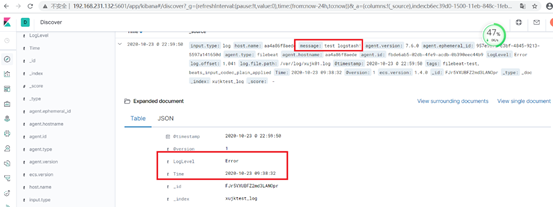

filter {

dissect {

mapping => { "message" => "[%{Time}] %{LogLevel} %{message}" }

}

}

output {

elasticsearch {

hosts => "192.168.231.132:9200"

index => "xujktest_log"

timeout => 300

user=>"elastic"

password=>"xujingkun"

}

stdout {

codec => rubydebug

}

}


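With Logstash configured, change the Filebeat configuration from the previous article so that it outputs to Logstash; see the previous article for details.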
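As a reminder of the shape of that Filebeat change, here is a minimal sketch that writes the relevant fragment to a scratch file. The address 192.168.231.132:5044 is an assumption (the Elasticsearch host used in this article plus the beats port opened in the input above), and filebeat-output.yml is a hypothetical file name; merge the fragment into your real filebeat.yml by hand.

```shell
#!/usr/bin/env bash
# Assumption: Logstash is reachable on the same host as Elasticsearch,
# on the beats input port 5044 configured in logstash.conf.
LOGSTASH_ADDR="192.168.231.132:5044"
# Hypothetical scratch file; this is NOT the live Filebeat config.
OUT_FILE="filebeat-output.yml"

# Write the output section that points Filebeat at Logstash.
cat > "$OUT_FILE" <<EOF
output.logstash:
  hosts: ["${LOGSTASH_ADDR}"]
EOF

cat "$OUT_FILE"
```

Filebeat allows only one output to be enabled at a time, so any existing output.elasticsearch section has to be commented out when this one is added.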

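Using the dissect filter plugin in Logstash:

dissect has limited applicability. It splits each line on literal delimiters rather than regular expressions, so it is mainly suited to logs where every line has a similar format and the delimiters are clear and simple.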
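To make the delimiter-based splitting concrete, the sketch below emulates the mapping "[%{Time}] %{LogLevel} %{message}" from the pipeline above using plain shell parameter expansion. It is only an illustration of the idea, not how Logstash implements dissect; the function name dissect_line and the sample log line are made up for this example, and dissect modifiers are not covered.

```shell
#!/usr/bin/env bash
# Emulates the mapping "[%{Time}] %{LogLevel} %{message}" on a single line.
# dissect_line is a hypothetical helper, not part of Logstash.
dissect_line() {
  local line=$1
  # The line must start with "[" and contain "] " followed by a space-separated level.
  case $line in
    "["*"] "*" "*) ;;
    *) return 1 ;;
  esac
  local rest=${line#\[}     # drop the leading "["
  Time=${rest%%"] "*}       # text before the first "] "
  rest=${rest#*"] "}        # text after the first "] "
  LogLevel=${rest%% *}      # text before the next space
  message=${rest#* }        # everything after that space
}

# Sample line invented for illustration.
dissect_line "[2020-03-01 10:15:00] ERROR connection refused by peer"
echo "Time=$Time"
echo "LogLevel=$LogLevel"
echo "message=$message"
```

A line that does not follow this layout is rejected by the helper, which mirrors the limitation described above: once the format varies from line to line, a pattern-based filter such as grok is usually the better fit.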

FAQ & problems encountered:

When Logstash synced the log data, only a single record showed up.


This wraps up the ELK log collection walkthrough!

