Custom RNN Structures in PyTorch (with Code)

Custom LSTM Structures in PyTorch (with Code)

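Sometimes we need to modify the structure of an LSTM, for example to replace its nonlinear functions with piecewise linear ones. This post shows how to define a custom LSTM in PyTorch, then builds a single-layer LSTM network with a reversed direction on the IMDB dataset to verify that the custom LSTM works.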


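I. Overall Program Structure

To process an input of shape [batch_size, length, input_dim], the required LSTM structure is shown in Figure 1:

Here layers is the number of LSTM layers, batch_size is the batch size, length is the sequence length, and input_dim is the dimension of each input vector.

Each LSTMCell evaluates the following expressions: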
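The equation image from the original post is not available here. As a reconstruction from the LSTMCell code in the next section (these are the standard LSTM equations, with subscripts chosen to mirror that code's parameter names, so the notation is an assumption), each cell presumably computes:

```latex
\begin{aligned}
i_t &= \sigma(W_{ix} x_t + W_{ih} h_{t-1} + b_i) \\
f_t &= \sigma(W_{fx} x_t + W_{fh} h_{t-1} + b_f) \\
g_t &= \tanh(W_{cx} x_t + W_{ch} h_{t-1} + b_c) \\
o_t &= \sigma(W_{ox} x_t + W_{oh} h_{t-1} + b_o) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

Here $\sigma$ is the sigmoid function and $\odot$ is element-wise multiplication.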

II. LSTMCell

The LSTMCell computation is defined below. Wrapping a tensor in nn.Parameter registers it as a trainable parameter of the model.

    # Uses math, torch, nn, and F from the import list given later in this post.
    class LSTMCell(nn.Module):
        def __init__(self, input_size, hidden_size):
            super(LSTMCell, self).__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            # 8 weight matrices: one for the input (x) and one for the hidden state (h) per gate
            self.weight_cx = nn.Parameter(torch.empty(hidden_size, input_size))
            self.weight_ch = nn.Parameter(torch.empty(hidden_size, hidden_size))
            self.weight_fx = nn.Parameter(torch.empty(hidden_size, input_size))
            self.weight_fh = nn.Parameter(torch.empty(hidden_size, hidden_size))
            self.weight_ix = nn.Parameter(torch.empty(hidden_size, input_size))
            self.weight_ih = nn.Parameter(torch.empty(hidden_size, hidden_size))
            self.weight_ox = nn.Parameter(torch.empty(hidden_size, input_size))
            self.weight_oh = nn.Parameter(torch.empty(hidden_size, hidden_size))
            # 4 bias vectors, one per gate
            self.bias_c = nn.Parameter(torch.empty(hidden_size))
            self.bias_f = nn.Parameter(torch.empty(hidden_size))
            self.bias_i = nn.Parameter(torch.empty(hidden_size))
            self.bias_o = nn.Parameter(torch.empty(hidden_size))
            self.reset_parameters()  # initialize the parameters

        def reset_parameters(self):
            # Uniform initialization in [-1/sqrt(hidden_size), 1/sqrt(hidden_size)]
            stdv = 1.0 / math.sqrt(self.hidden_size)
            for weight in self.parameters():
                nn.init.uniform_(weight, -stdv, stdv)

        def forward(self, input, hc):
            h, c = hc
            # Matrix multiplications: project the input and the previous hidden state
            i = F.linear(input, self.weight_ix, self.bias_i) + F.linear(h, self.weight_ih)
            f = F.linear(input, self.weight_fx, self.bias_f) + F.linear(h, self.weight_fh)
            g = F.linear(input, self.weight_cx, self.bias_c) + F.linear(h, self.weight_ch)
            o = F.linear(input, self.weight_ox, self.bias_o) + F.linear(h, self.weight_oh)
            # Activation functions (torch.sigmoid/torch.tanh replace the deprecated F.sigmoid/F.tanh)
            i = torch.sigmoid(i)
            f = torch.sigmoid(f)
            g = torch.tanh(g)
            o = torch.sigmoid(o)
            c = f * c + i * g
            h = o * torch.tanh(c)
            return h, c
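Since the motivation for a custom cell is swapping the nonlinearities for piecewise linear functions, here is a minimal sketch of how that might look. The class name `PiecewiseLinearLSTMCell`, the fused `x2h`/`h2h` layers, and the choice of `F.hardsigmoid` and `F.hardtanh` as replacements are all assumptions made for illustration, not part of the original program.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class PiecewiseLinearLSTMCell(nn.Module):
    """Hypothetical LSTM cell variant with swappable activation functions."""

    def __init__(self, input_size, hidden_size,
                 gate_fn=F.hardsigmoid, cand_fn=F.hardtanh):
        super().__init__()
        self.hidden_size = hidden_size
        # One fused projection per source; the 4 gates are split apart in forward().
        self.x2h = nn.Linear(input_size, 4 * hidden_size)
        self.h2h = nn.Linear(hidden_size, 4 * hidden_size, bias=False)
        self.gate_fn = gate_fn   # used where the original cell uses sigmoid
        self.cand_fn = cand_fn   # used where the original cell uses tanh

    def forward(self, x, hc):
        h, c = hc
        i, f, g, o = (self.x2h(x) + self.h2h(h)).chunk(4, dim=1)
        i, f, o = self.gate_fn(i), self.gate_fn(f), self.gate_fn(o)
        g = self.cand_fn(g)
        c = f * c + i * g
        h = o * self.cand_fn(c)
        return h, c


cell = PiecewiseLinearLSTMCell(input_size=4, hidden_size=8)
x = torch.rand(2, 4)                 # [batch_size, input_dim]
h0 = torch.zeros(2, 8)
c0 = torch.zeros(2, 8)
h1, c1 = cell(x, (h0, c0))
print(h1.shape, c1.shape)
```

Passing `gate_fn=torch.sigmoid, cand_fn=torch.tanh` would recover the usual activations, which makes it easy to compare the two variants on the same data.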

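III. The Complete LSTM Program

As Figure 1 shows, a complete LSTM consists of many LSTMCell operations. The number of LSTMCells depends on layers, and the number of times each LSTMCell runs depends on length.

1. Multi-layer LSTMCell

Required libraries: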

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torch.autograd import Variable  # deprecated since PyTorch 0.4; a plain tensor behaves the same
    import math

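Suppose the LSTM has more than one layer (layers > 1). The first layer has input dimension input_dim and output dimension hidden_dim; every other layer has both input and output dimension hidden_dim, because the output of a lower layer becomes the input of the layer above it. The layers LSTMCells can therefore be defined as follows: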

    self.lay0 = LSTMCell(input_size, hidden_size)
    if layers > 1:
        for i in range(1, layers):
            lay = LSTMCell(hidden_size, hidden_size)
            setattr(self, 'lay{}'.format(i), lay)

Here setattr() binds lay to the attribute self.lay{i}. With layers = 3, the code above is equivalent to:

    self.lay0 = LSTMCell(input_size, hidden_size)
    self.lay1 = LSTMCell(hidden_size, hidden_size)
    self.lay2 = LSTMCell(hidden_size, hidden_size)
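As an alternative to naming layers through setattr(), the cells could be kept in an `nn.ModuleList`, which registers their parameters in the same way. The sketch below is an illustration only: `StackedCells` is a hypothetical name, and PyTorch's built-in `nn.LSTMCell` stands in for the custom LSTMCell so that the snippet runs on its own.

```python
import torch.nn as nn


class StackedCells(nn.Module):
    """Hypothetical container: one cell per layer, stored in an nn.ModuleList."""

    def __init__(self, input_size, hidden_size, layers=1):
        super().__init__()
        # Layer 0 reads input_size features; every later layer reads hidden_size.
        sizes = [input_size] + [hidden_size] * (layers - 1)
        # Assumption: nn.LSTMCell is used in place of the custom LSTMCell.
        self.cells = nn.ModuleList([nn.LSTMCell(s, hidden_size) for s in sizes])


stack = StackedCells(input_size=4, hidden_size=8, layers=3)
print(len(stack.cells))                      # 3
print([c.input_size for c in stack.cells])   # [4, 8, 8]
```

With this layout, `self.cells[l]` plays the role of `getattr(self, 'lay{}'.format(l))`.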

2. Multi-layer LSTM for inputs of varying length

Each LSTMCell needs (h_{t-1}, c_{t-1}) as its state input. If no initial state is given, we create an all-zero initial state:

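    if initial_states is None:
        # Zero state created on the same device and with the same dtype as the input
        zeros = input.new_zeros(input.size(0), self.hidden_size)
        initial_states = [(zeros, zeros)] * self.layers  # initial state for every layer
    states = list(initial_states)  # copy, so the caller's list is not modified
    outputs = []
    length = input.size(1)
    for t in range(length):
        x = input[:, t, :]
        for l in range(self.layers):
            hc = getattr(self, 'lay{}'.format(l))(x, states[l])
            states[l] = hc  # as in Figure 1, the (h, c) output on the left is the state input on the right
            x = hc[0]       # as in Figure 1, the h output of the lower LSTMCell is the input of the one above
        outputs.append(hc)  # store the output of the top layer at this time step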

Here getattr() returns the attribute named by the given string. If l = 3, the following two lines are equivalent:

    hc = getattr(self, 'lay{}'.format(l))(x, states[l])
    hc = self.lay3(x, states[3])
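The pairing of setattr() and getattr() can be tried without PyTorch at all. The following sketch uses a plain class, `Holder`, and string placeholders instead of LSTMCells; both are made up for illustration.

```python
class Holder:
    """Plain object used only to illustrate dynamic attribute names."""


holder = Holder()
for i in range(3):
    # Same effect as writing holder.lay0 = ..., holder.lay1 = ..., holder.lay2 = ...
    setattr(holder, 'lay{}'.format(i), 'cell-{}'.format(i))

# Look an attribute up by a name that is built at run time.
looked_up = getattr(holder, 'lay{}'.format(2))
print(looked_up == holder.lay2)
```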

3. Complete program

    class LSTM(nn.Module):
        def __init__(self, input_size, hidden_size, layers=1, sequences=True):
            super(LSTM, self).__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.layers = layers
            self.sequences = sequences
            self.lay0 = LSTMCell(input_size, hidden_size)
            if layers > 1:
                for i in range(1, layers):
                    lay = LSTMCell(hidden_size, hidden_size)
                    setattr(self, 'lay{}'.format(i), lay)

        def forward(self, input, initial_states=None):
            if initial_states is None:
                # Zero state created on the same device and with the same dtype as the input
                zeros = input.new_zeros(input.size(0), self.hidden_size)
                initial_states = [(zeros, zeros)] * self.layers
            states = list(initial_states)  # copy, so the caller's list is not modified
            outputs = []
            length = input.size(1)
            for t in range(length):
                x = input[:, t, :]
                for l in range(self.layers):
                    hc = getattr(self, 'lay{}'.format(l))(x, states[l])
                    states[l] = hc
                    x = hc[0]
                outputs.append(hc)
            if self.sequences:  # return the outputs of all top-layer LSTMCells in Figure 1, left to right
                hs, cs = zip(*outputs)
                h = torch.stack(hs).transpose(0, 1)  # [batch_size, length, hidden_size]
                c = torch.stack(cs).transpose(0, 1)
                output = (h, c)
            else:
                output = outputs[-1]  # return only the (h, c) of the top-right LSTMCell in Figure 1
            return output
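To see what the sequences flag changes, it helps to check output shapes on a small random batch. The sketch below is a compact stand-in rather than the class above: `TinyStackedLSTM` is a hypothetical name, and the built-in `nn.LSTMCell` replaces the custom LSTMCell so the snippet is self-contained. The loop structure follows the same time-step and layer order.

```python
import torch
import torch.nn as nn


class TinyStackedLSTM(nn.Module):
    """Compact stand-in for the LSTM class (hypothetical name)."""

    def __init__(self, input_size, hidden_size, layers=1, sequences=True):
        super().__init__()
        self.hidden_size = hidden_size
        self.sequences = sequences
        sizes = [input_size] + [hidden_size] * (layers - 1)
        # Assumption: nn.LSTMCell replaces the custom LSTMCell to keep this short.
        self.cells = nn.ModuleList([nn.LSTMCell(s, hidden_size) for s in sizes])

    def forward(self, input):
        zeros = input.new_zeros(input.size(0), self.hidden_size)
        states = [(zeros, zeros) for _ in self.cells]
        outputs = []
        for t in range(input.size(1)):          # walk over the time steps
            x = input[:, t, :]
            for l, cell in enumerate(self.cells):
                states[l] = cell(x, states[l])  # (h, c) carried to the next time step
                x = states[l][0]                # h feeds the layer above
            outputs.append(states[-1])          # top-layer (h, c) at this time step
        if self.sequences:
            hs, cs = zip(*outputs)
            return torch.stack(hs, dim=1), torch.stack(cs, dim=1)
        return outputs[-1]


batch = torch.rand(2, 5, 4)   # [batch_size, length, input_dim]
h_all, c_all = TinyStackedLSTM(4, 8, layers=2, sequences=True)(batch)
h_last, c_last = TinyStackedLSTM(4, 8, layers=2, sequences=False)(batch)
print(h_all.shape)   # torch.Size([2, 5, 8])
print(h_last.shape)  # torch.Size([2, 8])
```

With sequences=True the result keeps one hidden state per time step; with sequences=False only the final step remains, which is the form a classifier head would typically consume.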

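IV. Reverse LSTM

Define two LSTMs, then reverse the input input1 along the length dimension and use the result as input2. That is all it takes.

The code is as follows: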

    import torch

    input1 = torch.rand(2, 3, 4)        # [batch_size, length, input_dim]
    inp = input1.unbind(1)[::-1]        # split along the length dimension (dim 1) and reverse the order
    input2 = torch.stack(inp, dim=1)    # join the reversed time steps; same shape as input1
    # Equivalent one-liner: input2 = torch.flip(input1, dims=[1])

The input is now reversed along the length dimension.
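A quick way to confirm the reversal is to compare the unbind-and-reverse idea with `torch.flip`, which reverses a tensor along the given dimensions. This is a small check written for illustration; the variable names `steps`, `manual`, and `flipped` are not from the original code.

```python
import torch

input1 = torch.rand(2, 3, 4)                 # [batch_size, length, input_dim]

# Split along dim 1 (length), reverse the tuple of time steps, and reassemble.
steps = input1.unbind(1)[::-1]
manual = torch.stack(steps, dim=1)

# Built-in alternative: flip the length dimension directly.
flipped = torch.flip(input1, dims=[1])

print(torch.equal(manual, flipped))          # True
# The first time step of the reversed input is the last step of the original.
print(torch.equal(flipped[:, 0, :], input1[:, -1, :]))
```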

V. Experiment

Build a single-layer bidirectional LSTM on IMDB, followed by an FC layer:

    self.rnn1 = LSTM(embedding_dim, hidden_dim, layers=n_layers, sequences=False)
    self.rnn2 = LSTM(embedding_dim, hidden_dim, layers=n_layers, sequences=False)
    self.fc = nn.Linear(hidden_dim * 2, output_dim)
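The post does not show the forward pass that combines the two directions, so the following is only a guess at how the pieces might fit together. `BiLSTMClassifier`, `vocab_size`, and the embedding layer are hypothetical, and the built-in `nn.LSTM` stands in for the custom LSTM class so the sketch runs by itself.

```python
import torch
import torch.nn as nn


class BiLSTMClassifier(nn.Module):
    """Hypothetical classifier: forward pass + reversed pass + FC layer."""

    def __init__(self, vocab_size, embedding_dim, hidden_dim, output_dim):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, embedding_dim)
        # Assumption: nn.LSTM is used in place of the custom LSTM class.
        self.rnn1 = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)
        self.rnn2 = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)
        self.fc = nn.Linear(hidden_dim * 2, output_dim)

    def forward(self, tokens):
        emb = self.embedding(tokens)                  # [batch, length, embedding_dim]
        _, (h_fwd, _) = self.rnn1(emb)                # reads left to right
        _, (h_bwd, _) = self.rnn2(torch.flip(emb, dims=[1]))  # reads right to left
        # h_fwd and h_bwd have shape [num_layers, batch, hidden_dim]; use the top layer.
        features = torch.cat((h_fwd[-1], h_bwd[-1]), dim=1)
        return self.fc(features)


model = BiLSTMClassifier(vocab_size=100, embedding_dim=16, hidden_dim=32, output_dim=1)
tokens = torch.randint(0, 100, (4, 10))               # 4 reviews, 10 token ids each
logits = model(tokens)
print(logits.shape)
```

Concatenating the two final hidden states is what makes the FC layer's input size hidden_dim * 2.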

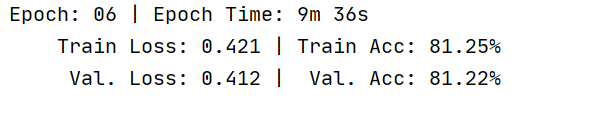

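The results of the run are shown in the figure:

Because time was limited, training ran for only 6 iterations. The experiment shows that the custom RNN program can converge.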