Hive自定义UDTF函数

UDTF(User-Defined Table-Generating Functions)一进多出,如lateral view explore()

实现方法:

1)继承org.apache.hadoop.hive.ql.udf.generic.GenericUDTF

2)重写initialize、process、close方法

UDTF首先会调用initialize方法,此方法返回UDTF的返回行的信息(返回个数,类型,名称)。初始化完成后,会调用process方法,对传入的参数进行处理,可以通过forword()方法把结果返回。最后close()方法调用,对需要清理的方法进行清理

应用案例

需求:使用自定义UDTF函数获取两个时间之间的时间列表

package com.sjck.hive.udf;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Calendar;

import java.util.Date;

import org.apache.hadoop.hive.ql.exec.UDFArgumentException;

import org.apache.hadoop.hive.ql.exec.UDFArgumentLengthException;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;

import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;

import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;

public class DateMap extends GenericUDTF {

@Override

public void close() throws HiveException {

}

@Override

public StructObjectInspector initialize(ObjectInspector[] args) throws UDFArgumentException {

if (args.length != 2) {

throw new UDFArgumentLengthException("DateMap takes only two argument");

}

ArrayList<String> fieldNames = new ArrayList<String>();

ArrayList<ObjectInspector> fieldOIs = new ArrayList<ObjectInspector>();

fieldNames.add("begin_date"); //指定输出参数名称

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

fieldNames.add("end_date");

fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);

}

@Override

public void process(Object[] args) throws HiveException {

try {

String begin = String.valueOf(args[0]);

String end = String.valueOf(args[1]);

SimpleDateFormat timeFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");

int days = (int) (dateFormat.parse(end).getTime() - dateFormat.parse(begin).getTime())

/ (60 * 60 * 24 * 1000);

Date startDate = timeFormat.parse(begin);

Date endDate = timeFormat.parse(end);

Calendar ecalendar = Calendar.getInstance();

ecalendar.setTime(startDate);

Date d1 = startDate;

Date d2 = null;

if(days==0){

d2=endDate;

}else{

ecalendar.add(Calendar.DATE, 1);

ecalendar.set(Calendar.HOUR_OF_DAY, 0);

ecalendar.set(Calendar.MINUTE, 0);

ecalendar.set(Calendar.SECOND, 0);

d2=ecalendar.getTime();

}

String datas[][] = new String[days + 1][2];

datas[0][0] = timeFormat.format(d1);

datas[0][1] = timeFormat.format(d2);

for (int i = 1; i < days + 1; i++) {

d1 = d2;

ecalendar.add(Calendar.DATE, 1);

d2 = ecalendar.getTime();

if (d2.after(endDate)) {

d2 = endDate;

}

datas[i][0] = timeFormat.format(d1);

datas[i][1] = timeFormat.format(d2);

}

for (int i = 0; i < datas.length; i++) {

String[] s = new String[2];

s[0] = datas[i][0];

s[1] = datas[i][1];

forward(s);

}

} catch (ParseException e) {

e.printStackTrace();

}

}

}

将代码打成jar包上传到服务器上,我这边是上传到hdfs上的

hadoop fs -put hive-udf.jar /user/hive/udf

声明函数

create function datemap AS 'com.sjck.hive.udf.DateMap' using jar 'hdfs://nameservice1/user/hive/udf/hive-udf.jar';

使用

方式一:直接放在select后面

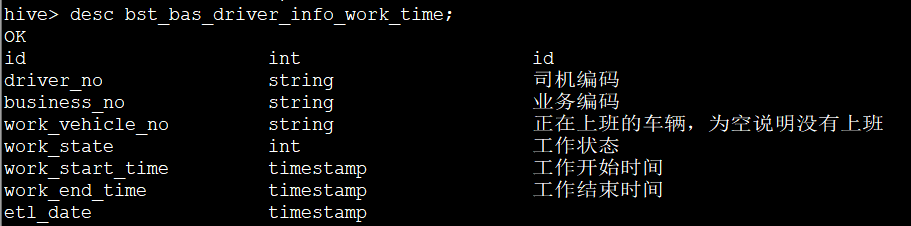

看下使用到表结构

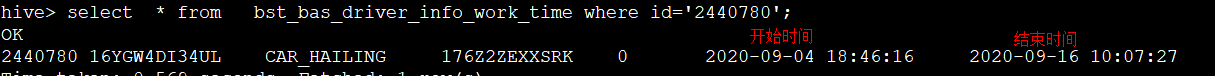

select * from bst_bas_driver_info_work_time where id='2440780' //看看选择的一条数据

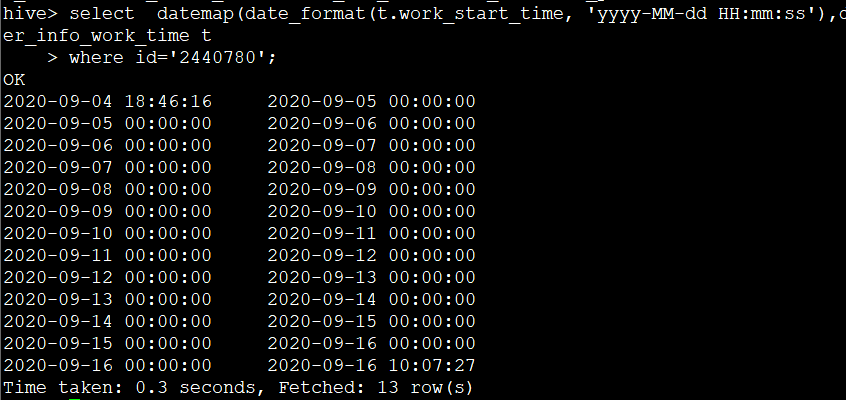

使用datemap函数获取开始时间-结束时间中间的时间(按天展开)

select datemap(date_format(t.work_start_time, 'yyyy-MM-dd HH:mm:ss'),date_format(t.work_end_time, 'yyyy-MM-dd HH:mm:ss')) as (begin_date,end_date) from bst_bas_driver_info_work_time t

where id='2440780'

注意:

1)不可以添加其他字段使用:

select datemap(date_format(t.work_start_time, 'yyyy-MM-dd HH:mm:ss'),date_format(t.work_end_time, 'yyyy-MM-dd HH:mm:ss')) as (begin_date,end_date) from bst_bas_driver_info_work_time t where id='2440780'

2)不可以嵌套调用:

select datemap(datemap(xx,xxx),datemap(xx,xxx)) from bst_bas_driver_info_work_time

3)不可以和group by/cluster by/distribute by/sort by一起使用:

select datemap(xx,xxx)as (begin_date,end_date) from bst_bas_driver_info_work_time group by begin_date, end_date

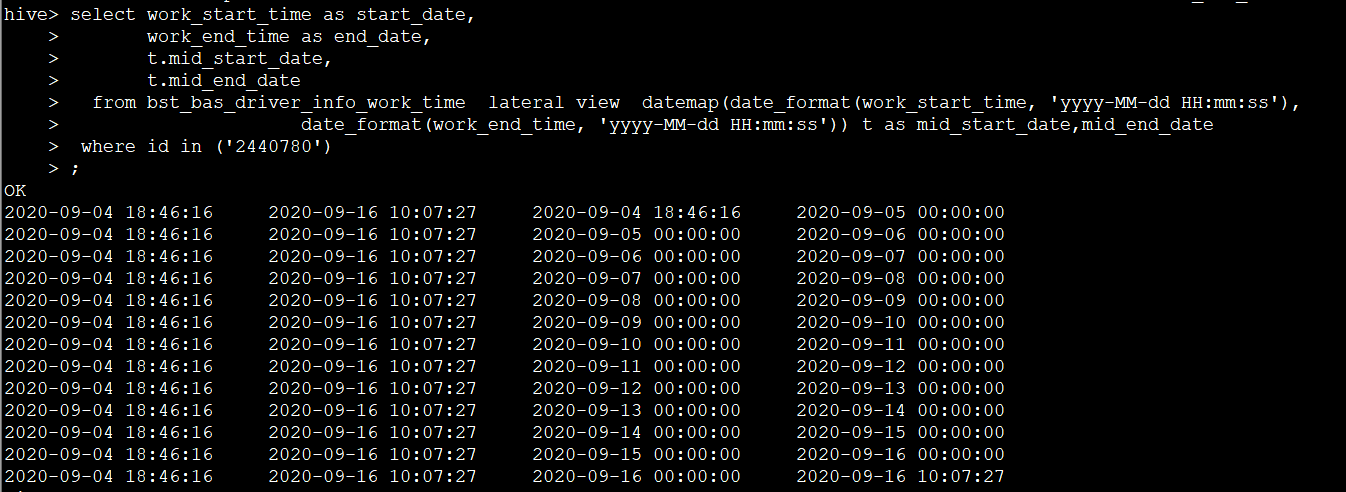

方式二:和lateral view一起使用

select work_start_time as start_date,

work_end_time as end_date,

t.mid_start_date,

t.mid_end_date

from bst_bas_driver_info_work_time lateral view datemap(date_format(work_start_time, 'yyyy-MM-dd HH:mm:ss'), date_format(work_end_time, 'yyyy-MM-dd HH:mm:ss')) t as mid_start_date,

mid_end_date

where id in ('2440780')

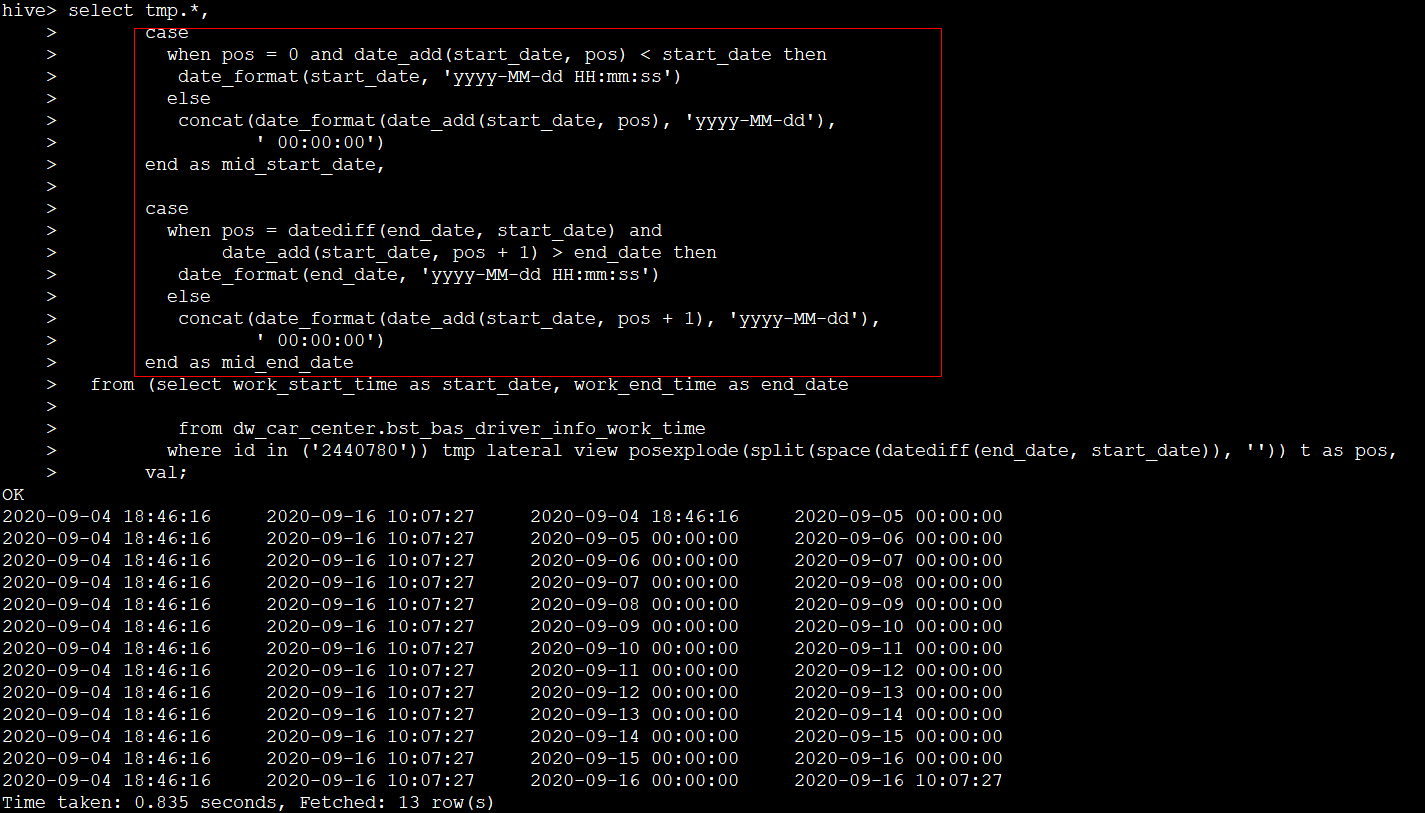

其实这个功能用posexplode也可以完成,看下代码:

效果一样,只不过中间逻辑自己还得单独做处理下,代码参考我的另一篇https://www.cnblogs.com/kopao/p/13750818.html

这里重点在于UDTF自定义函数的实现