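Offline Installation of Kubernetes 1.18.3: A 10,000-Word, Enterprise-Grade Deployment Tutorial!

Offline Installation of Kubernetes 1.18.3

Contents

I. Introduction to Kubernetes

1. Kubernetes architecture diagram

2. Common Kubernetes components

II. Installing Kubernetes from Binaries

1. Create the CA certificate and key

2. Install ETCD

- 1) Create the ETCD certificate and key

- 2) Generate the certificate and key

- 3) Create the startup script

- 4) Start ETCD

3. Install the Flannel network plugin

- 1) Create the Flannel certificate and key

- 2) Generate the certificate and key

- 3) Write the startup script

- 4) Start and verify

4. Install Docker

- 1) Create the startup script

- 2) Start Docker

5. Install Kubectl

- 1) Create the Admin certificate and key

- 2) Generate the certificate and key

- 3) Create the Kubeconfig file

- 4) Create the Kubectl configuration file and set up command completion

III. Installing the Kubernetes Components

1. Install Kube-APIServer

- 1) Create the Kubernetes certificate and key

- 2) Generate the certificate and key

- 3) Configure Kube-APIServer encryption and auditing

- 4) Configure Metrics-Server

- 5) Create the startup script

- 6) Start Kube-APIServer and verify

2. Install Controller Manager

- 1) Create the Controller Manager certificate and key

- 2) Generate the certificate and key

- 3) Create the Kubeconfig file

- 4) Create the startup script

- 5) Start and verify

3. Install Kube-Scheduler

- 1) Create the Kube-Scheduler certificate and key

- 2) Generate the certificate and key

- 3) Create the Kubeconfig file

- 4) Create the Kube-Scheduler configuration file

- 5) Create the startup script

- 6) Start and verify

4. Install Kubelet

- 1) Create the Kubelet startup script

- 2) Start and verify

- 3) Approve CSR requests

- 4) Manually approve server certificate CSRs

- 5) Configure the Kubelet API

5. Install Kube-Proxy

- 1) Create the Kube-Proxy certificate and key

- 2) Generate the certificate and key

- 3) Create the Kubeconfig file

- 4) Create the Kube-Proxy configuration file

- 5) Create the startup script

- 6) Start and verify

6. Install the CoreDNS add-on

- 1) Modify the CoreDNS configuration

- 2) Create and start CoreDNS

- 3) Verify

7. Install the Dashboard

- 1) Create the certificates

- 2) Modify the Dashboard configuration

- 3) Verify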

一、Kubernetes 简介

Kubernetes,也称为 K8s,是由 Google 公司开源的容器集群管理系统,在 Docker 技术的基础上,为容器化的应用提供部署运行、资源调度、服务发现和动态伸缩等一系列完整功能,提高了大规模容器集群管理的便捷性。Kubernetes 官方

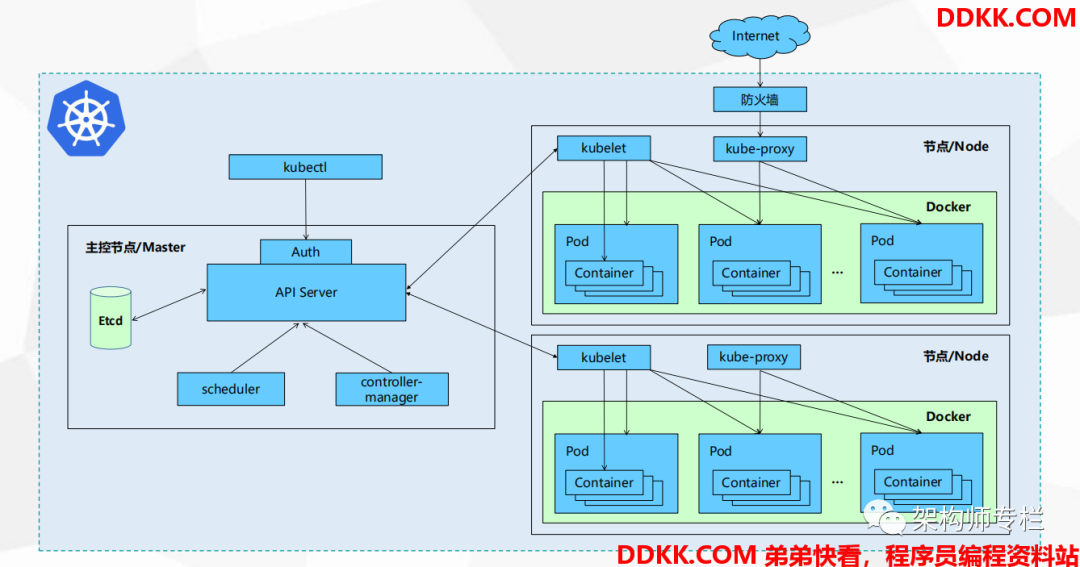

1.Kubernetes 架构设计图

Kubernetes 是由一个 Master 和多个 Node 组成,Master 通过 API 提供服务,并接收 Kubectl 发送过来的请求来调度管理整个集群。

Kubectl 是 K8s 平台的管理命令。

2.Kubernetes 常见组件介绍

- APIServer: 所有服务的统一访问入口,并提供认证、授权、访问控制、API 注册和发现等机制;

- Controller Manager(控制器): 主要就是用来维持 Pod 的一个副本数,比如故障检测、自动扩展、滚动更新等;

- Scheduler(调度器): 主要就是用来分配任务到合适的节点上(资源调度)

- ETCD: 键值对数据库,存放了 K8s 集群中所有的重要信息(持久化)

- Kubelet: 直接和容器引擎交互,用来维护容器的一个生命周期;同时也负责 Volume(CVI)和网络(CNI)的管理;

- Kube-Porxy: 用于将规则写入至 iptables 或 IPVS 来实现服务的映射访问;

其它组件:

- CoreDNS:主要就是用来给 K8s 的 Service 提供一个域名和 IP 的对应解析关系。

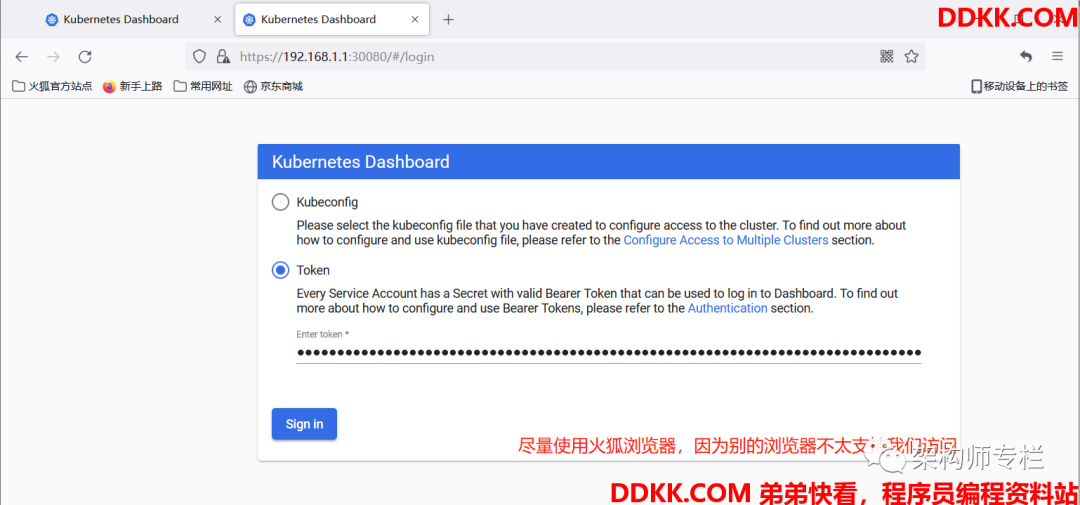

- Dashboard:主要就是用来给 K8s 提供一个 B/S 结构的访问体系(即,我们可以通过 Web 界面来对 K8s 进行管理)

- Ingress Controller:主要就是用来实现 HTTP 代理(七层),官方的 Service 仅支持 TCP\UDP 代理(四层)

- Prometheus:主要就是用来给 K8s 提供一个监控能力,使我们能够更加清晰的看到 K8s 相关组件及 Pod 的使用情况。

- ELK:主要就是用来给 K8s 提供一个日志分析平台。

Kubernetes 工作原理:

- 用户可以通过 Kubectl 命令来提交需要运行的 Docker Container 到 K8s 的 APIServer 组件中;

- 接着 APIServer 接收到用户提交的请求后,会将请求存储到 ETCD 这个键值对存储中;

- 然后由 Controller Manager 组件来创建出用户定义的控制器类型(Pod ReplicaSet Deployment DaemonSet 等)

- 然后 Scheduler 组件会对 ETCD 进行扫描,并将用户需要运行的 Docker Container 分配到合适的主机上;

- 最后由 Kubelet 组件来和 Docker 容器进行交互,创建、删除、停止容器等一系列操作。

kube-proxy 主要就是为 Service 提供服务的,来实现内部从 Pod 到 Service 和外部 NodePort 到 Service 的访问。

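II. Installing Kubernetes from Binaries

The installation below uses plain binaries and does not make Kube-APIServer highly available. The main goal of this setup is to learn K8s and to get things done with it, so high availability is not a concern here.

Making Kubernetes highly available is not difficult. Cloud-hosted K8s clusters usually put an SLB (Server Load Balancer) in front of two servers; on-premises clusters work the same way, for example with Keepalived plus Nginx in front of the APIServers.

Preparation:

| Hostname | OS | IP address | Components |
|---|---|---|---|
| k8s-master01 | CentOS 7.4 | 192.168.1.1 | All components (to make full use of resources) |
| k8s-master02 | CentOS 7.4 | 192.168.1.2 | All components |
| k8s-node01 | CentOS 7.4 | 192.168.1.3 | flanneld, docker, kubelet, kube-proxy |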

1) Set the hostname on each node (run the command on every host with that host's own name) and configure the Hosts file

[root@localhost ~]# hostnamectl set-hostname k8s-master01

[root@localhost ~]# bash

[root@k8s-master01 ~]# cat <<END >> /etc/hosts

192.168.1.1 k8s-master01

192.168.1.2 k8s-master02

192.168.1.3 k8s-node01

END

2) On k8s-master01, create an SSH key pair and copy the public key to all hosts

[root@k8s-master01 ~]# ssh-keygen -t rsa # press Enter three times to accept the defaults

[root@k8s-master01 ~]# ssh-copy-id root@192.168.1.1

[root@k8s-master01 ~]# ssh-copy-id root@192.168.1.2

[root@k8s-master01 ~]# ssh-copy-id root@192.168.1.3

3) Write the K8s environment initialization script

[root@k8s-master01 ~]# vim k8s-init.sh

#!/bin/bash

#****************************************************************#

# ScriptName: k8s-init.sh

# Initialize the machine. This needs to be executed on every machine.

# Sync the clock, switch to the Aliyun yum mirror and create the k8s directories.
yum -y install wget ntpdate && ntpdate ntp1.aliyun.com
wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
yum -y install epel-release
mkdir -p /opt/k8s/bin/
mkdir -p /data/k8s/docker
mkdir -p /data/k8s/k8s

# Disable SELinux (now and after reboot).
setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable the swap.
swapoff -a
sed -i '/swap/s/^/#/' /etc/fstab

# Turn off and disable the firewalld.
systemctl stop firewalld
systemctl disable firewalld

# Add ipvs modules. br_netfilter must be loaded before the net.bridge.* parameters below can be applied.
cat > /etc/sysconfig/modules/ipvs.modules << EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- br_netfilter
modprobe -- nf_conntrack
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules
source /etc/sysconfig/modules/ipvs.modules

# Modify related kernel parameters.
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.tcp_tw_recycle = 0
vm.swappiness = 0
vm.overcommit_memory = 1
vm.panic_on_oom = 0
net.ipv6.conf.all.disable_ipv6 = 1
EOF
sysctl -p /etc/sysctl.d/k8s.conf >& /dev/null

# Install rpm
yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget gcc gcc-c++ make libnl libnl-devel libnfnetlink-devel openssl-devel vim bash-completion

# ADD k8s bin to PATH
echo 'export PATH=/opt/k8s/bin:$PATH' >> /root/.bashrc && source /root/.bashrc

The script has to be executed on every machine, so copy it to each host and run it there:

[root@k8s-master01 ~]# for ip in 192.168.1.1 192.168.1.2 192.168.1.3
do
echo ">>> ${ip}"
scp k8s-init.sh root@${ip}:/root/
ssh root@${ip} "bash /root/k8s-init.sh"
done

4) Configure the environment variables

[root@k8s-master01 ~]# vim environment.sh

#!/bin/bash
# Encryption key for EncryptionConfig (a new random key is generated every time this file is sourced)
export ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)
# Array of cluster Master IPs
export MASTER_IPS=(192.168.1.1 192.168.1.2)
# Hostnames matching the Master IPs
export MASTER_NAMES=(k8s-master01 k8s-master02)
# Array of cluster Node IPs
export NODE_IPS=(192.168.1.3)
# Hostnames matching the Node IPs
export NODE_NAMES=(k8s-node01)
# IPs of all machines in the cluster
export ALL_IPS=(192.168.1.1 192.168.1.2 192.168.1.3)
# Hostnames matching all of the IPs above
export ALL_NAMES=(k8s-master01 k8s-master02 k8s-node01)
# Etcd cluster client endpoints
export ETCD_ENDPOINTS="https://192.168.1.1:2379,https://192.168.1.2:2379"
# IPs and ports used for communication between Etcd members
export ETCD_NODES="k8s-master01=https://192.168.1.1:2380,k8s-master02=https://192.168.1.2:2380"
# Kube-apiserver IP and port
export KUBE_APISERVER="https://192.168.1.1:6443"
# Name of the network interface used for traffic between nodes
export IFACE="ens32"
# Etcd data directory
export ETCD_DATA_DIR="/data/k8s/etcd/data"
# Etcd WAL directory; an SSD partition is recommended, or at least a different partition from ETCD_DATA_DIR
export ETCD_WAL_DIR="/data/k8s/etcd/wal"
# Data directory for the K8s components
export K8S_DIR="/data/k8s/k8s"
# Docker data directory
export DOCKER_DIR="/data/k8s/docker"
## The parameters below usually do not need to be changed
# Token used for TLS Bootstrapping; it can be generated with: head -c 16 /dev/urandom | od -An -t x | tr -d ' '
BOOTSTRAP_TOKEN="41f7e4ba8b7be874fcff18bf5cf41a7c"
# Preferably choose currently unused address ranges for the Service CIDR and the Pod CIDR
# Service CIDR: not routable before deployment; routable inside the cluster afterwards (ensured by kube-proxy)
SERVICE_CIDR="10.20.0.0/16"
# Pod CIDR: a /16 is recommended; not routable before deployment; routable inside the cluster afterwards (ensured by flanneld)
CLUSTER_CIDR="10.10.0.0/16"
# Service port range (NodePort range); the Kubernetes default is 30000-32767, and a range as wide as 1-65535 lets NodePorts collide with ports used by host services
export NODE_PORT_RANGE="1-65535"
# Key prefix of the Flanneld network configuration in etcd
export FLANNEL_ETCD_PREFIX="/kubernetes/network"
# IP of the kubernetes Service (usually the first IP in SERVICE_CIDR)
export CLUSTER_KUBERNETES_SVC_IP="10.20.0.1"
# Cluster DNS Service IP (pre-allocated from SERVICE_CIDR)
export CLUSTER_DNS_SVC_IP="10.20.0.254"
# Cluster DNS domain (without a trailing dot)
export CLUSTER_DNS_DOMAIN="cluster.local"
# Add the binary directory /opt/k8s/bin to PATH
export PATH=/opt/k8s/bin:$PATH

- Change the IP addresses, network interface name, and similar values above to match your own environment.

[root@k8s-master01 ~]# chmod +x environment.sh && source environment.sh
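Before moving on, it can help to sanity-check the values in environment.sh. The script below is a minimal sketch, not part of the original tutorial: the helper names `ip2int`, `int2ip`, `prefix_mask`, `in_cidr` and `first_ip` are hypothetical. It checks that CLUSTER_KUBERNETES_SVC_IP is the first address of SERVICE_CIDR, that CLUSTER_DNS_SVC_IP lies inside it, that the Pod and Service CIDRs do not overlap, and that BOOTSTRAP_TOKEN is 32 hex characters. It falls back to the values shown above when a variable is unset.

```bash
#!/bin/bash
# Hypothetical sanity checks for environment.sh (pure bash, no external tools).

# Dotted IPv4 address -> 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# 32-bit integer -> dotted IPv4 address.
int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Netmask (as an integer) for a prefix length such as 16.
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}

# Succeeds if IP $1 lies inside CIDR $2 (e.g. 10.20.0.0/16).
in_cidr() {
  local mask
  mask=$(prefix_mask "${2#*/}")
  (( ($(ip2int "$1") & mask) == ($(ip2int "${2%/*}") & mask) ))
}

# First usable address of CIDR $1 (network address + 1).
first_ip() {
  local mask
  mask=$(prefix_mask "${1#*/}")
  int2ip $(( ($(ip2int "${1%/*}") & mask) + 1 ))
}

svc_cidr=${SERVICE_CIDR:-10.20.0.0/16}
pod_cidr=${CLUSTER_CIDR:-10.10.0.0/16}
svc_ip=${CLUSTER_KUBERNETES_SVC_IP:-10.20.0.1}
dns_ip=${CLUSTER_DNS_SVC_IP:-10.20.0.254}
token=${BOOTSTRAP_TOKEN:-41f7e4ba8b7be874fcff18bf5cf41a7c}

[[ $(first_ip "$svc_cidr") == "$svc_ip" ]] || echo "WARN: $svc_ip is not the first IP of $svc_cidr"
in_cidr "$dns_ip" "$svc_cidr" || echo "WARN: $dns_ip is outside $svc_cidr"
if in_cidr "${pod_cidr%/*}" "$svc_cidr" || in_cidr "${svc_cidr%/*}" "$pod_cidr"; then
  echo "WARN: $pod_cidr overlaps $svc_cidr"
fi
[[ $token =~ ^[0-9a-f]{32}$ ]] || echo "WARN: BOOTSTRAP_TOKEN is not 32 hex characters"
echo "environment checks done"
```

Source this check in the same shell in which environment.sh was sourced: SERVICE_CIDR, CLUSTER_CIDR and BOOTSTRAP_TOKEN are not exported, so a child `bash` process would only see the fallback values. If only the last line is printed, the values are consistent.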

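The following steps only need to be performed on k8s-master01 (the for loops below copy the results to the other hosts).

1. Create the CA certificate and key

Because the Kubernetes components use TLS certificates to encrypt their communication and to authenticate and authorize one another, the relevant TLS certificates must be generated before installation. Kubernetes certificates can be generated with openssl, cfssl, or easyrsa; this tutorial uses cfssl.

1) Install the cfssl toolkit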

[root@k8s-master01 ~]# mkdir -p /opt/k8s/cert

[root@k8s-master01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -o /opt/k8s/bin/cfssl

[root@k8s-master01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -o /opt/k8s/bin/cfssljson

[root@k8s-master01 ~]# curl -L https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -o /opt/k8s/bin/cfssl-certinfo

[root@k8s-master01 ~]# chmod +x /opt/k8s/bin/*

2) Create the root certificate configuration file

[root@k8s-master01 ~]# mkdir -p /opt/k8s/work

[root@k8s-master01 ~]# cd /opt/k8s/work/

[root@k8s-master01 work]# cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"expiry": "876000h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

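- signing: the certificate can be used to sign other certificates;

- server auth: a client can use this CA to verify the certificate presented by a server;

- client auth: a server can use this CA to verify the certificate presented by a client;

- "expiry": "876000h": the certificate is valid for 876,000 hours, roughly 100 years.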
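The `expiry` values are plain hour counts. A tiny helper makes the conversion explicit; `expiry_years` is a hypothetical name used only for illustration. It counts 365-day years, so 876000h is in fact slightly less than 100 calendar years once leap days are included.

```bash
#!/bin/bash
# Convert a cfssl-style expiry such as "876000h" into whole 365-day years.
expiry_years() {
  local hours=${1%h}            # strip the trailing "h"
  echo $(( hours / 24 / 365 ))
}

expiry_years 876000h   # prints 100
expiry_years 8760h     # prints 1

# Once ca.pem exists, its real end date can be read with:
#   openssl x509 -noout -enddate -in /opt/k8s/work/ca.pem
```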

3) Create the root certificate signing request (CSR) file

[root@k8s-master01 work]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

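- CN (Common Name): kube-apiserver extracts this field from a client certificate and uses it as the request's user name; browsers use it to check whether a website is legitimate.

- C: country; ST: state or province; L: locality or city; O: organization or company name (kube-apiserver uses it as the user's group); OU: organizational unit or department.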
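To see how these fields end up in a certificate, the sketch below creates a throwaway self-signed certificate with openssl (used here only for illustration; the tutorial itself generates the real certificates with cfssl) carrying the same subject as admin-csr.json, and prints the subject back. `demo_cert` and `print_subject` are hypothetical helper names.

```bash
#!/bin/bash
# Create a short-lived self-signed demo certificate in directory $1.
demo_cert() {
  openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
    -subj "/C=CN/ST=Shanghai/L=Shanghai/O=system:masters/OU=System/CN=admin" \
    -keyout "$1/demo-key.pem" -out "$1/demo.pem" 2>/dev/null
}

# Print a certificate's subject in RFC 2253 form (CN=...,OU=...,O=...).
print_subject() {
  openssl x509 -noout -subject -nameopt RFC2253 -in "$1"
}

demo_dir=$(mktemp -d)
demo_cert "$demo_dir"
print_subject "$demo_dir/demo.pem"
# For kube-apiserver, CN=admin is the user name and O=system:masters the group.
```

The same `print_subject` call works on the real files once they have been generated, for example `print_subject /opt/k8s/work/admin.pem`.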

4) Generate the CA key ca-key.pem and the certificate ca.pem

[root@k8s-master01 work]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

- After the certificate is generated, copy the files to all hosts, because the Kubernetes cluster uses mutual (two-way) TLS authentication.

5) Use a for loop over the array to copy the files to all hosts

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
ssh root@${all_ip} "mkdir -p /etc/kubernetes/cert"
scp ca*.pem ca-config.json root@${all_ip}:/etc/kubernetes/cert
done
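The same `for ... in ${ALL_IPS[@]}` loop appears in almost every step. As a sketch, the remote-command part of the pattern can be wrapped in a function with a dry-run mode, which is handy for checking the IP arrays before touching the machines. The function `run_on_hosts` and the `DRY_RUN` variable are hypothetical, not part of any tool.

```bash
#!/bin/bash
# Run one shell command on each host via SSH, printing a ">>> host" header
# like the loops in this tutorial. With DRY_RUN=1 the ssh commands are only
# printed, not executed. Stops at the first host where the command fails.
run_on_hosts() {
  local cmd=$1 host
  shift
  for host in "$@"; do
    echo ">>> ${host}"
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      echo "ssh root@${host} \"${cmd}\""
    else
      ssh "root@${host}" "${cmd}" || return 1
    fi
  done
}

# Preview what would run on the three machines:
DRY_RUN=1 run_on_hosts "mkdir -p /etc/kubernetes/cert" 192.168.1.1 192.168.1.2 192.168.1.3
```

Once the preview looks right, drop `DRY_RUN=1` and pass the array directly, e.g. `run_on_hosts "mkdir -p /etc/kubernetes/cert" "${ALL_IPS[@]}"`. File copies (`scp`) are left in plain loops, as in the tutorial.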

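2. Install ETCD

ETCD is a Raft-based distributed key-value store developed by CoreOS, commonly used for service discovery, shared configuration, and concurrency control (such as leader election and distributed locks). Kubernetes uses ETCD to store all of its runtime data.

Download ETCD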

[root@k8s-master01 work]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.22/etcd-v3.3.22-linux-amd64.tar.gz

[root@k8s-master01 work]# tar -zxf etcd-v3.3.22-linux-amd64.tar.gz

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp etcd-v3.3.22-linux-amd64/etcd* root@${master_ip}:/opt/k8s/bin
ssh root@${master_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the ETCD certificate and key

[root@k8s-master01 work]# cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.1.1",

"192.168.1.2"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

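- hosts: the IP addresses or domain names for which the ETCD certificate is valid (every ETCD member address, plus 127.0.0.1).

2) Generate the certificate and key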

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
ssh root@${master_ip} "mkdir -p /etc/etcd/cert"
scp etcd*.pem root@${master_ip}:/etc/etcd/cert/
done

3) Create the startup script

[root@k8s-master01 work]# cat > etcd.service.template << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target
Documentation=https://github.com/coreos

[Service]
Type=notify
WorkingDirectory=${ETCD_DATA_DIR}
ExecStart=/opt/k8s/bin/etcd \
  --enable-v2=true \
  --data-dir=${ETCD_DATA_DIR} \
  --wal-dir=${ETCD_WAL_DIR} \
  --name=##MASTER_NAME## \
  --cert-file=/etc/etcd/cert/etcd.pem \
  --key-file=/etc/etcd/cert/etcd-key.pem \
  --trusted-ca-file=/etc/kubernetes/cert/ca.pem \
  --peer-cert-file=/etc/etcd/cert/etcd.pem \
  --peer-key-file=/etc/etcd/cert/etcd-key.pem \
  --peer-trusted-ca-file=/etc/kubernetes/cert/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth \
  --listen-peer-urls=https://##MASTER_IP##:2380 \
  --initial-advertise-peer-urls=https://##MASTER_IP##:2380 \
  --listen-client-urls=https://##MASTER_IP##:2379,http://127.0.0.1:2379 \
  --advertise-client-urls=https://##MASTER_IP##:2379 \
  --initial-cluster-token=etcd-cluster-0 \
  --initial-cluster=${ETCD_NODES} \
  --initial-cluster-state=new \
  --auto-compaction-mode=periodic \
  --auto-compaction-retention=1 \
  --max-request-bytes=33554432 \
  --quota-backend-bytes=6442450944 \
  --heartbeat-interval=250 \
  --election-timeout=2000
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

[root@k8s-master01 work]# for (( A=0; A < 2; A++ ))
do
sed -e "s/##MASTER_NAME##/${MASTER_NAMES[A]}/" -e "s/##MASTER_IP##/${MASTER_IPS[A]}/" etcd.service.template > etcd-${MASTER_IPS[A]}.service
done
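What the sed loop does is plain placeholder substitution. The sketch below wraps it in a function (`render_template` is a hypothetical name) and renders a three-line excerpt of the etcd template, so the effect of `##MASTER_NAME##` and `##MASTER_IP##` can be checked before anything is copied to the masters. The `g` flag is added here so that a line containing a placeholder twice would also be fully rendered.

```bash
#!/bin/bash
# Replace the ##MASTER_NAME## and ##MASTER_IP## placeholders in a template file.
render_template() {
  local template=$1 name=$2 ip=$3
  sed -e "s/##MASTER_NAME##/${name}/g" -e "s/##MASTER_IP##/${ip}/g" "$template"
}

# A small excerpt of etcd.service.template, written to a temporary file.
tpl=$(mktemp)
cat > "$tpl" << 'EOF'
--name=##MASTER_NAME##
--initial-advertise-peer-urls=https://##MASTER_IP##:2380
--listen-client-urls=https://##MASTER_IP##:2379,http://127.0.0.1:2379
EOF

render_template "$tpl" k8s-master01 192.168.1.1

# A leftover "##" would mean a placeholder was not replaced.
if render_template "$tpl" k8s-master01 192.168.1.1 | grep -q '##'; then
  echo "WARN: unrendered placeholder"
fi
```

On the master, the full template can be previewed the same way, e.g. `render_template etcd.service.template "${MASTER_NAMES[0]}" "${MASTER_IPS[0]}"`.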

4) Start ETCD

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp etcd-${master_ip}.service root@${master_ip}:/etc/systemd/system/etcd.service
ssh root@${master_ip} "mkdir -p ${ETCD_DATA_DIR} ${ETCD_WAL_DIR}"
ssh root@${master_ip} "systemctl daemon-reload && systemctl enable etcd && systemctl restart etcd"
done

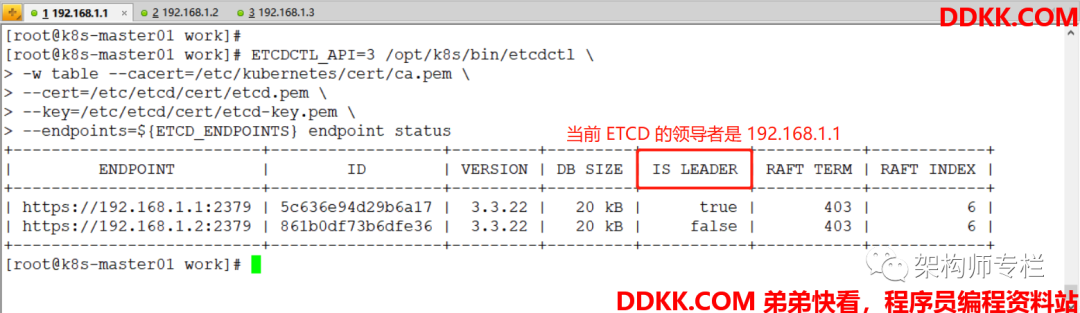

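Check which ETCD member is currently the Leader

[root@k8s-master01 work]# ETCDCTL_API=3 /opt/k8s/bin/etcdctl \
-w table --cacert=/etc/kubernetes/cert/ca.pem \
--cert=/etc/etcd/cert/etcd.pem \
--key=/etc/etcd/cert/etcd-key.pem \
--endpoints=${ETCD_ENDPOINTS} endpoint status

3. Install the Flannel network plugin

Flannel is an overlay-network solution for cross-host container networking: it encapsulates packets inside another network packet (with the vxlan backend used here, inside UDP) for routing and forwarding. Flannel is written in Go, and its main purpose is to let containers on different hosts communicate with each other.

Download Flannel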

[root@k8s-master01 work]# mkdir flannel

[root@k8s-master01 work]# wget https://github.com/coreos/flannel/releases/download/v0.11.0/flannel-v0.11.0-linux-amd64.tar.gz

[root@k8s-master01 work]# tar -zxf flannel-v0.11.0-linux-amd64.tar.gz -C flannel

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
scp flannel/{flanneld,mk-docker-opts.sh} root@${all_ip}:/opt/k8s/bin/
ssh root@${all_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the Flannel certificate and key

[root@k8s-master01 work]# cat > flanneld-csr.json << EOF

{

"CN": "flanneld",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes flanneld-csr.json | cfssljson -bare flanneld

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
ssh root@${all_ip} "mkdir -p /etc/flanneld/cert"
scp flanneld*.pem root@${all_ip}:/etc/flanneld/cert
done

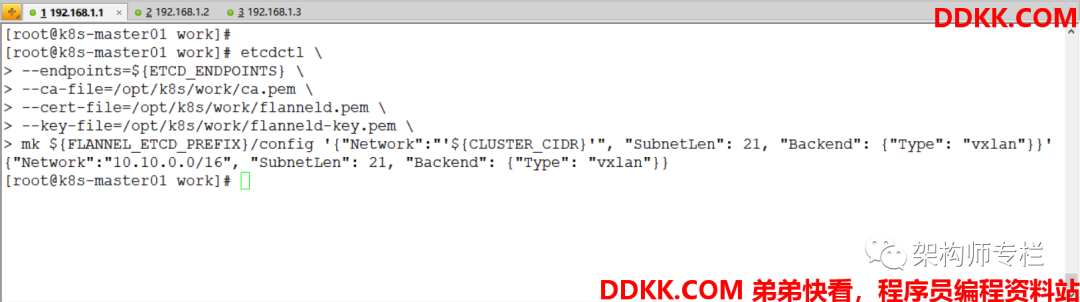

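Write the Pod network configuration into ETCD (flannel v0.11 reads it through the etcd v2 API, which is why etcd was started with --enable-v2=true and the v2 etcdctl command mk is used here)

[root@k8s-master01 work]# etcdctl \
--endpoints=${ETCD_ENDPOINTS} \
--ca-file=/opt/k8s/work/ca.pem \
--cert-file=/opt/k8s/work/flanneld.pem \
--key-file=/opt/k8s/work/flanneld-key.pem \
mk ${FLANNEL_ETCD_PREFIX}/config '{"Network":"'${CLUSTER_CIDR}'", "SubnetLen": 21, "Backend": {"Type": "vxlan"}}'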
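With `Network` set to the /16 in CLUSTER_CIDR and `SubnetLen` set to 21, each node's flanneld leases one /21 out of the /16. The arithmetic is easy to check with a small helper (`subnet_math` is a hypothetical name): it gives an upper bound of 32 node subnets of 2048 addresses each. Flannel's configuration documentation states that allocation starts by default at the second subnet of the network (`SubnetMin`), so the practical limit is one lower.

```bash
#!/bin/bash
# Given the prefix length of the flannel Network and its SubnetLen, print how
# many per-node subnets exist and how many addresses each one contains.
subnet_math() {
  local net_len=$1 subnet_len=$2
  local subnets=$(( 1 << (subnet_len - net_len) ))
  local addrs=$(( 1 << (32 - subnet_len) ))
  echo "subnets=${subnets} addresses_per_subnet=${addrs}"
}

subnet_math 16 21   # prints: subnets=32 addresses_per_subnet=2048
subnet_math 16 24   # prints: subnets=256 addresses_per_subnet=256
```

So this configuration supports roughly 31 nodes; a larger `SubnetLen` such as 24 allows more nodes at the cost of fewer Pod addresses per node.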

3) Write the startup script

[root@k8s-master01 work]# cat > flanneld.service << EOF
[Unit]
Description=Flanneld overlay address etcd agent
After=network.target
After=network-online.target
Wants=network-online.target
After=etcd.service
Before=docker.service

[Service]
Type=notify
ExecStart=/opt/k8s/bin/flanneld \
  -etcd-cafile=/etc/kubernetes/cert/ca.pem \
  -etcd-certfile=/etc/flanneld/cert/flanneld.pem \
  -etcd-keyfile=/etc/flanneld/cert/flanneld-key.pem \
  -etcd-endpoints=${ETCD_ENDPOINTS} \
  -etcd-prefix=${FLANNEL_ETCD_PREFIX} \
  -iface=${IFACE} \
  -ip-masq
ExecStartPost=/opt/k8s/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
RequiredBy=docker.service
EOF

4) Start and verify

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
scp flanneld.service root@${all_ip}:/etc/systemd/system/
ssh root@${all_ip} "systemctl daemon-reload && systemctl enable flanneld --now"
done

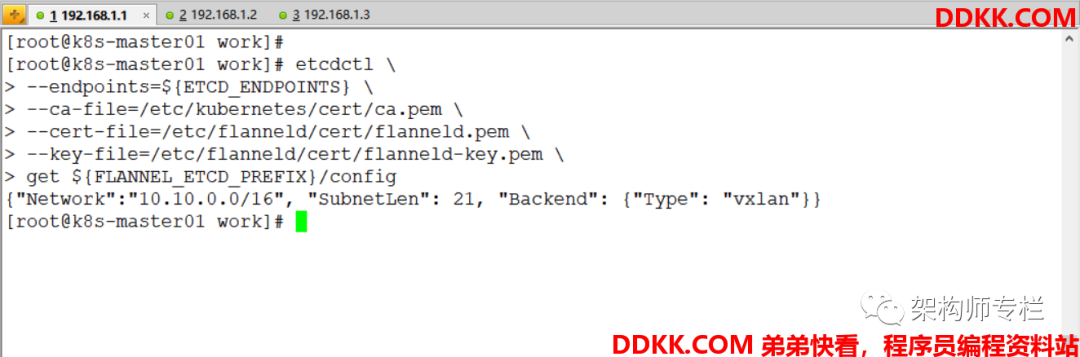

1) View the Pod network configuration

[root@k8s-master01 work]# etcdctl \
--endpoints=${ETCD_ENDPOINTS} \
--ca-file=/etc/kubernetes/cert/ca.pem \
--cert-file=/etc/flanneld/cert/flanneld.pem \
--key-file=/etc/flanneld/cert/flanneld-key.pem \
get ${FLANNEL_ETCD_PREFIX}/config

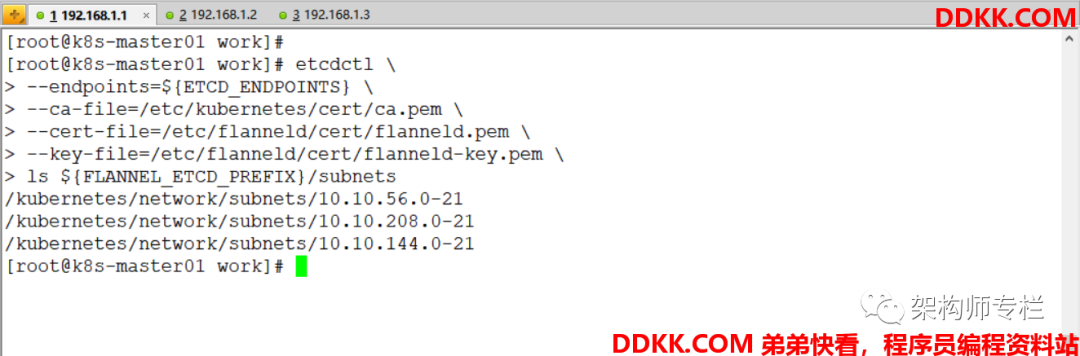

2) List the Pod subnets that have been allocated

[root@k8s-master01 work]# etcdctl \
--endpoints=${ETCD_ENDPOINTS} \
--ca-file=/etc/kubernetes/cert/ca.pem \
--cert-file=/etc/flanneld/cert/flanneld.pem \
--key-file=/etc/flanneld/cert/flanneld-key.pem \
ls ${FLANNEL_ETCD_PREFIX}/subnets

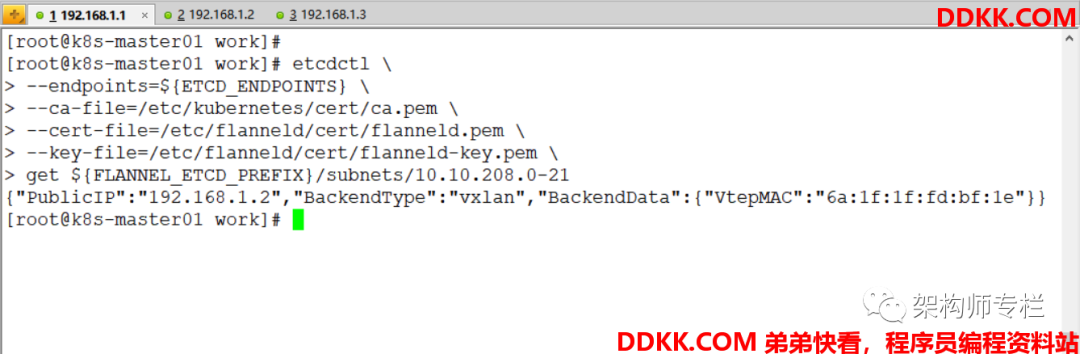

3) View the node IP and Flannel interface address for one Pod subnet (replace 10.10.208.0-21 with a key from the list above)

[root@k8s-master01 work]# etcdctl \
--endpoints=${ETCD_ENDPOINTS} \
--ca-file=/etc/kubernetes/cert/ca.pem \
--cert-file=/etc/flanneld/cert/flanneld.pem \
--key-file=/etc/flanneld/cert/flanneld-key.pem \
get ${FLANNEL_ETCD_PREFIX}/subnets/10.10.208.0-21
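The subnet keys listed above encode a CIDR with `-` instead of `/` (for example `10.10.208.0-21`; your keys will differ). A small helper, hypothetically named `key_to_cidr`, converts a key, with or without the etcd prefix, into normal CIDR notation, which is convenient when comparing the leases with `ip addr` output on each node.

```bash
#!/bin/bash
# Convert a flannel subnet key such as
# /kubernetes/network/subnets/10.10.208.0-21 into 10.10.208.0/21.
key_to_cidr() {
  local key=${1##*/}              # keep only the last path component
  echo "${key%-*}/${key##*-}"     # network part + "/" + prefix length
}

key_to_cidr /kubernetes/network/subnets/10.10.208.0-21   # prints 10.10.208.0/21
key_to_cidr 10.10.16.0-21                                # prints 10.10.16.0/21
```

For example, the output of the `ls ${FLANNEL_ETCD_PREFIX}/subnets` command above can be piped through `while read -r k; do key_to_cidr "$k"; done`.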

4. Install Docker

Docker runs and manages the containers. In Kubernetes 1.18, Kubelet talks to Docker through its built-in dockershim, which implements the Container Runtime Interface (CRI).

Download Docker

[root@k8s-master01 work]# wget https://download.docker.com/linux/static/stable/x86_64/docker-19.03.12.tgz

[root@k8s-master01 work]# tar -zxf docker-19.03.12.tgz

Install Docker

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
scp docker/* root@${all_ip}:/opt/k8s/bin/
ssh root@${all_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the startup script

[root@k8s-master01 work]# cat > docker.service << "EOF"

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.io

[Service]

WorkingDirectory=##DOCKER_DIR##

Environment="PATH=/opt/k8s/bin:/bin:/sbin:/usr/bin:/usr/sbin"

EnvironmentFile=-/run/flannel/docker

ExecStart=/opt/k8s/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

Restart=on-failure

RestartSec=5

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

[root@k8s-master01 work]# sed -i -e "s|##DOCKER_DIR##|${DOCKER_DIR}|" docker.service

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
scp docker.service root@${all_ip}:/etc/systemd/system/
done
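docker.service gets its bridge subnet from flanneld: `mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker` (the ExecStartPost in flanneld.service) writes the options to /run/flannel/docker, which docker.service loads with `EnvironmentFile=` and passes to dockerd as `$DOCKER_NETWORK_OPTIONS`. The sketch below uses a sample file whose content is an assumption for illustration (the real values depend on the subnet flanneld leased), and extracts the `--bip` value with a hypothetical helper `bip_from_env_file`. Sourcing the file in a shell is only an approximation of systemd's `EnvironmentFile=` parsing, which is close enough for a single `KEY="VALUE"` line.

```bash
#!/bin/bash
# Print the --bip value from a flannel-generated Docker options file.
# The file is sourced in a subshell so its variable does not leak out.
bip_from_env_file() {
  (
    . "$1"
    for opt in ${DOCKER_NETWORK_OPTIONS}; do   # unquoted on purpose: split into options
      if [[ $opt == --bip=* ]]; then
        echo "${opt#--bip=}"
      fi
    done
  )
}

# Sample content (illustrative only; your subnet and MTU may differ).
sample=$(mktemp)
cat > "$sample" << 'EOF'
DOCKER_NETWORK_OPTIONS=" --bip=10.10.208.1/21 --ip-masq=false --mtu=1450"
EOF

bip_from_env_file "$sample"   # prints 10.10.208.1/21
```

On a node, `bip_from_env_file /run/flannel/docker` should match the address shown by `ip -4 addr show docker0` once Docker has started.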

Configure the daemon.json file

[root@k8s-master01 work]# cat > daemon.json << EOF

{

"registry-mirrors": ["https://ipbtg5l0.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=cgroupfs"],

"data-root": "${DOCKER_DIR}/data",

"exec-root": "${DOCKER_DIR}/exec",

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "5"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF
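With the json-file log driver, `max-size` and `max-file` bound how much disk each container's logs can use: at most `max-file` files of up to `max-size` each. A quick calculation, using a hypothetical helper `log_budget_mb` that only understands sizes given in megabytes (`m`):

```bash
#!/bin/bash
# Worst-case log disk usage per container, in MB, for json-file log-opts.
# Only handles max-size values expressed in megabytes, e.g. "100m".
log_budget_mb() {
  local max_size=$1 max_file=$2
  [[ $max_size =~ ^([0-9]+)m$ ]] || { echo "unsupported size: $max_size" >&2; return 1; }
  echo $(( BASH_REMATCH[1] * max_file ))
}

log_budget_mb 100m 5   # prints 500
```

So with the settings above each container can use up to about 500 MB for logs under the Docker data root; multiply by the expected number of containers per node when sizing the disk behind DOCKER_DIR.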

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
ssh root@${all_ip} "mkdir -p /etc/docker/ ${DOCKER_DIR}/{data,exec}"
scp daemon.json root@${all_ip}:/etc/docker/daemon.json
done

2) Start Docker

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
echo ">>> ${all_ip}"
ssh root@${all_ip} "systemctl daemon-reload && systemctl enable docker --now"
done

5. Install Kubectl

Download Kubectl

[root@k8s-master01 work]# wget https://storage.googleapis.com/kubernetes-release/release/v1.18.3/kubernetes-client-linux-amd64.tar.gz

[root@k8s-master01 work]# tar -zxf kubernetes-client-linux-amd64.tar.gz

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp kubernetes/client/bin/kubectl root@${master_ip}:/opt/k8s/bin/
ssh root@${master_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the Admin certificate and key

[root@k8s-master01 work]# cat > admin-csr.json << EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

3) Create the Kubeconfig file

Set the cluster parameters

[root@k8s-master01 work]# kubectl config set-cluster kubernetes \
--certificate-authority=/opt/k8s/work/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kubectl.kubeconfig

Set the client authentication parameters

[root@k8s-master01 work]# kubectl config set-credentials admin \
--client-certificate=/opt/k8s/work/admin.pem \
--client-key=/opt/k8s/work/admin-key.pem \
--embed-certs=true \
--kubeconfig=kubectl.kubeconfig

Set the context parameters

[root@k8s-master01 work]# kubectl config set-context kubernetes \
--cluster=kubernetes \
--user=admin \
--kubeconfig=kubectl.kubeconfig

Set the default context

[root@k8s-master01 work]# kubectl config use-context kubernetes --kubeconfig=kubectl.kubeconfig
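The same four `kubectl config` steps are repeated later for Controller Manager and other components. As a sketch, they can be wrapped in one function; `make_kubeconfig` is a hypothetical helper, and `KUBECTL` and `CA_FILE` are hypothetical overrides (set `KUBECTL=echo` to print the commands instead of running them).

```bash
#!/bin/bash
# Generate a kubeconfig with the same four "kubectl config" steps as above.
# Arguments: user name, context name, client cert, client key, output file.
make_kubeconfig() {
  local user=$1 context=$2 cert=$3 key=$4 out=$5
  local kc=${KUBECTL:-kubectl}
  local ca=${CA_FILE:-/opt/k8s/work/ca.pem}
  local server=${KUBE_APISERVER:-https://192.168.1.1:6443}

  $kc config set-cluster kubernetes --certificate-authority="$ca" \
    --embed-certs=true --server="$server" --kubeconfig="$out" || return 1
  $kc config set-credentials "$user" --client-certificate="$cert" \
    --client-key="$key" --embed-certs=true --kubeconfig="$out" || return 1
  $kc config set-context "$context" --cluster=kubernetes --user="$user" \
    --kubeconfig="$out" || return 1
  $kc config use-context "$context" --kubeconfig="$out"
}

# Preview the commands for the admin kubeconfig built above:
KUBECTL=echo make_kubeconfig admin kubernetes \
  /opt/k8s/work/admin.pem /opt/k8s/work/admin-key.pem kubectl.kubeconfig
```

Without `KUBECTL=echo` the function runs kubectl for real; for example, the Controller Manager kubeconfig later in this tutorial corresponds to `make_kubeconfig system:kube-controller-manager system:kube-controller-manager kube-controller-manager.pem kube-controller-manager-key.pem kube-controller-manager.kubeconfig`.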

4) Create the Kubectl configuration file and set up command completion

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
ssh root@${master_ip} "mkdir -p ~/.kube"
scp kubectl.kubeconfig root@${master_ip}:~/.kube/config
ssh root@${master_ip} "echo 'export KUBECONFIG=$HOME/.kube/config' >> ~/.bashrc"
ssh root@${master_ip} "echo 'source <(kubectl completion bash)' >> ~/.bashrc"
done

Run the following commands on both k8s-master01 and k8s-master02:

[root@k8s-master01 work]# source /usr/share/bash-completion/bash_completion

[root@k8s-master01 work]# source <(kubectl completion bash)

[root@k8s-master01 work]# source ~/.bashrc

III. Installing the Kubernetes Components

1. Install Kube-APIServer

Download the Kubernetes binaries

[root@k8s-master01 work]# wget https://storage.googleapis.com/kubernetes-release/release/v1.18.3/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master01 work]# tar -zxf kubernetes-server-linux-amd64.tar.gz

[root@k8s-master01 work]# cd kubernetes

[root@k8s-master01 kubernetes]# tar -zxf kubernetes-src.tar.gz

[root@k8s-master01 kubernetes]# cd ..

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp -rp kubernetes/server/bin/{apiextensions-apiserver,kube-apiserver,kube-controller-manager,kube-scheduler,kubeadm,kubectl,mounter} root@${master_ip}:/opt/k8s/bin/
ssh root@${master_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the Kubernetes certificate and key

[root@k8s-master01 work]# cat > kubernetes-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.1.1",

"192.168.1.2",

"${CLUSTER_KUBERNETES_SVC_IP}",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local."

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
ssh root@${master_ip} "mkdir -p /etc/kubernetes/cert"
scp kubernetes*.pem root@${master_ip}:/etc/kubernetes/cert/
done

3) Configure Kube-APIServer encryption and auditing

Create the encryption configuration file (Secrets are encrypted in ETCD with the aescbc provider)

[root@k8s-master01 work]# cat > encryption-config.yaml << EOF
kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: zhangsan
              secret: ${ENCRYPTION_KEY}
      - identity: {}
EOF

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp encryption-config.yaml root@${master_ip}:/etc/kubernetes/encryption-config.yaml
done
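The `secret` above is the base64-encoded key from ENCRYPTION_KEY. The Kubernetes documentation recommends a random 32-byte key for `aescbc`, which is what environment.sh generates. Because environment.sh creates a new random key every time it is sourced, regenerating encryption-config.yaml after Secrets have been written would leave the apiservers unable to decrypt them, so keep the generated file. A quick length check, using a hypothetical helper `key_bytes`:

```bash
#!/bin/bash
# Print the number of raw bytes encoded in a base64 string.
key_bytes() {
  printf '%s' "$1" | base64 -d | wc -c
}

# Uses ENCRYPTION_KEY from environment.sh if set, otherwise a fresh demo key.
key=${ENCRYPTION_KEY:-$(head -c 32 /dev/urandom | base64)}
if [[ $(key_bytes "$key") -eq 32 ]]; then
  echo "ENCRYPTION_KEY decodes to 32 bytes"
else
  echo "WARN: unexpected key length"
fi
```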

Create the audit policy file

[root@k8s-master01 work]# cat > audit-policy.yaml << EOF
apiVersion: audit.k8s.io/v1beta1
kind: Policy
rules:
  # The following requests were manually identified as high-volume and low-risk, so drop them.
  - level: None
    resources:
      - group: ""
        resources:
          - endpoints
          - services
          - services/status
    users:
      - 'system:kube-proxy'
    verbs:
      - watch
  - level: None
    resources:
      - group: ""
        resources:
          - nodes
          - nodes/status
    userGroups:
      - 'system:nodes'
    verbs:
      - get
  - level: None
    namespaces:
      - kube-system
    resources:
      - group: ""
        resources:
          - endpoints
    users:
      - 'system:kube-controller-manager'
      - 'system:kube-scheduler'
      - 'system:serviceaccount:kube-system:endpoint-controller'
    verbs:
      - get
      - update
  - level: None
    resources:
      - group: ""
        resources:
          - namespaces
          - namespaces/status
          - namespaces/finalize
    users:
      - 'system:apiserver'
    verbs:
      - get
  # Don't log HPA fetching metrics.
  - level: None
    resources:
      - group: metrics.k8s.io
    users:
      - 'system:kube-controller-manager'
    verbs:
      - get
      - list
  # Don't log these read-only URLs.
  - level: None
    nonResourceURLs:
      - '/healthz*'
      - /version
      - '/swagger*'
  # Don't log events requests.
  - level: None
    resources:
      - group: ""
        resources:
          - events
  # node and pod status calls from nodes are high-volume and can be large, don't log responses for expected updates from nodes
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    users:
      - kubelet
      - 'system:node-problem-detector'
      - 'system:serviceaccount:kube-system:node-problem-detector'
    verbs:
      - update
      - patch
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - nodes/status
          - pods/status
    userGroups:
      - 'system:nodes'
    verbs:
      - update
      - patch
  # deletecollection calls can be large, don't log responses for expected namespace deletions
  - level: Request
    omitStages:
      - RequestReceived
    users:
      - 'system:serviceaccount:kube-system:namespace-controller'
    verbs:
      - deletecollection
  # Secrets, ConfigMaps, and TokenReviews can contain sensitive & binary data,
  # so only log at the Metadata level.
  - level: Metadata
    omitStages:
      - RequestReceived
    resources:
      - group: ""
        resources:
          - secrets
          - configmaps
      - group: authentication.k8s.io
        resources:
          - tokenreviews
  # Get responses can be large; skip them.
  - level: Request
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io
    verbs:
      - get
      - list
      - watch
  # Default level for known APIs
  - level: RequestResponse
    omitStages:
      - RequestReceived
    resources:
      - group: ""
      - group: admissionregistration.k8s.io
      - group: apiextensions.k8s.io
      - group: apiregistration.k8s.io
      - group: apps
      - group: authentication.k8s.io
      - group: authorization.k8s.io
      - group: autoscaling
      - group: batch
      - group: certificates.k8s.io
      - group: extensions
      - group: metrics.k8s.io
      - group: networking.k8s.io
      - group: policy
      - group: rbac.authorization.k8s.io
      - group: scheduling.k8s.io
      - group: settings.k8s.io
      - group: storage.k8s.io
  # Default level for all other requests.
  - level: Metadata
    omitStages:
      - RequestReceived
EOF

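[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp audit-policy.yaml root@${master_ip}:/etc/kubernetes/audit-policy.yaml
done

4) Configure Metrics-Server

Create the certificate signing request for metrics-server (kube-apiserver presents this certificate, via --proxy-client-cert-file, when it proxies requests to aggregated APIs such as metrics-server)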

[root@k8s-master01 work]# cat > proxy-client-csr.json << EOF

{

"CN": "system:metrics-server",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes proxy-client-csr.json | cfssljson -bare proxy-client

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp proxy-client*.pem root@${master_ip}:/etc/kubernetes/cert/
done

5) Create the startup script

[root@k8s-master01 work]# cat > kube-apiserver.service.template << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-apiserver
ExecStart=/opt/k8s/bin/kube-apiserver \
  --insecure-port=0 \
  --secure-port=6443 \
  --bind-address=##MASTER_IP## \
  --advertise-address=##MASTER_IP## \
  --default-not-ready-toleration-seconds=360 \
  --default-unreachable-toleration-seconds=360 \
  --feature-gates=DynamicAuditing=true \
  --max-mutating-requests-inflight=2000 \
  --max-requests-inflight=4000 \
  --default-watch-cache-size=200 \
  --delete-collection-workers=2 \
  --encryption-provider-config=/etc/kubernetes/encryption-config.yaml \
  --etcd-cafile=/etc/kubernetes/cert/ca.pem \
  --etcd-certfile=/etc/kubernetes/cert/kubernetes.pem \
  --etcd-keyfile=/etc/kubernetes/cert/kubernetes-key.pem \
  --etcd-servers=${ETCD_ENDPOINTS} \
  --tls-cert-file=/etc/kubernetes/cert/kubernetes.pem \
  --tls-private-key-file=/etc/kubernetes/cert/kubernetes-key.pem \
  --audit-dynamic-configuration \
  --audit-log-maxage=30 \
  --audit-log-maxbackup=3 \
  --audit-log-maxsize=100 \
  --audit-log-truncate-enabled=true \
  --audit-log-path=${K8S_DIR}/kube-apiserver/audit.log \
  --audit-policy-file=/etc/kubernetes/audit-policy.yaml \
  --profiling \
  --anonymous-auth=false \
  --client-ca-file=/etc/kubernetes/cert/ca.pem \
  --enable-bootstrap-token-auth=true \
  --requestheader-allowed-names="system:metrics-server" \
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-extra-headers-prefix=X-Remote-Extra- \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --service-account-key-file=/etc/kubernetes/cert/ca.pem \
  --authorization-mode=Node,RBAC \
  --runtime-config=api/all=true \
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction \
  --allow-privileged=true \
  --apiserver-count=2 \
  --event-ttl=168h \
  --kubelet-certificate-authority=/etc/kubernetes/cert/ca.pem \
  --kubelet-client-certificate=/etc/kubernetes/cert/kubernetes.pem \
  --kubelet-client-key=/etc/kubernetes/cert/kubernetes-key.pem \
  --kubelet-https=true \
  --kubelet-timeout=10s \
  --proxy-client-cert-file=/etc/kubernetes/cert/proxy-client.pem \
  --proxy-client-key-file=/etc/kubernetes/cert/proxy-client-key.pem \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --service-node-port-range=${NODE_PORT_RANGE} \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=10
Type=notify
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

6) Start Kube-APIServer and verify

[root@k8s-master01 work]# for (( A=0; A < 2; A++ ))
do
sed -e "s/##MASTER_NAME##/${MASTER_NAMES[A]}/" -e "s/##MASTER_IP##/${MASTER_IPS[A]}/" kube-apiserver.service.template > kube-apiserver-${MASTER_IPS[A]}.service
done

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
echo ">>> ${master_ip}"
scp kube-apiserver-${master_ip}.service root@${master_ip}:/etc/systemd/system/kube-apiserver.service
ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-apiserver"
ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-apiserver --now"
done

View the data Kube-APIServer has written to ETCD

[root@k8s-master01 work]# ETCDCTL_API=3 etcdctl \
--endpoints=${ETCD_ENDPOINTS} \
--cacert=/opt/k8s/work/ca.pem \
--cert=/opt/k8s/work/etcd.pem \
--key=/opt/k8s/work/etcd-key.pem \
get /registry/ --prefix --keys-only

View cluster information

[root@k8s-master01 work]# kubectl cluster-info

[root@k8s-master01 work]# kubectl get all --all-namespaces

[root@k8s-master01 work]# kubectl get componentstatuses

[root@k8s-master01 work]# netstat -anpt | grep 6443

Grant kube-apiserver permission to access the kubelet API

[root@k8s-master01 work]# kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes

2. Install Controller Manager

1) Create the Controller Manager certificate and key

[root@k8s-master01 work]# cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"192.168.1.1",

"192.168.1.2"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager*.pem root@${master_ip}:/etc/kubernetes/cert/
done

3) Create the Kubeconfig file

[root@k8s-master01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master01 work]# kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master01 work]# kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master01 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.kubeconfig root@${master_ip}:/etc/kubernetes/
done

4) Create the systemd unit file

[root@k8s-master01 work]# cat > kube-controller-manager.service.template << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \
  --secure-port=10257 \
  --bind-address=127.0.0.1 \
  --profiling \
  --cluster-name=kubernetes \
  --controllers=*,bootstrapsigner,tokencleaner \
  --kube-api-qps=1000 \
  --kube-api-burst=2000 \
  --leader-elect \
  --use-service-account-credentials \
  --concurrent-service-syncs=2 \
  --tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \
  --tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \
  --authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-allowed-names="system:metrics-server" \
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \
  --cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \
  --experimental-cluster-signing-duration=87600h \
  --horizontal-pod-autoscaler-sync-period=10s \
  --concurrent-deployment-syncs=10 \
  --concurrent-gc-syncs=30 \
  --node-cidr-mask-size=24 \
  --service-cluster-ip-range=${SERVICE_CIDR} \
  --cluster-cidr=${CLUSTER_CIDR} \
  --pod-eviction-timeout=6m \
  --terminated-pod-gc-threshold=10000 \
  --root-ca-file=/etc/kubernetes/cert/ca.pem \
  --service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \
  --kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
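Because the heredoc is unquoted, the shell joins each line ending in `\` with the next one. If the backslash has no space before it, two flags fuse into one invalid argument: `--foo\` followed by `--bar` becomes `--foo--bar`. The sketch below scans a generated template for such tokens. `find_glued_flags` is a hypothetical lint, not an existing tool.

```shell
# Hypothetical lint: find_glued_flags FILE
# Prints every token in which "--" directly follows a non-space character,
# e.g. "--use-service-account-credentials--concurrent-service-syncs=2".
# Returns 1 if any such token was found, 0 otherwise.
find_glued_flags() {
  if grep -oE -- '[^[:space:]]*[^[:space:]]--[a-z][^[:space:]]*' "$1"; then
    return 1
  fi
  return 0
}
```

Running `find_glued_flags kube-controller-manager.service.template` before copying the unit to the masters should print nothing and return 0.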

5) Start and verify

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.service.template root@${master_ip}:/etc/systemd/system/kube-controller-manager.service
  ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"
  ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager --now"
done

View the exported metrics

[root@k8s-master01 work]# curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://127.0.0.1:10257/metrics | head

Check permissions

[root@k8s-master01 work]# kubectl describe clusterrole system:kube-controller-manager

[root@k8s-master01 work]# kubectl get clusterrole | grep controller

[root@k8s-master01 work]# kubectl describe clusterrole system:controller:deployment-controller

Check the current leader

[root@k8s-master01 work]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
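In this setup, leader election is recorded on the Endpoints object shown above, in the `control-plane.alpha.kubernetes.io/leader` annotation. That annotation holds a small JSON document whose `holderIdentity` field names the leading instance. The sketch below extracts that field from the YAML using sed only. `leader_of` is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get endpoints ... -o yaml` on stdin and
# print the holderIdentity stored in the leader-election annotation.
leader_of() {
  sed -n 's/.*control-plane\.alpha\.kubernetes\.io\/leader:.*"holderIdentity":"\([^"]*\)".*/\1/p'
}
```

Pipe the command above into `leader_of`. The same filter works on `kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml`.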

3. Install the Kube-Scheduler component

1) Create the Kube-Scheduler certificate and key

[root@k8s-master01 work]# cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.1.1",

"192.168.1.2"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-scheduler*.pem root@${master_ip}:/etc/kubernetes/cert/
done

3) Create the Kubeconfig file

[root@k8s-master01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master01 work]# kubectl config set-credentials system:kube-scheduler \
  --client-certificate=kube-scheduler.pem \
  --client-key=kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master01 work]# kubectl config set-context system:kube-scheduler \
  --cluster=kubernetes \
  --user=system:kube-scheduler \
  --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master01 work]# kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-scheduler.kubeconfig root@${master_ip}:/etc/kubernetes/
done

4) Create the Kube-Scheduler configuration file

[root@k8s-master01 work]# cat > kube-scheduler.yaml.template << EOF
apiVersion: kubescheduler.config.k8s.io/v1alpha1
kind: KubeSchedulerConfiguration
bindTimeoutSeconds: 600
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-scheduler.kubeconfig"
  qps: 100
enableContentionProfiling: false
enableProfiling: true
hardPodAffinitySymmetricWeight: 1
healthzBindAddress: 127.0.0.1:10251
leaderElection:
  leaderElect: true
metricsBindAddress: 127.0.0.1:10251
EOF

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-scheduler.yaml.template root@${master_ip}:/etc/kubernetes/kube-scheduler.yaml
done

5) Create the systemd unit file

[root@k8s-master01 work]# cat > kube-scheduler.service.template << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-scheduler
ExecStart=/opt/k8s/bin/kube-scheduler \
  --port=0 \
  --secure-port=10259 \
  --bind-address=127.0.0.1 \
  --config=/etc/kubernetes/kube-scheduler.yaml \
  --tls-cert-file=/etc/kubernetes/cert/kube-scheduler.pem \
  --tls-private-key-file=/etc/kubernetes/cert/kube-scheduler-key.pem \
  --authentication-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
  --client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-allowed-names="system:metrics-server" \
  --requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \
  --requestheader-extra-headers-prefix="X-Remote-Extra-" \
  --requestheader-group-headers=X-Remote-Group \
  --requestheader-username-headers=X-Remote-User \
  --authorization-kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \
  --logtostderr=true \
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF

6) Start and verify

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-scheduler.service.template root@${master_ip}:/etc/systemd/system/kube-scheduler.service
  ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-scheduler"
  ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-scheduler --now"
done

[root@k8s-master01 work]# netstat -nlpt | grep kube-schedule

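- 10251: serves HTTP requests; insecure port, no authentication or authorization required;

- 10259: serves HTTPS requests; secure port, authentication and authorization required (both ports expose /metrics and /healthz).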

View the exported metrics

[root@k8s-master01 work]# curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://127.0.0.1:10259/metrics | head

Check permissions

[root@k8s-master01 work]# kubectl describe clusterrole system:kube-scheduler

[root@k8s-master01 work]# kubectl get clusterrole | grep scheduler

Check the current leader

[root@k8s-master01 work]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

4. Install the Kubelet component

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
  echo ">>> ${all_ip}"
  scp kubernetes/server/bin/kubelet root@${all_ip}:/opt/k8s/bin/
  ssh root@${all_ip} "chmod +x /opt/k8s/bin/*"
done

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  export BOOTSTRAP_TOKEN=$(kubeadm token create \
    --description kubelet-bootstrap-token \
    --groups system:bootstrappers:${all_name} \
    --kubeconfig ~/.kube/config)
  kubectl config set-cluster kubernetes \
    --certificate-authority=/etc/kubernetes/cert/ca.pem \
    --embed-certs=true \
    --server=${KUBE_APISERVER} \
    --kubeconfig=kubelet-bootstrap-${all_name}.kubeconfig
  kubectl config set-credentials kubelet-bootstrap \
    --token=${BOOTSTRAP_TOKEN} \
    --kubeconfig=kubelet-bootstrap-${all_name}.kubeconfig
  kubectl config set-context default \
    --cluster=kubernetes \
    --user=kubelet-bootstrap \
    --kubeconfig=kubelet-bootstrap-${all_name}.kubeconfig
  kubectl config use-context default --kubeconfig=kubelet-bootstrap-${all_name}.kubeconfig
done

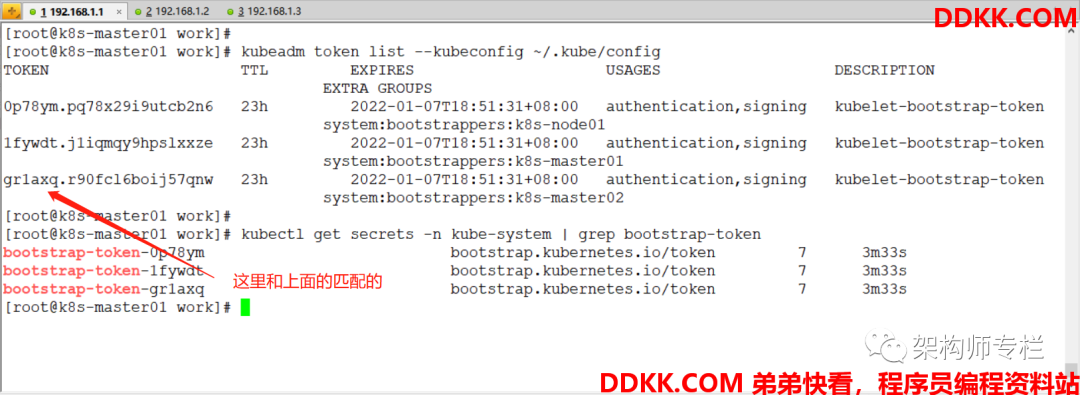

[root@k8s-master01 work]# kubeadm token list --kubeconfig ~/.kube/config # list the tokens kubeadm created for each node

[root@k8s-master01 work]# kubectl get secrets -n kube-system | grep bootstrap-token # list the Secret associated with each token
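Each bootstrap token has the form `<token-id>.<token-secret>`: 6 and 16 characters from `[a-z0-9]`. Its Secret in `kube-system` is named `bootstrap-token-<token-id>`, which is what the `grep bootstrap-token` above lists. The sketch below validates a token and derives the name of its Secret. `token_secret_name` is a hypothetical helper.

```shell
# Hypothetical helper: token_secret_name TOKEN
# Validates the "<6 chars>.<16 chars>" bootstrap token format and prints the
# name of the kube-system Secret that stores the token.
token_secret_name() {
  if ! printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "not a bootstrap token: $1" >&2
    return 1
  fi
  # Keep everything before the first "." (the token ID).
  echo "bootstrap-token-${1%%.*}"
}
```

For example, `kubectl get secret -n kube-system "$(token_secret_name "$BOOTSTRAP_TOKEN")"` inspects the Secret for the most recently created token.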

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  scp kubelet-bootstrap-${all_name}.kubeconfig root@${all_name}:/etc/kubernetes/kubelet-bootstrap.kubeconfig
done

Create the Kubelet parameter configuration file

[root@k8s-master01 work]# cat > kubelet-config.yaml.template << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: "##ALL_IP##"
staticPodPath: ""
syncFrequency: 1m
fileCheckFrequency: 20s
httpCheckFrequency: 20s
staticPodURL: ""
port: 10250
readOnlyPort: 0
rotateCertificates: true
serverTLSBootstrap: true
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/etc/kubernetes/cert/ca.pem"
authorization:
  mode: Webhook
registryPullQPS: 0
registryBurst: 20
eventRecordQPS: 0
eventBurst: 20
enableDebuggingHandlers: true
enableContentionProfiling: true
healthzPort: 10248
healthzBindAddress: "##ALL_IP##"
clusterDomain: "${CLUSTER_DNS_DOMAIN}"
clusterDNS:
  - "${CLUSTER_DNS_SVC_IP}"
nodeStatusUpdateFrequency: 10s
nodeStatusReportFrequency: 1m
imageMinimumGCAge: 2m
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
volumeStatsAggPeriod: 1m
kubeletCgroups: ""
systemCgroups: ""
cgroupRoot: ""
cgroupsPerQOS: true
cgroupDriver: cgroupfs
runtimeRequestTimeout: 10m
hairpinMode: promiscuous-bridge
maxPods: 220
podCIDR: "${CLUSTER_CIDR}"
podPidsLimit: -1
resolvConf: /etc/resolv.conf
maxOpenFiles: 1000000
kubeAPIQPS: 1000
kubeAPIBurst: 2000
serializeImagePulls: false
evictionHard:
  memory.available: "100Mi"
  nodefs.available: "10%"
  nodefs.inodesFree: "5%"
  imagefs.available: "15%"
evictionSoft: {}
enableControllerAttachDetach: true
failSwapOn: true
containerLogMaxSize: 20Mi
containerLogMaxFiles: 10
systemReserved: {}
kubeReserved: {}
systemReservedCgroup: ""
kubeReservedCgroup: ""
enforceNodeAllocatable: ["pods"]
EOF

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
  echo ">>> ${all_ip}"
  sed -e "s/##ALL_IP##/${all_ip}/" kubelet-config.yaml.template > kubelet-config-${all_ip}.yaml.template
  scp kubelet-config-${all_ip}.yaml.template root@${all_ip}:/etc/kubernetes/kubelet-config.yaml
done

1) Create the Kubelet systemd unit file

[root@k8s-master01 work]# cat > kubelet.service.template << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=docker.service
Requires=docker.service

[Service]
WorkingDirectory=${K8S_DIR}/kubelet
ExecStart=/opt/k8s/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/cert \
  --cgroup-driver=cgroupfs \
  --cni-conf-dir=/etc/cni/net.d \
  --container-runtime=docker \
  --container-runtime-endpoint=unix:///var/run/dockershim.sock \
  --root-dir=${K8S_DIR}/kubelet \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet-config.yaml \
  --hostname-override=##ALL_NAME## \
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause-amd64:3.2 \
  --image-pull-progress-deadline=15m \
  --volume-plugin-dir=${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/ \
  --logtostderr=true \
  --v=2
Restart=always
RestartSec=5
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF

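[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  sed -e "s/##ALL_NAME##/${all_name}/" kubelet.service.template > kubelet-${all_name}.service
  scp kubelet-${all_name}.service root@${all_name}:/etc/systemd/system/kubelet.service
done

2) Start and verify

Authorize the bootstrap tokens (bind the system:bootstrappers group to the system:node-bootstrapper ClusterRole so that kubelets can submit CSRs)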

[root@k8s-master01 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --group=system:bootstrappers

Start Kubelet

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  ssh root@${all_name} "mkdir -p ${K8S_DIR}/kubelet/kubelet-plugins/volume/exec/"
  ssh root@${all_name} "systemctl daemon-reload && systemctl enable kubelet --now"
done

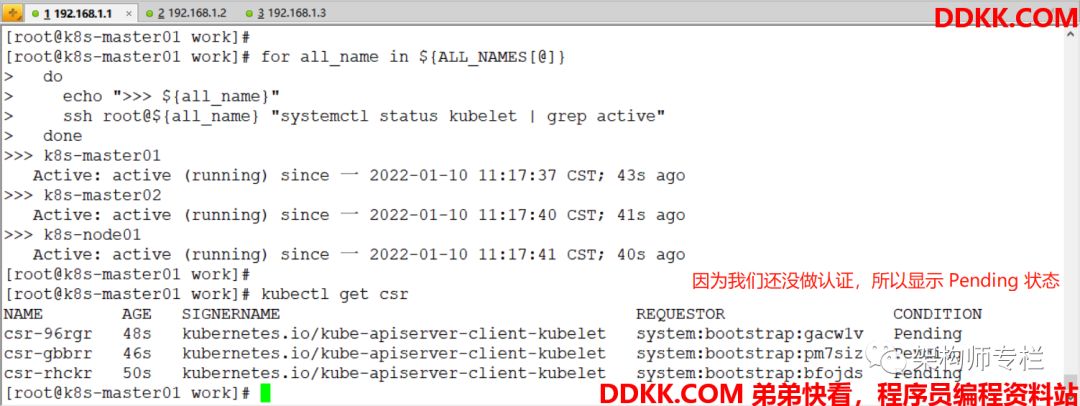

Check the Kubelet service

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  ssh root@${all_name} "systemctl status kubelet | grep active"
done

[root@k8s-master01 work]# kubectl get csr # the CSRs show Pending because they have not been approved yet

3) Approve CSR requests

Approve CSRs automatically: create three ClusterRoleBindings, used respectively to auto-approve client certificates, client certificate renewals and server certificate renewals.

[root@k8s-master01 work]# cat > csr-crb.yaml << EOF
# Approve all CSRs for the group "system:bootstrappers"
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: auto-approve-csrs-for-group
subjects:
- kind: Group
  name: system:bootstrappers
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
  apiGroup: rbac.authorization.k8s.io
---
# To let a node of the group "system:nodes" renew its own credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-client-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
  apiGroup: rbac.authorization.k8s.io
---
# A ClusterRole which instructs the CSR approver to approve a node requesting a
# serving cert matching its client cert.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: approve-node-server-renewal-csr
rules:
- apiGroups: ["certificates.k8s.io"]
  resources: ["certificatesigningrequests/selfnodeserver"]
  verbs: ["create"]
---
# To let a node of the group "system:nodes" renew its own server credentials
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: node-server-cert-renewal
subjects:
- kind: Group
  name: system:nodes
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: approve-node-server-renewal-csr
  apiGroup: rbac.authorization.k8s.io
EOF

[root@k8s-master01 work]# kubectl apply -f csr-crb.yaml

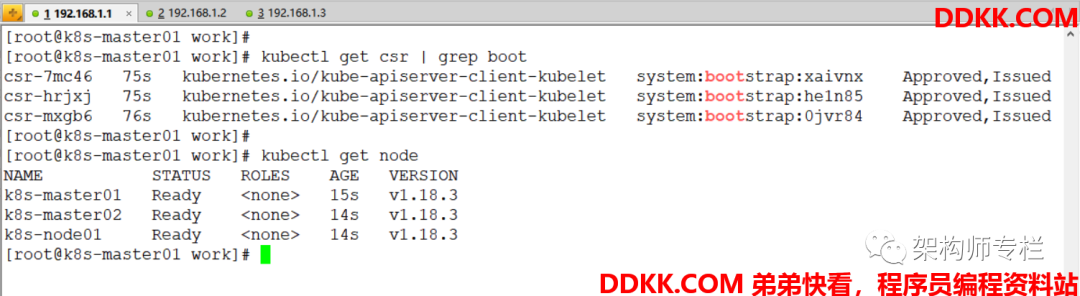

Verify: after a while (roughly 1 to 10 minutes), the CSRs of all three nodes are approved automatically.

[root@k8s-master01 work]# kubectl get csr | grep boot # CSRs submitted with bootstrap tokens

[root@k8s-master01 work]# kubectl get nodes # all nodes should be Ready

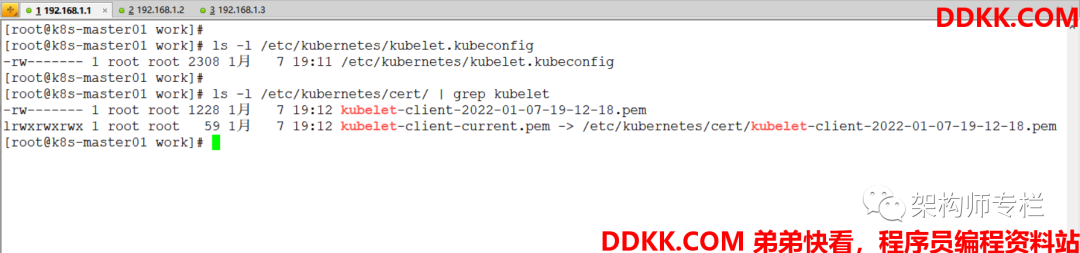

[root@k8s-master01 ~]# ls -l /etc/kubernetes/kubelet.kubeconfig

[root@k8s-master01 ~]# ls -l /etc/kubernetes/cert/ | grep kubelet

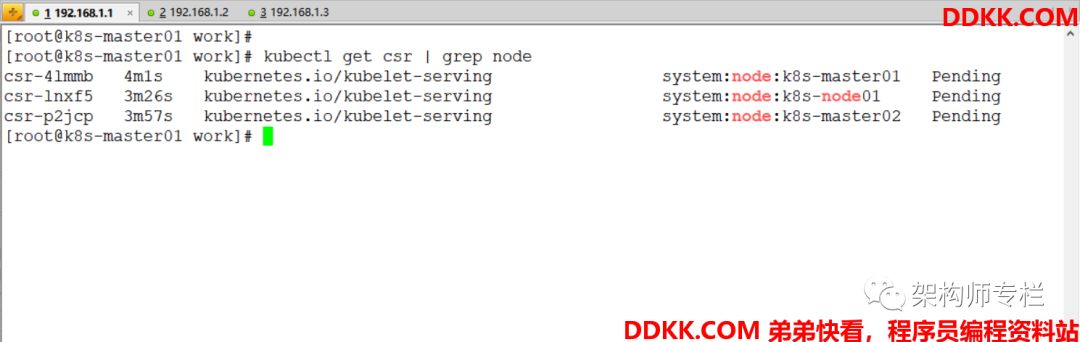

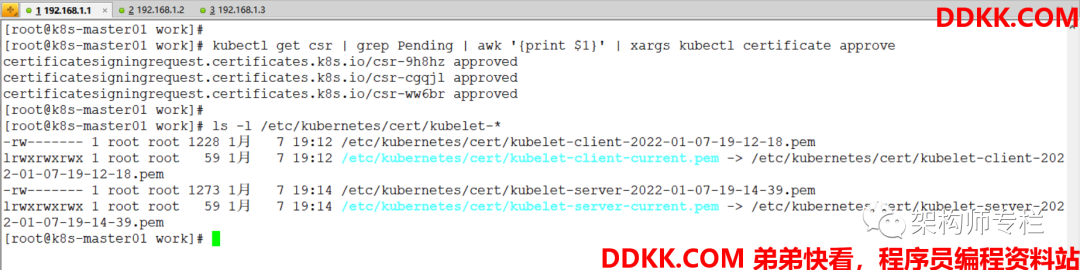

4) Manually approve server certificate CSRs

For security reasons, the CSR approving controller does not automatically approve kubelet server certificate signing requests, so they must be approved manually.

[root@k8s-master01 ~]# kubectl get csr | grep node

[root@k8s-master01 ~]# kubectl get csr | grep Pending | awk '{print $1}' | xargs kubectl certificate approve

[root@k8s-master01 ~]# ls -l /etc/kubernetes/cert/kubelet-*

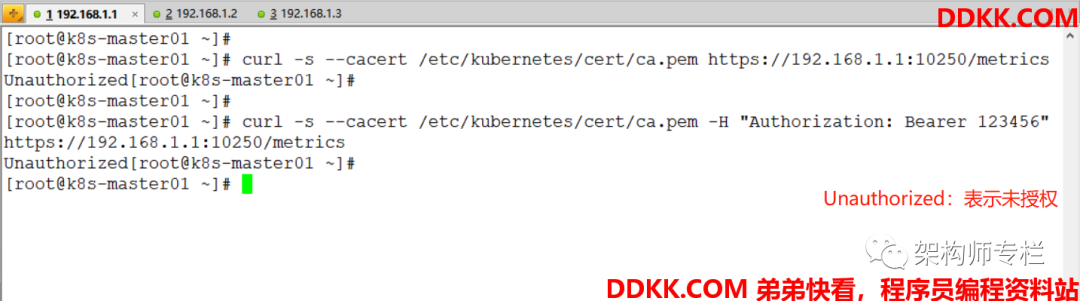
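The command `grep Pending` matches the word anywhere in a line. GNU `xargs` also runs `kubectl certificate approve` once with no arguments when nothing is pending. The sketch below is slightly stricter: it selects only rows whose condition is exactly `Pending`. It assumes, as in kubectl 1.18, that the condition is the last column of `kubectl get csr`. `pending_csrs` is a hypothetical helper.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin and print the
# names of CSRs whose CONDITION (last column) is exactly "Pending".
pending_csrs() {
  # NR > 1 skips the header row.
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
```

Combined with the GNU `-r` (`--no-run-if-empty`) option: `kubectl get csr | pending_csrs | xargs -r kubectl certificate approve`.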

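5) Kubelet API access configuration

Kubelet API authentication and authorization (anonymous access is disabled, so both of the following requests should be rejected as Unauthorized)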

[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem https://192.168.1.1:10250/metrics

[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer 123456" https://192.168.1.1:10250/metrics

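Certificate authentication and authorization

// The kube-controller-manager certificate lacks the required permission by default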

[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /etc/kubernetes/cert/kube-controller-manager.pem --key /etc/kubernetes/cert/kube-controller-manager-key.pem https://192.168.1.1:10250/metrics

// Use the admin certificate, which has the highest privileges

[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://192.168.1.1:10250/metrics | head

Bearer token authentication and authorization

[root@k8s-master01 ~]# kubectl create serviceaccount kubelet-api-test

[root@k8s-master01 ~]# kubectl create clusterrolebinding kubelet-api-test --clusterrole=system:kubelet-api-admin --serviceaccount=default:kubelet-api-test

[root@k8s-master01 ~]# SECRET=$(kubectl get secrets | grep kubelet-api-test | awk '{print $1}')

[root@k8s-master01 ~]# TOKEN=$(kubectl describe secret ${SECRET} | grep -E '^token' | awk '{print $2}')

[root@k8s-master01 ~]# echo ${TOKEN}

[root@k8s-master01 ~]# curl -s --cacert /etc/kubernetes/cert/ca.pem -H "Authorization: Bearer ${TOKEN}" https://192.168.1.1:10250/metrics | head
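The token used above is a service-account JWT. Its middle segment is base64url-encoded JSON, and the `sub` claim in that JSON names the service account (`system:serviceaccount:<namespace>:<name>`). The sketch below decodes that segment locally. It does not verify the signature, so use it only to inspect a token. `jwt_payload` is a hypothetical helper; it relies on a `base64` that supports `-d` (GNU coreutils and BusyBox do).

```shell
# Hypothetical helper: jwt_payload TOKEN
# Prints the decoded JSON payload (second "."-separated segment) of a JWT.
# The signature is NOT verified.
jwt_payload() {
  # base64url uses "-" and "_" instead of "+" and "/".
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url also drops the "=" padding; restore it before decoding.
  case $(( ${#seg} % 4 )) in
    2) seg="$seg==" ;;
    3) seg="$seg=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Run `jwt_payload "${TOKEN}"` to check which identity the kubelet API will see.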

5. Install the Kube-Proxy component

Kube-Proxy runs on every host. It watches the APIServer for changes to Services and Endpoints and creates forwarding rules that provide Service IPs and load balancing.

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
  echo ">>> ${all_ip}"
  scp kubernetes/server/bin/kube-proxy root@${all_ip}:/opt/k8s/bin/
  ssh root@${all_ip} "chmod +x /opt/k8s/bin/*"
done

1) Create the Kube-Proxy certificate and key

Create the Kube-Proxy certificate signing request file

[root@k8s-master01 work]# cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "k8s",

"OU": "System"

}

]

}

EOF

2) Generate the certificate and key

[root@k8s-master01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

3) Create the Kubeconfig file

[root@k8s-master01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master01 work]# kubectl config set-credentials kube-proxy \
  --client-certificate=kube-proxy.pem \
  --client-key=kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master01 work]# kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master01 work]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
  echo ">>> ${all_ip}"
  scp kube-proxy.kubeconfig root@${all_ip}:/etc/kubernetes/
done

4) Create the Kube-Proxy configuration file

[root@k8s-master01 work]# cat > kube-proxy-config.yaml.template << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
clientConnection:
  burst: 200
  kubeconfig: "/etc/kubernetes/kube-proxy.kubeconfig"
  qps: 100
bindAddress: ##ALL_IP##
healthzBindAddress: ##ALL_IP##:10256
metricsBindAddress: ##ALL_IP##:10249
enableProfiling: true
clusterCIDR: ${CLUSTER_CIDR}
hostnameOverride: ##ALL_NAME##
mode: "ipvs"
portRange: ""
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF

[root@k8s-master01 work]# for (( i=0; i < 3; i++ ))
do
  echo ">>> ${ALL_NAMES[i]}"
  sed -e "s/##ALL_NAME##/${ALL_NAMES[i]}/" -e "s/##ALL_IP##/${ALL_IPS[i]}/" kube-proxy-config.yaml.template > kube-proxy-config-${ALL_NAMES[i]}.yaml.template
  scp kube-proxy-config-${ALL_NAMES[i]}.yaml.template root@${ALL_NAMES[i]}:/etc/kubernetes/kube-proxy-config.yaml
done
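The loop above hard-codes three hosts (`i < 3`). The sketch below pairs names and IPs without hard-coding the count, and fails loudly if the two lists differ in length. The `zip_pairs` helper is hypothetical. It is written in POSIX sh, so it takes space-separated lists rather than bash arrays; the host names and IPs in its test are illustrative only.

```shell
# Hypothetical helper: zip_pairs "NAME..." "IP..."
# Prints "name ip" lines pairing the two space-separated lists position by
# position; fails if the lists have different lengths.
zip_pairs() {
  names=$1 ips=$2
  n_names=0; for w in $names; do n_names=$((n_names + 1)); done
  n_ips=0; for w in $ips; do n_ips=$((n_ips + 1)); done
  if [ "$n_names" -ne "$n_ips" ]; then
    echo "mismatch: $n_names names vs $n_ips IPs" >&2
    return 1
  fi
  # Word splitting of $ips is intentional: load the IPs as positional args.
  set -- $ips
  for name in $names; do
    printf '%s %s\n' "$name" "$1"
    shift
  done
}
```

In bash, pass the arrays flattened into strings: `zip_pairs "${ALL_NAMES[*]}" "${ALL_IPS[*]}" | while read -r name ip; do ...; done`.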

5) Create the systemd unit file

[root@k8s-master01 work]# cat > kube-proxy.service << EOF
[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=${K8S_DIR}/kube-proxy
ExecStart=/opt/k8s/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy-config.yaml \
  --logtostderr=true \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  scp kube-proxy.service root@${all_name}:/etc/systemd/system/
done

6) Start and verify

[root@k8s-master01 work]# for all_ip in ${ALL_IPS[@]}
do
  echo ">>> ${all_ip}"
  ssh root@${all_ip} "mkdir -p ${K8S_DIR}/kube-proxy"
  ssh root@${all_ip} "modprobe ip_vs_rr"
  ssh root@${all_ip} "systemctl daemon-reload && systemctl enable kube-proxy --now"
done
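IPVS mode needs the `ip_vs` kernel module plus the module for the configured scheduler (`ip_vs_rr` for `scheduler: rr`), which is why the loop runs `modprobe ip_vs_rr`. The sketch below reads a `/proc/modules`-style file and lists any required module that is not loaded. `missing_modules` is a hypothetical check. Modules compiled into the kernel do not appear in `/proc/modules`, so treat a reported module as a hint rather than proof.

```shell
# Hypothetical helper: missing_modules MODULES_FILE MODULE...
# Prints each MODULE with no entry in MODULES_FILE (normally /proc/modules,
# whose first column is the module name); returns 1 if any are missing.
missing_modules() {
  modfile=$1
  shift
  rc=0
  for m in "$@"; do
    if ! awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$modfile"; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}
```

On a node, `missing_modules /proc/modules ip_vs ip_vs_rr` should print nothing once the loop above has run.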

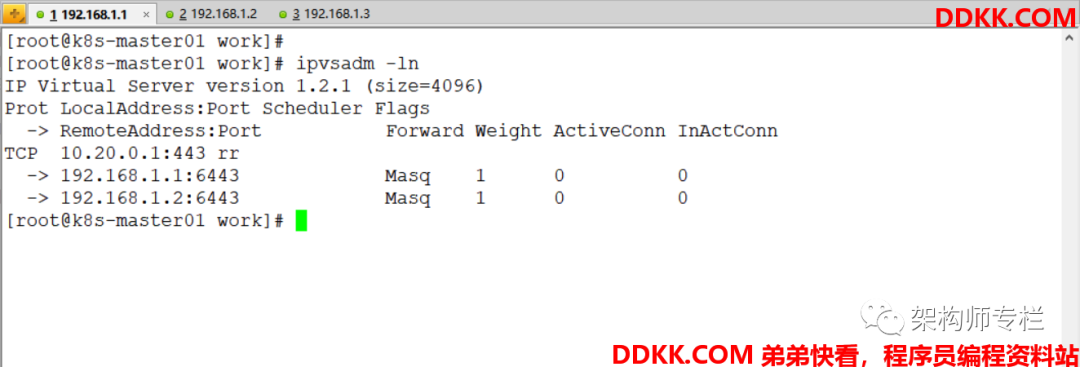

View the IPVS routing rules

[root@k8s-master01 work]# ipvsadm -ln

Problem: after kube-proxy is started, checking its status with systemctl shows the following message:

Not using --random-fully in the MASQUERADE rule for iptables because the local version of iptables does not support it

This message appears because the installed iptables does not support the --random-fully option (supported starting with version 1.6.2), so iptables needs to be upgraded.

[root@k8s-master01 work]# wget https://www.netfilter.org/projects/iptables/files/iptables-1.6.2.tar.bz2 --no-check-certificate

[root@k8s-master01 work]# for all_name in ${ALL_NAMES[@]}
do
  echo ">>> ${all_name}"
  scp iptables-1.6.2.tar.bz2 root@${all_name}:/root/
  ssh root@${all_name} "yum -y install gcc make libnftnl-devel libmnl-devel autoconf automake libtool bison flex libnetfilter_conntrack-devel libnetfilter_queue-devel libpcap-devel bzip2"
  ssh root@${all_name} "export LC_ALL=C && tar -xf iptables-1.6.2.tar.bz2 && cd iptables-1.6.2 && ./autogen.sh && ./configure && make && make install"
  ssh root@${all_name} "systemctl daemon-reload && systemctl restart kubelet && systemctl restart kube-proxy"
done
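You can check whether a node actually needs this rebuild, or confirm that the upgrade took effect. The version reported by `iptables --version` (for example `iptables v1.4.21`) can be compared with 1.6.2. The sketch below does this in POSIX sh; the helper names are hypothetical. Dot-separated fields are compared numerically, so 1.10.0 counts as newer than 1.6.2.

```shell
# Hypothetical helper: version_ge A B -> exit status 0 if version A >= version B.
# Fields are compared numerically; missing fields count as 0.
version_ge() {
  awk -v a="$1" -v b="$2" 'BEGIN {
    na = split(a, x, "."); nb = split(b, y, ".")
    n = (na > nb) ? na : nb
    for (i = 1; i <= n; i++) {
      if ((x[i] + 0) > (y[i] + 0)) exit 0
      if ((x[i] + 0) < (y[i] + 0)) exit 1
    }
    exit 0
  }'
}

# Hypothetical helper: extract "1.4.21" from "iptables v1.4.21"
# (also handles output such as "iptables v1.8.4 (legacy)").
iptables_version() {
  sed -n 's/^iptables v\([0-9.]*\).*/\1/p'
}
```

On a node: `version_ge "$(iptables --version | iptables_version)" 1.6.2 || echo "iptables needs upgrading"`.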

6. Install the CoreDNS add-on

1) Modify the CoreDNS configuration

[root@k8s-master01 ~]# cd /opt/k8s/work/kubernetes/cluster/addons/dns/coredns

[root@k8s-master01 coredns]# cp coredns.yaml.base coredns.yaml

[root@k8s-master01 coredns]# sed -i -e "s/__PILLAR__DNS__DOMAIN__/${CLUSTER_DNS_DOMAIN}/" -e "s/__PILLAR__DNS__SERVER__/${CLUSTER_DNS_SVC_IP}/" -e "s/__PILLAR__DNS__MEMORY__LIMIT__/200Mi/" coredns.yaml

2) Create and start CoreDNS

Configure the scheduling policy

[root@k8s-master01 coredns]# kubectl label nodes k8s-master01 node-role.kubernetes.io/master=true

[root@k8s-master01 coredns]# kubectl label nodes k8s-master02 node-role.kubernetes.io/master=true

[root@k8s-master01 coredns]# vim coredns.yaml

......
apiVersion: apps/v1
kind: Deployment
......
spec:
  replicas: 2 # run two replicas
  ......
      tolerations:
        - key: "node-role.kubernetes.io/master"
          operator: "Equal"
          value: ""
          effect: NoSchedule
      nodeSelector:
        node-role.kubernetes.io/master: "true"
......

[root@k8s-master01 coredns]# kubectl create -f coredns.yaml

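[root@k8s-master01 coredns]# kubectl describe pod Pod-Name -n kube-system # replace Pod-Name with the name of your CoreDNS Pod

Because the manifest uses the official K8s image (hosted on k8s.gcr.io, outside China), the image pull may fail with errors such as: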

Normal BackOff 72s (x6 over 3m47s) kubelet, k8s-master01 Back-off pulling image "k8s.gcr.io/coredns:1.6.5"

Warning Failed 57s (x7 over 3m47s) kubelet, k8s-master01 Error: ImagePullBackOff

- If this happens, pull the image from another registry and re-tag it, on every node where CoreDNS may be scheduled.

For example, instead of docker pull k8s.gcr.io/coredns:1.6.5, run:

docker pull registry.aliyuncs.com/google_containers/coredns:1.6.5

docker tag registry.aliyuncs.com/google_containers/coredns:1.6.5 k8s.gcr.io/coredns:1.6.5

3) Verify

[root@k8s-master01 coredns]# kubectl run -it --rm test-dns --image=busybox:1.28.4 sh

If you don't see a command prompt, try pressing enter.

/ #

/ # nslookup kubernetes

Server: 10.20.0.254

Address 1: 10.20.0.254 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.20.0.1 kubernetes.default.svc.cluster.local

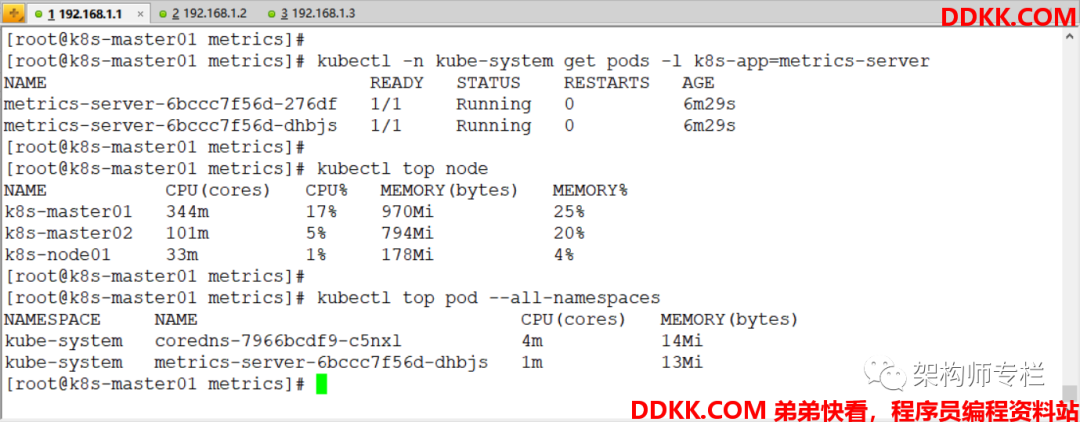
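The `kubernetes` Service always receives the first address of the service CIDR, which is why `nslookup` returns 10.20.0.1 here. That suggests a `SERVICE_CIDR` starting at 10.20.0.0; the actual value comes from the environment variables defined earlier in this guide. The POSIX sketch below computes that address for an IPv4 CIDR. The function name is hypothetical.

```shell
# Hypothetical helper: first_service_ip CIDR
# Prints network address + 1, i.e. the IP assigned to the "kubernetes" Service.
first_service_ip() {
  addr=${1%/*}
  bits=${1#*/}
  old_ifs=$IFS
  IFS=.
  set -- $addr          # split the dotted quad into $1..$4
  IFS=$old_ifs
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
```

For example, `first_service_ip "${SERVICE_CIDR}"` should match the address `nslookup kubernetes` returns.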

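7. Install the Dashboard

First, deploy metrics-server, which provides the resource metrics API (metrics.k8s.io) that the Dashboard's metrics scraper reads: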

[root@k8s-master01 coredns]# cd /opt/k8s/work/

[root@k8s-master01 work]# mkdir metrics

[root@k8s-master01 work]# cd metrics/

[root@k8s-master01 metrics]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.3.6/components.yaml

[root@k8s-master01 metrics]# vim components.yaml

......
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  replicas: 2 # change the replica count
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      hostNetwork: true # use the host network
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: registry.aliyuncs.com/google_containers/metrics-server-amd64:v0.3.6 # change the image name
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-insecure-tls # added
          - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP # added
......

[root@k8s-master01 metrics]# kubectl create -f components.yaml

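Verify that metrics-server is working (for example, kubectl top nodes should print CPU and memory usage for each node).

1) Create the certificate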

[root@k8s-master01 metrics]# cd /opt/k8s/work/

[root@k8s-master01 work]# mkdir -p /opt/k8s/work/dashboard/certs

[root@k8s-master01 work]# openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/C=CN/ST=ZheJiang/L=HangZhou/O=Xianghy/OU=Xianghy/CN=k8s.odocker.com"

[root@k8s-master01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "mkdir -p /opt/k8s/work/dashboard/certs"
  scp tls.* root@${master_ip}:/opt/k8s/work/dashboard/certs/
done

2) Modify the Dashboard configuration

Create the Secret manually

[root@k8s-master01 ~]# kubectl create namespace kubernetes-dashboard

[root@k8s-master01 ~]# kubectl create secret generic kubernetes-dashboard-certs --from-file=/opt/k8s/work/dashboard/certs -n kubernetes-dashboard

Modify the Dashboard configuration (adapted from the upstream Dashboard YAML):

[root@k8s-master01 work]# cd dashboard/

[root@k8s-master01 dashboard]# vim dashboard.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30080
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta8
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            - --tls-key-file=tls.key
            - --tls-cert-file=tls.crt
            - --token-ttl=3600
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.1
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

[root@k8s-master01 dashboard]# kubectl create -f dashboard.yaml

Create an administrator account

[root@k8s-master01 dashboard]# kubectl create serviceaccount admin-user -n kubernetes-dashboard

[root@k8s-master01 dashboard]# kubectl create clusterrolebinding admin-user --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:admin-user

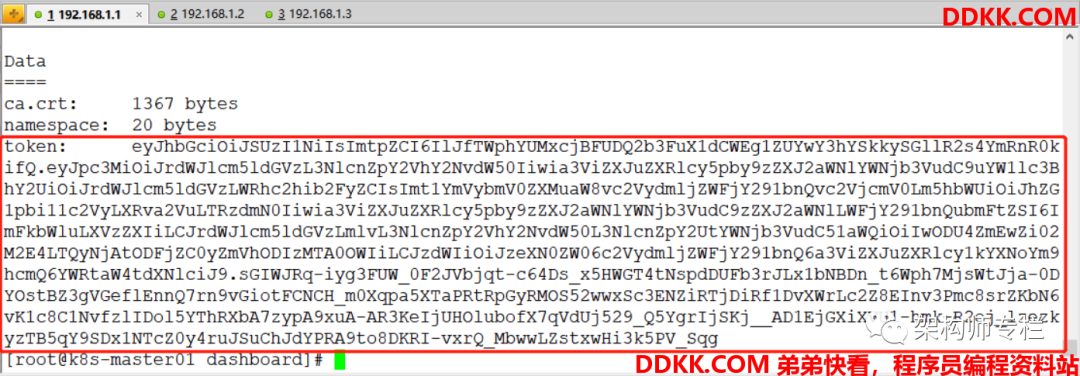

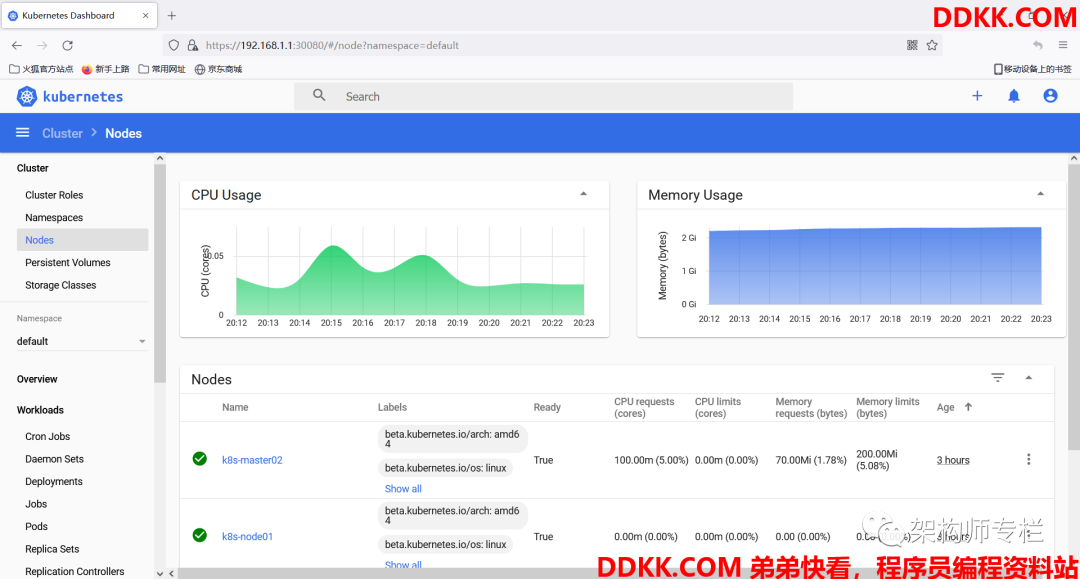

3) Verify

Get the login token

[root@k8s-master01 dashboard]# kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
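`kubectl describe secret` prints several fields (ca.crt, namespace, token), but the Dashboard login page needs only the value on the `token:` line. The sketch below prints just that value. It is a small hypothetical filter, not part of kubectl.

```shell
# Hypothetical helper: read `kubectl describe secret` output on stdin and
# print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Append `| extract_token` to the command above to get a string that can be pasted directly into the Dashboard's token login field.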

Source:

blog.csdn.net/weixin_46902396/article/details/122303350

https://mp.weixin.qq.com/s/-viVAlWgMTcSk76unuQ-4A