Installing Spark 2.4.5

1. Download the Spark package

https://mirror.bit.edu.cn/apache/spark/spark-2.4.5/spark-2.4.5-bin-without-hadoop.tgz
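
Mirrors tend to carry only current releases, so the link above may stop resolving for 2.4.5. A minimal sketch of a fallback, assuming the Apache archive keeps its usual `dist/spark/spark-<version>/` layout; `fetch_spark` and the variable names are hypothetical helpers, not part of the original steps:

```shell
SPARK_VERSION=2.4.5
SPARK_PKG="spark-${SPARK_VERSION}-bin-without-hadoop.tgz"
# Assumption: the Apache archive layout, used as a fallback for the mirror.
SPARK_URL="https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/${SPARK_PKG}"

# Download into the given directory (defaults to ~/Downloads, the path used by the scp step).
fetch_spark() {
  dest="${1:-$HOME/Downloads}"
  mkdir -p "$dest" && wget -c -P "$dest" "$SPARK_URL"
}

# Print the URL; call fetch_spark to actually download.
echo "$SPARK_URL"
```

Calling `fetch_spark` with no argument would place the archive where the `scp` command in step 2 expects it.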

2. Upload to the server

scp ~/Downloads/spark-2.4.5-bin-without-hadoop.tgz root@hadoop003:/root

3. Extract the archive

[root@hadoop003 ~]# tar -xvf spark-2.4.5-bin-without-hadoop.tgz -C /usr/local/cluster/

[root@hadoop003 ~]# chown -R hadoop:hadoop /usr/local/cluster/spark-2.4.5-bin-without-hadoop/

4. Edit the configuration file

# In the conf directory

[hadoop@hadoop003 conf]$ cp spark-env.sh.template spark-env.sh

[hadoop@hadoop003 conf]$ vim spark-env.sh

export SPARK_DIST_CLASSPATH=$(hadoop classpath)

export JAVA_HOME=/usr/local/java/jdk1.8.0_251

export SPARK_MASTER_HOST=hadoop003

export SPARK_MASTER_PORT=7077

export HADOOP_CONF_DIR=/usr/local/cluster/hadoop-2.9.2/etc/hadoop
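
Spark reads `HADOOP_CONF_DIR` to locate the Hadoop client configuration, which is how `--master yarn` finds the ResourceManager, so the variable should name the directory that actually holds the `*-site.xml` files. The check below is only a sketch: `check_hadoop_conf_dir` is a hypothetical helper, and in a stock Hadoop 2.x tarball the directory is usually `etc/hadoop` under the install root, which may differ on your cluster.

```shell
# Succeed only if the directory contains the site files a YARN client needs.
check_hadoop_conf_dir() {
  dir="$1"
  for f in core-site.xml yarn-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing $dir/$f" >&2
      return 1
    fi
  done
  echo "ok: $dir"
}

# Check the current setting, if there is one, without aborting the shell.
if [ -n "${HADOOP_CONF_DIR:-}" ]; then
  check_hadoop_conf_dir "$HADOOP_CONF_DIR" || true
fi
```

Running it after sourcing `spark-env.sh` gives a quick signal before the first `spark-submit` to YARN.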

5. Configure SPARK_HOME

vim /etc/profile

export SPARK_HOME=/usr/local/cluster/spark-2.4.5-bin-without-hadoop

export PATH=$PATH:$SPARK_HOME/bin
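
Changes to /etc/profile only reach shells started afterwards; an existing session needs `source /etc/profile` first. A small sketch to confirm the result: `on_path` is a hypothetical helper, and the two exports simply repeat the lines above so that the block runs on its own.

```shell
# Same values as the /etc/profile entries above.
export SPARK_HOME=/usr/local/cluster/spark-2.4.5-bin-without-hadoop
export PATH=$PATH:$SPARK_HOME/bin

# Return 0 if the given directory is an entry of PATH.
on_path() {
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

if on_path "$SPARK_HOME/bin"; then
  echo "spark bin is on PATH"
else
  echo "spark bin is missing from PATH"
fi
```

This only checks the PATH entry; whether `spark-shell` actually exists there still depends on step 3.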

6. Run spark-shell

[hadoop@hadoop003 spark-2.4.5-bin-without-hadoop]$ spark-shell

20/05/31 21:11:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop003:4040

Spark context available as 'sc' (master = local[*], app id = local-1590930684369).

Spark session available as 'spark'.

Welcome to

      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.4.5
      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_251)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

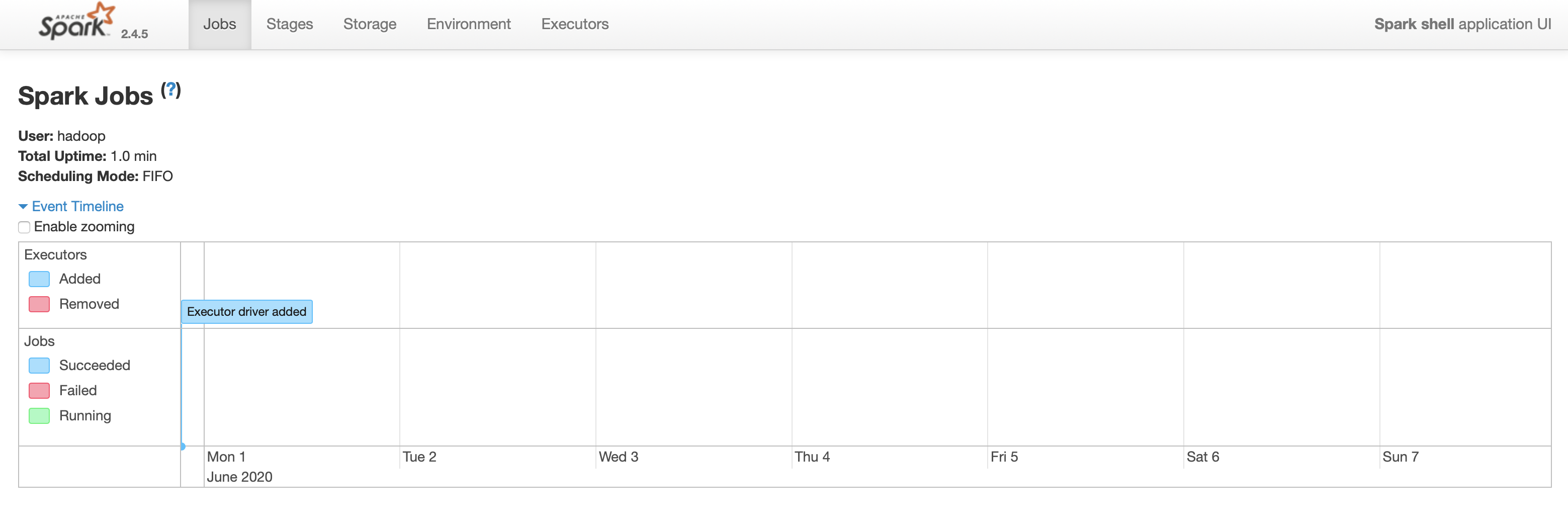

7. View the web UI

8. Run SparkPi

[hadoop@hadoop003 spark-2.4.5-bin-without-hadoop]$ run-example SparkPi

20/05/31 21:13:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

20/05/31 21:13:48 INFO spark.SparkContext: Running Spark version 2.4.5

20/05/31 21:13:48 INFO spark.SparkContext: Submitted application: Spark Pi

20/05/31 21:13:48 INFO spark.SecurityManager: Changing view acls to: hadoop

20/05/31 21:13:48 INFO spark.SecurityManager: Changing modify acls to: hadoop

20/05/31 21:13:48 INFO spark.SecurityManager: Changing view acls groups to:

20/05/31 21:13:48 INFO spark.SecurityManager: Changing modify acls groups to:

20/05/31 21:13:48 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

20/05/31 21:13:49 INFO util.Utils: Successfully started service 'sparkDriver' on port 37626.

20/05/31 21:13:49 INFO spark.SparkEnv: Registering MapOutputTracker

20/05/31 21:13:49 INFO spark.SparkEnv: Registering BlockManagerMaster

20/05/31 21:13:49 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

20/05/31 21:13:49 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

20/05/31 21:13:49 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-04f06caf-1022-4fde-b0f9-ce66cc334910

20/05/31 21:13:49 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

20/05/31 21:13:49 INFO spark.SparkEnv: Registering OutputCommitCoordinator

20/05/31 21:13:49 INFO util.log: Logging initialized @2139ms

20/05/31 21:13:49 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

20/05/31 21:13:49 INFO server.Server: Started @2207ms

20/05/31 21:13:49 INFO server.AbstractConnector: Started ServerConnector@411291e5{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

20/05/31 21:13:49 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5a101b1c{/jobs,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2234078{/jobs/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5ec77191{/jobs/job,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c68a5f8{/jobs/job/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@69c6161d{/stages,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3aefae67{/stages/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2e1792e7{/stages/stage,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@796d3c9f{/stages/stage/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6bff19ff{/stages/pool,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@41e1455d{/stages/pool/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4e558728{/storage,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5eccd3b9{/storage/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4d6f197e{/storage/rdd,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6ef7623{/storage/rdd/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@64e1dd11{/environment,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5c089b2f{/environment/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6999cd39{/executors,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@14bae047{/executors/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7ed9ae94{/executors/threadDump,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@66908383{/executors/threadDump/json,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@41477a6d{/static,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@54dcbb9f{/,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@74fef3f7{/api,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5bb8f9e2{/jobs/job/kill,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6a933be2{/stages/stage/kill,null,AVAILABLE,@Spark}

20/05/31 21:13:49 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://hadoop003:4040

20/05/31 21:13:49 INFO spark.SparkContext: Added JAR file:///usr/local/cluster/spark-2.4.5-bin-without-hadoop/examples/jars/spark-examples_2.11-2.4.5.jar at spark://hadoop003:37626/jars/spark-examples_2.11-2.4.5.jar with timestamp 1590930829656

20/05/31 21:13:49 INFO spark.SparkContext: Added JAR file:///usr/local/cluster/spark-2.4.5-bin-without-hadoop/examples/jars/scopt_2.11-3.7.0.jar at spark://hadoop003:37626/jars/scopt_2.11-3.7.0.jar with timestamp 1590930829656

20/05/31 21:13:49 INFO executor.Executor: Starting executor ID driver on host localhost

20/05/31 21:13:49 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 36039.

20/05/31 21:13:49 INFO netty.NettyBlockTransferService: Server created on hadoop003:36039

20/05/31 21:13:49 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

20/05/31 21:13:49 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, hadoop003, 36039, None)

20/05/31 21:13:49 INFO storage.BlockManagerMasterEndpoint: Registering block manager hadoop003:36039 with 413.9 MB RAM, BlockManagerId(driver, hadoop003, 36039, None)

20/05/31 21:13:49 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, hadoop003, 36039, None)

20/05/31 21:13:49 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, hadoop003, 36039, None)

20/05/31 21:13:50 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7d0cc890{/metrics/json,null,AVAILABLE,@Spark}

20/05/31 21:13:50 INFO spark.SparkContext: Starting job: reduce at SparkPi.scala:38

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Got job 0 (reduce at SparkPi.scala:38) with 2 output partitions

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at SparkPi.scala:38)

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Parents of final stage: List()

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Missing parents: List()

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34), which has no missing parents

20/05/31 21:13:50 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 2.0 KB, free 413.9 MB)

20/05/31 21:13:50 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 1381.0 B, free 413.9 MB)

20/05/31 21:13:50 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on hadoop003:36039 (size: 1381.0 B, free: 413.9 MB)

20/05/31 21:13:50 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1163

20/05/31 21:13:50 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at SparkPi.scala:34) (first 15 tasks are for partitions Vector(0, 1))

20/05/31 21:13:50 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

20/05/31 21:13:50 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, executor driver, partition 0, PROCESS_LOCAL, 7866 bytes)

20/05/31 21:13:50 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)

20/05/31 21:13:50 INFO executor.Executor: Fetching spark://hadoop003:37626/jars/scopt_2.11-3.7.0.jar with timestamp 1590930829656

20/05/31 21:13:50 INFO client.TransportClientFactory: Successfully created connection to hadoop003/192.168.1.15:37626 after 47 ms (0 ms spent in bootstraps)

20/05/31 21:13:50 INFO util.Utils: Fetching spark://hadoop003:37626/jars/scopt_2.11-3.7.0.jar to /tmp/spark-e0b138c7-5a4f-40c6-a31d-7acb008eec01/userFiles-18990e32-64a5-4897-8246-e86a38f90378/fetchFileTemp6342395058100518706.tmp

20/05/31 21:13:51 INFO executor.Executor: Adding file:/tmp/spark-e0b138c7-5a4f-40c6-a31d-7acb008eec01/userFiles-18990e32-64a5-4897-8246-e86a38f90378/scopt_2.11-3.7.0.jar to class loader

20/05/31 21:13:51 INFO executor.Executor: Fetching spark://hadoop003:37626/jars/spark-examples_2.11-2.4.5.jar with timestamp 1590930829656

20/05/31 21:13:51 INFO util.Utils: Fetching spark://hadoop003:37626/jars/spark-examples_2.11-2.4.5.jar to /tmp/spark-e0b138c7-5a4f-40c6-a31d-7acb008eec01/userFiles-18990e32-64a5-4897-8246-e86a38f90378/fetchFileTemp3842024603751339937.tmp

20/05/31 21:13:51 INFO executor.Executor: Adding file:/tmp/spark-e0b138c7-5a4f-40c6-a31d-7acb008eec01/userFiles-18990e32-64a5-4897-8246-e86a38f90378/spark-examples_2.11-2.4.5.jar to class loader

20/05/31 21:13:51 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 867 bytes result sent to driver

20/05/31 21:13:51 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, executor driver, partition 1, PROCESS_LOCAL, 7866 bytes)

20/05/31 21:13:51 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1)

20/05/31 21:13:51 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 392 ms on localhost (executor driver) (1/2)

20/05/31 21:13:51 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 824 bytes result sent to driver

20/05/31 21:13:51 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 75 ms on localhost (executor driver) (2/2)

20/05/31 21:13:51 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

20/05/31 21:13:51 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at SparkPi.scala:38) finished in 0.663 s

20/05/31 21:13:51 INFO scheduler.DAGScheduler: Job 0 finished: reduce at SparkPi.scala:38, took 0.718720 s

Pi is roughly 3.1461157305786527

20/05/31 21:13:51 INFO server.AbstractConnector: Stopped Spark@411291e5{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

20/05/31 21:13:51 INFO ui.SparkUI: Stopped Spark web UI at http://hadoop003:4040

20/05/31 21:13:51 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

20/05/31 21:13:51 INFO memory.MemoryStore: MemoryStore cleared

20/05/31 21:13:51 INFO storage.BlockManager: BlockManager stopped

20/05/31 21:13:51 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

20/05/31 21:13:51 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

20/05/31 21:13:51 INFO spark.SparkContext: Successfully stopped SparkContext

20/05/31 21:13:51 INFO util.ShutdownHookManager: Shutdown hook called

20/05/31 21:13:51 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-e0b138c7-5a4f-40c6-a31d-7acb008eec01

20/05/31 21:13:51 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-2e0ec6df-a085-4008-9f98-0e3e78e81830

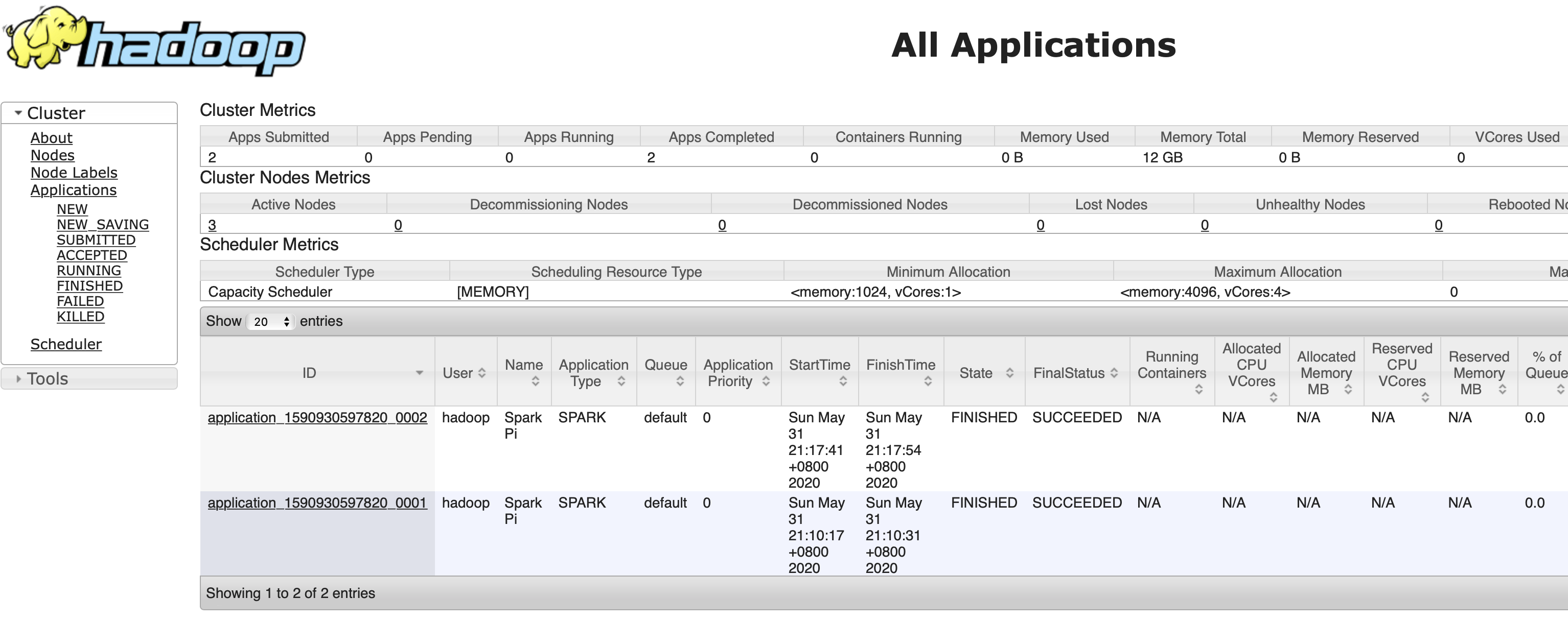
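
The "Pi is roughly" line is a Monte Carlo estimate: the example samples random points in a square and counts how many fall inside the inscribed circle, which is why the value differs from run to run. The sketch below reproduces the idea on a single machine with awk, as an illustration rather than the example's actual code; `estimate_pi`, its seed argument, and the sample count are assumptions.

```shell
# Estimate pi from n random points in the square [-1, 1] x [-1, 1].
# The fraction landing inside the unit circle approaches pi / 4.
estimate_pi() {
  awk -v n="$1" -v seed="${2:-1}" 'BEGIN {
    srand(seed)
    hits = 0
    for (i = 0; i < n; i++) {
      x = 2 * rand() - 1
      y = 2 * rand() - 1
      if (x * x + y * y <= 1) hits++
    }
    printf "%.6f\n", 4 * hits / n
  }'
}

echo "Pi is roughly $(estimate_pi 200000)"
```

Spark spreads the same sampling over partitions (two in the log above) and sums the hits with a reduce.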

9. Run on YARN

[hadoop@hadoop003 spark-2.4.5-bin-without-hadoop]$ spark-submit --class org.apache.spark.examples.SparkPi \

> --master yarn \

> --deploy-mode client \

> --driver-memory 1g \

> --executor-memory 1g \

> --executor-cores 1 \

> examples/jars/spark-examples*.jar \

> 10

20/05/31 21:17:33 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

20/05/31 21:17:34 INFO spark.SparkContext: Running Spark version 2.4.5

20/05/31 21:17:34 INFO spark.SparkContext: Submitted application: Spark Pi

20/05/31 21:17:34 INFO spark.SecurityManager: Changing view acls to: hadoop

20/05/31 21:17:34 INFO spark.SecurityManager: Changing modify acls to: hadoop

20/05/31 21:17:34 INFO spark.SecurityManager: Changing view acls groups to:

20/05/31 21:17:34 INFO spark.SecurityManager: Changing modify acls groups to:

20/05/31 21:17:34 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(hadoop); groups with view permissions: Set(); users with modify permissions: Set(hadoop); groups with modify permissions: Set()

20/05/31 21:17:34 INFO util.Utils: Successfully started service 'sparkDriver' on port 46839.

20/05/31 21:17:34 INFO spark.SparkEnv: Registering MapOutputTracker

20/05/31 21:17:34 INFO spark.SparkEnv: Registering BlockManagerMaster

20/05/31 21:17:34 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

20/05/31 21:17:34 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

20/05/31 21:17:34 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-292ea51c-82a2-4274-b43b-cba4fab687a0

20/05/31 21:17:34 INFO memory.MemoryStore: MemoryStore started with capacity 413.9 MB

20/05/31 21:17:34 INFO spark.SparkEnv: Registering OutputCommitCoordinator

20/05/31 21:17:34 INFO util.log: Logging initialized @1968ms

20/05/31 21:17:34 INFO server.Server: jetty-9.3.z-SNAPSHOT, build timestamp: unknown, git hash: unknown

20/05/31 21:17:35 INFO server.Server: Started @2160ms

20/05/31 21:17:35 INFO server.AbstractConnector: Started ServerConnector@436390f4{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

10. View the application in the YARN web UI

11. Fixing an error on YARN

20/05/31 21:03:34 ERROR client.TransportClient: Failed to send RPC RPC 5904703606391278935 to /192.168.1.14:40174: java.nio.channels.ClosedChannelException

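Cause: the container was allocated too little memory, so YARN killed the Spark application.

Edit yarn-site.xml to disable the NodeManager's physical and virtual memory checks: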

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

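Copy the updated file to the other nodes, then restart YARN so that the NodeManagers pick up the change:

scp yarn-site.xml hadoop002:$PWD

scp yarn-site.xml hadoop003:$PWD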
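
For context on why a 1g executor can trip these checks, the arithmetic below sketches how large a container Spark asks YARN for. It assumes Spark 2.4 defaults (memory overhead of 10% of executor memory, with a 384 MB floor) and a scheduler that rounds requests up to a multiple of `yarn.scheduler.minimum-allocation-mb` (default 1024); `container_mb` is a hypothetical helper, and real clusters may be configured differently.

```shell
# Container size in MB for a given executor memory in MB.
# Arguments: executor memory, optional minimum allocation (defaults to 1024).
container_mb() {
  mem_mb="$1"
  min_alloc_mb="${2:-1024}"
  # Assumed Spark 2.4 default: overhead = max(384, 10% of executor memory).
  overhead=$(( mem_mb / 10 ))
  if [ "$overhead" -lt 384 ]; then
    overhead=384
  fi
  request=$(( mem_mb + overhead ))
  # Round up to the next multiple of the minimum allocation.
  echo $(( (request + min_alloc_mb - 1) / min_alloc_mb * min_alloc_mb ))
}

container_mb 1024   # prints 2048
```

The virtual memory check compares the process against the container size multiplied by `yarn.nodemanager.vmem-pmem-ratio` (default 2.1), which a JVM can exceed even when its heap is small. Raising `spark.executor.memoryOverhead` is a possible alternative to switching the checks off entirely.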
