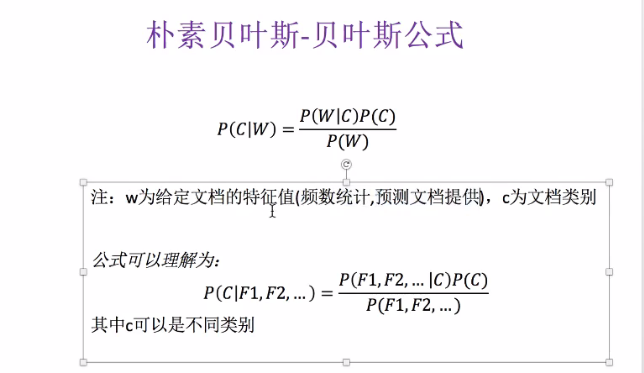

第七节 朴素贝叶斯算法

https://baike.baidu.com/item/%E6%9C%B4%E7%B4%A0%E8%B4%9D%E5%8F%B6%E6%96%AF/4925905

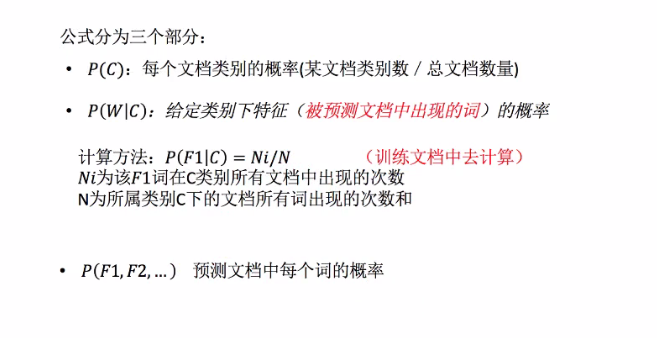

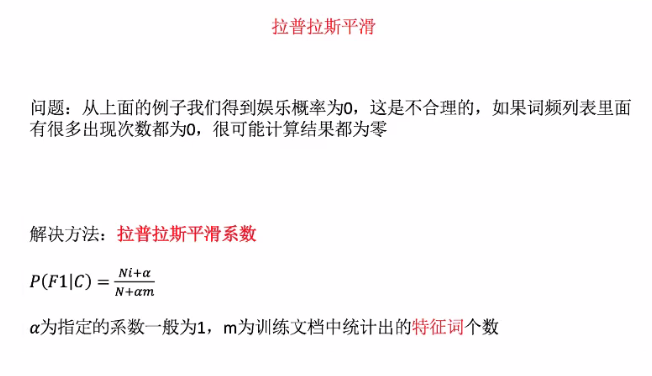

from sklearn.datasets import load_iris, fetch_20newsgroups from sklearn.model_selection import train_test_split from sklearn.feature_extraction.text import TfidfVectorizer from sklearn.naive_bayes import MultinomialNB # 朴素贝叶斯API # 朴素贝叶斯会受到特征之间相关性的影响,各特征相关性越强,预测效果越差,该算法使用简单不需要调参,有坚实的统计学基础有稳定的分类效率,对缺失数据不太敏感,常用于文本分类 def naviebayes(): '''朴素贝叶斯''' news = fetch_20newsgroups(subset='all') # 进行训练集和测试集的分割,x特征值,y目标值 x_train, x_test, y_train, y_test = train_test_split(news.data, news.target, test_size=0.25) # 对数据集进行特征抽取 tf = TfidfVectorizer() # 以训练集当中的词列表进行每篇文章重要性统计['a', 'b', 'c', 'd'] x_train = tf.fit_transform(x_train) x_test = tf.transform(x_test) # 进行朴素贝叶斯算法预测,alpha拉普拉斯平滑系数 mlt = MultinomialNB(alpha=1.0) mlt.fit(x_train, y_train) y_predict = mlt.predict(x_test) sorce = mlt.score(x_test, y_test) if __name__ == "__main__": naviebayes()