3. scala-spark wordCount 案例

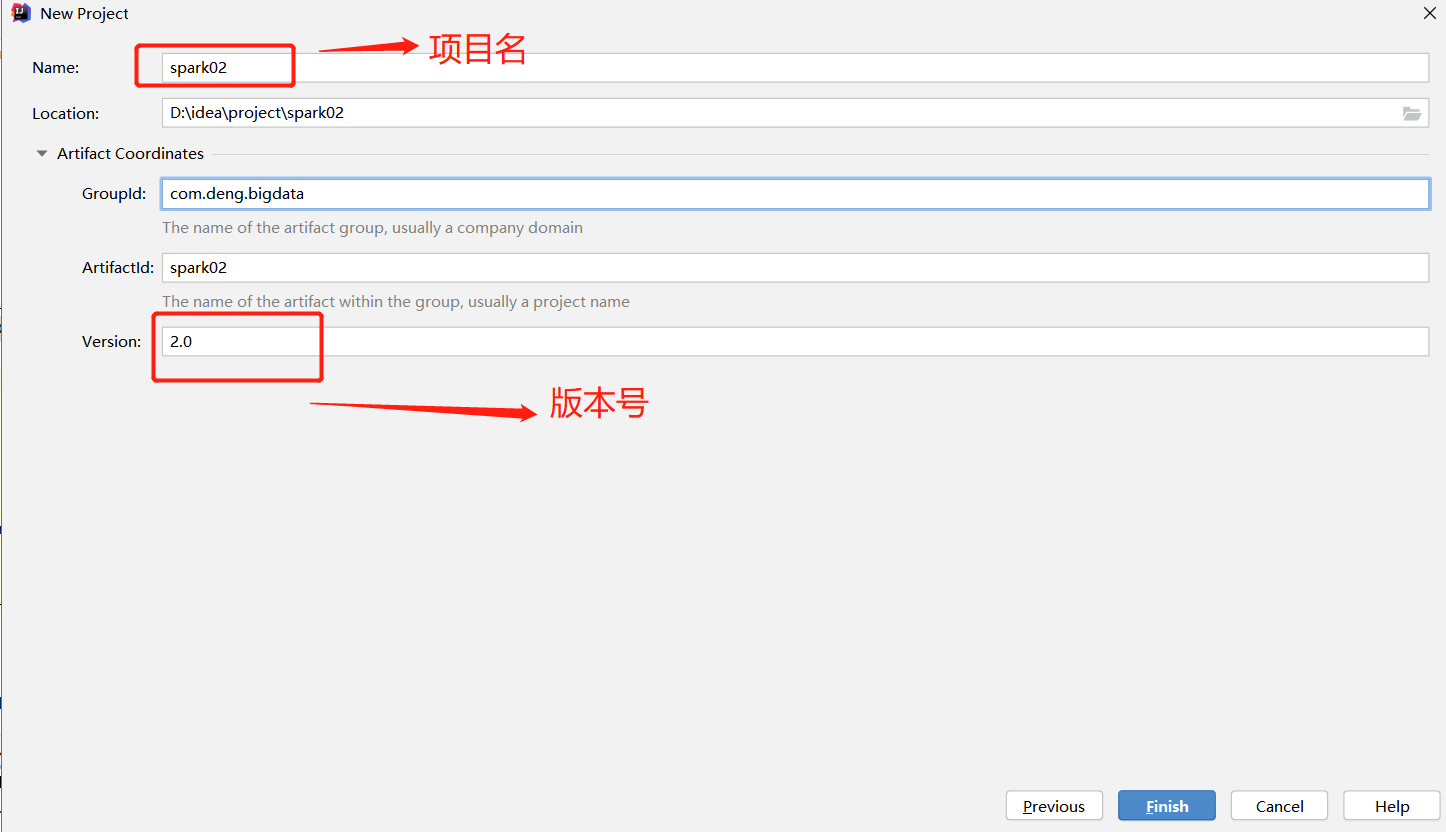

1. 创建maven 工程

2. 相关依赖和插件

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>2.1.1</version>

</dependency>

</dependencies>

<build>

<finalName>wordCount</finalName>

<plugins>

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>4.2.0</version>

<executions>

<execution>

<goals>

<goal>compile</goal>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-assembly-plugin</artifactId>

<version>3.1.0</version>

<configuration>

<archive>

<manifest>

<mainClass>wordCount</mainClass>

</manifest>

</archive>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

3. wordCount 案例

package com.atgu.bigdata.spark import org.apache.spark._ import org.apache.spark.rdd.RDD object wordCount extends App { // local模式 // 1.创建sparkConf 对象 val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("wordCount") // 2. 创建spark 上下文对象 val sc:SparkContext=new SparkContext(config = conf) // 3. 读取文件 val lines: RDD[String] = sc.textFile("file:///opt/data/1.txt") // 4. 切割单词 val words: RDD[String] = lines.flatMap(_.split(" ")) // words.collect().foreach(println) // map private val keycounts: RDD[(String, Int)] = words.map((_, 1)) // private val results: RDD[(String, Int)] = keycounts.reduceByKey(_ + _) private val res: Array[(String, Int)] = results.collect res.foreach(println) }

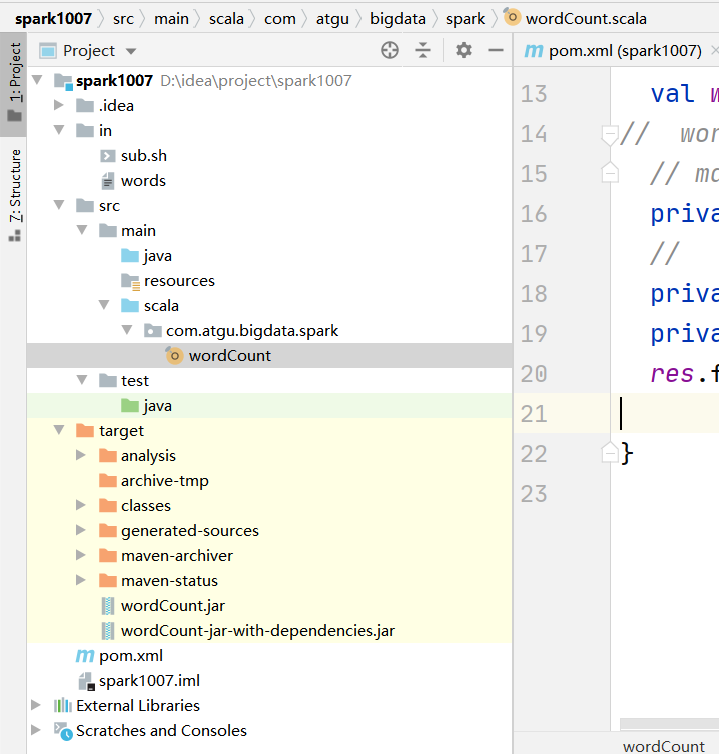

4. 项目目录结构

有疑问可以加wx:18179641802,进行探讨

浙公网安备 33010602011771号

浙公网安备 33010602011771号