url = http://zst.aicai.com/ssq/openInfo/

体育彩票开奖信息:一种思路是正则Html,另一种相当于一个框架xml解析html. 两种方法没有优缺点,不能说那个方便,那个代码少就是容易。有精力还是要有正则扎实的基础才好。

import urllib.request import urllib.parse import re import urllib.request,urllib.parse,http.cookiejar def getHtml(url): cj=http.cookiejar.CookieJar() opener=urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj)) opener.addheaders=[('User-Agent','Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.101 Safari/537.36'),('Cookie','4564564564564564565646540')] urllib.request.install_opener(opener) html_bytes = urllib.request.urlopen( url ).read() html_string = html_bytes.decode( 'utf-8' ) return html_string #url = http://zst.aicai.com/ssq/openInfo/ #最终输出结果格式如:2015075期开奖号码:6,11,13,19,21,32, 蓝球:4 html = getHtml("http://zst.aicai.com/ssq/openInfo/") #<table class="fzTab nbt"> </table> table = html[html.find('<table class="fzTab nbt">') : html.find('</table>')] #print (table) #<tr onmouseout="this.style.background=''" onmouseover="this.style.background='#fff7d8'"> #<tr \r\n\t\t onmouseout= tmp = table.split('<tr \r\n\t\t onmouseout=',1) #print(tmp) #print(len(tmp)) trs = tmp[1] tr = trs[: trs.find('</tr>')] #print(tr) number = tr.split('<td >')[1].split('</td>')[0] print(number + '期开奖号码:',end='') redtmp = tr.split('<td class="redColor sz12" >') reds = redtmp[1:len(redtmp)-1]#去掉第一个和最后一个没用的元素 #print(reds) for redstr in reds: print(redstr.split('</td>')[0] + ",",end='') print('蓝球:',end='') blue = tr.split('<td class="blueColor sz12" >')[1].split('</td>')[0] print(blue)

from bs4 import BeautifulSoup import urllib.request import urllib.parse import urllib.request,http.cookiejar def getHtml(url): cj = http.cookiejar.CookieJar() opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj)) opener.addheaders = [('User-Agent', 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.101 Safari/537.36'), ('Cookie', '4564564564564564565646540')] urllib.request.install_opener(opener) html_bytes = urllib.request.urlopen(url).read() html_string = html_bytes.decode('utf-8') return html_string html_doc = getHtml("http://zst.aicai.com/ssq/openInfo/") soup = BeautifulSoup(html_doc, 'html.parser') # print(soup.title) #table = soup.find_all('table', class_='fzTab') #print(table)#<tr onmouseout="this.style.background=''" 这种tr丢失了 tr = soup.find('tr',attrs={"onmouseout": "this.style.background=''"}) #print(tr) tds = tr.find_all('td') opennum = tds[0].get_text() #print(opennum) reds = [] for i in range(2,8): reds.append(tds[i].get_text()) #print(reds) blue = tds[8].get_text() #print(blue) #把list转换为字符串:(',').join(list) #最终输出结果格式如:2015075期开奖号码:6,11,13,19,21,32, 蓝球:4 print(opennum+'期开奖号码:'+ (',').join(reds)+", 蓝球:"+blue)

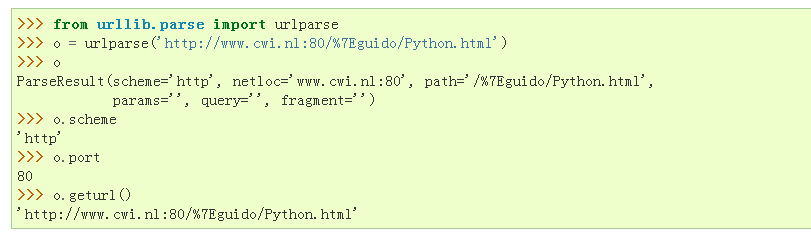

urllib.parse

故名思义,URL parsng ,url解析的意思。

URL解析功能集中在将URL字符串分割到其组件中,或将URL组件合并到URL字符串中。

1 urllib.parse.urlparse(urlstring, scheme='', allow_fragments=True)

from bs4 import BeautifulSoup import urllib.request import urllib.parse import urllib.request,http.cookiejar import requests url = "http://zst.aicai.com/ssq/openInfo/" res = requests.get(url) res.encoding = 'utf-8' soup = BeautifulSoup(res.text, 'html.parser') # print(soup.title) #table = soup.find_all('table', class_='fzTab') #print(table)#<tr onmouseout="this.style.background=''" 这种tr丢失了 tr = soup.find('tr',attrs={"onmouseout": "this.style.background=''"}) #print(tr) tds = tr.find_all('td') opennum = tds[0].get_text() #print(opennum) reds = [] for i in range(2,8): reds.append(tds[i].get_text()) #print(reds) blue = tds[8].get_text() #print(blue) #把list转换为字符串:(',').join(list) #最终输出结果格式如:2015075期开奖号码:6,11,13,19,21,32, 蓝球:4 print(opennum+'期开奖号码:'+ (',').join(reds)+", 蓝球:"+blue)

http://docs.python-requests.org/zh_CN/latest/user/quickstart.html

我感觉写getHtml()方法的人士java出身-。- , 也可以用上述,不用函数方法。

1 __author__ = 'Kming' 2 3 from bs4 import BeautifulSoup 4 import requests 5 url = "http://www.baidu.com" 6 res = requests.get(url) 7 res.encoding='utf-8' 8 soup = BeautifulSoup(res.text, 'html.parser') 9 10 for link in soup.find_all('a'): 11 print(link.get('href'))