Collecting K8S Logs with ELK (containerd Container Runtime), Part 2

Deploy filebeat

mkdir -p /data/yaml/k8s-logging/filebeat

cd /data/yaml/k8s-logging/filebeat

cat rbac.yaml

---
# Source: filebeat/templates/filebeat-service-account.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
  namespace: k8s-logging
  labels:
    k8s-app: filebeat
---
# Source: filebeat/templates/filebeat-role.yaml
# rbac.authorization.k8s.io/v1beta1 was removed in Kubernetes 1.22; use v1.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  # A ClusterRole is cluster-scoped, so it takes no namespace.
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""] # "" indicates the core API group
  resources:
  - namespaces
  - pods
  verbs:
  - get
  - watch
  - list
---
# Source: filebeat/templates/filebeat-role-binding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: k8s-logging
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io

kubectl apply -f rbac.yaml
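To confirm that the binding took effect, the permissions can be queried by impersonating the ServiceAccount. The following is a minimal sketch; the helper names `sa_subject` and `check_filebeat_rbac` are made up for illustration, and it assumes your own kubectl user is allowed to impersonate ServiceAccounts (cluster-admin normally is).

```shell
# Build the impersonation subject for a ServiceAccount.
# $1 = namespace, $2 = ServiceAccount name
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the filebeat ServiceAccount may perform each
# verb/resource pair granted by the ClusterRole in rbac.yaml.
check_filebeat_rbac() {
  subject="$(sa_subject k8s-logging filebeat)"
  for resource in pods namespaces; do
    for verb in get watch list; do
      printf '%s %s: ' "$verb" "$resource"
      kubectl auth can-i "$verb" "$resource" --as="$subject" --all-namespaces
    done
  done
}
```

Running `check_filebeat_rbac` prints one yes/no answer per combination.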

cat cm.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-config
  namespace: k8s-logging
  labels:
    k8s-app: filebeat
data:
  filebeat.yml: |-
    setup.ilm.enabled: false
    filebeat.inputs:
    - type: container
      paths:
        - /var/log/containers/*.log
      processors:
        - add_kubernetes_metadata:
            # Add Kubernetes metadata fields
            default_indexers.enabled: true
            default_matchers.enabled: true
            host: ${NODE_NAME}
            matchers:
            - logs_path:
                logs_path: "/var/log/containers/"
        - drop_fields:
            # Drop fields that are not needed
            fields: ["host", "tags", "ecs", "log", "prospector", "agent", "input", "beat", "offset"]
            ignore_missing: true
      multiline.pattern: '^[[:space:]]+(at|\.{3})\b|^Caused by:'
      multiline.negate: false
      multiline.match: after
      # The four options below are input-level settings, so they belong under the input.
      # How long after the last new log line the file handle is closed. The default is
      # 5 minutes; 1 minute releases handles sooner.
      close_inactive: 1m
      # Force-close the file handle if harvesting has still not finished after 3h.
      # This is the key setting for the stuck file handle problem.
      close_timeout: 3h
      # Worth setting as well. The default of 0 means entries for harvested files are
      # never removed from the registry, so the registry keeps growing and may
      # eventually cause problems.
      clean_inactive: 48h
      # Once clean_inactive is set, ignore_older must be set too, with
      # ignore_older < clean_inactive.
      ignore_older: 46h
    setup.template.name: "k8s"
    setup.template.pattern: "k8s-*"
    setup.template.enabled: false
    # Not needed on a first run; set to true only when the index template already
    # exists and has to be updated.
    setup.template.overwrite: false
    setup.template.settings:
      # Size this to the log volume. Indices are created daily, and if a day's logs
      # stay under 30 GB, one shard is enough.
      index.number_of_shards: 2
      # These logs are not critical, and on a single-node cluster replicas can
      # simply be set to 0.
      index.number_of_replicas: 0
    output.kafka:
      hosts: ['kafka-svc:9092']
      # Number of concurrent output workers
      worker: 20
      # Number of times a failed send is retried; the default is 3
      max_retries: 3
      # Maximum number of events batched into a single Kafka request (default 2048)
      bulk_max_size: 800
      # Only collect from pods in the matching namespaces, to avoid overloading the cluster
      topics:
        - topic: "k8s-%{[kubernetes.namespace]}-%{[kubernetes.container.name]}"
          when.equals:
            kubernetes.namespace: "pre-nengguan"
        - topic: "k8s-%{[kubernetes.namespace]}-%{[kubernetes.container.name]}"
          when.equals:
            kubernetes.namespace: "test-nengguan"
    setup.kibana:
      host: ':'
    # ILM policy file that controls log retention
    setup.ilm:
      policy_file: /etc/indice-lifecycle.json

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: filebeat-index-rules
  namespace: k8s-logging
  labels:
    k8s-app: filebeat
data:
  indice-lifecycle.json: |-
    {
      "policy": {
        "phases": {
          "hot": {
            "actions": {
              "rollover": {
                "max_size": "5GB",
                "max_age": "1d"
              }
            }
          },
          "delete": {
            "min_age": "5d",
            "actions": {
              "delete": {}
            }
          }
        }
      }
    }

kubectl apply -f cm.yaml
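The `topics` rules in cm.yaml name each Kafka topic after the namespace and container of the log source, and only for the two listed namespaces. The sketch below mirrors that naming rule in plain shell so you can predict which topics to look for later. The function name `expected_topic` and the container name `web` in the usage line are made up for illustration.

```shell
# Namespaces matched by the `when.equals` rules in cm.yaml.
ALLOWED_NAMESPACES="pre-nengguan test-nengguan"

# Print the topic expected for a namespace/container pair.
# Returns 1 when no topic rule applies to the namespace.
# $1 = namespace, $2 = container name
expected_topic() {
  for ns in $ALLOWED_NAMESPACES; do
    if [ "$1" = "$ns" ]; then
      printf 'k8s-%s-%s\n' "$1" "$2"
      return 0
    fi
  done
  return 1
}
```

For example, `expected_topic pre-nengguan web` prints `k8s-pre-nengguan-web`, while `expected_topic default web` prints nothing and returns a non-zero status.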

cat ds.yaml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: filebeat
  namespace: k8s-logging
  labels:
    k8s-app: filebeat
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
      - name: filebeat
        image: elastic/filebeat:7.4.2
        args: [
          "-c", "/etc/filebeat.yml",
          "-e",
        ]
        env:
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        securityContext:
          runAsUser: 0
        resources:
          requests:
            cpu: 100m
            memory: 500Mi
        volumeMounts:
        - name: config
          mountPath: /etc/filebeat.yml
          readOnly: true
          subPath: filebeat.yml
        - name: rules
          mountPath: /etc/indice-lifecycle.json
          readOnly: true
          subPath: indice-lifecycle.json
        - name: data
          mountPath: /usr/share/filebeat/data
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: varlog
          mountPath: /var/log
          readOnly: true
        - mountPath: /etc/localtime
          readOnly: true
          name: time-data
      volumes:
      - name: config
        configMap:
          defaultMode: 0600
          name: filebeat-config
      - name: rules
        configMap:
          defaultMode: 0600
          name: filebeat-index-rules
      # With containerd, the files in /var/log/containers are symlinks into
      # /var/log/pods, which the varlog mount below already covers.
      - name: varlibdockercontainers
        hostPath:
          path: /var/log/pods
      - name: varlog
        hostPath:
          path: /var/log
      - name: data
        hostPath:
          path: /var/lib/filebeat-data
          type: DirectoryOrCreate
      - name: time-data
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai

kubectl apply -f ds.yaml
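Before inspecting logs, it can help to wait until the DaemonSet has a ready pod on every node. A minimal sketch follows; the function name `wait_for_filebeat` and the 120s default timeout are arbitrary choices for illustration.

```shell
# Block until every filebeat pod of the DaemonSet is rolled out,
# or fail once the timeout expires.
# $1 = optional timeout (default 120s)
wait_for_filebeat() {
  kubectl -n k8s-logging rollout status daemonset/filebeat --timeout="${1:-120s}"
}
```

Call it as `wait_for_filebeat` or, for a slower cluster, `wait_for_filebeat 300s`.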

Check the logs

- Check whether the filebeat pods report any errors

- Check whether the corresponding topics exist in kafka
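Both checks can be scripted roughly as follows. This is a sketch under assumptions: the Kafka pod name `kafka-0` is hypothetical, `kafka-topics.sh` is assumed to be on the PATH inside that pod, and the `--bootstrap-server` flag requires Kafka 2.2 or later. Adjust these to match your own Kafka deployment.

```shell
NS=k8s-logging
# Hypothetical pod name; substitute the Kafka pod from your own deployment.
KAFKA_POD="${KAFKA_POD:-kafka-0}"

# List the filebeat pods per node, then print recent log lines that mention
# errors or warnings for each pod.
check_filebeat_pods() {
  kubectl -n "$NS" get pods -l k8s-app=filebeat -o wide
  for pod in $(kubectl -n "$NS" get pods -l k8s-app=filebeat -o name); do
    echo "== $pod"
    kubectl -n "$NS" logs "$pod" --tail=200 | grep -iE 'error|warn' \
      || echo "no error lines"
  done
}

# Keep only topics that follow the naming scheme configured in cm.yaml.
filter_k8s_topics() {
  grep -E '^k8s-(pre|test)-nengguan-'
}

# List the topics on the broker and filter them (assumes kafka-topics.sh is
# available inside the Kafka pod).
list_k8s_topics() {
  kubectl -n "$NS" exec "$KAFKA_POD" -- \
    kafka-topics.sh --bootstrap-server kafka-svc:9092 --list | filter_k8s_topics
}
```

Run `check_filebeat_pods` first. If `list_k8s_topics` prints nothing, either no pod in the two namespaces has logged yet or events are not reaching Kafka.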

Deploy elasticsearch

mkdir -p /data/yaml/k8s-logging/elasticsearch

cd /data/yaml/k8s-logging/elasticsearch

cat sts.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: k8s-logging
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: elasticsearch:7.4.2
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 100m
        ports:
        - containerPort: 9200
          name: rest
          protocol: TCP
        - containerPort: 9300
          name: inter-node
          protocol: TCP
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        - mountPath: /etc/localtime
          readOnly: true
          name: time-data
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: discovery.seed_hosts
          value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
        - name: cluster.initial_master_nodes
          value: "es-cluster-0,es-cluster-1,es-cluster-2"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
      volumes:
      - name: time-data
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai
      initContainers:
      - name: fix-permissions
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
        securityContext:
          privileged: true
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
      - name: increase-vm-max-map
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: es-rbd-sc
      resources:
        requests:
          storage: 6Gi

kubectl apply -f sts.yaml
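The value of `discovery.seed_hosts` in sts.yaml is not arbitrary: each StatefulSet pod is reachable as `<pod-name>.<serviceName>` inside its namespace, and pod names are the StatefulSet name plus an ordinal. If you change `replicas`, the list has to change with it. The sketch below generates the list for a given replica count; the function name `es_seed_hosts` is made up for illustration.

```shell
# Print the comma-separated seed host list for the StatefulSet es-cluster
# behind the headless Service elasticsearch.
# $1 = number of replicas
es_seed_hosts() {
  replicas="$1"
  i=0
  hosts=""
  while [ "$i" -lt "$replicas" ]; do
    # Append with a comma separator once the list is non-empty.
    hosts="${hosts:+$hosts,}es-cluster-$i.elasticsearch"
    i=$((i + 1))
  done
  printf '%s\n' "$hosts"
}
```

`es_seed_hosts 3` reproduces the value used in sts.yaml. These names only resolve once the headless Service from the next step exists.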

cat svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: k8s-logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node

kubectl apply -f svc.yaml

Check the es cluster

kubectl -n k8s-logging exec -it es-cluster-0 -- curl '127.0.0.1:9200/_cluster/health?pretty'

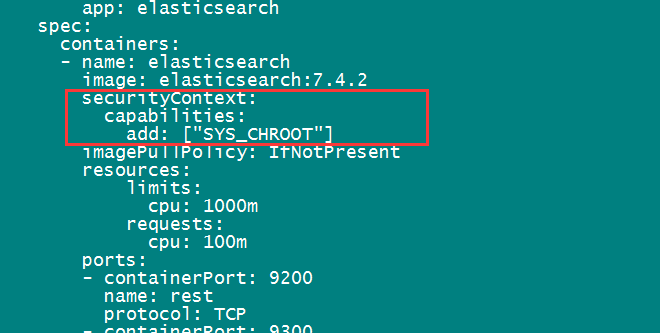
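For scripted checks, the `status` field is the part of the health response that matters (`green`, `yellow`, or `red`). The sketch below extracts it with `sed` so no JSON tool is needed inside the pod; the function names `health_status` and `es_health` are made up for illustration.

```shell
# Extract the "status" value from _cluster/health JSON read on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Query the first ES pod and print only the cluster status.
es_health() {
  kubectl -n k8s-logging exec es-cluster-0 -- \
    curl -s '127.0.0.1:9200/_cluster/health' | health_status
}
```

`es_health` prints a single word, which makes it easy to use in a wait loop such as `until [ "$(es_health)" = "green" ]; do sleep 5; done`.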

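Problem: on a k8s cluster whose container runtime is cri-o, the container fails with chroot: cannot change root directory to /: Operation not permitted

Solution: manually add the SYS_CHROOT capability to the elasticsearch container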

spec:
  containers:
  - name: elasticsearch
    image: elasticsearch:7.4.2
    securityContext:
      capabilities:
        add: ["SYS_CHROOT"]
    imagePullPolicy: IfNotPresent

