通过钉钉告警前一天的 MySQL 慢查询,排除已告警过的语句

业务需求

在获取前一天 MySQL 慢查的情况下,进一步做告警(https://www.cnblogs.com/klvchen/articles/13677698.html)

通过 xlsx 获取到 sqlhash ,排除之前已经通知过的告警,只发出新的慢查询 sql 到 jira 上,现版本只支持手动写入 jira

项目代码

app.py

from openpyxl import load_workbook

import redis

import datetime

import os

import dingtalk

# 需要修改的配置文件

#xls_patch = "d:\\Documents\\xls\\"

#txt_patch = "d:\\Documents\\xls\\txt\\"

#redis_host = "192.168.0.200"

xls_patch = "/export_xlsx/"

txt_patch = "/export_xlsx/txt/"

redis_host = "slow_sql_redis"

redis_port = 6379

redis_passwd = "AdmiN@123"

oneday = datetime.timedelta(days=4)

ding_talk_url = "http://192.168.0.200:81/export_xlsx/txt/"

today = datetime.date.today()

yesterday = today - oneday

def get_xls_filename():

filename = xls_patch + "rm-wz9lzm065d7c4b4a0_mysql_slow_sql_" + str(yesterday) + ".xlsx"

# print(filename)

return filename

def write_txt(txt_patch, text):

txt_filename = txt_patch + str(yesterday)+".txt"

with open(txt_filename, 'a', encoding='utf-8') as f:

f.write(text)

return txt_filename

def get_xls_info(filename):

if os.path.exists(filename):

workbook = load_workbook(filename)

sheet = workbook.active

row_length = sheet.max_row

for i in range(2, row_length + 1):

sql_cell = sheet['F' + str(i)]

sql_hash_cell = sheet['I' + str(i)]

# print(sql_cell.value)

# print(sql_hash_cell.value)

result = set_redis(sql_hash_cell.value, sql_cell.value)

if result:

fenge = "======================================\n"

text = "\n".join([sql_hash_cell.value, sql_cell.value, fenge])

txt_filename = write_txt(txt_patch, text)

save_redis()

if 'txt_filename' in vars() and os.path.exists(txt_filename):

#print("send dingding")

msg = "".join([ding_talk_url, txt_filename.replace(txt_patch, '')])

dingtalk.send_msg(msg)

else:

print("xlsx 不存在")

def get_redis_conn():

pool = redis.ConnectionPool(host=redis_host, port=redis_port, password=redis_passwd)

conn = redis.Redis(connection_pool=pool)

return conn

def get_redis():

conn = get_redis_conn()

print(conn.keys())

def set_redis(key, value):

conn = get_redis_conn()

result = conn.setnx(key, value)

# print(result)

return result

def save_redis():

conn = get_redis_conn()

conn.save()

if __name__ == '__main__':

# get_redis()

filename = get_xls_filename()

get_xls_info(filename)

dingtalk.py

import json

import requests

url = 'https://oapi.dingtalk.com/robot/send?access_token=xxxxxxxxxxxxxxxxxxxxx'

def send_msg(msg):

parameter = {

"msgtype": "text",

"text": {

"content": "云服务 -- 线上MySQL写数据库新增慢查询日志已生成,下载请点击:%s ,请及时查看(该链接只可以在公司内网打开)~" % msg

},

}

headers = {

'Content-Type': 'application/json'

}

requests.post(url, data=json.dumps(parameter), headers=headers)

导出依赖

pip freeze > requirements.txt

创建 Redis

mkdir -p /data/slow_sql_redis

cd /data/slow_sql_redis

mkdir conf data

cd conf

# 创建 redis 的配置文件

vi redis.conf

#daemonize yes

pidfile /data/redis.pid

port 6379

tcp-backlog 30000

timeout 0

tcp-keepalive 10

loglevel notice

logfile /data/redis.log

databases 16

#save 900 1

#save 300 10

#save 60 10000

stop-writes-on-bgsave-error no

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb

dir /data

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass AdmiN@123

maxclients 30000

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events KEA

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-entries 512

list-max-ziplist-value 64

set-max-intset-entries 1000

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

cd /data/slow_sql_redis

vi docker-compose.yml

version: '3.4'

services:

slow_sql_redis:

image: redis

ports:

- 6379:6379

command: redis-server /usr/local/etc/redis/redis.conf

volumes:

- /data/slow_sql_redis/data:/data

- /data/slow_sql_redis/conf/redis.conf:/usr/local/etc/redis/redis.conf

networks:

default:

aliases:

- slow_sql_redis

# docker network create slow_sqlhash

networks:

default:

external:

name: slow_sqlhash

# 创建 docker 专用网络

docker network create slow_sqlhash

# 启动 redis

docker-compose up -d

# 测试 redis

创建项目的基础镜像

mkdir -p /data/slow_sqlhash4jira

cd /data/slow_sqlhash4jira

mkdir base_image images

cd base_image

# 创建依赖文件,该文件从项目中导出

vi requirements.txt

certifi==2020.12.5

chardet==4.0.0

et-xmlfile==1.0.1

idna==2.10

jdcal==1.4.1

openpyxl==3.0.5

redis==3.5.3

requests==2.25.1

urllib3==1.26.2

# 创建 Dockerfile

vi Dockerfile

FROM python:3.6

WORKDIR /data

RUN echo "Asia/Shanghai" > /etc/timezone

RUN cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

COPY requirements.txt ./

RUN pip install -r requirements.txt -i https://mirrors.aliyun.com/pypi/simple/

# 创建镜像

docker build -t slow_sqlhash_base4jira:20210114.1 .

创建业务镜像

cd /data/slow_sqlhash4jira/images

# 把 app.py dingtalk.py 放到该目录下

vi Dockerfile

FROM slow_sqlhash_base4jira:20210114.1

WORKDIR /data

COPY . ./

# 创建镜像

docker build -t slow_sqlhash4jira:0.1 .

启动项目

cd /data/slow_sqlhash4jira

vi docker-compose.yml

version: '3.4'

services:

slow_sqlhash4jira:

image: slow_sqlhash4jira:0.1

command: python app.py

#command: sleep 3600

volumes:

- /data/export_xlsx:/export_xlsx

networks:

default:

aliases:

- slow_sqlhash4jira

networks:

default:

external:

name: slow_sqlhash

# 创建 cron 任务

crontab -e

10 11 * * * cd /data/slow_sqlhash4jira && /usr/local/bin/docker-compose up -d > /dev/null 2>&1

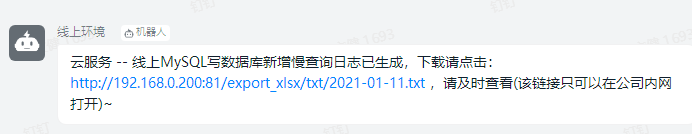

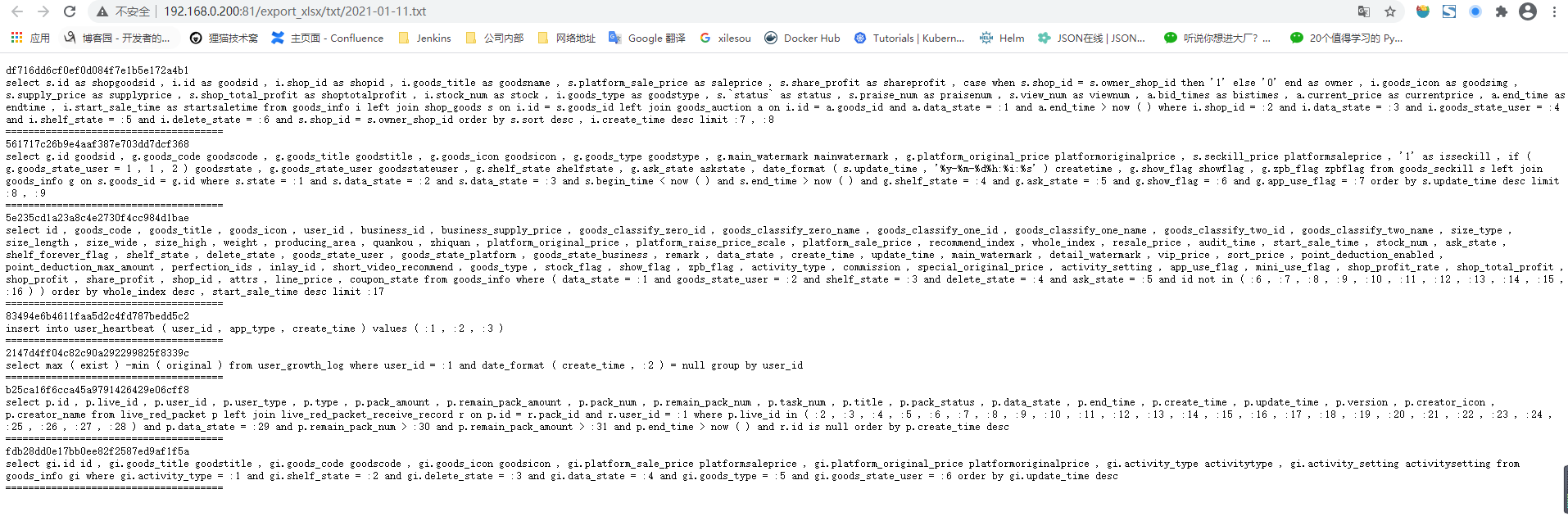

运行结果

点开链接后

浙公网安备 33010602011771号

浙公网安备 33010602011771号