分析nginx access.log统计日业务接口访问量

声明

以下数据为单节点NGINX的访问日志,所有数据均取自生产环境(x.x.x.x)

分析策略及数据采集

-

分析nginx的access.log,获取各个接口uri、访问量

-

随机在12月取三天的日各业务量统计,三天取平均

[root@VM_0_999_centos logs]# ls 2021*log -alh

-rw-r--r-- 1 root root 3.3M Dec 27 17:14 20211207.log

-rw-r--r-- 1 root root 4.1M Dec 27 17:02 20211215.log

-rw-r--r-- 1 root root 3.7M Dec 27 16:58 20211223.log样本1是2021年12月23号(星期四)

样本2是2021年12月15号(星期三)

样本3是2021年12月7号(星期二)

汇总后的log

日志形态

{ "time_local": "07/Dec/2021:06:41:06 +0800", "remote_addr": "1.2.3.4", "referer": "https://aaa.bbb.com/", "USER_CODE": "-", "request": "POST /api/aaa/bbb/cccc HTTP/1.1", "status": 200, "bytes": 42634, "agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.45 Safari/537.36", "x_forwarded": "-", "up_addr": "5.6.7.8:90","up_host": "-","upstream_time": "0.021","request_time": "0.022" }实现代码

__author__ = 'kangpc'

__date__ = '2021-12-28 17:25'

import os,json

import pandas as pd

'''

全局参数

'''

# 日志文件存放目录

logDir = "D:\\性能测试\\"

# 源日志文件,3天汇总

logFile="D:\\性能测试\\huizong.log"

# 清洗完的文件绝对路径

target = os.path.join(logDir,"target.txt")

print (

'''

数据预处理

'''

)

# 定义过滤函数,过滤掉无效uri

filt = ['.js','css','images','static']

def filter_invalid_str(s,filt):

if s.isdigit() or s == '/' or s =='/null':

return 0

for i in filt:

if i in s:

return 0

else:

return 1

print(

'''

数据分析

'''

)

# 统计各个uri的访问量,算出日业务量,过滤掉请求次数为0的uri

def uri_statistics():

result = {}

with open(logFile, 'r',encoding="utf-8") as fr:

for i in fr:

line = json.loads(i)

k = str(line['request'].split()[1])

if filter_invalid_str(k,filt):

if k not in result.keys():

result[k] = 1

elif k in result.keys():

result[k] += 1

else:

print("%s 存入字典时,key没有找到!"%k)

# print(result,len(result))

new_result = {}

for k,v in result.items():

if v >= 3:

new_result[k] = v // 3

# 将清洗完的接口统计数据写入目标文件

for k,v in new_result.items():

data = ''.join([k,'\t',str(v),'\n'])

with open(target,'a',encoding='utf-8') as fw:

fw.write(data)

return target

print(

'''

通过pandas处理到excel

'''

)

def pandas_to_excel(f):

reader = pd.read_table(f,sep='\t',engine='python',names=["interface","total"] ,header=None,iterator=True,encoding='utf-8')

loop = True

chunksize = 10000000

chunks = []

while loop:

try:

chunk = reader.get_chunk(chunksize)

chunks.append(chunk)

except StopIteration:

loop = False

print ("Iteration is stopped.")

# 重新拼接成DataFrame

df = pd.concat(chunks)

df.to_excel(logDir+"result.xlsx")

if __name__ == '__main__':

f = uri_statistics()

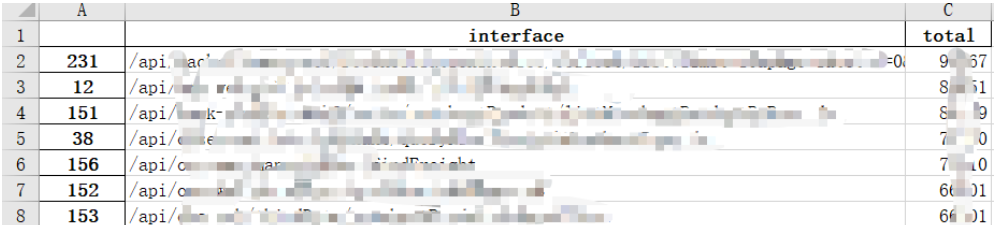

pandas_to_excel(f)统计结果部分截图

更多学习笔记移步

https://www.cnblogs.com/kknote

浙公网安备 33010602011771号

浙公网安备 33010602011771号