Hadoop cluster setup

Hadoop cluster overview

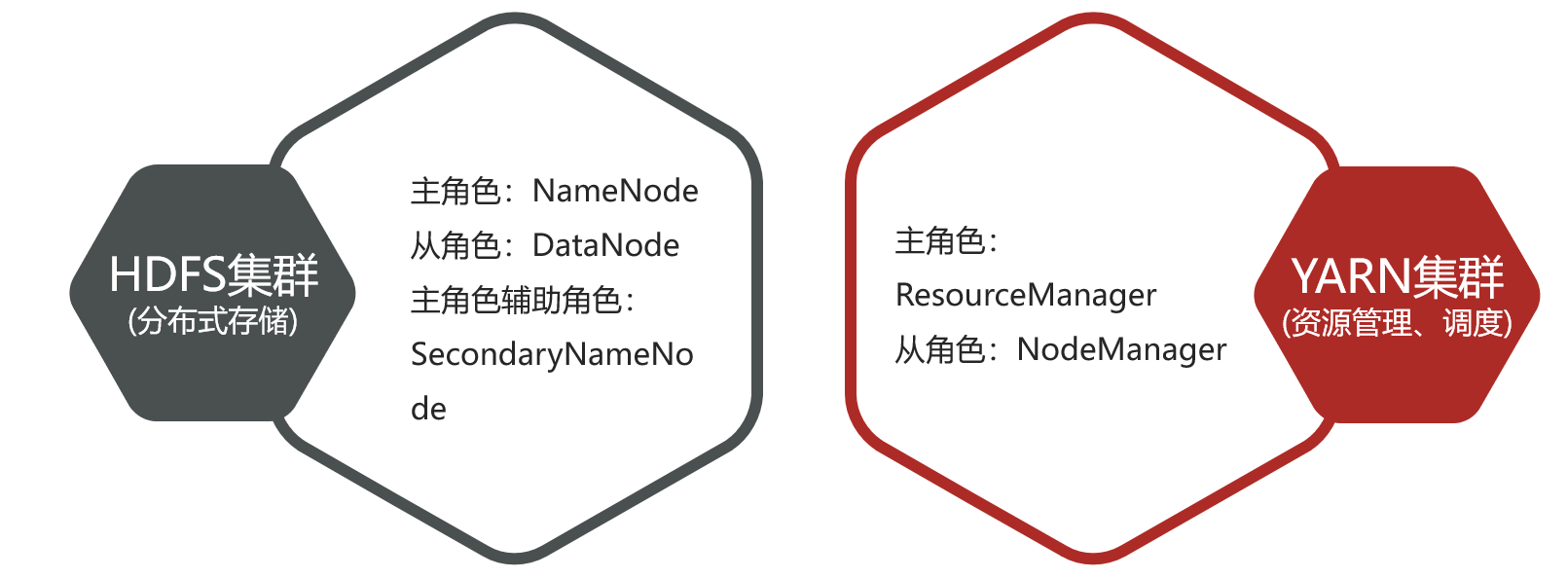

A Hadoop cluster consists of two clusters: an HDFS cluster and a YARN cluster.

The two clusters are logically separate but usually physically co-located.

Both are standard master/worker (primary/secondary) clusters.

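- Logically separate

The two clusters do not depend on each other and do not affect each other; each runs independently.

- Physically together

For operational or business reasons, some role processes are usually deployed on the same physical server, so one physical server runs both HDFS roles and YARN roles.

- What about a MapReduce cluster?

MapReduce is a computing framework, a component at the code level. There is no such thing as a MapReduce cluster, because a cluster involves hardware.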

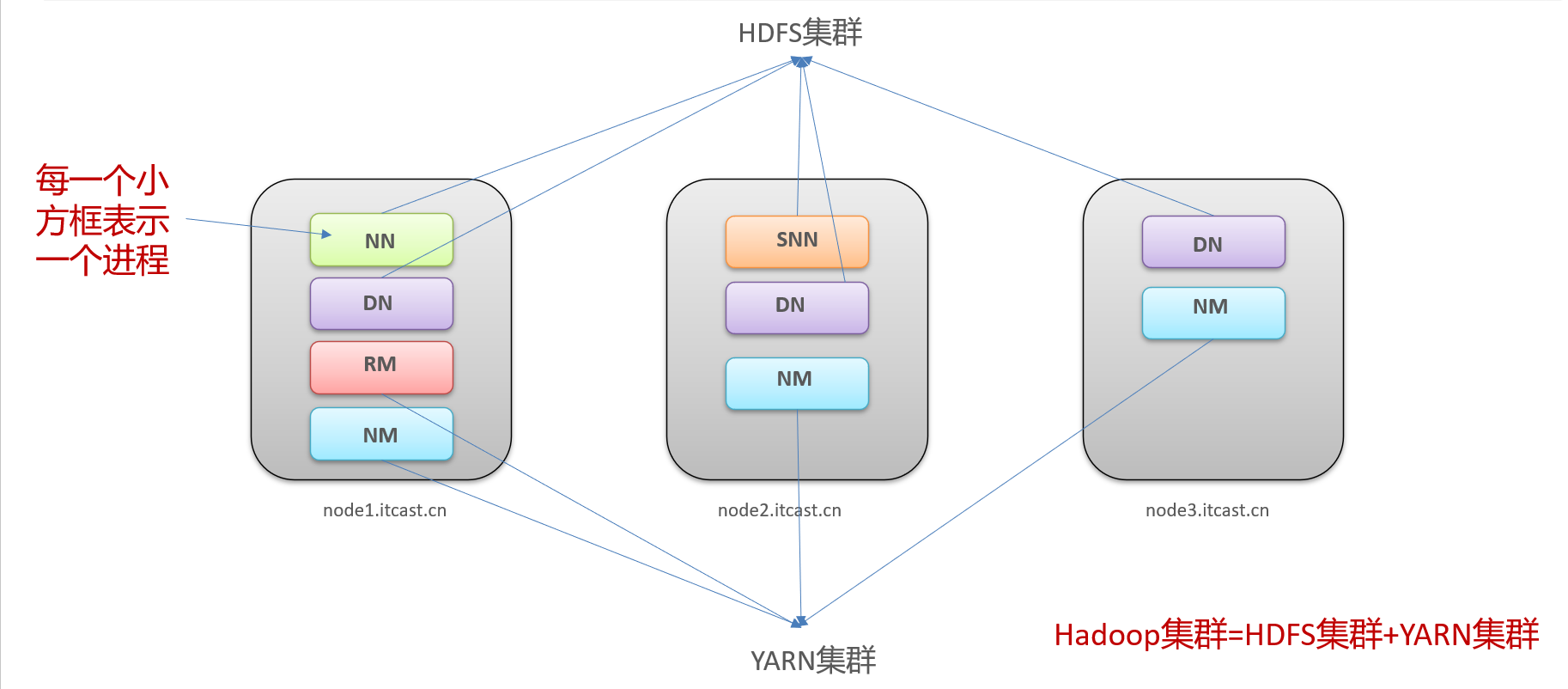

The figure above shows that the two clusters are logically separate but physically co-located.

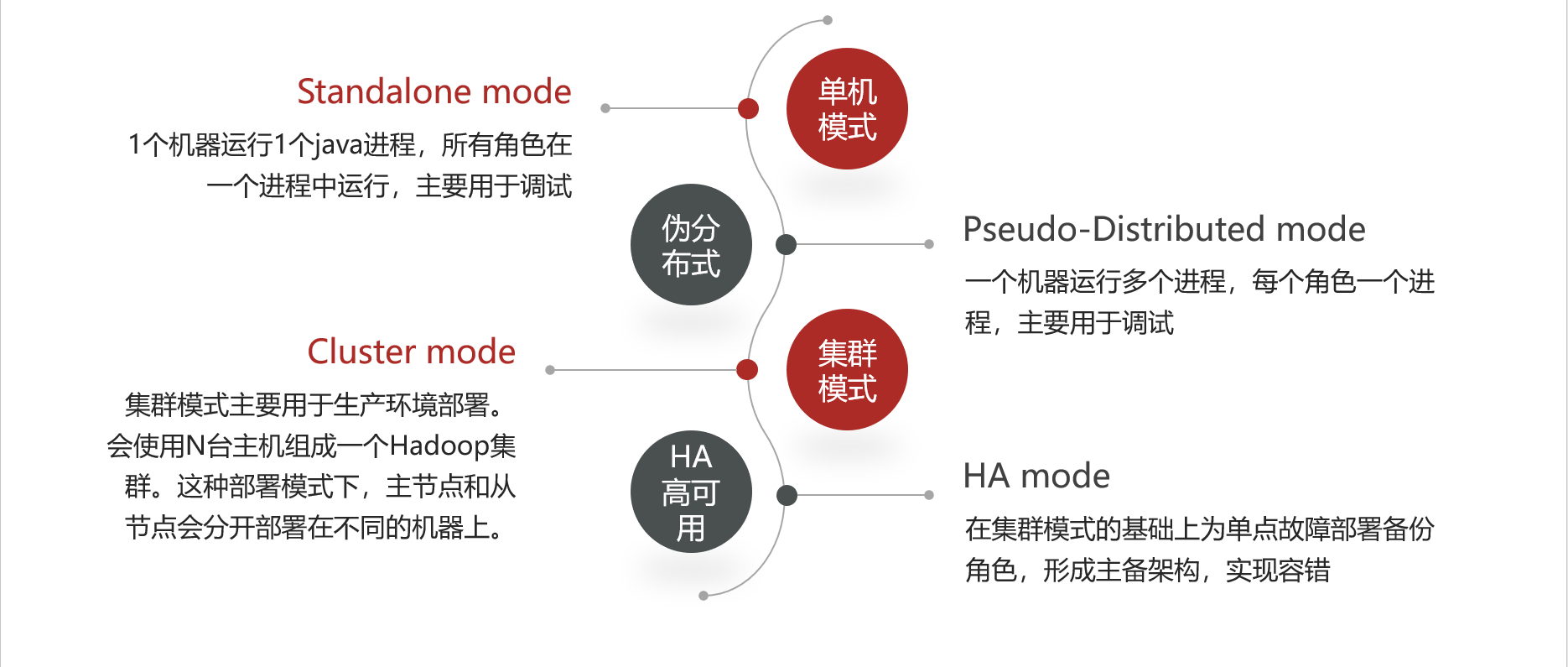

Hadoop deployment modes

Cluster role planning

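- Principles for role planning

Assign roles sensibly according to how each service works and what hardware resources each server has. For example, the NameNode works mainly in memory, so it should run on a machine with a large amount of RAM.

- Points to note in role planning

Avoid putting roles that compete for the same resources on the same machine.

Put roles that need to work closely together on the same machine where possible, to reduce network traffic.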

| Server | Roles |
| --- | --- |
| node1.itcast.cn | NameNode, DataNode, ResourceManager, NodeManager |
| node2.itcast.cn | SecondaryNameNode, DataNode, NodeManager |
| node3.itcast.cn | DataNode, NodeManager |
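The role plan above can also be written down as data and queried, which makes it easy to double-check before editing the configuration files. The following is a minimal POSIX sh sketch; ROLE_PLAN and hosts_with_role are hypothetical names used only for illustration. In this plan the DataNode hosts are also the NodeManager hosts, which is why the same three hostnames later go into etc/hadoop/workers.

```shell
#!/bin/sh
# Role plan from the table above: hostname followed by the roles it runs.
ROLE_PLAN='node1.itcast.cn namenode datanode resourcemanager nodemanager
node2.itcast.cn secondarynamenode datanode nodemanager
node3.itcast.cn datanode nodemanager'

# Print every host that runs the given role, one per line, in table order.
# awk compares whole fields, so "namenode" does not match "secondarynamenode".
hosts_with_role() {
  printf '%s\n' "$ROLE_PLAN" |
    awk -v role="$1" '{ for (i = 2; i <= NF; i++) if ($i == role) { print $1; break } }'
}

echo "DataNode hosts (candidates for etc/hadoop/workers):"
hosts_with_role datanode
echo "NameNode host (should be exactly one):"
hosts_with_role namenode
```

Keeping the plan in one place means a typo in a hostname shows up before it is copied into several XML files.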

Hadoop cluster installation

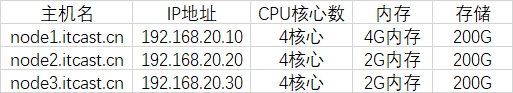

Lab environment (for reference only):

Software:

VMware Workstation Pro 16.2.3 build-19376536

xshell 6 plus

Files:

CentOS-7.7-x86_64-DVD-1908.iso

hadoop-3.1.4-bin-snappy-CentOS7.tar.gz

jdk-8u241-linux-x64.tar.gz

Parameters (for reference only):

Preparing the base server environment

Install three virtual machines with a minimal installation (you can install one and then clone it twice).

Set hostnames and hosts entries

Set the hostname on each of the 3 machines

[root@localhost ~]# hostnamectl set-hostname node1.itcast.cn

[root@localhost ~]# bash

[root@node1 ~]# hostname

node1.itcast.cn

[root@localhost ~]# hostnamectl set-hostname node2.itcast.cn

[root@localhost ~]# bash

[root@node2 ~]# hostname

node2.itcast.cn

[root@localhost ~]# hostnamectl set-hostname node3.itcast.cn

[root@localhost ~]# bash

[root@node3 ~]# hostname

node3.itcast.cn

Configure the same local hosts resolution on all 3 machines

[root@nodeX ~]# vim /etc/hosts

192.168.20.10 node1 node1.itcast.cn

192.168.20.20 node2 node2.itcast.cn

192.168.20.30 node3 node3.itcast.cn

Create working directories and unpack the packages

Create the same working directories (all 3 machines)

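mkdir -p /export/server/ # software installation directory

mkdir -p /export/data/ # data storage directory

mkdir -p /export/software/ # directory for installation packages

Disable the firewall (all 3 machines)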

systemctl disable firewalld.service # do not start the firewall at boot

systemctl stop firewalld.service # stop the firewall now

Set up passwordless SSH login

(Run on node1, targeting node1, node2 and node3)

[root@node1 ~]# ssh-keygen    # press Enter at every prompt to accept the defaults and generate the public/private key pair

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:VTKMmOC4mONkDuQheP20d21Oi00dWxkyBi8wyxkcyes root@node1.itcast.cn

The key's randomart image is:

+---[RSA 2048]----+

| .. ooB=.o+ . |

|. + o o+B+o o o|

|ooo o . +o. ....|

|++.. o . o ... + |

|=+. o S . = o |

|*. . E B . |

| o . + |

| |

| |

+----[SHA256]-----+

Copy the generated public key to each host with ssh-copy-id node1, ssh-copy-id node2 and ssh-copy-id node3:

[root@node1 ~]# ssh-copy-id node1

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'node1 (192.168.20.10)' can't be established.

ECDSA key fingerprint is SHA256:q0pTm3fIkJ5pLFDOH5uGvTPI/sx1RDPALANebuuiLwI.

ECDSA key fingerprint is MD5:f3:32:42:b8:80:7e:d2:cd:8b:58:cd:c9:bd:cd:ac:90.

Are you sure you want to continue connecting (yes/no)? yes    # type yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node1's password:    # enter the root password of the target host

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node1'"

and check to make sure that only the key(s) you wanted were added.

[root@node1 ~]# ssh-copy-id node2

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'node2 (192.168.20.20)' can't be established.

ECDSA key fingerprint is SHA256:q0pTm3fIkJ5pLFDOH5uGvTPI/sx1RDPALANebuuiLwI.

ECDSA key fingerprint is MD5:f3:32:42:b8:80:7e:d2:cd:8b:58:cd:c9:bd:cd:ac:90.

Are you sure you want to continue connecting (yes/no)? yes    # type yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node2's password:    # enter the root password of the target host

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node2'"

and check to make sure that only the key(s) you wanted were added.

[root@node1 ~]# ssh-copy-id node3

/usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"

The authenticity of host 'node3 (192.168.20.30)' can't be established.

ECDSA key fingerprint is SHA256:q0pTm3fIkJ5pLFDOH5uGvTPI/sx1RDPALANebuuiLwI.

ECDSA key fingerprint is MD5:f3:32:42:b8:80:7e:d2:cd:8b:58:cd:c9:bd:cd:ac:90.

Are you sure you want to continue connecting (yes/no)? yes    # type yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@node3's password:    # enter the root password of the target host

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node3'"

and check to make sure that only the key(s) you wanted were added.
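The interactive key generation and the three ssh-copy-id calls above can be scripted. This is a sketch under some assumptions: it runs as root on node1, the node1/node2/node3 aliases resolve through /etc/hosts, and NODES, DRY_RUN and run are hypothetical names. By default it only prints the commands it would execute.

```shell
#!/bin/sh
# Sketch: create a key pair without prompts and push it to every node.
# DRY_RUN=1 (the default) only prints each command; run with DRY_RUN=0 to execute.
NODES='node1 node2 node3'        # aliases defined in /etc/hosts
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# -N "" sets an empty passphrase, the same as pressing Enter at the prompts above.
# Skip generation when a key already exists so it is not overwritten.
if [ ! -f "$HOME/.ssh/id_rsa" ]; then
  run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
fi

for node in $NODES; do
  run ssh-copy-id "root@$node"   # asks once for that node's root password
done

# Afterwards each command should print the remote hostname with no password prompt.
for node in $NODES; do
  run ssh -o BatchMode=yes "root@$node" hostname
done
```

Running it with DRY_RUN=0 performs the same steps as the transcript above; ssh-copy-id still asks for each root password once, and BatchMode=yes makes the final check fail instead of prompting if a key was not installed.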

Cluster time synchronization (all 3 machines)

Configure the yum repositories first.

Install ntpdate and synchronize the time

[root@nodeX ~]# yum -y install ntpdate

[root@nodeX ~]# ntpdate ntp4.aliyun.com

Install Java/JDK (all 3 machines)

Upload and extract the Java package

[root@nodeX software]# ll

total 486020

-rw-r--r--. 1 root root 303134111 Sep 15 21:10 hadoop-3.1.4-bin-snappy-CentOS7.tar.gz

-rw-r--r--. 1 root root 194545143 Sep 15 21:12 jdk-8u241-linux-x64.tar.gz

[root@nodeX software]# tar zxvf jdk-8u241-linux-x64.tar.gz -C /export/server/

Add the Java environment variables

[root@nodeX software]# vim /etc/profile

export JAVA_HOME=/export/server/jdk1.8.0_241

export JRE_HOME=$JAVA_HOME/jre

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

Install the Hadoop package

Upload and extract the Hadoop package (node1)

Upload hadoop-3.1.4-bin-snappy-CentOS7.tar.gz to /export/software/ and extract it to /export/server/

[root@node1 ~]# cd /export/software/

[root@node1 software]# ll

total 296032

-rw-r--r--. 1 root root 303134111 Sep 15 21:10 hadoop-3.1.4-bin-snappy-CentOS7.tar.gz

tar -zxvf hadoop-3.1.4-bin-snappy-CentOS7.tar.gz -C /export/server/
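Later steps assume Hadoop sits at /export/server/hadoop-3.1.4. A quick check that the extraction produced the expected layout might look like this; check_hadoop_dir is a hypothetical helper, and the list of paths is an assumption based on the standard Hadoop 3 binary layout.

```shell
#!/bin/sh
# Sketch: confirm the tarball unpacked into the directory later steps assume.
# check_hadoop_dir is a hypothetical helper; pass another path to check a different copy.
check_hadoop_dir() {
  dir="${1:-/export/server/hadoop-3.1.4}"
  rc=0
  for path in bin/hadoop bin/hdfs bin/yarn sbin/start-dfs.sh sbin/start-yarn.sh etc/hadoop; do
    if [ ! -e "$dir/$path" ]; then
      echo "missing: $dir/$path" >&2   # report every missing entry, not just the first
      rc=1
    fi
  done
  return "$rc"
}
```

For example, check_hadoop_dir && echo "Hadoop layout looks complete" prints the message only when every listed path exists.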

Edit the Hadoop configuration files

- hadoop-env.sh

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim hadoop-env.sh

# Java installation used by Hadoop

export JAVA_HOME=/export/server/jdk1.8.0_241

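# Users that run each role's daemons; the Hadoop 3 start scripts refuse to start a daemon as root unless its *_USER variable is set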

export HDFS_NAMENODE_USER=root

export HDFS_DATANODE_USER=root

export HDFS_SECONDARYNAMENODE_USER=root

export YARN_RESOURCEMANAGER_USER=root

export YARN_NODEMANAGER_USER=root

- core-site.xml

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim core-site.xml

<configuration>

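<!-- Default file system name; the URI scheme determines which file system is used. -->

<!-- e.g. file:/// (local file system), hdfs:// (Hadoop distributed file system), gfs:// -->

<!-- HDFS access address: hdfs://nn_host:8020 (NameNode RPC port). -->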

<property>

<name>fs.defaultFS</name>

<value>hdfs://node1.itcast.cn:8020</value>

</property>

<!-- Local data storage directory for Hadoop; created automatically during format. -->

<property>

<name>hadoop.tmp.dir</name>

<value>/export/data/hadoop-3.1.4</value>

</property>

<!-- User name used when accessing HDFS through the web UI. -->

<property>

<name>hadoop.http.staticuser.user</name>

<value>root</value>

</property>

</configuration>

- hdfs-site.xml

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim hdfs-site.xml

<configuration>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node2.itcast.cn:9868</value>

</property>

</configuration>

- mapred-site.xml

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim mapred-site.xml

<configuration>

<!-- Default execution mode for MR programs: yarn for cluster mode, local for local mode. -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- Environment variables for the MR ApplicationMaster. -->

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<!-- Environment variables for MR map tasks. -->

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

<!-- Environment variables for MR reduce tasks. -->

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>

</property>

</configuration>

- yarn-site.xml

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim yarn-site.xml

<configuration>

<!-- Machine that runs the YARN master role, the ResourceManager (RM). -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>node1.itcast.cn</value>

</property>

<!-- Auxiliary service run on each NodeManager; it must be mapreduce_shuffle for MR programs to run. -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Minimum memory (in MB) allocated for each container request. -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<!-- Maximum memory (in MB) allocated for each container request. -->

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

</property>

<!-- Ratio of virtual memory to physical memory allowed for a container. -->

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>4</value>

</property>

</configuration>

- workers

cd /export/server/hadoop-3.1.4/etc/hadoop/

vim workers

node1.itcast.cn

node2.itcast.cn

node3.itcast.cn

Distribute the installation package and configuration (node1)

On node1, use scp to copy the Hadoop installation directory to the other machines (node2, node3)

cd /export/server/

scp -r hadoop-3.1.4 root@node2:/export/server/

scp -r hadoop-3.1.4 root@node3:/export/server/

Configure the Hadoop environment variables

vim /etc/profile

export HADOOP_HOME=/export/server/hadoop-3.1.4

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

Sync the modified environment file to the other machines

scp /etc/profile root@node2:/etc/

scp /etc/profile root@node3:/etc/

Reload the environment variables and verify that they took effect (all 3 machines)

source /etc/profile

[root@node1 server]# hadoop # verify that the environment variables took effect

Usage: hadoop [OPTIONS] SUBCOMMAND [SUBCOMMAND OPTIONS]

or hadoop [OPTIONS] CLASSNAME [CLASSNAME OPTIONS]

where CLASSNAME is a user-provided Java class

OPTIONS is none or any of:

buildpaths attempt to add class files from build tree

--config dir Hadoop config directory

--debug turn on shell script debug mode

--help usage information

hostnames list[,of,host,names] hosts to use in slave mode

hosts filename list of hosts to use in slave mode

loglevel level set the log4j level for this command

workers turn on worker mode

SUBCOMMAND is one of:

Admin Commands:

daemonlog get/set the log level for each daemon

Client Commands:

archive create a Hadoop archive

checknative check native Hadoop and compression libraries availability

classpath prints the class path needed to get the Hadoop jar and the required libraries

conftest validate configuration XML files

credential interact with credential providers

distch distributed metadata changer

distcp copy file or directories recursively

dtutil operations related to delegation tokens

envvars display computed Hadoop environment variables

fs run a generic filesystem user client

gridmix submit a mix of synthetic job, modeling a profiled from production load

jar <jar> run a jar file. NOTE: please use "yarn jar" to launch YARN applications, not this command.

jnipath prints the java.library.path

kdiag Diagnose Kerberos Problems

kerbname show auth_to_local principal conversion

key manage keys via the KeyProvider

rumenfolder scale a rumen input trace

rumentrace convert logs into a rumen trace

s3guard manage metadata on S3

trace view and modify Hadoop tracing settings

version print the version

Daemon Commands:

kms run KMS, the Key Management Server
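The verification above has to succeed on all 3 machines. From node1, with passwordless SSH already set up, the check can be run against every node in one loop. This is a sketch; NODES, DRY_RUN and verify_nodes are hypothetical names, and it assumes /etc/profile on each node contains the Java and Hadoop variables configured earlier. Because a command run through ssh is not a login shell, the script sources /etc/profile explicitly before calling java and hadoop.

```shell
#!/bin/sh
# Sketch: from node1, confirm that java and hadoop resolve on every node.
# A non-interactive ssh command does not read /etc/profile, so it is sourced explicitly.
NODES='node1 node2 node3'
DRY_RUN="${DRY_RUN:-1}"          # 1 = only print the ssh commands
REMOTE_CMD='. /etc/profile && java -version && hadoop version | head -n 1'

verify_nodes() {
  for node in $NODES; do
    if [ "$DRY_RUN" = 1 ]; then
      echo "+ ssh root@$node '$REMOTE_CMD'"
    else
      echo "== $node =="
      # BatchMode makes ssh fail instead of prompting if key login is not working.
      ssh -o BatchMode=yes "root@$node" "$REMOTE_CMD" || echo "FAILED on $node" >&2
    fi
  done
}

verify_nodes
```

With DRY_RUN=0, each node should report the JDK version and a first line of "Hadoop 3.1.4"; a "FAILED on" line points to the node whose environment or SSH setup needs another look.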

Formatting the NameNode

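- HDFS must be formatted before it is started for the first time.

- Formatting is essentially initialization: cleanup and preparation work, because at this point HDFS does not yet physically exist.

- Format only once. Each format generates a new cluster ID (see the "Formatting using clusterid" line in the output), and DataNodes that still hold the old ID can no longer join the cluster.

- Command: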

[root@node1 server]# hdfs namenode -format

WARNING: /export/server/hadoop-3.1.4/logs does not exist. Creating.

2022-09-15 21:52:08,086 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = node1/192.168.20.10

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.1.4

STARTUP_MSG: classpath = /export/server/hadoop-3.1.4/etc/hadoop:/export/server/hadoop-3.1.4/share/hadoop/common/lib/accessors-smart-1.2.jar:/export/server/hadoop-3.1.4/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:...

STARTUP_MSG: build = Unknown -r Unknown; compiled by 'root' on 2020-11-04T09:35Z

STARTUP_MSG: java = 1.8.0_241

************************************************************/

2022-09-15 21:52:08,092 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2022-09-15 21:52:08,139 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-9485617c-d6b4-4642-919c-9f75b4dc8747

2022-09-15 21:52:08,357 INFO namenode.FSEditLog: Edit logging is async:true

2022-09-15 21:52:08,387 INFO namenode.FSNamesystem: KeyProvider: null

2022-09-15 21:52:08,388 INFO namenode.FSNamesystem: fsLock is fair: true

2022-09-15 21:52:08,388 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2022-09-15 21:52:08,391 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2022-09-15 21:52:08,391 INFO namenode.FSNamesystem: supergroup = supergroup

2022-09-15 21:52:08,391 INFO namenode.FSNamesystem: isPermissionEnabled = true

2022-09-15 21:52:08,391 INFO namenode.FSNamesystem: HA Enabled: false

2022-09-15 21:52:08,417 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2022-09-15 21:52:08,423 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2022-09-15 21:52:08,423 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2022-09-15 21:52:08,425 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2022-09-15 21:52:08,426 INFO blockmanagement.BlockManager: The block deletion will start around 2022 九月 15 21:52:08

2022-09-15 21:52:08,427 INFO util.GSet: Computing capacity for map BlocksMap

2022-09-15 21:52:08,427 INFO util.GSet: VM type = 64-bit

2022-09-15 21:52:08,428 INFO util.GSet: 2.0% max memory 405.5 MB = 8.1 MB

2022-09-15 21:52:08,428 INFO util.GSet: capacity = 2^20 = 1048576 entries

2022-09-15 21:52:08,431 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2022-09-15 21:52:08,435 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: defaultReplication = 3

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: maxReplication = 512

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: minReplication = 1

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2022-09-15 21:52:08,435 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2022-09-15 21:52:08,459 INFO namenode.FSDirectory: GLOBAL serial map: bits=24 maxEntries=16777215

2022-09-15 21:52:08,490 INFO util.GSet: Computing capacity for map INodeMap

2022-09-15 21:52:08,491 INFO util.GSet: VM type = 64-bit

2022-09-15 21:52:08,491 INFO util.GSet: 1.0% max memory 405.5 MB = 4.1 MB

2022-09-15 21:52:08,491 INFO util.GSet: capacity = 2^19 = 524288 entries

2022-09-15 21:52:08,491 INFO namenode.FSDirectory: ACLs enabled? false

2022-09-15 21:52:08,491 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2022-09-15 21:52:08,491 INFO namenode.FSDirectory: XAttrs enabled? true

2022-09-15 21:52:08,491 INFO namenode.NameNode: Caching file names occurring more than 10 times

2022-09-15 21:52:08,493 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2022-09-15 21:52:08,494 INFO snapshot.SnapshotManager: SkipList is disabled

2022-09-15 21:52:08,496 INFO util.GSet: Computing capacity for map cachedBlocks

2022-09-15 21:52:08,497 INFO util.GSet: VM type = 64-bit

2022-09-15 21:52:08,497 INFO util.GSet: 0.25% max memory 405.5 MB = 1.0 MB

2022-09-15 21:52:08,497 INFO util.GSet: capacity = 2^17 = 131072 entries

2022-09-15 21:52:08,501 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2022-09-15 21:52:08,501 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2022-09-15 21:52:08,501 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2022-09-15 21:52:08,503 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2022-09-15 21:52:08,503 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2022-09-15 21:52:08,504 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2022-09-15 21:52:08,504 INFO util.GSet: VM type = 64-bit

2022-09-15 21:52:08,504 INFO util.GSet: 0.029999999329447746% max memory 405.5 MB = 124.6 KB

2022-09-15 21:52:08,504 INFO util.GSet: capacity = 2^14 = 16384 entries

2022-09-15 21:52:08,518 INFO namenode.FSImage: Allocated new BlockPoolId: BP-688478168-192.168.20.10-1663249928514

2022-09-15 21:52:08,525 INFO common.Storage: Storage directory /export/data/hadoop-3.1.4/dfs/name has been successfully formatted.

2022-09-15 21:52:08,536 INFO namenode.FSImageFormatProtobuf: Saving image file /export/data/hadoop-3.1.4/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2022-09-15 21:52:08,577 INFO namenode.FSImageFormatProtobuf: Image file /export/data/hadoop-3.1.4/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 391 bytes saved in 0 seconds .

2022-09-15 21:52:08,584 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2022-09-15 21:52:08,588 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.

2022-09-15 21:52:08,589 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at node1/192.168.20.10

************************************************************/

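- Before the cluster is started for the first time, the NameNode must be formatted.

- Format only once; later startups do not need it.

- Formatting more than once not only loses data but also leaves the HDFS master and worker roles unable to recognize each other: the NameNode gets a new cluster ID while the DataNodes keep the old one.

- To recover, delete the hadoop.tmp.dir directory on every machine and format again. The hadoop.tmp.dir location is configured in core-site.xml.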
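A minimal sketch of a guard against accidental re-formatting, plus a check for the cluster ID mismatch described above. It relies on each storage directory holding a `current/VERSION` file with a `clusterID=` line. The NameNode directory is the one printed in the format log above; the DataNode directory is an assumption (the default `${hadoop.tmp.dir}/dfs/data` layout), and the function names are hypothetical.

```shell
#!/bin/sh
# Sketch only. NAME_DIR comes from the format log above; DATA_DIR is an
# assumption based on the default ${hadoop.tmp.dir}/dfs/data layout.
NAME_DIR=/export/data/hadoop-3.1.4/dfs/name
DATA_DIR=/export/data/hadoop-3.1.4/dfs/data

# Exit 0 if the storage dir already contains current/VERSION (i.e. was formatted).
is_formatted() {
    [ -f "$1/current/VERSION" ]
}

# Print the clusterID value recorded in a storage dir's VERSION file.
cluster_id() {
    sed -n 's/^clusterID=//p' "$1/current/VERSION"
}

# Exit 0 if both storage dirs record the same, non-empty clusterID.
same_cluster() {
    a=$(cluster_id "$1")
    b=$(cluster_id "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example use on node1, which runs both a NameNode and a DataNode (not run here):
#   is_formatted "$NAME_DIR" || hdfs namenode -format
#   same_cluster "$NAME_DIR" "$DATA_DIR" || echo "clusterID mismatch"
```

On node2 and node3 only the DataNode directory exists, so there you would compare the printed `cluster_id` value against the one from node1.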

Starting and stopping the Hadoop cluster

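Manual, one process at a time (option 1 of 2)

- Start or stop a single role process on a single machine by hand. This gives precise control, for example when one server is under maintenance.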

- HDFS cluster

hdfs --daemon start namenode|datanode|secondarynamenode

hdfs --daemon stop namenode|datanode|secondarynamenode

- YARN cluster

yarn --daemon start resourcemanager|nodemanager

yarn --daemon stop resourcemanager|nodemanager

(In each command, pick one of the roles separated by |.)
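With the role plan from the table earlier (node1: NameNode, DataNode, ResourceManager, NodeManager; node2: SecondaryNameNode, DataNode, NodeManager; node3: DataNode, NodeManager), the per-process commands for a host can be generated rather than typed. This is a hypothetical helper, a sketch that only prints the commands so you can review them before running anything.

```shell
#!/bin/sh
# Hypothetical helper: print the "--daemon" commands for one host according to
# the role plan table. It only prints them; nothing is executed.
daemon_cmds() {
    host=$1
    action=$2    # start or stop
    case "$action" in
        start|stop) ;;
        *) echo "action must be start or stop" >&2; return 1 ;;
    esac
    case "$host" in
        node1.itcast.cn) hdfs_roles="namenode datanode"; yarn_roles="resourcemanager nodemanager" ;;
        node2.itcast.cn) hdfs_roles="secondarynamenode datanode"; yarn_roles="nodemanager" ;;
        node3.itcast.cn) hdfs_roles="datanode"; yarn_roles="nodemanager" ;;
        *) echo "unknown host: $host" >&2; return 1 ;;
    esac
    for r in $hdfs_roles; do echo "hdfs --daemon $action $r"; done
    for r in $yarn_roles; do echo "yarn --daemon $action $r"; done
}

# Example (on node2): review the list first, then pipe it to sh to run it.
#   daemon_cmds "$(hostname)" start
#   daemon_cmds "$(hostname)" start | sh
```

Keeping generation and execution separate makes it easy to run just one line by hand during maintenance, which is the point of the manual approach.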

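One-command start/stop with shell scripts (option 2 of 2)

- On node1, use the shell scripts that ship with Hadoop (in /export/server/hadoop-3.1.4/sbin) to start or stop everything with one command.

- Prerequisite: passwordless SSH between the machines and a configured workers file.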

- HDFS cluster

start-dfs.sh

stop-dfs.sh

- YARN cluster

start-yarn.sh

stop-yarn.sh

- Entire Hadoop cluster (HDFS and YARN)

start-all.sh

stop-all.sh
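Both prerequisites can be checked from node1 before running the scripts. A minimal sketch, assuming the workers file is at `$HADOOP_HOME/etc/hadoop/workers` (here `/export/server/hadoop-3.1.4/etc/hadoop/workers`) and, following the role plan, lists all three hosts because each runs a DataNode and a NodeManager. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: check the prerequisites for start-dfs.sh / start-yarn.sh from node1.
# Assumed workers file contents (one host per line), per the role plan:
#   node1.itcast.cn
#   node2.itcast.cn
#   node3.itcast.cn

# Print the host names from a workers file, dropping comments, blanks and spaces.
list_workers() {
    sed -e 's/#.*$//' -e 's/[[:space:]]//g' -e '/^$/d' "$1"
}

# Report each worker that cannot be reached over SSH without a password.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_ssh() {
    rc=0
    for h in $(list_workers "$1"); do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$h" true 2>/dev/null; then
            echo "ok: $h"
        else
            echo "FAIL: $h (passwordless SSH not working)"
            rc=1
        fi
    done
    return $rc
}

# Example (on node1):
#   check_ssh /export/server/hadoop-3.1.4/etc/hadoop/workers
```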

[root@node1 hadoop-3.1.4]# start-all.sh

Starting namenodes on [node1.itcast.cn]

上一次登录:四 9月 15 21:57:19 CST 2022pts/0 上

Starting datanodes

上一次登录:四 9月 15 21:59:34 CST 2022pts/0 上

node3.itcast.cn: WARNING: /export/server/hadoop-3.1.4/logs does not exist. Creating.

node2.itcast.cn: WARNING: /export/server/hadoop-3.1.4/logs does not exist. Creating.

Starting secondary namenodes [node2.itcast.cn]

上一次登录:四 9月 15 21:59:36 CST 2022pts/0 上

Starting resourcemanager

上一次登录:四 9月 15 21:59:39 CST 2022pts/0 上

resourcemanager is running as process 18126. Stop it first.

Starting nodemanagers

上一次登录:四 9月 15 21:59:43 CST 2022pts/0 上

Hadoop cluster startup log. The "上一次登录" lines are the system's "last login" notices. "resourcemanager is running as process 18126. Stop it first." means a ResourceManager was already running from an earlier start, so the script left it in place; the jps output below shows the same PID, 18126.

After startup, run the jps command on each node to check that the expected processes are running.
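Comparing jps output against the role plan by eye is fine for three nodes. As a sketch, the comparison can be scripted; the function name is hypothetical, and the expected daemon names are the ones from the role table as they appear in the jps output.

```shell
#!/bin/sh
# Sketch: compare `jps` output (read from stdin) with the daemons a host is
# expected to run. Prints each missing daemon; exits 1 if any is missing.
missing_daemons() {
    # $1 = space-separated expected names, e.g. "NameNode DataNode"
    running=$(awk '{print $2}')    # jps prints "<pid> <MainClassName>"
    rc=0
    for d in $1; do
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "$d"
            rc=1
        fi
    done
    return $rc
}

# Examples:
#   node1: jps | missing_daemons "NameNode DataNode ResourceManager NodeManager"
#   node2: jps | missing_daemons "SecondaryNameNode DataNode NodeManager"
#   node3: jps | missing_daemons "DataNode NodeManager"
```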

[root@node1 hadoop-3.1.4]# jps

19635 Jps

18985 DataNode

18812 NameNode

19484 NodeManager

18126 ResourceManager

[root@node2 ~]# jps

17120 SecondaryNameNode

17009 DataNode

17201 NodeManager

17322 Jps

[root@node3 ~]# jps

17315 Jps

17084 DataNode

17197 NodeManager

Hadoop startup logs

Log path: /export/server/hadoop-3.1.4/logs/

[root@node1 ~]# ll /export/server/hadoop-3.1.4/logs/

总用量 172

-rw-r--r--. 1 root root 32483 9月 15 21:59 hadoop-root-datanode-node1.itcast.cn.log

-rw-r--r--. 1 root root 690 9月 15 21:59 hadoop-root-datanode-node1.itcast.cn.out

-rw-r--r--. 1 root root 41417 9月 15 22:00 hadoop-root-namenode-node1.itcast.cn.log

-rw-r--r--. 1 root root 690 9月 15 21:59 hadoop-root-namenode-node1.itcast.cn.out

-rw-r--r--. 1 root root 36488 9月 15 21:59 hadoop-root-nodemanager-node1.itcast.cn.log

-rw-r--r--. 1 root root 2262 9月 15 21:59 hadoop-root-nodemanager-node1.itcast.cn.out

-rw-r--r--. 1 root root 42586 9月 15 21:59 hadoop-root-resourcemanager-node1.itcast.cn.log

-rw-r--r--. 1 root root 2278 9月 15 21:57 hadoop-root-resourcemanager-node1.itcast.cn.out

-rw-r--r--. 1 root root 0 9月 15 21:52 SecurityAuth-root.audit

drwxr-xr-x. 2 root root 6 9月 15 22:01 userlogs
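The listing shows the naming pattern `hadoop-<user>-<daemon>-<hostname>.log` (with a matching `.out` file). A minimal sketch for jumping to a daemon's log and pulling out error lines; it assumes the log directory above unless `HADOOP_LOG_DIR` is set, and the helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: locate a daemon's log using the naming pattern in the listing,
#   hadoop-<user>-<daemon>-<hostname>.log
# HADOOP_LOG_DIR overrides the assumed default directory.
daemon_log() {
    # $1 = daemon (namenode, datanode, resourcemanager, ...)
    # $2 = user (default: current user), $3 = host (default: hostname)
    dir=${HADOOP_LOG_DIR:-/export/server/hadoop-3.1.4/logs}
    echo "$dir/hadoop-${2:-$(id -un)}-$1-${3:-$(hostname)}.log"
}

# Print ERROR/FATAL lines; the level is the third space-separated field in
# lines such as "2022-09-15 21:52:08,092 INFO namenode.NameNode: ...".
log_errors() {
    awk '$3 == "ERROR" || $3 == "FATAL"' "$1"
}

# Examples:
#   tail -n 50 "$(daemon_log namenode)"
#   log_errors "$(daemon_log datanode)"
```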

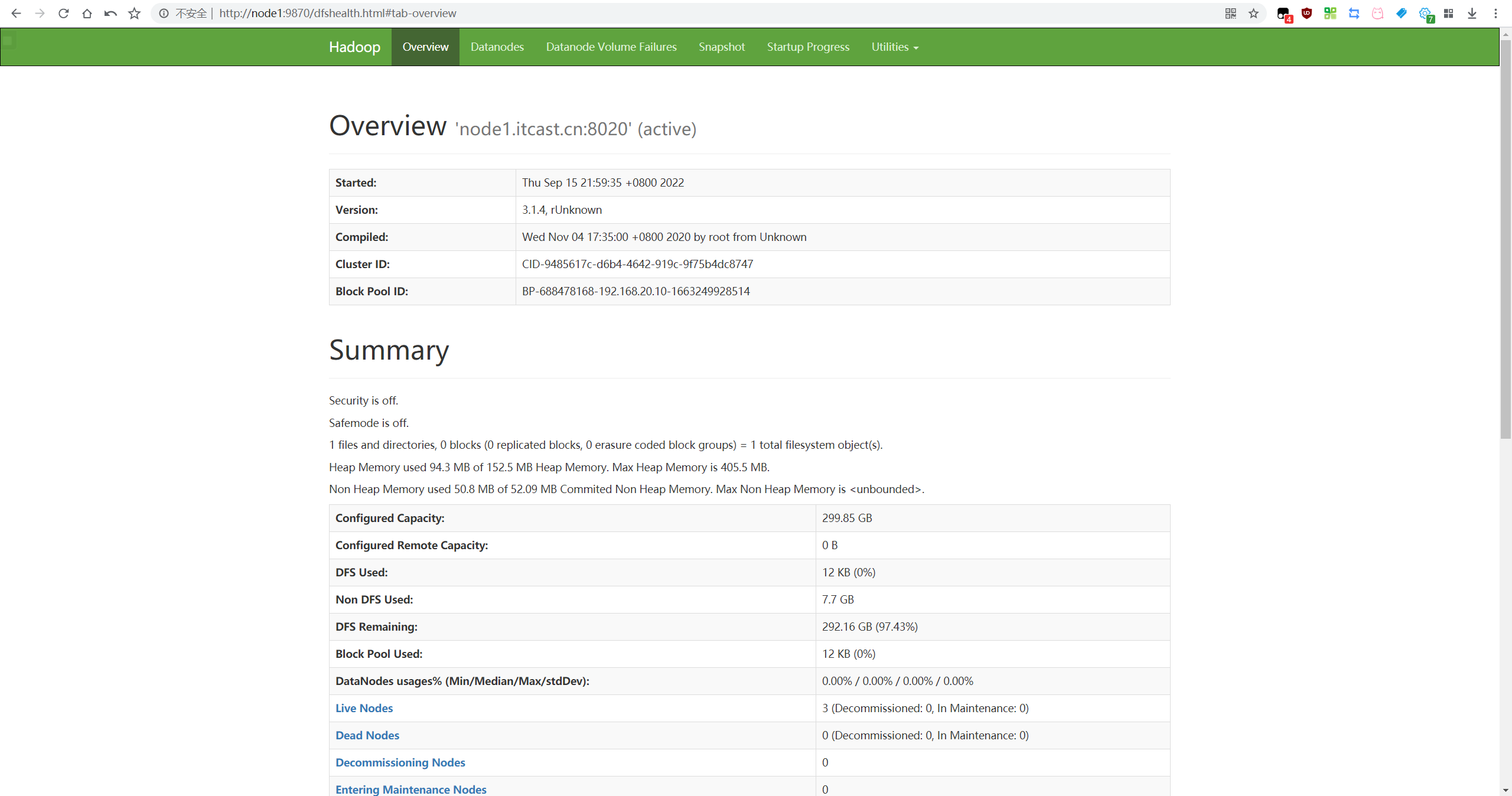

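Hadoop Web UI pages

HDFS cluster

Address: http://namenode_host:9870

where namenode_host is the hostname or IP address of the machine running the NameNode.

If you access it by hostname, remember to add the cluster hosts to the Windows hosts file (C:\Windows\System32\drivers\etc\hosts).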

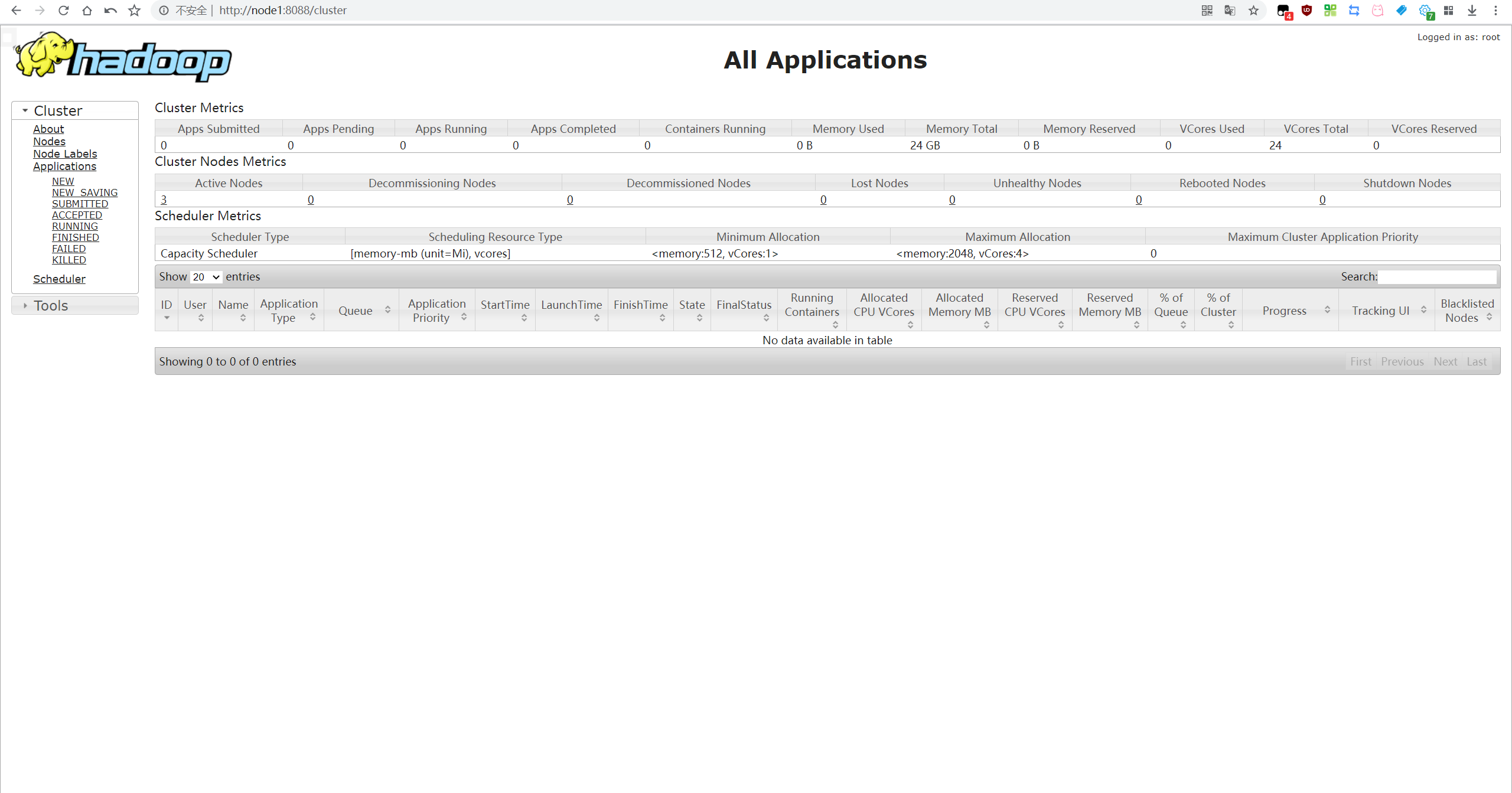

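YARN cluster

Address: http://resourcemanager_host:8088

where resourcemanager_host is the hostname or IP address of the machine running the ResourceManager.

If you access it by hostname, remember to add the cluster hosts to the Windows hosts file (C:\Windows\System32\drivers\etc\hosts).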
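Both pages can also be checked from a shell. A minimal sketch using the ports given above; the helper names are hypothetical, and it assumes `curl` is installed. On Windows, the hosts entries would be the same lines used in /etc/hosts, e.g. `192.168.20.10 node1 node1.itcast.cn`.

```shell
#!/bin/sh
# Sketch: build the Web UI address for a role and probe it from the command line.
# Ports 9870 (NameNode) and 8088 (ResourceManager) are the ones given above.
webui_url() {
    # $1 = role, $2 = host name or IP address
    case "$1" in
        namenode)        echo "http://$2:9870" ;;
        resourcemanager) echo "http://$2:8088" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Print the HTTP status code after following redirects; curl prints 000
# when it cannot connect at all.
probe_webui() {
    url=$(webui_url "$1" "$2") || return 1
    curl -s -L -o /dev/null -m 5 -w '%{http_code}\n' "$url"
}

# Examples:
#   probe_webui namenode node1.itcast.cn
#   probe_webui resourcemanager 192.168.20.10
```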

Files used

https://wwu.lanzouw.com/i7miD0fcdqbi

https://www.123pan.com/s/uJZ0Vv-ZSWBd (extraction code: 8888)

浙公网安备 33010602011771号
