Kafka

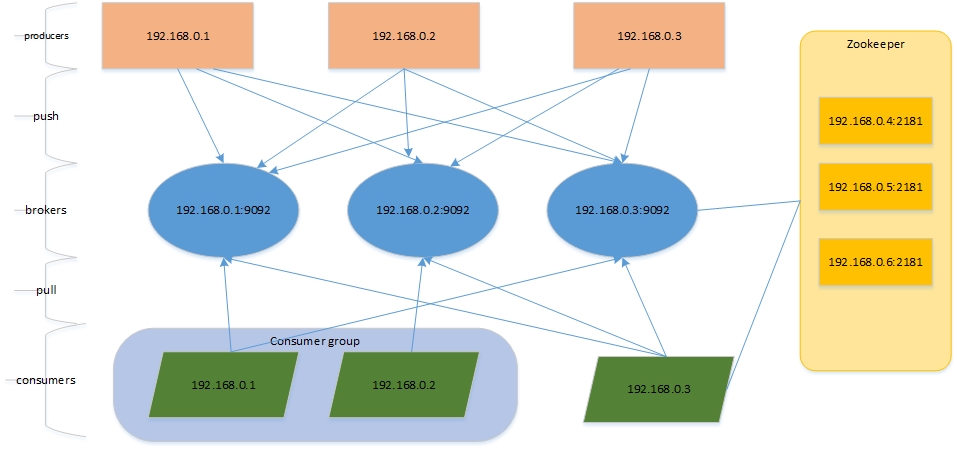

Architecture components

Installing Kafka

1. Official site:

2. tar -zxvf

3. Go to the config directory and edit server.properties:

broker.id

listeners=

zookeeper.connect

4. Start the broker (from the bin directory):

sh kafka-server-start.sh -daemon ../config/server.properties

5. Test:

Producer: bin/kafka-console-producer.sh --broker-list 192.168.153.118:9092 --topic test

Consumer: bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test --from-beginning
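The three server.properties keys edited during installation (broker.id, listeners, zookeeper.connect) can be sanity-checked before starting the broker. The following is a minimal sketch that uses only the JDK. The values in it are assumptions for illustration: broker id 0, the listener address reused from the producer test command, and a ZooKeeper instance assumed to run at localhost:2181. The class name ServerPropertiesSketch is hypothetical as well.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

final class ServerPropertiesSketch {
    // Illustrative values only (assumptions): adjust to your own environment.
    static final String SAMPLE =
            "broker.id=0\n"
            + "listeners=PLAINTEXT://192.168.153.118:9092\n"
            + "zookeeper.connect=localhost:2181\n";

    // Parses text in java.util.Properties format, the format used by server.properties.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) {
        Properties props = parse(SAMPLE);
        // Print the three keys that the installation steps ask you to edit.
        for (String key : new String[] {"broker.id", "listeners", "zookeeper.connect"}) {
            System.out.println(key + " = " + props.getProperty(key));
        }
    }
}
```

In a real setup the same three lines would live in config/server.properties; the Java wrapper is only there to make the fragment checkable.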

Basic operations

See the official documentation: http://kafka.apache.org/documentation/

Implementation details

Messages

A message consists of a key (optional) and a value; messages are sent in batches.
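Batching on the producer side is controlled mainly by two producer settings, batch.size and linger.ms. The sketch below builds them with plain java.util.Properties so it compiles without the kafka-clients dependency; the class name BatchingConfigSketch and the linger.ms value are assumptions chosen for illustration.

```java
import java.util.Properties;

final class BatchingConfigSketch {
    // Builds producer settings that influence how records are grouped into batches.
    static Properties batchingProps() {
        Properties props = new Properties();
        // Upper bound, in bytes, for one batch of records going to the same partition.
        // 16384 is the documented default.
        props.setProperty("batch.size", "16384");
        // How long the producer may wait for more records before sending a batch.
        // 5 ms is an illustrative value (assumption), not a recommendation.
        props.setProperty("linger.ms", "5");
        return props;
    }

    public static void main(String[] args) {
        batchingProps().forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

These entries would be merged into the Properties object that is passed to the KafkaProducer constructor, next to bootstrap.servers and the serializers.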

Topic & Partition

Log policy: compaction policy

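Message delivery reliability

After a producer sends a message to a broker, there are three acknowledgment modes, selected with the producer's acks setting: 0 (the producer does not wait for any acknowledgment), 1 (the partition leader acknowledges after writing the record), and all, also written -1 (the leader acknowledges only after all in-sync replicas have the record).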
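The acknowledgment mode is chosen through the producer's acks setting. As a minimal sketch, the helper below validates the value and puts it into a Properties object; it uses only the JDK, and the class and method names (AcksConfigSketch, withAcks, describe) are hypothetical.

```java
import java.util.Properties;
import java.util.Set;

final class AcksConfigSketch {
    // Values accepted by the producer "acks" setting; "-1" is an alias for "all".
    private static final Set<String> VALID = Set.of("0", "1", "all", "-1");

    // Returns producer properties carrying the requested acknowledgment mode.
    static Properties withAcks(String acks) {
        if (!VALID.contains(acks)) {
            throw new IllegalArgumentException("Unsupported acks value: " + acks);
        }
        Properties props = new Properties();
        props.setProperty("acks", acks);
        return props;
    }

    // Short description of what each mode waits for.
    static String describe(String acks) {
        switch (acks) {
            case "0":
                return "no acknowledgment: the producer does not wait for the broker";
            case "1":
                return "leader only: the partition leader has written the record";
            case "all":
            case "-1":
                return "all in-sync replicas have the record";
            default:
                throw new IllegalArgumentException("Unsupported acks value: " + acks);
        }
    }

    public static void main(String[] args) {
        for (String acks : new String[] {"0", "1", "all"}) {
            System.out.println("acks=" + acks + " -> " + describe(acks));
        }
    }
}
```

Lower values trade durability for latency: acks=0 is the fastest and can lose messages silently, while acks=all gives the strongest guarantee at the cost of waiting for the in-sync replicas.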

Replication mechanism

Using the native Kafka API

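    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.ProducerRecord;
    import org.apache.kafka.clients.producer.RecordMetadata;
    import org.apache.kafka.common.serialization.StringSerializer;

    import java.util.Properties;
    import java.util.concurrent.ExecutionException;
    import java.util.concurrent.Future;

    public class KafkaProducerDemo {
        public static void main(String[] args) throws ExecutionException, InterruptedException {
            // Configure the Kafka producer
            Properties properties = new Properties();
            properties.setProperty("bootstrap.servers", "172.16.143.31:31647");
            properties.setProperty("key.serializer", StringSerializer.class.getName());
            properties.setProperty("value.serializer", StringSerializer.class.getName());

            // try-with-resources closes the producer and flushes pending records on exit
            try (KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties)) {
                // Create the message: topic "sdk-test", key "sdk", value "test"
                ProducerRecord<String, String> record =
                        new ProducerRecord<>("sdk-test", "sdk", "test");
                // Send the message (asynchronous)
                Future<RecordMetadata> metadataFuture = kafkaProducer.send(record);
                // Block until the send completes
                metadataFuture.get();
            }
        }
    }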

Spring Kafka

Official documentation: https://docs.spring.io/spring-kafka/reference/#reference

Usage: KafkaTemplate

Dependency:

compile group: 'org.springframework.kafka', name: 'spring-kafka', version: '2.0.4.RELEASE'

Spring Boot Kafka

Dependency:

Auto-configuration: KafkaAutoConfiguration

Configuration file:
