GreenPlum 大数据平台--segment 失效问题恢复

1,问题检查

[gpadmin@greenplum01 conf]$ psql -c "select * from gp_segment_configuration where status='d'" dbid | content | role | preferred_role | mode | status | port | hostname | address | replication_por t ------+---------+------+----------------+------+--------+-------+-------------+-------------+---------------- -- 12 | 2 | m | m | s | d | 43002 | greenplum03 | greenplum03 | 4400 2 7 | 5 | m | p | s | d | 6001 | greenplum03 | greenplum03 | 3400 1 (2 rows)

发现状态的

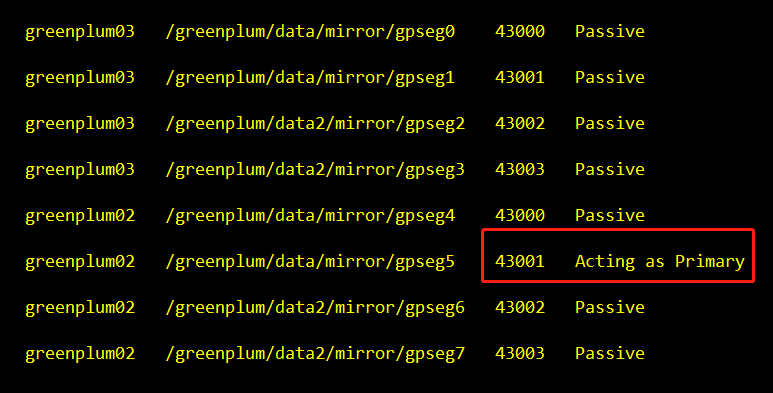

[gpadmin@greenplum01 conf]$ gpstate -m 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-Starting gpstate with args: -m 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44' 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15' 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master... 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--Current GPDB mirror list and status 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:--Type = Group 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg0 43000 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg1 43001 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-greenplum03 /greenplum/data2/mirror/gpseg2 43002 Failed <<<<<<<< 这个出现问题了 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg3 43003 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg4 43000 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg5 43001 Acting as Primary Change Tracking 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg6 43002 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg7 43003 Passive Synchronized 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) are acting as primaries 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) have failed ------------看这里 20190711:17:06:51:025238 gpstate:greenplum01:gpadmin-[WARNING]:-1 mirror segment(s) acting as primaries are in change tracking

01,连接问题

首先解决连接是否成功,ping 相应的主机看返回是否是成功状态

ping greenplum03

02,激活失效的segment

gprecoverseg

恢复过程会启动失效的Segment并且确定需要同步的已更改文件

在gprecoverseg完成后,系统会进入到Resynchronizing模式并且开始复制更改过的文件。这个过程在后台运行,而系统处于在线状态并且能够接受数据库请求。

当重新同步过程完成时,系统状态是Synchronized

需要恢复两个

日志:

1 [gpadmin@greenplum01 conf]$ gprecoverseg 2 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Starting gprecoverseg with args: 3 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44' 4 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15' 5 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Checking if segments are ready to connect 6 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master... 7 20190711:17:10:44:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master... 8 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Heap checksum setting is consistent between master and the segments that are candidates for recoverseg 9 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Greenplum instance recovery parameters 10 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 11 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery type = Standard 12 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 13 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery 1 of 2 14 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 15 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Synchronization mode = Incremental 16 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance host = greenplum03 17 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance address = greenplum03 18 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance directory = /greenplum/data2/mirror/gpseg2 19 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance port = 43002 20 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance replication port = 44002 21 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance host = greenplum02 22 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance address = greenplum02 23 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance directory = /greenplum/data2/primary/gpseg2 24 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance port = 6002 25 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance replication port = 34002 26 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Target = in-place 27 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 28 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Recovery 2 of 2 29 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 30 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Synchronization mode = Incremental 31 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance host = greenplum03 32 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance address = greenplum03 33 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance directory = /greenplum/data/primary/gpseg5 34 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance port = 6001 35 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Failed instance replication port = 34001 36 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance host = greenplum02 37 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance address = greenplum02 38 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance directory = /greenplum/data/mirror/gpseg5 39 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance port = 43001 40 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Source instance replication port = 44001 41 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- Recovery Target = in-place 42 20190711:17:10:45:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:---------------------------------------------------------- 43 44 Continue with segment recovery procedure Yy|Nn (default=N): 45 > Y 46 20190711:17:11:31:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-2 segment(s) to recover 47 20190711:17:11:31:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Ensuring 2 failed segment(s) are stopped 48 49 20190711:17:11:32:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Ensuring that shared memory is cleaned up for stopped segments 50 updating flat files 51 20190711:17:11:32:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating configuration with new mirrors 52 20190711:17:11:33:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating mirrors 53 . 54 20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Starting mirrors 55 20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-era is 24a58010f9c5a05a_190711113124 56 20190711:17:11:34:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Commencing parallel primary and mirror segment instance startup, please wait... 57 .. 58 20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Process results... 59 20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating configuration to mark mirrors up 60 20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating primaries 61 20190711:17:11:36:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Commencing parallel primary conversion of 2 segments, please wait... 62 . 63 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Process results... 64 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Done updating primaries 65 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-****************************************************************** 66 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Updating segments for resynchronization is completed. 67 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-For segments updated successfully, resynchronization will continue in the background. 68 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:- 69 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-Use gpstate -s to check the resynchronization progress. 70 20190711:17:11:37:025375 gprecoverseg:greenplum01:gpadmin-[INFO]:-******************************************************************

03, 检测同步

gpstate -m

[gpadmin@greenplum01 conf]$ gpstate -m 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-Starting gpstate with args: -m 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-local Greenplum Version: 'postgres (Greenplum Database) 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44' 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-master Greenplum Version: 'PostgreSQL 8.3.23 (Greenplum Database 5.16.0 build commit:23cec7df0406d69d6552a4bbb77035dba4d7dd44) on x86_64-pc-linux-gnu, compiled by GCC gcc (GCC) 6.2.0, 64-bit compiled on Jan 16 2019 02:32:15' 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-Obtaining Segment details from master... 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--Current GPDB mirror list and status 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:--Type = Group 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- Mirror Datadir Port Status Data Status 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg0 43000 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data/mirror/gpseg1 43001 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg2 43002 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum03 /greenplum/data2/mirror/gpseg3 43003 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg4 43000 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data/mirror/gpseg5 43001 Acting as Primary Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg6 43002 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:- greenplum02 /greenplum/data2/mirror/gpseg7 43003 Passive Synchronized 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[INFO]:-------------------------------------------------------------- 20190711:17:12:10:025484 gpstate:greenplum01:gpadmin-[WARNING]:-1 segment(s) configured as mirror(s) are acting as primaries

发现恢复出来了

04,恢复初始化状态

因为宕机一个主segment,镜像会激活另一个,并且成为主segment。运行gprecoverseg之后,主segment依旧没变化,失效的segment没有正式加进来,所以需要让他变成初始化的时候的segment状态,让所有segment重新恢复平衡系统

检查这个segment的状态

gpstate -e

运行gpstate -m来确保所有镜像都是Synchronized。 gpstate -m

一直在运行了

假如有Resynchronizing模式 ,需要耐心等待

用-r选项运行gprecoverseg,让Segment回到它们的首选角色。

gprecoverseg -r

在重新平衡之后,运行gpstate -e来确认所有的Segment都处于它们的首选角色。

gpstate -e

这个就没问题了

人生就像一滴水,非要落下才后悔!

--kingle

浙公网安备 33010602011771号

浙公网安备 33010602011771号