BP Neural Networks: Formula Derivation (with Code Implementation)

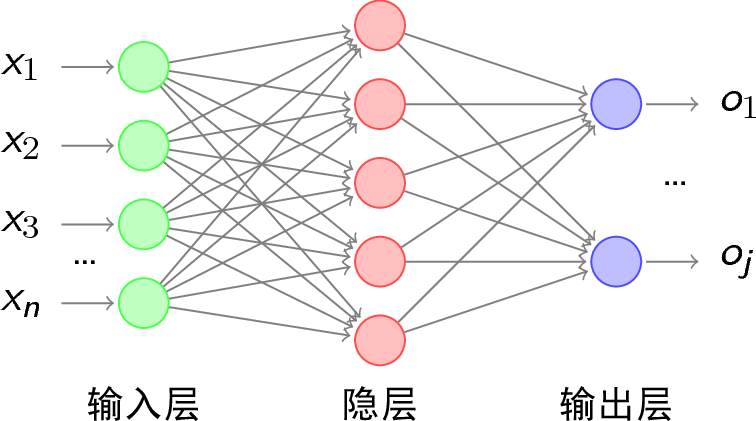

What is a BP neural network

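A BP (Back Propagation) neural network is a multilayer feedforward network trained by error backpropagation. Its underlying idea is gradient descent: it uses gradient search to minimize the error between the network's actual output and the desired output.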

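Training a BP network involves two processes: forward propagation of the signal and backward propagation of the error. The output error is computed in the input-to-output direction, while the weights and thresholds (biases) are adjusted in the output-to-input direction.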

Network structure: a BP network consists of one input layer, one or more hidden layers, and one output layer.

Choosing the number of hidden nodes

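In a BP network the numbers of input and output nodes are fixed by the problem, but the number of hidden nodes is not, so how many should we use? The number of hidden nodes does affect the network's performance. A commonly used empirical formula for choosing it is: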

$$h=\sqrt{m+n}+a$$

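where $h$ is the number of hidden nodes, $m$ the number of input nodes, $n$ the number of output nodes, and $a$ an adjustment constant between 1 and 10.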
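As a quick illustration, the rule of thumb can be turned into a small helper that lists the candidate hidden-layer sizes for $a=1,\dots,10$. The function name `hidden_size_candidates` is hypothetical, and rounding $\sqrt{m+n}$ to the nearest integer is an assumption, since the formula generally gives a non-integer.

```python
import math

def hidden_size_candidates(m: int, n: int) -> list[int]:
    """Candidate hidden-layer sizes h = sqrt(m + n) + a for a = 1..10.

    m: number of input nodes, n: number of output nodes.
    Rounding sqrt(m + n) to the nearest integer is an assumption.
    """
    base = round(math.sqrt(m + n))
    return [base + a for a in range(1, 11)]

# The fully connected example later in this article has 3 inputs and 1 output.
print(hidden_size_candidates(3, 1))  # sqrt(4) = 2, so sizes 3 through 12
```

The formula only narrows the search; in practice one would still compare a few of these sizes on validation data.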

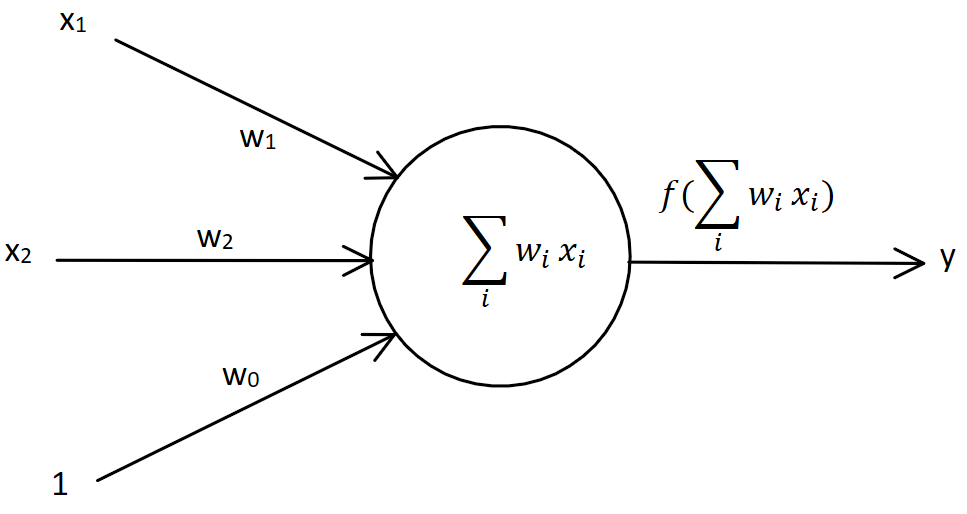

Gradient of a single neuron

Forward propagation of the signal

To keep things simple, we start with the gradient of a single neuron.

The figure has two inputs $[x_1,x_2]$, two weights $[w_1,w_2]$ and a bias $w_0$; $f$ is the activation function.

The forward pass computes:

$$
\begin{aligned}
z&=w_0x_0+w_1x_1+w_2x_2=\sum_{i=0}^{2}w_ix_i,\quad x_0=1\\
y&=f\left(\sum_{i=0}^{2}w_ix_i\right)
\end{aligned}
$$

In vector form, with $w=[w_0,w_1,w_2]^T$ and $x=[x_0,x_1,x_2]^T$:

$$
\begin{aligned}
z&=\sum_{i=0}^{2}w_ix_i=w^Tx\\
y&=f(w^Tx)
\end{aligned}
$$
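A minimal NumPy sketch of this forward pass, assuming a sigmoid for $f$ (the article leaves $f$ unspecified) and arbitrary example values for the weights and inputs. Prepending $x_0=1$ lets the bias $w_0$ be handled by the same dot product as the other weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def neuron_forward(w, x):
    """Single neuron: w = [w0, w1, w2], x = [x1, x2]; returns (z, y)."""
    x_aug = np.concatenate(([1.0], x))  # x0 = 1 carries the bias w0
    z = w @ x_aug                       # z = w^T x
    return z, sigmoid(z)                # y = f(w^T x), f assumed to be sigmoid

w = np.array([0.5, -1.0, 2.0])  # example values for w0, w1, w2
x = np.array([1.0, 1.0])        # example values for x1, x2
z, y = neuron_forward(w, x)

# The explicit sum w0*x0 + w1*x1 + w2*x2 gives the same z
z_sum = sum(w[i] * xi for i, xi in enumerate([1.0, *x]))
print(z, z_sum, y)
```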

Backward propagation of the error

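In a BP network, error backpropagation is based on the Delta learning rule. The network output is $y=f(w^Tx)$. To measure the error between the predicted value and the true value, we use the following cost function: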

$$E=\frac{1}{2}(t-y)^2$$

where $t$ is the true (target) value.
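For example, with target $t=1$ and prediction $y=0.8$ (illustrative values only):

$$E=\frac{1}{2}(1-0.8)^2=\frac{1}{2}\times 0.04=0.02$$

The factor $\frac{1}{2}$ is there for convenience: it cancels the 2 that appears when the square is differentiated.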

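The main goal of training a BP network is to adjust the weights so that the error is minimized. The Delta learning rule is a general learning rule based on gradient descent; with learning rate $\eta$ it reads: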

$$
\begin{aligned}
\Delta w&=-\eta\frac{\partial E}{\partial w}\\
\frac{\partial E}{\partial w}&=\frac{\partial\,\frac{1}{2}[t-f(w^Tx)]^2}{\partial w}\\
&=\frac{1}{2}\cdot 2[t-f(w^Tx)]\cdot\left(-f'(w^Tx)\right)\frac{\partial (w^Tx)}{\partial w}\\
&=-(t-y)f'(w^Tx)x\\
\Delta w&=-\eta\frac{\partial E}{\partial w}=\eta(t-y)f'(w^Tx)x=\eta\delta x,\quad\text{where }\delta=(t-y)f'(w^Tx)
\end{aligned}
$$
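The derivation can be checked numerically. The sketch below assumes a sigmoid activation, for which $f'(z)=f(z)(1-f(z))=y(1-y)$; the weights, input and target are arbitrary example values and $\eta=0.5$ is an assumed learning rate. It compares the analytic gradient $-(t-y)f'(w^Tx)x$ with central finite differences and then applies one Delta-rule update $\Delta w=\eta\delta x$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(w, x, t):
    """E = 1/2 (t - f(w^T x))^2 with f = sigmoid."""
    return 0.5 * (t - sigmoid(w @ x)) ** 2

x = np.array([1.0, 0.5, -1.5])  # x0 = 1 (bias input), x1, x2
w = np.array([0.1, -0.3, 0.8])  # w0, w1, w2
t = 1.0
eta = 0.5

y = sigmoid(w @ x)
delta = (t - y) * y * (1 - y)   # delta = (t - y) f'(w^T x) for the sigmoid
grad = -delta * x               # dE/dw = -(t - y) f'(w^T x) x

# Central finite differences, one weight at a time
eps = 1e-6
num_grad = np.array([
    (loss(w + eps * e, x, t) - loss(w - eps * e, x, t)) / (2 * eps)
    for e in np.eye(len(w))
])
print(np.max(np.abs(grad - num_grad)))  # only finite-difference error remains

w_new = w + eta * delta * x     # Delta rule: w <- w + eta * delta * x
print(loss(w, x, t), loss(w_new, x, t))
```

With a small enough learning rate the second printed loss should be lower than the first, since the update moves $w$ against the gradient.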

Summary of the Delta learning rule

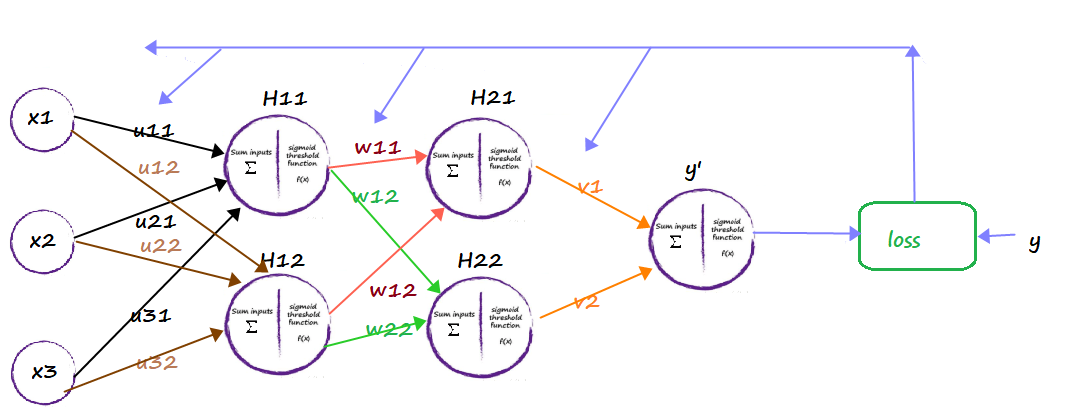

Gradients in a fully connected network

Forward propagation

First layer

Inputs: $x_1,x_2,x_3$

$$
\begin{aligned}
h_1^1&=u_{11}x_1+u_{21}x_2+u_{31}x_3=\sum_{i=1}^{3}u_{i1}x_i\\
h_2^1&=u_{12}x_1+u_{22}x_2+u_{32}x_3=\sum_{i=1}^{3}u_{i2}x_i\\
H_1^1&=f(h_1^1)\\
H_2^1&=f(h_2^1)\\
h^1&=\begin{bmatrix}h_1^1\\h_2^1\end{bmatrix}=\begin{bmatrix}u_{11}&u_{12}\\u_{21}&u_{22}\\u_{31}&u_{32}\end{bmatrix}^T\begin{bmatrix}x_1\\x_2\\x_3\end{bmatrix}=u^Tx\\
H^1&=f(u^Tx)
\end{aligned}
$$

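The figure above has two hidden layers. For clarity, $x_i^j$ denotes the $i$-th node of layer $j$; for example, $h_1^1$ is the first node of the first hidden layer. Lowercase $h$ is a node's weighted input and uppercase $H=f(h)$ is its output.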
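To check that the transposed matrix lines up with the explicit sums, the sketch below stores $u$ as a 3×2 array in which `u[i, j]` is the weight from input $i+1$ to hidden node $j+1$ (zero-based indexing in code), and compares `u.T @ x` with the hand-written sums. The random values and the use of sigmoid for $f$ are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
u = rng.uniform(-1, 1, size=(3, 2))  # u[i, j]: weight from input i to hidden node j
x = rng.uniform(-1, 1, size=3)       # inputs x1, x2, x3

# Explicit sums: h_j^1 = sum_i u_ij x_i
h1_explicit = np.array([sum(u[i, j] * x[i] for i in range(3)) for j in range(2)])
h1_matrix = u.T @ x                  # h^1 = u^T x, shape (2,)

H1 = 1.0 / (1.0 + np.exp(-h1_matrix))  # H^1 = f(u^T x) with f = sigmoid
print(np.allclose(h1_explicit, h1_matrix), H1)
```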

Second layer

Inputs: $H_1^1,H_2^1$

$$
\begin{aligned}
h_1^2&=w_{11}H_1^1+w_{21}H_2^1\\
h_2^2&=w_{12}H_1^1+w_{22}H_2^1\\
H_1^2&=f(h_1^2)\\
H_2^2&=f(h_2^2)\\
h^2&=\begin{bmatrix}h_1^2\\h_2^2\end{bmatrix}=\begin{bmatrix}w_{11}&w_{12}\\w_{21}&w_{22}\end{bmatrix}^T\begin{bmatrix}H_1^1\\H_2^1\end{bmatrix}=w^TH^1\\
H^2&=f(w^TH^1)
\end{aligned}
$$
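The same check for the second layer, with $w$ stored as a 2×2 array whose entry `w[j, k]` is the weight from $H_{j+1}^1$ to node $k+1$ of the second hidden layer. The weights and the $H^1$ vector are example values, and sigmoid is again assumed for $f$.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

w = np.array([[0.2, -0.4],   # w11, w12
              [0.7,  0.1]])  # w21, w22
H1 = np.array([0.6, 0.3])    # H_1^1, H_2^1 (example values)

h2_explicit = np.array([
    w[0, 0] * H1[0] + w[1, 0] * H1[1],  # h_1^2 = w11 H_1^1 + w21 H_2^1
    w[0, 1] * H1[0] + w[1, 1] * H1[1],  # h_2^2 = w12 H_1^1 + w22 H_2^1
])
h2 = w.T @ H1     # h^2 = w^T H^1
H2 = sigmoid(h2)  # H^2 = f(w^T H^1)
print(h2_explicit, h2, H2)
```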

Output layer

Inputs: $H_1^2,H_2^2$

$$y=f(v_1H_1^2+v_2H_2^2)=f(v^TH^2)$$
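Putting the three layers together, a minimal forward pass for this 3-2-2-1 network could look like the sketch below. Sigmoid for $f$, the random initialization and the absence of bias terms (the equations above have none) are assumptions. The function returns the intermediate activations because backpropagation reuses them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, u, w, v):
    """Forward pass x -> H^1 -> H^2 -> y.

    x: (3,), u: (3, 2), w: (2, 2), v: (2,)
    """
    H1 = sigmoid(u.T @ x)   # first hidden layer, H^1 = f(u^T x)
    H2 = sigmoid(w.T @ H1)  # second hidden layer, H^2 = f(w^T H^1)
    y = sigmoid(v @ H2)     # output, y = f(v^T H^2), a scalar
    return H1, H2, y

rng = np.random.default_rng(42)
u = rng.uniform(-1, 1, (3, 2))
w = rng.uniform(-1, 1, (2, 2))
v = rng.uniform(-1, 1, 2)
H1, H2, y = forward(np.array([1.0, 0.0, 1.0]), u, w, v)
print(H1, H2, y)
```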

Backward propagation

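Output layer

The output node is a single neuron with weights $v$ and input $H^2$, so the Delta rule applies directly: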

$$
\begin{aligned}
\Delta v&=-\eta\frac{\partial E}{\partial v}=\eta(t-y)f'(v^TH^2)H^2=\eta\delta H^2\\
\delta&=(t-y)f'(v^TH^2)
\end{aligned}
$$
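A sketch that checks the output-layer result numerically: it computes $\delta=(t-y)f'(v^TH^2)$ using the sigmoid derivative $y(1-y)$, forms $\partial E/\partial v=-\delta H^2$, and compares it with finite differences. The sigmoid activation, random weights, input, target and $\eta=0.1$ are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, t, u, w, v):
    """E = 1/2 (t - y)^2 for the 3-2-2-1 network."""
    H2 = sigmoid(w.T @ sigmoid(u.T @ x))
    return 0.5 * (t - sigmoid(v @ H2)) ** 2

rng = np.random.default_rng(1)
u, w, v = rng.uniform(-1, 1, (3, 2)), rng.uniform(-1, 1, (2, 2)), rng.uniform(-1, 1, 2)
x, t, eta = np.array([1.0, 0.0, 1.0]), 1.0, 0.1

H1 = sigmoid(u.T @ x)
H2 = sigmoid(w.T @ H1)
y = sigmoid(v @ H2)
delta = (t - y) * y * (1 - y)  # delta = (t - y) f'(v^T H^2)
delta_v = eta * delta * H2     # Delta v = eta * delta * H^2

eps = 1e-6
num_grad = np.array([
    (loss(x, t, u, w, v + eps * e) - loss(x, t, u, w, v - eps * e)) / (2 * eps)
    for e in np.eye(2)
])
print(np.allclose(-delta * H2, num_grad, atol=1e-8))  # dE/dv = -delta * H^2
```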

Second layer

First, from the Delta learning rule:

$$
\begin{aligned}
\Delta w&=-\eta\frac{\partial E}{\partial w}\\
\frac{\partial E}{\partial w}&=\frac{\partial\,\frac{1}{2}[t-f(v^TH^2)]^2}{\partial w}\\
&=\frac{1}{2}\cdot 2[t-f(v^TH^2)]\cdot\left(-f'(v^TH^2)\right)\frac{\partial (v^TH^2)}{\partial w}\\
&=-(t-y)f'(v^TH^2)\frac{\partial (v^TH^2)}{\partial w}
\end{aligned}
$$

Next we compute $\frac{\partial (v^TH^2)}{\partial w}$ with the chain rule:

$$
\begin{aligned}
\frac{\partial (v^TH^2)}{\partial w}&=\frac{\partial (v^TH^2)}{\partial H^2}\cdot\frac{\partial H^2}{\partial w}\\
&=v^T\frac{\partial f(w^TH^1)}{\partial w}\\
&=v^Tf'(w^TH^1)H^1
\end{aligned}
$$

Substituting this result into $\Delta w$ gives:

$$
\begin{aligned}
\Delta w&=\eta(t-y)f'(v^TH^2)\frac{\partial (v^TH^2)}{\partial w}\\
&=\eta(t-y)f'(v^TH^2)v^Tf'(w^TH^1)H^1\\
&=\eta\delta v^Tf'(w^TH^1)H^1\\
&=\eta\delta^2H^1
\end{aligned}
$$

We thus obtain $\delta^2$:

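$$\delta^2=\delta v^Tf'(w^TH^1)$$

Here the product of $v^T$ with $f'(w^TH^1)$ is taken element-wise, so $\delta^2$ holds one error term per node of the second hidden layer, $\delta_k^2=\delta\,v_kf'(h_k^2)$. Treating $\delta^2$ as a row vector, the update in matrix form is the outer product $\Delta w=\eta H^1\delta^2$, which has the same 2×2 shape as $w$.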
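A numerical check of $\delta^2$ and $\Delta w$, again assuming sigmoid activations and random example values. $\delta^2$ is computed element-wise as $\delta\,v_kf'(h_k^2)$, and the gradient $\partial E/\partial w_{jk}=-H_j^1\delta_k^2$ is formed with `np.outer` and compared entry by entry with finite differences.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, t, u, w, v):
    """E = 1/2 (t - y)^2 for the 3-2-2-1 network."""
    H2 = sigmoid(w.T @ sigmoid(u.T @ x))
    return 0.5 * (t - sigmoid(v @ H2)) ** 2

rng = np.random.default_rng(2)
u, w, v = rng.uniform(-1, 1, (3, 2)), rng.uniform(-1, 1, (2, 2)), rng.uniform(-1, 1, 2)
x, t, eta = np.array([1.0, 1.0, 0.0]), 0.0, 0.1

H1 = sigmoid(u.T @ x)
H2 = sigmoid(w.T @ H1)
y = sigmoid(v @ H2)
delta = (t - y) * y * (1 - y)         # output-layer delta
delta2 = delta * v * H2 * (1 - H2)    # delta^2 = delta v^T f'(w^T H^1), element-wise
delta_w = eta * np.outer(H1, delta2)  # Delta w[j, k] = eta * H1[j] * delta2[k]

eps = 1e-6
num_grad = np.zeros_like(w)
for idx in np.ndindex(w.shape):
    step = np.zeros_like(w)
    step[idx] = eps
    num_grad[idx] = (loss(x, t, u, w + step, v) - loss(x, t, u, w - step, v)) / (2 * eps)
print(np.allclose(-np.outer(H1, delta2), num_grad, atol=1e-8))
```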

First layer

First, from the Delta learning rule:

$$
\begin{aligned}
\Delta u&=-\eta\frac{\partial E}{\partial u}\\
\frac{\partial E}{\partial u}&=\frac{\partial\,\frac{1}{2}[t-f(v^TH^2)]^2}{\partial u}\\
&=\frac{1}{2}\cdot 2[t-f(v^TH^2)]\cdot\left(-f'(v^TH^2)\right)\frac{\partial (v^TH^2)}{\partial u}\\
&=-(t-y)f'(v^TH^2)\frac{\partial (v^TH^2)}{\partial u}
\end{aligned}
$$

Next we compute $\frac{\partial (v^TH^2)}{\partial u}$ with the chain rule:

$$
\begin{aligned}
\frac{\partial (v^TH^2)}{\partial u}&=\frac{\partial (v^TH^2)}{\partial H^2}\cdot\frac{\partial H^2}{\partial H^1}\cdot\frac{\partial H^1}{\partial u}\\
&=v^T\frac{\partial f(w^TH^1)}{\partial H^1}\cdot\frac{\partial f(u^Tx)}{\partial u}\\
&=v^Tf'(w^TH^1)w^T\cdot f'(u^Tx)x
\end{aligned}
$$

Substituting this result into $\Delta u$ gives:

$$
\begin{aligned}
\Delta u&=\eta(t-y)f'(v^TH^2)\frac{\partial (v^TH^2)}{\partial u}\\
&=\eta(t-y)f'(v^TH^2)v^Tf'(w^TH^1)w^T\cdot f'(u^Tx)x\\
&=\eta\delta v^Tf'(w^TH^1)w^T\cdot f'(u^Tx)x\\
&=\eta\delta^2w^Tf'(u^Tx)x\\
&=\eta\delta^1x
\end{aligned}
$$

Here we used the definitions:

$$
\begin{aligned}
\delta&=(t-y)f'(v^TH^2)\\
\delta^2&=\delta v^Tf'(w^TH^1)
\end{aligned}
$$

We thus obtain $\delta^1$:

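$$\delta^1=\delta^2w^Tf'(u^Tx)$$

As in the second layer, the multiplication by $f'(u^Tx)$ is element-wise: $\delta_j^1=f'(h_j^1)\sum_k w_{jk}\delta_k^2$, and the matrix form of the update is the outer product $\Delta u=\eta x\delta^1$. The pattern (send the next layer's delta back through the transposed weights, then multiply by the local derivative) is what `L1_delta = L2_delta.dot(W.T)*dsigmoid(L1)` computes in the code.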
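A numerical check of $\delta^1$ and $\Delta u$ under the same assumptions (sigmoid activations, random example weights, input and target). `delta2 @ w.T` mirrors the $\delta^2w^T$ term of the formula, and the gradient $\partial E/\partial u_{ij}=-x_i\delta_j^1$ is compared with finite differences.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(x, t, u, w, v):
    """E = 1/2 (t - y)^2 for the 3-2-2-1 network."""
    H2 = sigmoid(w.T @ sigmoid(u.T @ x))
    return 0.5 * (t - sigmoid(v @ H2)) ** 2

rng = np.random.default_rng(3)
u, w, v = rng.uniform(-1, 1, (3, 2)), rng.uniform(-1, 1, (2, 2)), rng.uniform(-1, 1, 2)
x, t, eta = np.array([1.0, 0.0, 1.0]), 1.0, 0.1

# Forward pass, keeping the intermediate activations
H1 = sigmoid(u.T @ x)
H2 = sigmoid(w.T @ H1)
y = sigmoid(v @ H2)

delta = (t - y) * y * (1 - y)            # output-layer delta
delta2 = delta * v * H2 * (1 - H2)       # second-hidden-layer delta (element-wise)
delta1 = (delta2 @ w.T) * H1 * (1 - H1)  # delta^1 = delta^2 w^T, times f'(u^T x) element-wise
delta_u = eta * np.outer(x, delta1)      # Delta u[i, j] = eta * x[i] * delta1[j]

eps = 1e-6
num_grad = np.zeros_like(u)
for idx in np.ndindex(u.shape):
    step = np.zeros_like(u)
    step[idx] = eps
    num_grad[idx] = (loss(x, t, u + step, w, v) - loss(x, t, u - step, w, v)) / (2 * eps)
print(np.allclose(-np.outer(x, delta1), num_grad, atol=1e-8))
```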

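Code

The code below trains a network with a single hidden layer of 4 nodes rather than the two hidden layers used in the derivation: `V` holds the input-to-hidden weights and `W` the hidden-to-output weights, and each row of `X` is one training sample. `L2_delta` corresponds to $\delta$, and `L1_delta` has the same form as $\delta^2=\delta v^Tf'(w^TH^1)$, with the code's `W` playing the role of $v$.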

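```python
import numpy as np

# Input data: the first column is a constant 1 that acts as the bias input
X = np.array([[1, 0, 0],
              [1, 0, 1],
              [1, 1, 0],
              [1, 1, 1]])

# Labels (XOR of the last two input columns)
Y = np.array([[0, 1, 1, 0]])

# Weight initialization, values in the range -1 to 1
V = np.random.random((3, 4)) * 2 - 1  # input -> hidden (4 hidden nodes)
W = np.random.random((4, 1)) * 2 - 1  # hidden -> output
print(V)
print(W)

# Learning rate
lr = 0.11

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def dsigmoid(x):
    # Sigmoid derivative written in terms of the sigmoid OUTPUT x = sigmoid(z)
    return x * (1 - x)

def update():
    global W, V
    L1 = sigmoid(np.dot(X, V))  # hidden-layer output, shape (4, 4)
    L2 = sigmoid(np.dot(L1, W))  # output-layer output, shape (4, 1)

    L2_delta = (Y.T - L2) * dsigmoid(L2)         # output-layer delta
    L1_delta = L2_delta.dot(W.T) * dsigmoid(L1)  # hidden-layer delta, sent back through W

    W_C = lr * L1.T.dot(L2_delta)  # weight changes: lr * input^T * delta
    V_C = lr * X.T.dot(L1_delta)

    W = W + W_C
    V = V + V_C

for i in range(20000):
    update()  # update the weights
    if i % 500 == 0:
        L1 = sigmoid(np.dot(X, V))  # hidden-layer output, shape (4, 4)
        L2 = sigmoid(np.dot(L1, W))  # output-layer output, shape (4, 1)
        print('Error:', np.mean(np.abs(Y.T - L2)))

L1 = sigmoid(np.dot(X, V))  # hidden-layer output, shape (4, 4)
L2 = sigmoid(np.dot(L1, W))  # output-layer output, shape (4, 1)
print(L2)
```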
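One way to confirm that the vectorized lines in `update()` implement the derived formulas is to compare `W_C / lr` and `V_C / lr` with finite-difference gradients of the total cost $E=\frac{1}{2}\sum(Y-L_2)^2$ over the four samples. The sketch below copies `X`, `Y`, the shapes and the delta formulas from the code above; the fixed seed and the helper names `total_error` and `numerical_grad` are assumptions for illustration. Because the deltas carry $(t-y)$ rather than $(y-t)$, the code's weight changes equal $-\text{lr}$ times the gradient, i.e. batch gradient descent.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def dsigmoid(out):
    return out * (1 - out)  # derivative expressed through the sigmoid output

X = np.array([[1, 0, 0], [1, 0, 1], [1, 1, 0], [1, 1, 1]], dtype=float)
Y = np.array([[0, 1, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
V = rng.uniform(-1, 1, (3, 4))
W = rng.uniform(-1, 1, (4, 1))

def total_error(V, W):
    """E = 1/2 * sum over samples of (Y - L2)^2."""
    L2 = sigmoid(sigmoid(X @ V) @ W)
    return 0.5 * np.sum((Y.T - L2) ** 2)

def numerical_grad(f, A, eps=1e-6):
    """Central differences of f with respect to every entry of A."""
    G = np.zeros_like(A)
    for idx in np.ndindex(A.shape):
        step = np.zeros_like(A)
        step[idx] = eps
        G[idx] = (f(A + step) - f(A - step)) / (2 * eps)
    return G

# Same formulas as update(), without the learning rate
L1 = sigmoid(X @ V)
L2 = sigmoid(L1 @ W)
L2_delta = (Y.T - L2) * dsigmoid(L2)
L1_delta = (L2_delta @ W.T) * dsigmoid(L1)
grad_W = -L1.T @ L2_delta  # so W_C = -lr * grad_W
grad_V = -X.T @ L1_delta   # so V_C = -lr * grad_V

print(np.allclose(grad_W, numerical_grad(lambda A: total_error(V, A), W), atol=1e-7))
print(np.allclose(grad_V, numerical_grad(lambda A: total_error(A, W), V), atol=1e-7))
```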

References:

https://segmentfault.com/a/1190000021529971

This article is from cnblogs (博客园). Author: Kim的小破院子. When reposting, please cite the original link: https://www.cnblogs.com/kimj/p/15648012.html