数据采集第四次大作业

作业一

作业①:

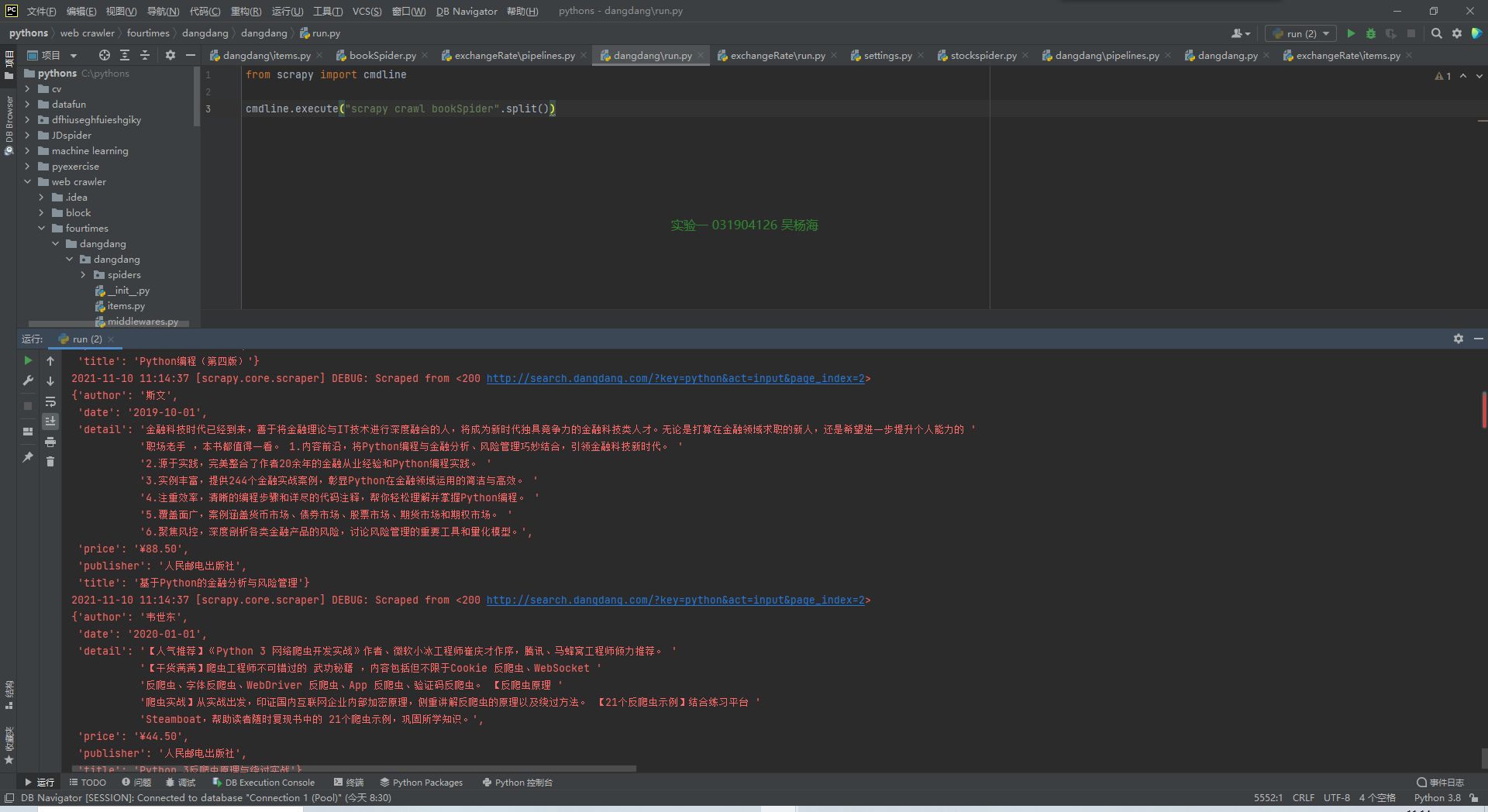

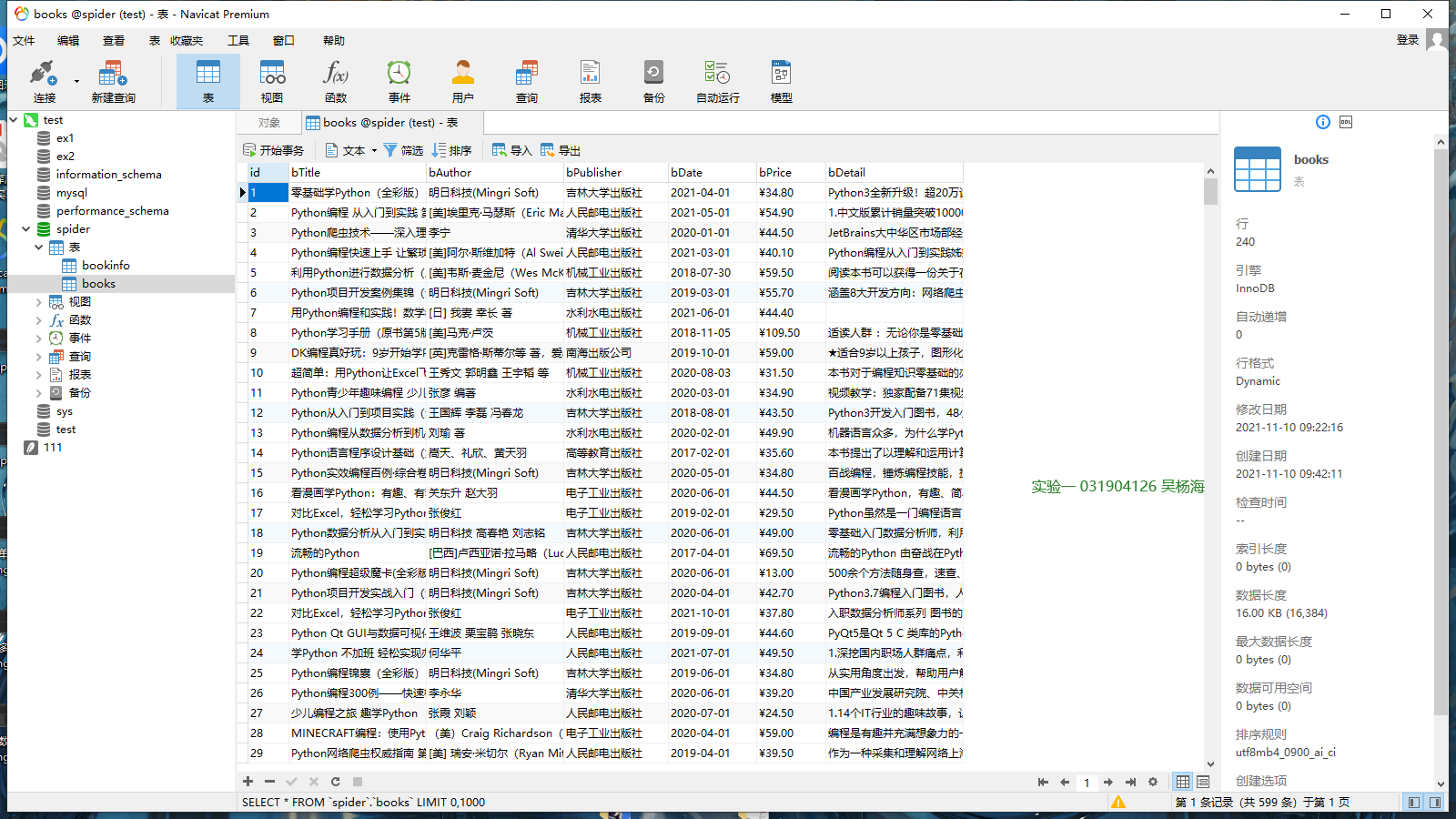

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;Scrapy+Xpath+MySQL数据库存储技术路线爬取当当网站图书数据

候选网站:http://search.dangdang.com/?key=python&act=input

关键词:学生可自由选择

结果展示:

实现过程:

思路部分:在当当网站中找到我们所需要的图书信息的xpath路径,思路非常的简单

代码部分:

爬虫主体:

class DdSpider(scrapy.Spider):

name = 'bookSpider'

keyword = 'python'

start_urls = ['http://search.dangdang.com/']

source_url = 'http://search.dangdang.com/'

header = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) '

'Chrome/57.0.2987.98 Safari/537.36 LBBROWSER'}

def parse(self, response):

try:

dammit = UnicodeDammit(response.body, ["utf-8", "gbk"])

data = dammit.unicode_markup

selector = scrapy.Selector(text=data)

lis = selector.xpath("//li['@ddt-pit'][starts-with(@class,'line')]")

for li in lis:

title = li.xpath("./a[position()=1]/@title").extract_first()

price = li.xpath("./p[@class='price']/span[@class='search_now_price']/text()").extract_first()

author = li.xpath("./p[@class='search_book_author']/span[position()=1]/a/@title").extract_first()

date = li.xpath("./p[@class='search_book_author']/span[position()=last()-1]/text()").extract_first()

publisher = li.xpath("./p[@class='search_book_author']/span[position()=last()]/a/@title").extract_first()

detail = li.xpath("./p[@class='detail']/text()").extract_first()

item = DangdangItem()

item["title"] = title.strip() if title else ""

item["author"] = author.strip() if author else ""

item["date"] = date.strip()[1:] if date else ""

item["publisher"] = publisher.strip() if publisher else ""

item["price"] = price.strip() if price else ""

item["detail"] = detail.strip() if detail else ""

yield item

# 爬取前十页内容

for i in range(1, 11):

url = DdSpider.source_url + "?key=" + DdSpider.keyword + "&act=input&page_index=" + str(i)

yield scrapy.Request(url=url, callback=self.parse)

except Exception as err:

print(err)

pipeline部分:由于本次实验涉及了mysql数据库,首先我们需要在数据库中创建好存储数据的表,在代码中创建也可以,但使用mysql的相关工具更加方便,在已经创建好表的情况之下就可以对数据直接进行插入操作了。

class DangdangPipeline(object):

def open_spider(self, spider):

print("opened")

try:

# 建立和数据库的连接

# 参数1:数据库的ip(localhost为本地ip的意思)

# 参数2:数据库端口

# 参数3,4:用户及密码

# 参数5:数据库名

# 参数6:编码格式

self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd='root', db="Spider",

charset="utf8")

# 建立游标

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

# 如果有books表就删除

self.cursor.execute("delete from books")

self.opened = True

self.count = 0

except Exception as err:

print(err)

self.opened = False

def close_spider(self, spider):

if self.opened:

# 用连接提交

self.con.commit()

# 关闭连接

self.con.close()

self.opened = False

print("closed")

print("总共爬取", self.count, "本书籍")

def process_item(self, item, spider):

try:

if self.opened:

self.cursor.execute(

"insert into books (id, bTitle, bAuthor, bPublisher, bDate, bPrice, bDetail) values (%s,%s,%s,%s,%s,%s,%s)",

(self.count+1, item["title"], item["author"], item["publisher"], item["date"], item["price"], item["detail"]))

self.count += 1

except Exception as err:

print(err)

return item

items部分:没有什么特别的地方

class DangdangItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

title = scrapy.Field()

author = scrapy.Field()

date = scrapy.Field()

publisher = scrapy.Field()

detail = scrapy.Field()

price = scrapy.Field()

心得体会:巩固了对MySQL数据库的使用方法和scrapy的使用

代码地址:https://gitee.com/kilig-seven/crawl_project/tree/master/%E7%AC%AC%E5%9B%9B%E6%AC%A1%E5%A4%A7%E4%BD%9C%E4%B8%9A

作业二

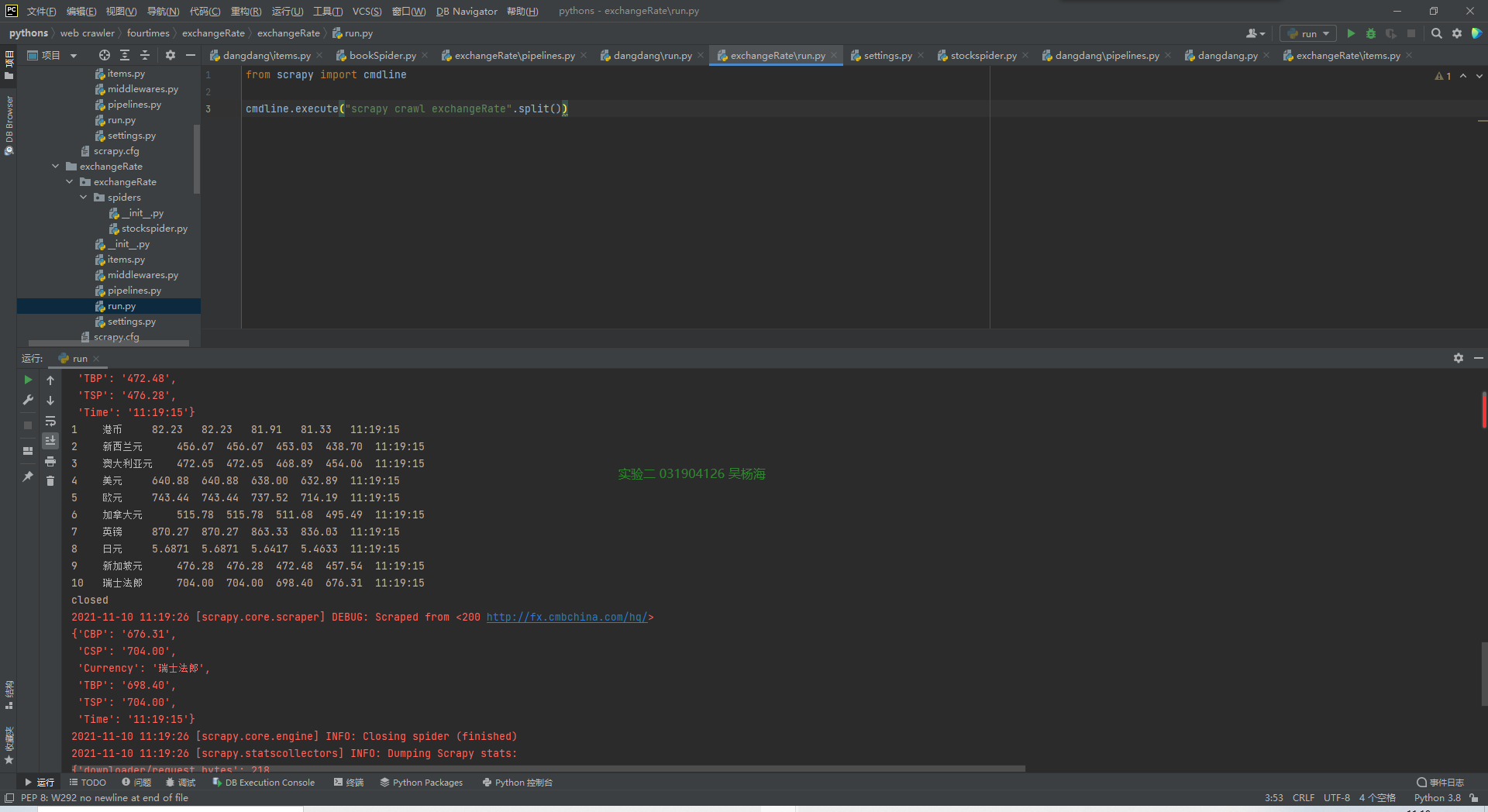

要求:熟练掌握 scrapy 中 Item、Pipeline 数据的序列化输出方法;使用scrapy框架+Xpath+MySQL数据库存储技术路线爬取外汇网站数据。

候选网站:招商银行网:http://fx.cmbchina.com/hq/

输出信息:MySQL数据库存储和输出格式

| Id | Currency | TSP | CSP | TBP | CBP | Time |

|---|---|---|---|---|---|---|

| 1 | 港币 | 86.60 | 86.60 | 86.26 | 85.65 | 15:36:30 |

| 2...... |

class StocksSpider(scrapy.Spider): name = 'exchangeRate' start_urls = ['http://fx.cmbchina.com/hq/'] source_url = 'http://fx.cmbchina.com/hq/' header = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) ' 'Chrome/57.0.2987.98 Safari/537.36 LBBROWSER'} def start_request(self): url = StocksSpider.source_url yield scrapy.Request(url=url, callback=self.parse) def parse(self, response): try: dammit = UnicodeDammit(response.body, ["utf-8", "gbk"]) data = dammit.unicode_markup selector = scrapy.Selector(text=data) items = selector.xpath("//div[@class='box hq']/div[@id='realRateInfo']/table[@align='center']") count = 2 while count < 12: Currency = items.xpath("//tr[position()="+str(count)+"]/td[@class='fontbold']/text()").extract_first() TSP = items.xpath("//tr[position()="+str(count)+"]/td[position()=4]/text()").extract_first() CSP = items.xpath("//tr[position()="+str(count)+"]/td[position()=5]/text()").extract_first() TBP = items.xpath("//tr[position()="+str(count)+"]/td[position()=6]/text()").extract_first() CBP = items.xpath("//tr[position()="+str(count)+"]/td[position()=7]/text()").extract_first() Time = items.xpath("//tr[position()="+str(count)+"]/td[position()=8]/text()").extract_first() item = ExchangeRateItem() item["Currency"] = Currency.strip() item["TSP"] = TSP.strip() item["CSP"] = CSP.strip() item["TBP"] = TBP.strip() item["CBP"] = CBP.strip() item["Time"] = Time.strip() count += 1 yield item except Exception as err: print(err)

pipeline部分:

class ExchangeratePipeline(object): def open_spider(self, spider): print("opened") try: self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd='root', db="spider", charset="utf8") self.cursor = self.con.cursor(pymysql.cursors.DictCursor) self.cursor.execute("delete from waihui") self.opened = True except Exception as err: print(err) self.opened = False def close_spider(self, spider): if self.opened: self.con.commit() self.con.close() self.opened = False print("closed") count = 0 print("序号\t", "交易币\t", "现汇卖出价\t", "现钞卖出价\t ", "现汇买入价\t ", "现钞买入价\t", "时间\t") def process_item(self, item, spider): try: self.count += 1 print(str(self.count) + "\t", item["Currency"] + "\t", str(item["TSP"]) + "\t", str(item["CSP"]) + "\t", str(item["TBP"]) + "\t", str(item["CBP"]) + "\t", item["Time"]) if self.opened: self.cursor.execute( "insert into waihui (wId, wCurrency, wTSP, wCSP, wTBP, wCBP, wTime) values (%s,%s,%s,%s,%s,%s,%s)", (str(self.count), item["Currency"], str(item["TSP"]), str(item["CSP"]), str(item["TBP"]), str(item["CBP"]), str(item["Time"]))) except Exception as err: print(err) return item

items部分:

class ExchangeRateItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() Id = scrapy.Field() Currency = scrapy.Field() TSP = scrapy.Field() CSP = scrapy.Field() TBP = scrapy.Field() CBP = scrapy.Field() Time = scrapy.Field()

代码地址:https://gitee.com/kilig-seven/crawl_project/tree/master/%E7%AC%AC%E5%9B%9B%E6%AC%A1%E5%A4%A7%E4%BD%9C%E4%B8%9A

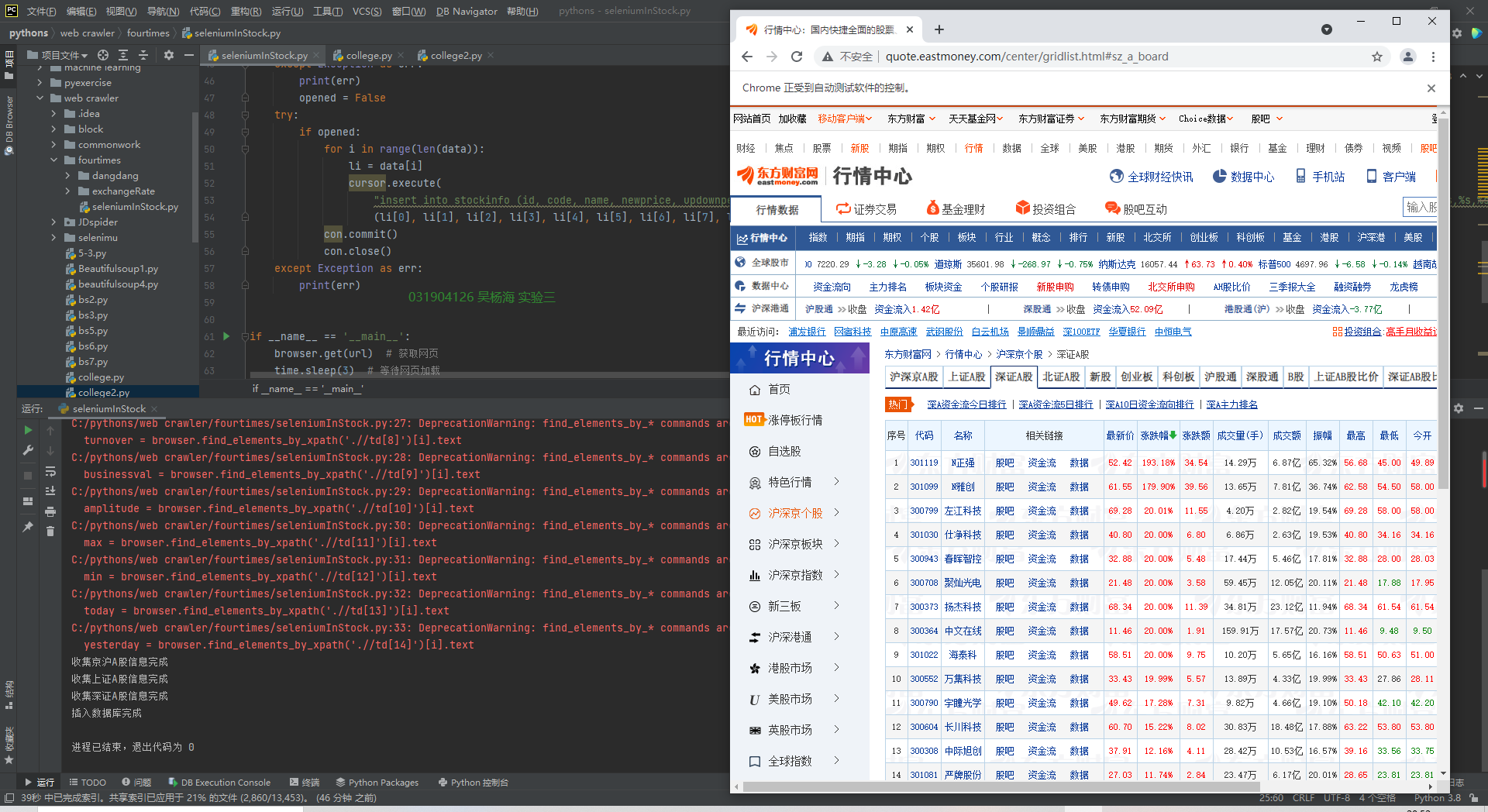

作业三

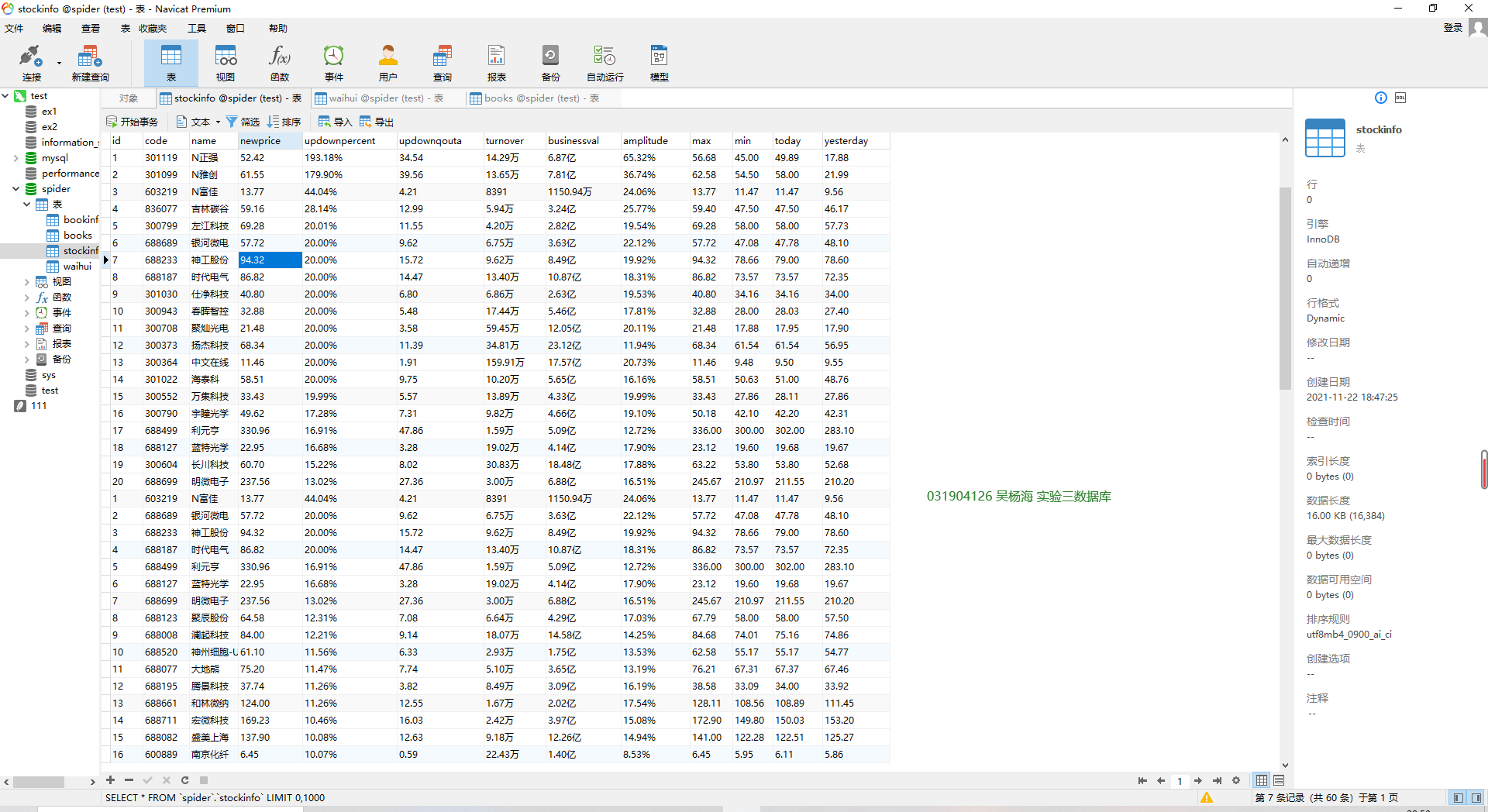

要求:熟练掌握 Selenium 查找HTML元素、爬取Ajax网页数据、等待HTML元素等内容;使用Selenium框架+ MySQL数据库存储技术路线爬取“沪深A股”、“上证A股”、“深证A股”3个板块的股票数据信息。

候选网站:东方财富:http://quote.eastmoney.com/center/gridlist.html#hs_a_board

输出信息:MySQL数据库存储和输出格式如下,表头应是英文命名例如:序号id,股票代码:bStockNo……,由同学们自行定义设计表头:

def get_data(): global data try: tr = browser.find_elements_by_xpath('//*[@id="table_wrapper-table"]/tbody/tr') for i in range(len(tr)): id = browser.find_elements_by_xpath('.//td[position()=1]')[i].text code = browser.find_elements_by_xpath('.//td[2]')[i].text name = browser.find_elements_by_xpath('.//td[3]')[i].text newprice = browser.find_elements_by_xpath('.//td[5]')[i].text updownpercent = browser.find_elements_by_xpath('.//td[6]')[i].text updownqouta = browser.find_elements_by_xpath('.//td[7]')[i].text turnover = browser.find_elements_by_xpath('.//td[8]')[i].text businessval = browser.find_elements_by_xpath('.//td[9]')[i].text amplitude = browser.find_elements_by_xpath('.//td[10]')[i].text max = browser.find_elements_by_xpath('.//td[11]')[i].text min = browser.find_elements_by_xpath('.//td[12]')[i].text today = browser.find_elements_by_xpath('.//td[13]')[i].text yesterday = browser.find_elements_by_xpath('.//td[14]')[i].text data.append([id, code, name, newprice, updownpercent, updownqouta, turnover, businessval, amplitude, max, min, today, yesterday]) except TimeoutError: get_data()

insert_database()函数,用于将数据插入数据库当中

def insert_database(): try: con = pymysql.connect(host='localhost', port=3306, user='root', password='root', db='spider', charset='utf8') # 连接数据库,事先已经创建好表 cursor = con.cursor(pymysql.cursors.DictCursor) cursor.execute("delete from stockinfo") opened = True except Exception as err: print(err) opened = False try: if opened: for i in range(len(data)): li = data[i] cursor.execute( "insert into stockinfo (id, code, name, newprice, updownpercent, updownqouta, turnover, businessval, amplitude, max, min, today, yesterday) values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)", (li[0], li[1], li[2], li[3], li[4], li[5], li[6], li[7], li[8], li[9], li[10], li[11], li[12])) con.commit() con.close() except Exception as err: print(err)

翻页部分:利用selenium的功能加载按钮模拟点击进行页面的跳转

button1 = wait.until(EC.element_to_be_clickable((By.XPATH, '//*[@id="nav_sh_a_board"]/a'))) # 上证A股翻页按钮 button1.click() # 点击翻页 time.sleep(3) # 等待网页加载

心得体会:数据库在进行完插入操作后一定要记得将数据库关闭并提交,否则数据怎么都插入不进行,血与泪的教训。

代码地址:https://gitee.com/kilig-seven/crawl_project/tree/master/%E7%AC%AC%E5%9B%9B%E6%AC%A1%E5%A4%A7%E4%BD%9C%E4%B8%9A