Installing KubeSphere on Kubernetes

1. Install Docker

sudo yum remove docker*

sudo yum install -y yum-utils

# Configure the Docker yum repository

sudo yum-config-manager \
--add-repo \
http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# List the Docker versions available in the current repository

yum list docker-ce --showduplicates

# Install a specific version

sudo yum install -y docker-ce-20.10.8 docker-ce-cli-20.10.8 containerd.io-1.4.8

# Start Docker and enable it at boot

systemctl enable docker --now

# Docker daemon configuration (registry mirror, systemd cgroup driver, log rotation)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://82m9ar63.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker
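
The `exec-opts` entry matters because kubelets set up by kubeadm v1.22 and later default to the systemd cgroup driver, and Docker has to use the same one. As a minimal sketch, the helper below checks that a daemon.json file carries that setting. The name `daemon_json_uses_systemd` is invented for this example and is not a Docker command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given daemon.json selects the
# systemd cgroup driver through exec-opts. It only greps the file and
# does not validate the JSON syntax.
daemon_json_uses_systemd() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -q '"native.cgroupdriver=systemd"' "$file"
}

# Example:
#   daemon_json_uses_systemd /etc/docker/daemon.json && echo "cgroup driver OK"
```

After Docker has been restarted, `docker info --format '{{.CgroupDriver}}'` should report `systemd`.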

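2. Install Kubernetes

2.1 Base environment (iptables)

All machines must be able to reach each other over their internal IPs.

Each machine needs its own hostname; localhost cannot be used.

# Set each machine's own hostname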

hostnamectl set-hostname kht121

# Set SELinux to permissive mode (effectively disabling it)

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

# Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab
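
Note that the `sed` expression above prefixes every line containing "swap", including lines that are already commented out, so running it twice stacks `#` characters. A sketch of an idempotent variant follows, assuming a `sed` that supports `-E`; `comment_out_swap` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file,
# skipping lines that are already comments so repeated runs are harmless.
comment_out_swap() {
  local file="${1:-/etc/fstab}" tmp
  tmp="$(mktemp)" || return 1
  # Match only lines whose fields include a standalone "swap" word.
  if sed -E '/^[[:space:]]*#/!s/^.*[[:space:]]swap[[:space:]].*$/#&/' "$file" > "$tmp"; then
    cat "$tmp" > "$file"   # overwrite in place, keeping the file's permissions
  fi
  rm -f "$tmp"
}

# Example (as root):
#   swapoff -a && comment_out_swap /etc/fstab
```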

# Allow iptables to see bridged traffic

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

br_netfilter

EOF

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sudo sysctl --system
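
To confirm that a drop-in file really sets the expected keys before relying on `sysctl --system`, something like the following could be used. `sysctl_conf_enables` is a hypothetical helper written for this example; it only parses the file and does not query the kernel.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if KEY is set to 1 in a sysctl.d-style FILE.
sysctl_conf_enables() {
  local file="$1" key="$2"
  awk -F= -v key="$key" '
    /^[[:space:]]*[#;]/ { next }        # skip comment lines
    {
      k = $1; v = $2
      gsub(/[[:space:]]/, "", k)
      gsub(/[[:space:]]/, "", v)
      if (k == key) last = v            # a later assignment overrides an earlier one
    }
    END { if (last == "1") exit 0; exit 1 }
  ' "$file"
}

# Example:
#   sysctl_conf_enables /etc/sysctl.d/k8s.conf net.bridge.bridge-nf-call-iptables
```

At runtime, `sysctl -n net.bridge.bridge-nf-call-iptables` prints the live value; the key exists only while the `br_netfilter` module is loaded.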

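2.1 Base environment (ipvs)

# Disable the iptables and firewalld services

# Kubernetes and Docker generate a large number of iptables rules at runtime; to keep the system's own rules from getting mixed up with them, turn the system services off

# 1. Stop and disable firewalld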

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

# 2. Stop and disable the iptables service

[root@master ~]# systemctl stop iptables

[root@master ~]# systemctl disable iptables

# Modify the Linux kernel parameters to enable bridge filtering and IP forwarding

# Edit /etc/sysctl.d/kubernetes.conf and add the following settings:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

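# Load the bridge filtering module first (the net.bridge.* keys exist only once it is loaded)

[root@master ~]# modprobe br_netfilter

# Reload the configuration (a bare "sysctl -p" reads only /etc/sysctl.conf, so use --system to pick up /etc/sysctl.d/)

[root@master ~]# sysctl --system

# Check that the bridge filtering module is loaded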

[root@master ~]# lsmod | grep br_netfilter

[root@kht141 ~]# lsmod | grep br_netfilter

br_netfilter 22256 0

bridge 151336 1 br_netfilter

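# Configure ipvs

# Kubernetes Services support two proxy modes: one based on iptables and one based on ipvs

# Of the two, ipvs performs noticeably better, but its kernel modules have to be loaded manually before it can be used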

# 1 Install ipset and ipvsadm

[root@master ~]# yum install ipset ipvsadm -y

# 2 Write the modules that need to be loaded into a script file

# (From kernel 4.19 on, nf_conntrack_ipv4 has been merged into nf_conntrack; on 4.18 and earlier use nf_conntrack_ipv4)

[root@master ~]# cat <<EOF > /etc/sysconfig/modules/ipvs.modules

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

# 3 Make the script file executable

[root@master ~]# chmod +x /etc/sysconfig/modules/ipvs.modules

# 4 Run the script file

[root@master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modules

# 5 Check that the modules were loaded

[root@master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4
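
The kernel-version rule noted in step 2 can be scripted instead of edited by hand. The sketch below picks the conntrack module name from a kernel release string; `conntrack_module_for_kernel` is a hypothetical helper, and it applies the 4.19 cutoff literally. Distribution kernels with backports may not follow that cutoff, so treat the result as a starting point.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the conntrack module name for a kernel release
# (defaults to the running kernel). Rule assumed from the note above:
# 4.19 and later -> nf_conntrack, earlier -> nf_conntrack_ipv4.
conntrack_module_for_kernel() {
  local release="${1:-$(uname -r)}"
  local major rest minor
  major="${release%%.*}"          # text before the first dot
  rest="${release#*.}"            # text after the first dot
  minor="${rest%%.*}"             # second version component
  minor="${minor%%[!0-9]*}"       # drop any non-numeric suffix such as "-rc1"
  if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "${minor:-0}" -ge 19 ]; }; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# Example:
#   modprobe -- "$(conntrack_module_for_kernel)"
```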

Reboot, then verify

[root@kht121 calico]# ipvsadm -ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

2.2 Install kubelet, kubeadm, and kubectl

# Configure the Kubernetes yum repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
   http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# List the kubelet versions available in the current repository

yum list kubelet --showduplicates

# Install kubelet, kubeadm, and kubectl

sudo yum install -y kubelet-1.23.10 kubeadm-1.23.10 kubectl-1.23.10

# Enable and start kubelet

sudo systemctl enable --now kubelet

# Before installing the Kubernetes cluster, the images it needs must be available; list them with the command below

[root@kht121 ~]# kubeadm config images list

kubeadm config images list --kubernetes-version=1.23.10

[root@kht121 ~]# kubeadm config images list

I0823 19:19:17.662932 23929 version.go:255] remote version is much newer: v1.24.4; falling back to: stable-1.23

k8s.gcr.io/kube-apiserver:v1.23.10

k8s.gcr.io/kube-controller-manager:v1.23.10

k8s.gcr.io/kube-scheduler:v1.23.10

k8s.gcr.io/kube-proxy:v1.23.10

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

# Add the master's hostname mapping on every machine

echo "192.168.2.121 kht121" >> /etc/hosts
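
Because `>>` appends unconditionally, re-running the command leaves duplicate lines in /etc/hosts. A minimal idempotent sketch follows; `ensure_hosts_entry` is a hypothetical helper name, and the optional third argument (the file path) exists mainly so the function can be tried on a scratch file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file only if no line
# already maps that IP to that name.
ensure_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if awk -v ip="$ip" -v name="$name" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }
     ' "$file" 2>/dev/null; then
    return 0                      # entry already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root, on every machine):
#   ensure_hosts_entry 192.168.2.121 kht121
```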

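2.3 Initialize the master node

### Note: change the hostname and IP to match your own master. The pod CIDR below overlaps the 192.168.2.0/24 node network used in these notes; a non-overlapping range (set consistently in calico.yaml) is generally safer.

kubeadm init \
--apiserver-advertise-address=192.168.2.121 \
--control-plane-endpoint=kht121 \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version v1.23.10 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=192.168.0.0/16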

2.4 Record the key information

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join kht121:6443 --token kxzyp8.nq7pax6gy4l40vy3 \

--discovery-token-ca-cert-hash sha256:54abb11284c598276a04a88a114496c8dbd67592dc046c737ff9a0f32dbdc98d \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join kht121:6443 --token kxzyp8.nq7pax6gy4l40vy3 \

--discovery-token-ca-cert-hash sha256:54abb11284c598276a04a88a114496c8dbd67592dc046c737ff9a0f32dbdc98d

2.5 Run the printed commands

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Check the cluster status

[root@kht121 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kht121 NotReady control-plane,master 4m58s v1.23.10

# Generate a new token and print the matching join command

kubeadm token create --print-join-command
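
Bootstrap tokens created by kubeadm expire after 24 hours by default, so the join command recorded in 2.4 may no longer work by the time a node is added. Tokens have the form `[a-z0-9]{6}.[a-z0-9]{16}`; the hypothetical helper below (not a kubeadm command) sanity-checks a token string before it is pasted into a join command.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the argument looks like a kubeadm
# bootstrap token (6 chars, a dot, 16 chars, all lowercase alphanumerics).
# It checks the format only, not whether the token is still valid.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Example:
#   is_bootstrap_token "kxzyp8.nq7pax6gy4l40vy3" && echo "format OK"
```

`kubeadm token list` on the master shows the existing tokens together with their remaining TTL.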

2.6 Install the Calico plugin on the master node

curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

# After Calico is applied, wait a while and then check that the plugin pods are running

[root@kht121 kht]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-67c466d997-dgjl6 1/1 Running 0 5m9s

kube-system calico-node-wbhws 1/1 Running 0 5m9s

kube-system coredns-65c54cc984-lxwwv 1/1 Running 0 12m

kube-system coredns-65c54cc984-psfp5 1/1 Running 0 12m

kube-system etcd-kht121 1/1 Running 2 13m

kube-system kube-apiserver-kht121 1/1 Running 2 13m

kube-system kube-controller-manager-kht121 1/1 Running 2 13m

kube-system kube-proxy-kcwm7 1/1 Running 0 12m

kube-system kube-scheduler-kht121 1/1 Running 2 13m

[root@kht121 kht]# kubectl get node

NAME STATUS ROLES AGE VERSION

kht121 Ready control-plane,master 13m v1.23.10
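
The node moves from NotReady to Ready only once the network plugin is running. As a sketch of how that check could be scripted, the hypothetical helper `not_ready_nodes` below reads `kubectl get nodes` output on stdin and prints the names of nodes whose STATUS column is not exactly `Ready`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: filter `kubectl get nodes` output (read from stdin)
# down to the names of nodes that are not plainly Ready. Combined states
# such as "Ready,SchedulingDisabled" are reported as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get nodes | not_ready_nodes
```

kubectl can also block until the condition holds: `kubectl wait --for=condition=Ready node --all --timeout=300s`.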

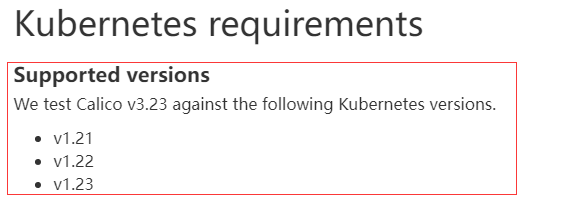

Check which Kubernetes versions a given Calico release supports

Download the YAML file for that specific version

3. KubeSphere prerequisites

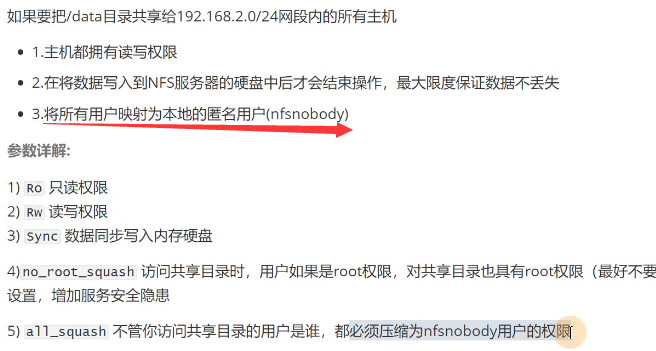

3.1 NFS file system

3.1.1 Install nfs-server

# On every machine

yum install -y nfs-utils

# Run the following on the master

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

# On Ubuntu (install nfs-kernel-server; an example /etc/exports entry follows)

sudo apt install nfs-kernel-server -y

/work/nfs *(rw,sync,no_subtree_check,no_root_squash)

# Run the following commands to create the shared directory and start the NFS service

mkdir -p /nfs/data

# Run on the master

systemctl enable rpcbind

systemctl enable nfs-server

systemctl start rpcbind

systemctl start nfs-server

# Apply the configuration

exportfs -r

# Check that the configuration took effect

exportfs

# Output like the following means it worked

[root@kht121 ~]# exportfs

/nfs/data <world>

# Reload the exports file without restarting the service

[root@kht121 etc]# exportfs -arv

exporting 192.168.2.0/24:/nfs/data

# Check the listening ports

[root@kht121 etc]# netstat -ntlp|grep rpc

tcp 0 0 0.0.0.0:41327 0.0.0.0:* LISTEN 58670/rpc.statd

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 58608/rpcbind

tcp 0 0 0.0.0.0:20048 0.0.0.0:* LISTEN 58678/rpc.mountd

tcp6 0 0 :::111 :::* LISTEN 58608/rpcbind

tcp6 0 0 :::20048 :::* LISTEN 58678/rpc.mountd

tcp6 0 0 :::54517 :::* LISTEN 58670/rpc.statd

# Check the exported shares

[root@kht121 etc]# cat /var/lib/nfs/etab

/nfs/data 192.168.2.0/24(rw,sync,wdelay,hide,nocrossmnt,secure,no_root_squash,no_all_squash,no_subtree_check,secure_locks,acl,no_pnfs,anonuid=65534,anongid=65534,sec=sys,rw,secure,no_root_squash,no_all_squash)

# Set ownership on the server side; otherwise the directory cannot be shared

[root@kht121 data]# chown -R nfsnobody:nfsnobody /nfs/data/

3.1.2 Configure nfs-client (optional, run on the worker nodes)

# Install and start the same services

yum install -y nfs-utils

systemctl enable rpcbind

systemctl enable nfs-server

systemctl start rpcbind

systemctl start nfs-server

# Use showmount -e to list the NFS exports that the remote server offers for mounting

[root@kht122 data]# showmount -e 192.168.2.121

Export list for 192.168.2.121:

/nfs/data 192.168.2.0/24

# Create the mount point directory (its path may differ from the server's)

mkdir -p /nfs/data1

# Mount the share, then test that files are visible on both sides

mount -t nfs 192.168.2.121:/nfs/data /nfs/data1

# Mount automatically when the client reboots: add the line below to /etc/fstab

vim /etc/fstab

192.168.2.121:/nfs/data /nfs/data1 nfs defaults 0 0

# Check that the mount succeeded

[root@kht122 data1]# df -h

192.168.2.121:/nfs/data 17G 12G 5.6G 68% /nfs/data1

# Unmount

umount /nfs/data1

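3.1.3 Install metrics-server

3.1.4 Configure default storage

Configure a default StorageClass for dynamic provisioning. Create a file named sc.yaml with the content listed in the sc.yaml part below,

and change the IP address to match your own NFS server.

Apply it: kubectl apply -f sc.yaml

Check the result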

[root@kht121 test]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-storage (default) k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 11s

# Check the corresponding pod

[root@kht121 ~]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

default nfs-client-provisioner-5b7f5cb7c8-brnbs 1/1 Running 1 (30s ago) 14m

kube-system calico-kube-controllers-67c466d997-dgjl6 1/1 Running 2 (3m34s ago) 14h

kube-system calico-node-js7ms 1/1 Running 1 (76m ago) 87m

kube-system calico-node-wbhws 1/1 Running 2 (3m34s ago) 14h

kube-system coredns-65c54cc984-lxwwv 1/1 Running 2 (3m29s ago) 14h

kube-system coredns-65c54cc984-psfp5 1/1 Running 2 (3m29s ago) 14h

kube-system etcd-kht121 1/1 Running 4 (3m34s ago) 14h

kube-system kube-apiserver-kht121 1/1 Running 4 (3m24s ago) 14h

kube-system kube-controller-manager-kht121 1/1 Running 4 (3m34s ago) 14h

kube-system kube-proxy-kcwm7 1/1 Running 2 (3m34s ago) 14h

kube-system kube-proxy-wkmj6 1/1 Running 1 (76m ago) 91m

kube-system kube-scheduler-kht121 1/1 Running 4 (3m34s ago) 14h

sc.yaml test

## Creates a StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to keep a backup of the PV's contents when the PV is deleted

---

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.2.121  ## address of your own NFS server
            - name: NFS_PATH
              value: /nfs/data  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.2.121
            path: /nfs/data

---

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
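
One way to confirm that dynamic provisioning works is to create a small PersistentVolumeClaim against the `nfs-storage` class and check that it becomes Bound. The sketch below writes such a manifest; the claim name `nfs-test-pvc`, the 200Mi size, and the helper name `write_test_pvc` are assumptions chosen for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a minimal test PVC manifest that requests
# storage from the nfs-storage StorageClass defined in sc.yaml.
write_test_pvc() {
  local file="${1:-pvc-test.yaml}"
  cat > "$file" <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-test-pvc        # illustrative name, not from the original notes
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi        # illustrative size
  storageClassName: nfs-storage
EOF
}

# Example:
#   write_test_pvc pvc-test.yaml
#   kubectl apply -f pvc-test.yaml
#   kubectl get pvc nfs-test-pvc     # STATUS should become Bound
```

If the claim stays Pending, `kubectl describe pvc nfs-test-pvc` and the provisioner pod's logs are the first places to look.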

4. Install KubeSphere

# Download the core files, modify cluster-configuration.yaml as needed, then apply them

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.3.0/cluster-configuration.yaml

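# Watch the installation progress (the selector matches both the older ks-install and the newer ks-installer pod label)

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f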
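
The installer log is long, and ks-installer normally ends it with a "Welcome to KubeSphere!" banner. Treating that string as the completion marker is an assumption of this sketch, and `installer_finished` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read installer log text on stdin and succeed once
# the completion banner (assumed to contain this exact phrase) appears.
installer_finished() {
  grep -q 'Welcome to KubeSphere!'
}

# Example, assuming the installer Deployment is named ks-installer:
#   kubectl logs -n kubesphere-system deploy/ks-installer | installer_finished \
#     && echo "installation finished"
```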

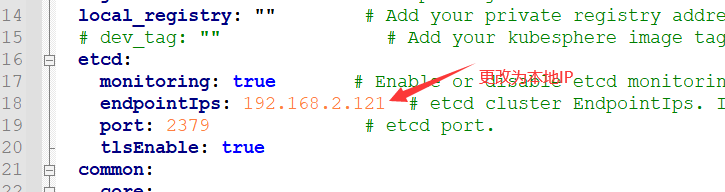

# Fix the "etcd monitoring certificate not found" problem

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

Access port 30880 on any machine

Account: admin

Password: P@88w0rd