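prometheus-operator: A Detailed Summary (One-Command Install with Helm)

1. Introducing prometheus-operator

2. Reviewing the RBAC authorization

3. Installing prometheus-operator with Helm

4. Configuring monitoring of Kubernetes components

5. Adding a new data source to Grafana

6. Monitoring MySQL

7. Alertmanager configuration

Finally: uninstalling prometheus-operator

Changes in newer versions

1. Overview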

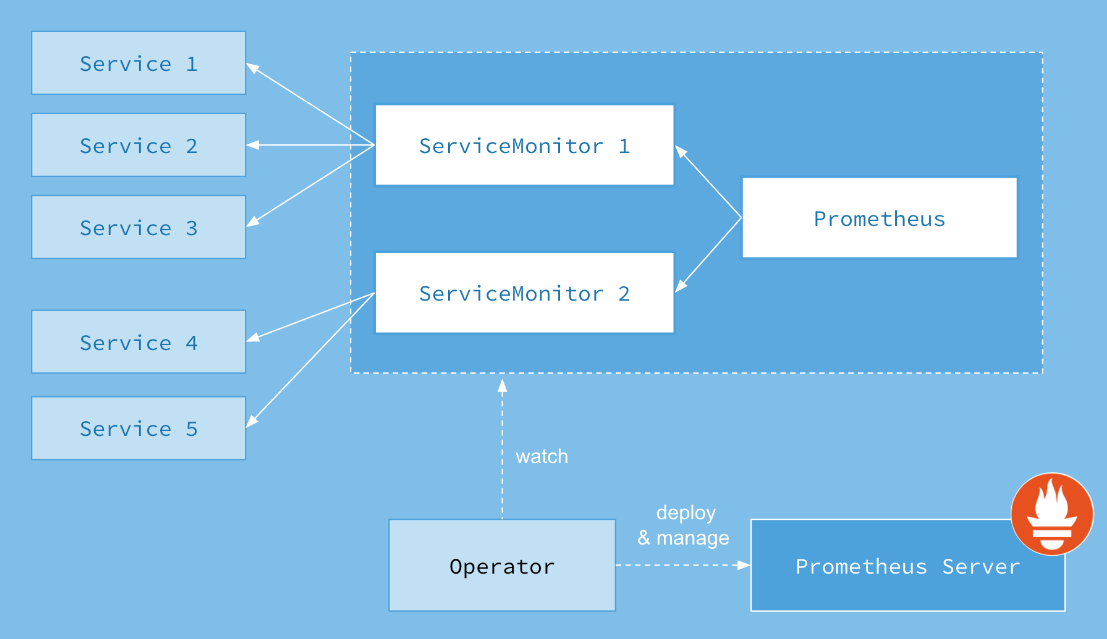

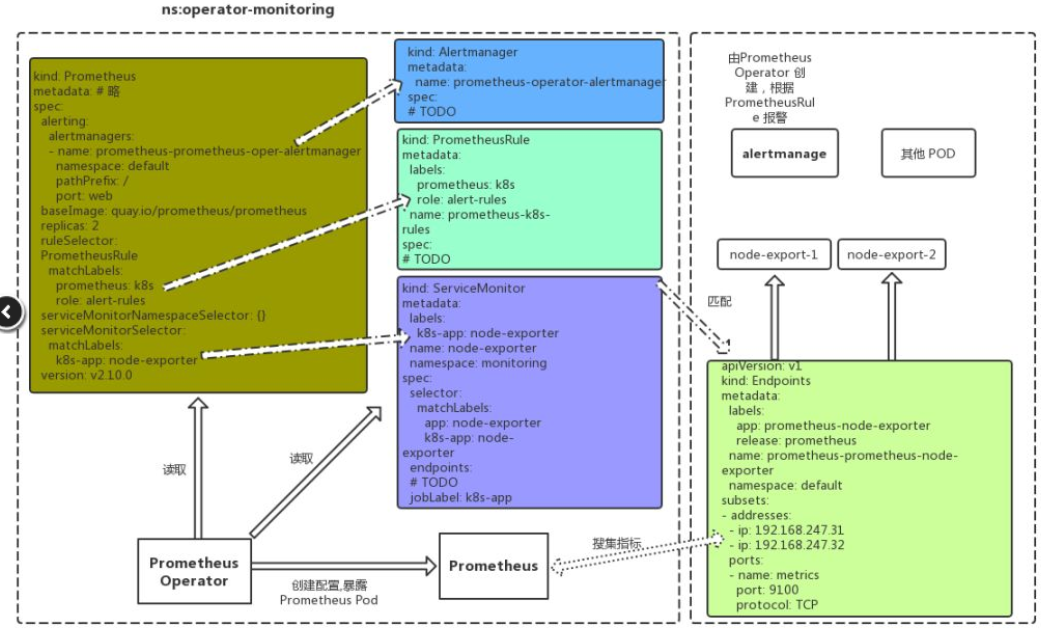

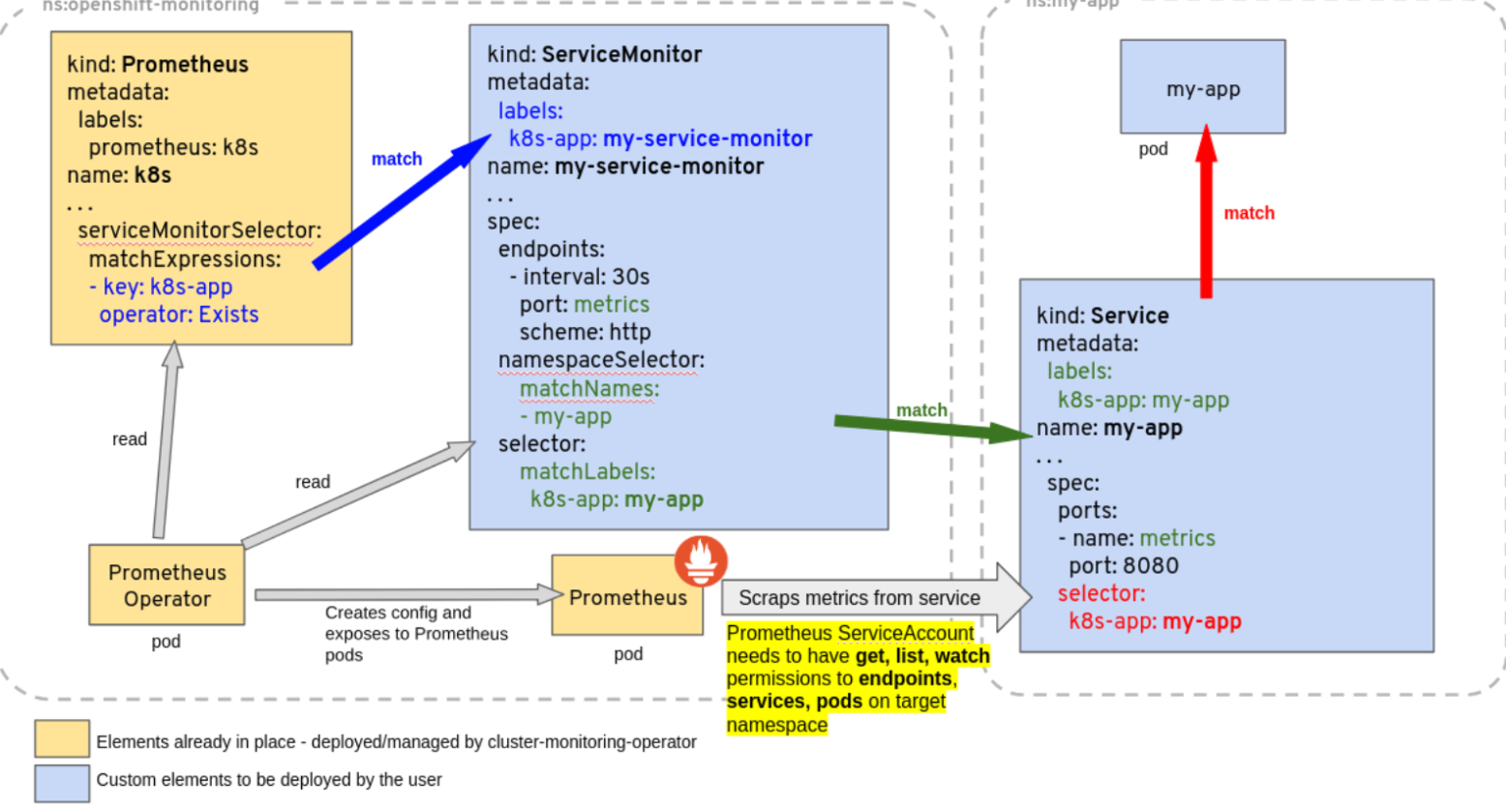

The Prometheus resource declaratively describes the desired state of a Prometheus deployment, while a ServiceMonitor describes the set of targets that Prometheus monitors.

Service

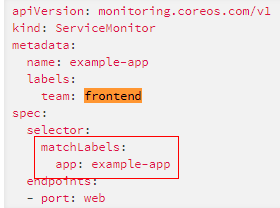

ServiceMonitor

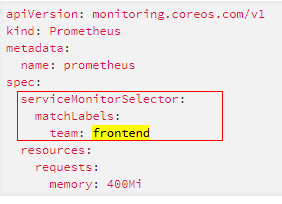

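A ServiceMonitor matches Services through its selector. Note the team: frontend label here; it comes up again below. Endpoints are selected by label, which is how services are discovered dynamically.

port: web  # corresponds to the name of the Service port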
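To make the selector relationship concrete, here is a minimal sketch that writes a Service and a matching ServiceMonitor to a local file. Only the team: frontend label and the port name web come from this post; the name example-app, the port number 8080, and the file name are illustrative assumptions.

```shell
# Write a Service and a ServiceMonitor that selects it (illustrative names).
cat > example-servicemonitor.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: example-app            # hypothetical application name
  labels:
    app: example-app           # label the ServiceMonitor selects on
spec:
  selector:
    app: example-app
  ports:
  - name: web                  # port name referenced by the ServiceMonitor
    port: 8080                 # assumed port number
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: example-app
  labels:
    team: frontend             # label the Prometheus resource selects on
spec:
  selector:
    matchLabels:
      app: example-app         # must match the Service labels
  endpoints:
  - port: web                  # must match the Service port name
EOF

# To create both objects on a cluster where the operator CRDs are installed:
#   kubectl apply -f example-servicemonitor.yaml
```

The ServiceMonitor never names the Service directly; the link is the app label, and the scrape port is resolved by name rather than by number.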

Prometheus

The Prometheus resource matches the labels of ServiceMonitors through matchLabels.

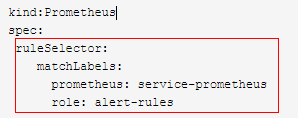

Rule binding: ruleSelector (matching the label prometheus: service-prometheus) selects the PrometheusRule objects whose labels include prometheus: service-prometheus.
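The two selectors can be sketched in a single Prometheus resource. This is an assumption-laden example rather than the manifest from the screenshot: the resource name service-prometheus and the ServiceAccount prometheus are borrowed from other sections of this post, and the file name is arbitrary.

```shell
# Write a Prometheus resource that picks up ServiceMonitors and rules by label.
cat > service-prometheus.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  name: service-prometheus
  labels:
    prometheus: service-prometheus
spec:
  serviceAccountName: prometheus      # ServiceAccount from section 2.1
  serviceMonitorSelector:
    matchLabels:
      team: frontend                  # selects ServiceMonitors labeled team: frontend
  ruleSelector:
    matchLabels:
      prometheus: service-prometheus  # selects PrometheusRule objects with this label
EOF

# To create it on a cluster:
#   kubectl apply -f service-prometheus.yaml
```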

PrometheusRule

Rule configuration
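As an illustration of what such a rule object can look like, the sketch below writes a PrometheusRule carrying the prometheus: service-prometheus label mentioned above. The rule name, alert name, and expression are hypothetical examples, not the rules used in this post.

```shell
# Write a PrometheusRule that a ruleSelector on prometheus: service-prometheus would select.
cat > example-rules.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: example-rules                 # hypothetical name
  labels:
    prometheus: service-prometheus    # label matched by ruleSelector
spec:
  groups:
  - name: example.rules
    rules:
    - alert: TargetDown               # hypothetical alert
      expr: up == 0
      for: 5m
      labels:
        severity: warning
      annotations:
        summary: "Target {{ $labels.job }} is down"
EOF

# To create it on a cluster:
#   kubectl apply -f example-rules.yaml
```

The heredoc delimiter is quoted so that the shell leaves `$labels` untouched for Prometheus to template.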

With the architecture above in place, the frontend team can create new ServiceMonitors and Services, which allows Prometheus to be reconfigured dynamically.

Alertmanager

    apiVersion: monitoring.coreos.com/v1
    kind: Alertmanager
    metadata:
      generation: 1
      labels:
        app: prometheus-operator-alertmanager
        chart: prometheus-operator-0.1.27
        heritage: Tiller
        release: my-release
      name: my-release-prometheus-oper-alertmanager
      namespace: default
    spec:
      baseImage: quay.io/prometheus/alertmanager
      externalUrl: http://my-release-prometheus-oper-alertmanager.default:9093
      listenLocal: false
      logLevel: info
      paused: false
      replicas: 1
      retention: 120h
      routePrefix: /
      serviceAccountName: my-release-prometheus-oper-alertmanager
      version: v0.15.2

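2. Reviewing the RBAC authorization (nothing below needs to be configured with the default install)

If RBAC authorization is enabled, RBAC rules must be created for both prometheus and prometheus-operator. For prometheus-operator, a ClusterRole and a ClusterRoleBinding are created.

2.1 Granting permissions to the prometheus ServiceAccount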

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: prometheus
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRole
    metadata:
      name: prometheus
    rules:
    - apiGroups: [""]
      resources:
      - nodes
      - services
      - endpoints
      - pods
      verbs: ["get", "list", "watch"]
    - apiGroups: [""]
      resources:
      - configmaps
      verbs: ["get"]
    - nonResourceURLs: ["/metrics"]
      verbs: ["get"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: prometheus
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: prometheus
    subjects:
    - kind: ServiceAccount
      name: prometheus
      namespace: default

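2.2 Granting permissions to the prometheus-operator ServiceAccount. See the official documentation for details; the manifests are not reproduced here.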

https://coreos.com/operators/prometheus/docs/latest/user-guides/getting-started.html

3. Installing prometheus-operator with Helm

Official GitHub link

https://github.com/helm/charts/tree/master/stable/prometheus-operator

Link for the newer version

https://github.com/prometheus-community/helm-charts/tree/main/charts/kube-prometheus-stack

Install command

$ helm install --name my-release stable/prometheus-operator

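Installing with specific parameters, for example changing the Prometheus Service type from the default ClusterIP to NodePort. (The prometheus-operator Service manifest in the official documentation sets clusterIP: None, so it cannot be changed directly; the workaround is to deploy first and then switch it to NodePort with kubectl apply -f service.yaml.)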

$ helm install --name my-release stable/prometheus-operator --set prometheus.service.type=NodePort --set prometheus.service.nodePort=30090

Or install with specific YAML values files

$ helm install --name my-release stable/prometheus-operator -f values1.yaml,values2.yaml
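For reference, the NodePort settings passed with --set above can equally live in a values file. The sketch below writes one; the file name values-nodeport.yaml is an arbitrary choice, while the keys mirror the --set flags shown earlier.

```shell
# Values file equivalent to:
#   --set prometheus.service.type=NodePort --set prometheus.service.nodePort=30090
cat > values-nodeport.yaml <<'EOF'
prometheus:
  service:
    type: NodePort
    nodePort: 30090
EOF

# Install with the values file (Helm 2 syntax, as used in this post):
#   helm install --name my-release stable/prometheus-operator -f values-nodeport.yaml
```

Keeping overrides in a file makes them easier to review and reuse than a long list of --set flags.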

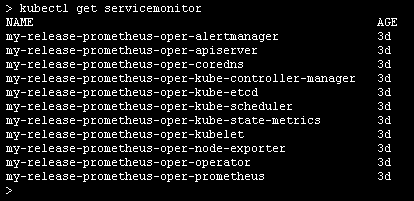

4. Configuring monitoring of Kubernetes components

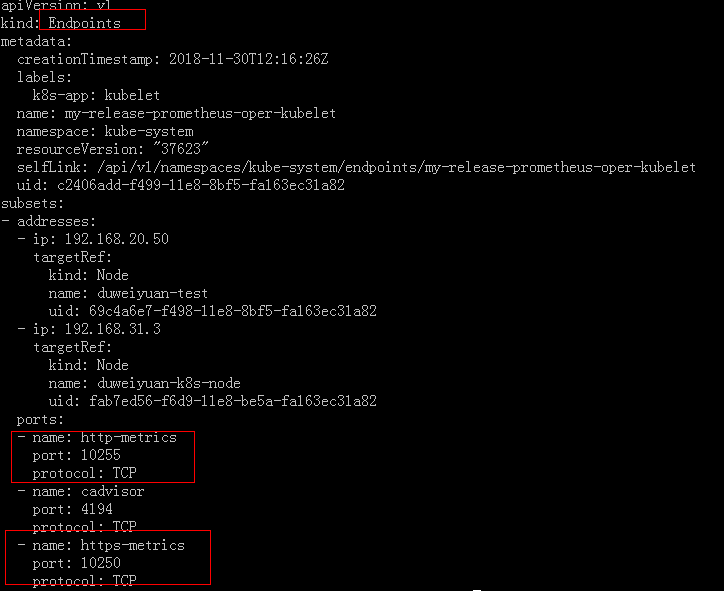

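4.1 Monitoring the kubelet. (It is not scraped by default, because the ServiceMonitor named kubelet reaches the endpoint over HTTP on port 10255, whereas the Kubernetes cluster I built with Rancher serves HTTPS on port 10250.) The default configuration is as follows:

Following the official documentation at https://coreos.com/operators/prometheus/docs/latest/user-guides/cluster-monitoring.html, modify the ServiceMonitor as follows: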

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      name: kubelet
      labels:
        k8s-app: kubelet
    spec:
      jobLabel: k8s-app
      endpoints:  # the default uses plain HTTP without TLS; change it to the settings below
      - port: https-metrics
        scheme: https
        interval: 30s
        tlsConfig:
          insecureSkipVerify: true
        bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
      - port: https-metrics
        scheme: https
        path: /metrics/cadvisor
        interval: 30s
        honorLabels: true
        tlsConfig:
          insecureSkipVerify: true
        bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
      selector:
        matchLabels:
          k8s-app: kubelet
      namespaceSelector:
        matchNames:
        - kube-system

Apply the change: kubectl apply -f <the file above>.yaml

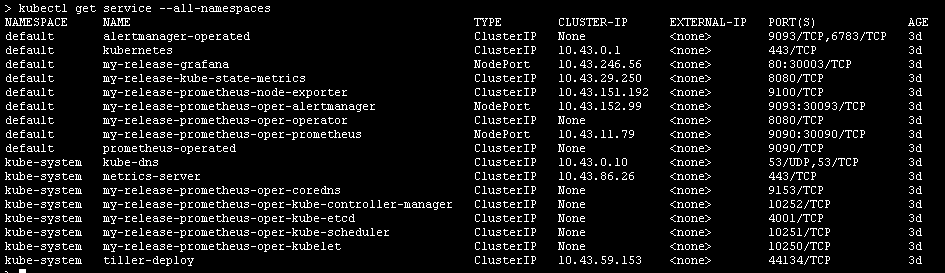

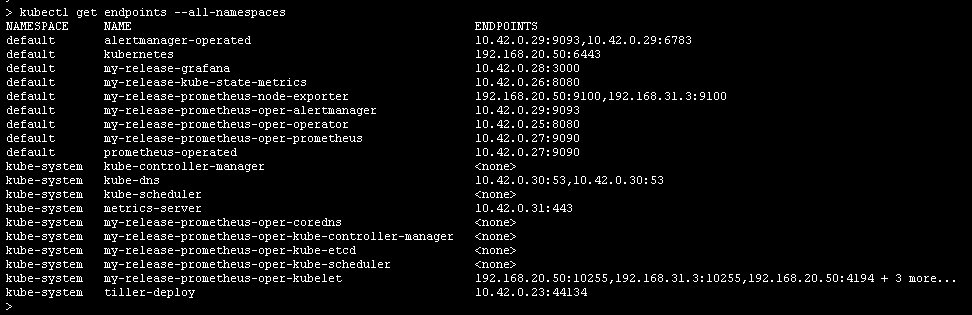

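4.2 Monitoring kube-controller-manager

In my deployment kube-controller-manager does not run as a pod; it is started directly as a container. As a result the Service selector cannot select a matching pod, the Endpoints object has no subsets addresses, and Prometheus cannot scrape the target. I therefore edited the Endpoints manifest (adding the fields shown in red, with the IP set to the IP of the host running the master) and removed the selector from the Service, as follows:

kubectl apply -f <the file above>.yaml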
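Since the edited manifest itself is only shown as a screenshot, here is a hedged sketch of what a hand-maintained Endpoints object for this case might look like. Everything specific in it is an assumption: the object name (it has to equal the name of the selector-less Service), the port name http-metrics, port 10252 (the kube-controller-manager insecure port on clusters of that era), and the placeholder IP 192.0.2.10, which must be replaced with the master host IP.

```shell
# Write a manually managed Endpoints object for a Service that has no selector.
cat > kube-controller-manager-endpoints.yaml <<'EOF'
apiVersion: v1
kind: Endpoints
metadata:
  # Assumed name; it must be identical to the name of the Service.
  name: my-release-prometheus-oper-kube-controller-manager
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.0.2.10          # placeholder; use the IP of the host running the master
  ports:
  - name: http-metrics      # assumed; must match the port name on the Service
    port: 10252             # assumed kube-controller-manager metrics port
    protocol: TCP
EOF

# To apply it on a cluster:
#   kubectl apply -f kube-controller-manager-endpoints.yaml
```

Kubernetes only manages Endpoints for Services that have a selector, which is why the selector has to be removed before a manual list of addresses will persist.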

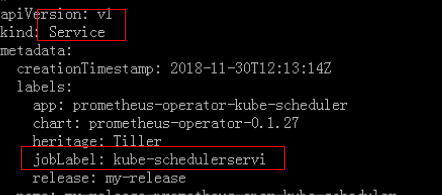

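Run kubectl edit svc my-release-prometheus-oper-kube-scheduler; in the editor that opens (shown below), delete the selector marked in red and save with :wq.

4.3 Configure kube-scheduler in the same way, using its own metrics port (10251 by default on Kubernetes releases of that era, versus 10252 for kube-controller-manager); details omitted.

4.4 Configuring etcd

Service configuration:

ServiceMonitor configuration:

4.5 What jobLabel does: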

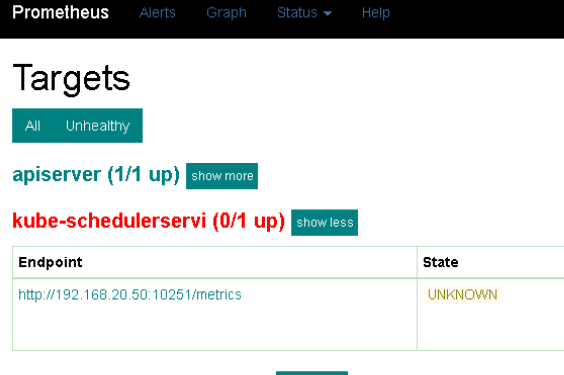

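I set the jobLabel of the Service to kube-schedulerservi

The target then shows up as follows (refresh the page and wait a while before the result appears):

5. Adding a new data source to Grafana (one data source exists by default; to keep application monitoring separate from the default monitoring, a second one is added here for applications)

5.1 Defining the Prometheus resource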

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        app: prometheus
        prometheus: service-prometheus
      name: service-prometheus
      namespace: monitoring
    spec:
      ....

5.2 Viewing the default grafana-datasource ConfigMap

kubectl get configmap my-release-prometheus-oper-grafana-datasource -o yaml

    apiVersion: v1
    data:
      datasource.yaml: |-
        apiVersion: 1
        datasources:
        - name: service-prometheus
          type: prometheus
          url: http://service-ip:9090/  # untested; to be looked into when time allows
          access: proxy
          isDefault: true
    kind: ConfigMa

5.3 Modifying the grafana-datasource ConfigMap
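The post does not show the modified ConfigMap, so the following is only a sketch of what a datasource.yaml with two entries could look like. The first entry's name and URL are assumptions about the chart default; the second entry's URL is hypothetical and has to point at the Service in front of the service-prometheus instance.

```shell
# Write a Grafana provisioning file with the default data source plus one for applications.
cat > datasource.yaml <<'EOF'
apiVersion: 1
datasources:
- name: Prometheus                    # assumed name of the chart's default data source
  type: prometheus
  url: http://my-release-prometheus-oper-prometheus:9090/   # assumed default URL
  access: proxy
  isDefault: true
- name: service-prometheus            # additional data source for application metrics
  type: prometheus
  url: http://service-prometheus.monitoring:9090/           # hypothetical Service address
  access: proxy
  isDefault: false                    # only one data source should be the default
EOF

# One way to use it: open the ConfigMap and paste the content under data."datasource.yaml"
#   kubectl edit configmap my-release-prometheus-oper-grafana-datasource
```

Grafana reads provisioning files at startup, so the Grafana pod may need a restart before the new data source appears.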

6. Monitoring MySQL

The default values to change in values.yaml are as follows:

    mysqlRootPassword: testing
    mysqlUser: mysqlu
    mysqlPassword: mysql123
    mysqlDatabase: mydb
    metrics:
      enabled: true
      image: prom/mysqld-exporter
      imageTag: v0.10.0
      imagePullPolicy: IfNotPresent
      resources: {}
      annotations: {}
        # prometheus.io/scrape: "true"
        # prometheus.io/port: "9104"
      livenessProbe:
        initialDelaySeconds: 15
        timeoutSeconds: 5
      readinessProbe:
        initialDelaySeconds: 5
        timeoutSeconds: 1

6.1 Installing MySQL

helm install --name my-release2 -f values.yaml stable/mysql

6.2 Creating a PV

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: my-release2-mysql
    spec:
      capacity:
        storage: 8Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      hostPath:
        path: /data

6.3 Creating the ServiceMonitor for MySQL

    apiVersion: monitoring.coreos.com/v1
    kind: ServiceMonitor
    metadata:
      labels:
        app: my-release2-mysql
        heritage: Tiller
        release: my-release
      name: my-release2-mysql
      namespace: default
    spec:
      endpoints:
      - interval: 15s
        port: metrics
      jobLabel: jobLabel
      namespaceSelector:
        matchNames:
        - default
      selector:
        matchLabels:
          app: my-release2-mysql
          release: my-release2

6.4 Grafana configuration

Download the JSON template from https://grafana.com/dashboards/6239

Then import it into Grafana; the default data source can be selected.

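7. Alertmanager configuration (not needed with the default install)

7.1 How does the Prometheus resource find Alertmanager? Through the alerting field of the Prometheus resource, which matches the Alertmanager Service, as follows:

Prometheus instance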

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        app: prometheus-operator-prometheus
      name: my-release-prometheus-oper-prometheus
      namespace: default
    spec:
      alerting:
        alertmanagers:
        - name: my-release-prometheus-oper-alertmanager  # matches the Service with this name
          namespace: default
          pathPrefix: /
          port: web
      ruleSelector:  # selects PrometheusRule objects carrying the labels below
        matchLabels:
          app: prometheus-operator
          release: my-release
      ruleNamespaceSelector: {}  # PrometheusRule objects in all namespaces

Alertmanager instance

    apiVersion: monitoring.coreos.com/v1
    kind: Alertmanager
    metadata:
      labels:
        app: prometheus-operator-alertmanager
        chart: prometheus-operator-0.1.27
        heritage: Tiller
        release: my-release
      name: my-release-prometheus-oper-alertmanager  # the Secret name is derived from this name
      namespace: default
    spec:
      baseImage: quay.io/prometheus/alertmanager
      externalUrl: http://my-release-prometheus-oper-alertmanager.default:9093
      listenLocal: false
      logLevel: info
      paused: false
      replicas: 1
      retention: 120h
      routePrefix: /
      serviceAccountName: my-release-prometheus-oper-alertmanager
      version: v0.15.2

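7.2 How does the Alertmanager instance reload its configuration file? Through the argument --config-reloader-image=quay.io/coreos/configmap-reload:v0.0.1 in prometheus-operator/deployment.yaml. Note: this has changed in newer versions (check the latest release). A config-reloader container now runs in the same pod and hot-reloads the configuration, so only the ConfigMap or Secret needs to be edited.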

secret22.yaml

    apiVersion: v1
    data:
      alertmanager.yaml: Z2xvYmFsOgogIHJlc29sdmVfdGltZW91dDogNW0KcmVjZWl2ZXJzOgotIG5hbWU6ICJudWxsIgpyb3V0ZToKICBncm91cF9ieToKICAtIGpvYgogIGdyb3VwX2ludGVydmFsOiA1bQogIGdyb3VwX3dhaXQ6IDMwcwogIHJlY2VpdmVyOiAibnVsbCIKICByZXBlYXRfaW50ZXJ2YWw6IDEyaAogIHJvdXRlczoKICAtIG1hdGNoOgogICAgICBhbGVydG5hbWU6IERlYWRNYW5zU3dpdGNoCiAgICByZWNlaXZlcjogIm51bGwiCg==
    kind: Secret  # the encoded data is the Alertmanager configuration; on Linux, decode it with: echo "<data string above>" | base64 -d
    metadata:
      labels:
        app: prometheus-operator-alertmanager
        chart: prometheus-operator-0.1.27
        heritage: Tiller
        release: my-release
      name: alertmanager-my-release-prometheus-oper-alertmanager  # must be alertmanager-<Alertmanager name>
      namespace: default
    type: Opaque
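The decoding step mentioned in the comment can be tried locally without a cluster. The sketch below decodes the exact data string from secret22.yaml into a file; the output file name is an arbitrary choice.

```shell
# Base64 payload copied from the alertmanager.yaml key of the Secret above.
encoded='Z2xvYmFsOgogIHJlc29sdmVfdGltZW91dDogNW0KcmVjZWl2ZXJzOgotIG5hbWU6ICJudWxsIgpyb3V0ZToKICBncm91cF9ieToKICAtIGpvYgogIGdyb3VwX2ludGVydmFsOiA1bQogIGdyb3VwX3dhaXQ6IDMwcwogIHJlY2VpdmVyOiAibnVsbCIKICByZXBlYXRfaW50ZXJ2YWw6IDEyaAogIHJvdXRlczoKICAtIG1hdGNoOgogICAgICBhbGVydG5hbWU6IERlYWRNYW5zU3dpdGNoCiAgICByZWNlaXZlcjogIm51bGwiCg=='

# Decode it into a readable Alertmanager configuration file and print it.
printf '%s' "$encoded" | base64 --decode > alertmanager-decoded.yaml
cat alertmanager-decoded.yaml

# Against a live cluster, the same payload could be read straight from the Secret:
#   kubectl get secret alertmanager-my-release-prometheus-oper-alertmanager \
#     -o jsonpath='{.data.alertmanager\.yaml}' | base64 --decode
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can read the configuration.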

Details: https://github.com/helm/charts/blob/master/stable/prometheus-operator/templates/prometheus-operator/deployment.yaml

    apiVersion: apps/v1beta2
    kind: Deployment
    metadata:
      name: my-release-prometheus-oper-operator
      namespace: default
    spec:
      template:
        spec:
          containers:
          - args:
            - --kubelet-service=kube-system/my-release-prometheus-oper-kubelet
            - --localhost=127.0.0.1
            - --prometheus-config-reloader=quay.io/coreos/prometheus-config-reloader:v0.25.0
            - --config-reloader-image=quay.io/coreos/configmap-reload:v0.0.1  # this container reloads the Alertmanager configuration; the official site does not describe how
            image: quay.io/coreos/prometheus-operator:v0.25.0

Loading rules through PrometheusRule

all.rules.yaml, see: https://github.com/helm/charts/blob/master/stable/prometheus-operator/templates/alertmanager/rules/all.rules.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: prometheus-operator
      labels:
        app: prometheus-operator  # the ruleSelector of the Prometheus resource selects this label

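7.3 Key point: how to reload the Alertmanager configuration. (Note: this has changed in newer versions; check the latest release. A config-reloader container now runs in the same pod and hot-reloads the configuration, so only the ConfigMap or Secret needs to be edited.)

7.3.1: Define the alertmanager.yaml file

    global:
      resolve_timeout: 5m
    route:
      group_by: ['job']
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 12h
      receiver: 'webhook'
    receivers:
    - name: 'webhook'
      webhook_configs:
      - url: 'http://alertmanagerwh:30500/'

Note: do not use tabs for indentation, otherwise an error is reported.

7.3.2: Delete and then recreate the Secret named alertmanager-{ALERTMANAGER_NAME} (where {ALERTMANAGER_NAME} is the name of the Alertmanager instance; in the example above that is my-release-prometheus-oper-alertmanager)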

kubectl delete secret alertmanager-my-release-prometheus-oper-alertmanager

kubectl create secret generic alertmanager-my-release-prometheus-oper-alertmanager --from-file=alertmanager.yaml
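As a possible alternative to deleting and recreating the Secret, the manifest can be generated locally and applied in place. This is a sketch under assumptions: the namespace default and the Secret name follow the example above, the file names are arbitrary, and the configuration is the one from 7.3.1.

```shell
# Recreate the alertmanager.yaml from section 7.3.1.
cat > alertmanager.yaml <<'EOF'
global:
  resolve_timeout: 5m
route:
  group_by: ['job']
  group_wait: 30s
  group_interval: 5m
  repeat_interval: 12h
  receiver: 'webhook'
receivers:
- name: 'webhook'
  webhook_configs:
  - url: 'http://alertmanagerwh:30500/'
EOF

# The Secret must be named alertmanager-<Alertmanager name>.
secret_name="alertmanager-my-release-prometheus-oper-alertmanager"

# Base64-encode the file on a single line, as the data field of a Secret requires.
encoded="$(base64 < alertmanager.yaml | tr -d '\n')"

# Write the Secret manifest with the encoded configuration embedded.
cat > alertmanager-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${secret_name}
  namespace: default
type: Opaque
data:
  alertmanager.yaml: ${encoded}
EOF

# To update the Secret in place on a cluster:
#   kubectl apply -f alertmanager-secret.yaml
```

Generating the manifest first also makes it easy to review or version the exact payload before it reaches the cluster.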

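7.3.3: Check that the change took effect. (Note: this has changed in newer versions; check the latest release. A config-reloader container now runs in the same pod and hot-reloads the configuration, so only the ConfigMap or Secret needs to be edited. The reload is automatic and Alertmanager does not need to be restarted.)

Wait a few seconds, then check the Status page of the Alertmanager UI to see whether the new configuration is active. For other settings see https://prometheus.io/docs/alerting/configuration/

WeChat alerting: https://www.cnblogs.com/jiuchongxiao/p/9024211.html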

Finally: how to uninstall prometheus-operator (also a useful reference before reinstalling)

1. Delete the release directly with helm delete

$ helm delete my-release

2. Delete the related CRDs (helm install created them automatically)

kubectl delete crd prometheuses.monitoring.coreos.com

kubectl delete crd prometheusrules.monitoring.coreos.com

kubectl delete crd servicemonitors.monitoring.coreos.com

kubectl delete crd alertmanagers.monitoring.coreos.com

3. Purge my-release from Helm

helm del --purge my-release

Miscellaneous

Changes in newer versions

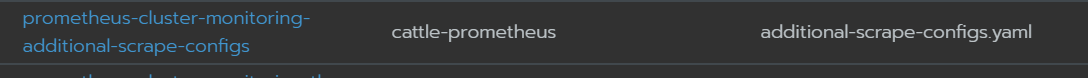

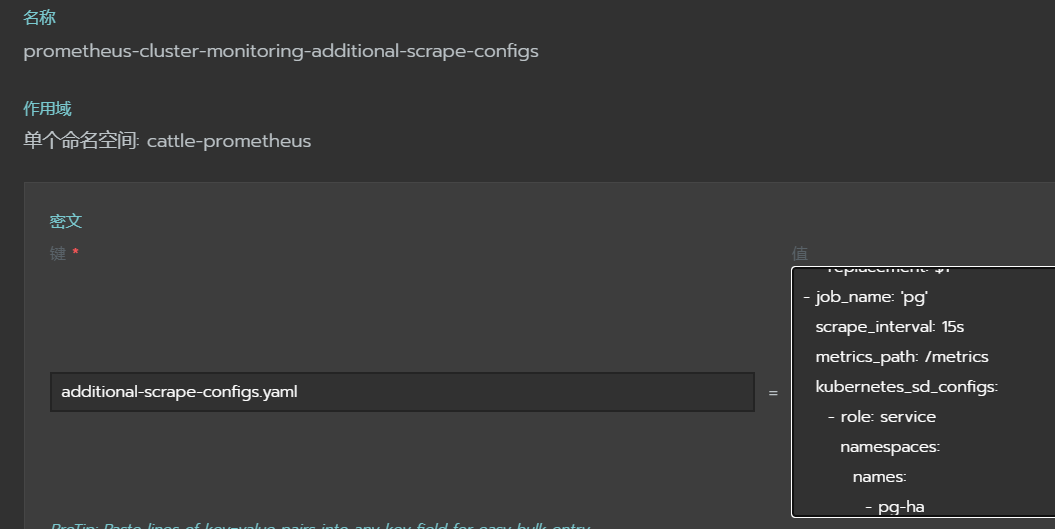

Note: in newer versions, new metrics can be picked up dynamically through the prometheus-cluster-monitoring-additional-scrape-configs Secret, without creating a ServiceMonitor, as follows:

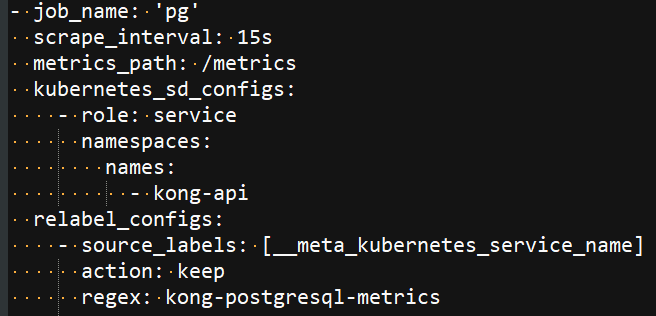

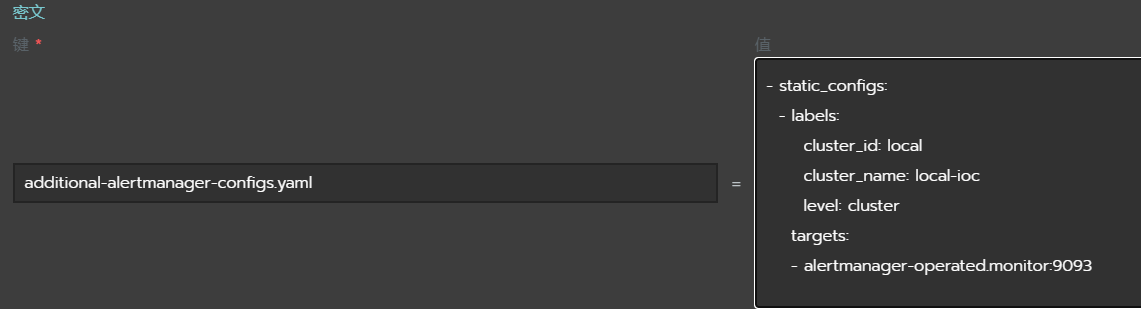
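A hedged sketch of how such additional scrape configuration is usually wired up with the operator's additionalScrapeConfigs field follows. The job name, the target, the key prometheus-additional.yaml, and the file names are hypothetical; only the Secret name is taken from the note above.

```shell
# Plain Prometheus scrape configuration that will be stored in the Secret.
cat > prometheus-additional.yaml <<'EOF'
- job_name: example-static              # hypothetical job name
  static_configs:
  - targets:
    - example-host.example.com:9100     # hypothetical target
EOF

# Fragment of the Prometheus resource spec that points at the Secret and key.
cat > prometheus-additional-spec.yaml <<'EOF'
spec:
  additionalScrapeConfigs:
    name: prometheus-cluster-monitoring-additional-scrape-configs   # Secret name from this post
    key: prometheus-additional.yaml                                  # assumed key inside the Secret
EOF

# On a cluster, the Secret could be created from the first file:
#   kubectl create secret generic prometheus-cluster-monitoring-additional-scrape-configs \
#     --from-file=prometheus-additional.yaml
```

Configuration supplied this way bypasses the operator's validation, so a malformed entry may only show up as an error when Prometheus reloads its configuration.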

Prometheus can also be pointed at Alertmanager through the prometheus-cluster-monitoring-additional-alertmanager-configs Secret.
