HttpClient: using a proxy & sending large numbers of requests with the async HttpClient

https://www.cnblogs.com/yxnyd/p/9801396.html

HttpClient (Part 2): Using IP Proxies and Handling Connection Timeouts

Preface

The earlier posts only scratched the surface. HttpClient has many more powerful features:

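I. Using proxy IPs with HttpClient

1.1 Introduction

When crawling web pages, some target sites have anti-crawler mechanisms: clients that hit the site too frequently or in an obviously regular pattern get their IP blocked.

This is where proxy IPs come in. When one IP is blocked, you simply switch to another.

Proxies come in several kinds: transparent, anonymous, distorting, and high-anonymity (elite). High-anonymity proxies are the usual choice.

1.2 Types of proxy IP

1) Transparent Proxy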

REMOTE_ADDR = Proxy IP

HTTP_VIA = Proxy IP

HTTP_X_FORWARDED_FOR = Your IP

A transparent proxy does "hide" your IP address from a direct look at the connection, but your real IP can still be read from HTTP_X_FORWARDED_FOR.

2) Anonymous Proxy

REMOTE_ADDR = proxy IP

HTTP_VIA = proxy IP

HTTP_X_FORWARDED_FOR = proxy IP

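An anonymous proxy is a step up from a transparent one: others can tell that you are using a proxy, but not who you are.

One step beyond the plain anonymous proxy is the distorting proxy.

3) Distorting Proxy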

REMOTE_ADDR = Proxy IP

HTTP_VIA = Proxy IP

HTTP_X_FORWARDED_FOR = Random IP address

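As with an anonymous proxy, others can still tell that you are using a proxy, but they are given a fake IP address, which makes the disguise more convincing.

4) High-anonymity proxy (Elite Proxy or High Anonymity Proxy)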

REMOTE_ADDR = Proxy IP

HTTP_VIA = not determined

HTTP_X_FORWARDED_FOR = not determined

As you can see, a high-anonymity proxy gives others no way to detect that you are using a proxy at all, so it is the best choice.
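The four header patterns above can be turned into a small classifier. This is only an illustrative sketch: the class `ProxyAnonymity`, its enum, and the `classify` method are hypothetical names, and it assumes the caller already knows the real client IP (for example when you probe a proxy against your own echo endpoint).

```java
/** Illustrative only: classifies a proxy from the three values listed above. */
final class ProxyAnonymity {

    enum Level { NO_PROXY, TRANSPARENT, ANONYMOUS, DISTORTING, ELITE }

    /**
     * @param remoteAddr    address the server sees (REMOTE_ADDR)
     * @param via           HTTP_VIA value, or null if the header is absent
     * @param xForwardedFor HTTP_X_FORWARDED_FOR value, or null if the header is absent
     * @param realClientIp  the tester's own IP (assumed to be known)
     */
    static Level classify(String remoteAddr, String via, String xForwardedFor, String realClientIp) {
        if (remoteAddr.equals(realClientIp)) {
            return Level.NO_PROXY;        // the server sees the real IP directly
        }
        if (via == null && xForwardedFor == null) {
            return Level.ELITE;           // no proxy headers at all
        }
        if (realClientIp.equals(xForwardedFor)) {
            return Level.TRANSPARENT;     // the real IP leaks through X-Forwarded-For
        }
        if (xForwardedFor == null || remoteAddr.equals(xForwardedFor)) {
            return Level.ANONYMOUS;       // the proxy is visible, the client is not
        }
        return Level.DISTORTING;          // the header carries some other (fake) IP
    }
}
```

For example, `ProxyAnonymity.classify("203.0.113.9", null, null, "198.51.100.7")` falls into the `ELITE` branch because neither proxy header is present.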

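For crawlers we generally use high-anonymity proxy IPs.

Where do proxy IPs come from? A quick search (on Baidu, for example) turns up plenty of proxy-list sites. Most of them offer some free proxies, but paying for a commercial API is more convenient.

1.3 Example: using a proxy IP

Use RequestConfig.custom().setProxy(proxy).build() to set the proxy IP.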

package com.jxlg.study.httpclient;

import org.apache.http.HttpEntity;
import org.apache.http.HttpHost;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

public class UseProxy {

    public static void main(String[] args) throws IOException {
        // Create the HttpGet instance
        HttpGet httpGet = new HttpGet("http://www.tuicool.com");

        // Set the proxy IP, the connect timeout, the read (socket) timeout,
        // and the timeout for obtaining a connection from the connection manager
        HttpHost proxy = new HttpHost("58.60.255.82", 8118);
        RequestConfig requestConfig = RequestConfig.custom()
                .setProxy(proxy)
                .setConnectTimeout(10000)
                .setSocketTimeout(10000)
                .setConnectionRequestTimeout(3000)
                .build();
        httpGet.setConfig(requestConfig);

        // Set the request header
        httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36");

        // try-with-resources closes the response and the client even if the request fails
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            HttpEntity entity = response.getEntity(); // response body
            if (entity != null) {
                System.out.println("Page content: " + EntityUtils.toString(entity, "utf-8"));
            }
        }
    }
}

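1.4 Obtaining proxy IPs in real projects

We can use HttpClient to scrape the 20 most recent high-anonymity proxy IPs from http://www.xicidaili.com/ and store them in a linked list. When an IP gets blocked and the connection times out, take the next IP from the list, and so on. When fewer than 5 entries remain in the list, scrape proxy IPs again and add them to the list.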
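A minimal sketch of that rotation logic, using only the JDK. The class name `ProxyPool` and the `fetcher` supplier are hypothetical: in practice the supplier would be whatever code scrapes or queries your proxy source, and entries are plain "host:port" strings here for simplicity.

```java
import java.util.Deque;
import java.util.LinkedList;
import java.util.List;
import java.util.function.Supplier;

/** Illustrative proxy pool: hands out proxies and refills itself when running low. */
final class ProxyPool {
    private static final int REFILL_THRESHOLD = 5;   // from the text: refill when fewer than 5 remain

    private final Deque<String> proxies = new LinkedList<>();
    private final Supplier<List<String>> fetcher;     // hypothetical source of fresh "host:port" entries

    ProxyPool(Supplier<List<String>> fetcher) {
        this.fetcher = fetcher;
    }

    /** Returns the next proxy; call this whenever the current one is blocked or times out. */
    synchronized String next() {
        if (proxies.size() < REFILL_THRESHOLD) {
            proxies.addAll(fetcher.get());
        }
        String proxy = proxies.pollFirst();
        if (proxy == null) {
            throw new IllegalStateException("no proxies available");
        }
        return proxy;
    }

    synchronized int size() {
        return proxies.size();
    }
}
```

The returned string can be split on ":" and passed to `new HttpHost(host, port)` before building the `RequestConfig`.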

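1.5 HttpClient connect timeout and read timeout

When HttpClient executes an HTTP request, time is spent in two places: establishing the connection and reading the content.

1) HttpClient connect time

Connect time is the time from when HttpClient sends the request until it is connected to the host of the target URL. In theory, the shorter the distance and the clearer the route, the faster it is, but because routing is complex the connect time varies, and with bad luck the connection never succeeds. In my tests, HttpClient's default connect timeout was about one minute, after which it tries again a little later. The problem is that a URL that never connects holds up the other threads. Put bluntly, it occupies a slot without doing any work. So we need to set this explicitly, for example to 10 seconds: if no connection is made within 10 seconds, an error is raised and the business logic can deal with it, for example by retrying later and writing the problem URL to the log4j log for an administrator to review.

2) HttpClient read time

Read time is the time HttpClient spends fetching the content after it has connected to the target server. Reading is usually fast, but a large response or problems on the target server (slow database reads, heavy concurrency, and so on) also lengthen it.

As above, we should set this explicitly, for example to 10 seconds: if the read has not finished within 10 seconds, an error is raised and the business logic handles it.

Take http://central.maven.org/maven2/ as an example: it is an overseas address, so connecting takes relatively long, and the page is large, so connect timeouts and read timeouts occur easily.

How do we implement this in code?

HttpClient provides the RequestConfig class specifically for configuring parameters such as the connect timeout, the read timeout, and the proxy IP described earlier.

Example: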

package com.jxlg.study.httpclient;

import org.apache.http.HttpEntity;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.client.methods.CloseableHttpResponse;
import org.apache.http.client.methods.HttpGet;
import org.apache.http.impl.client.CloseableHttpClient;
import org.apache.http.impl.client.HttpClients;
import org.apache.http.util.EntityUtils;

import java.io.IOException;

public class TimeSetting {

    public static void main(String[] args) throws IOException {
        HttpGet httpGet = new HttpGet("http://central.maven.org/maven2/");

        // 5-second connect timeout and 5-second read (socket) timeout
        RequestConfig config = RequestConfig.custom()
                .setConnectTimeout(5000)
                .setSocketTimeout(5000)
                .build();
        httpGet.setConfig(config);
        httpGet.setHeader("User-Agent", "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36");

        // try-with-resources closes the response and the client even if a timeout is thrown
        try (CloseableHttpClient httpClient = HttpClients.createDefault();
             CloseableHttpResponse response = httpClient.execute(httpGet)) {
            HttpEntity entity = response.getEntity();
            if (entity != null) {
                System.out.println("Page content: " + EntityUtils.toString(entity, "UTF-8"));
            }
        }
    }
}
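Section 1.5 suggests handling a timeout in business logic by retrying later and logging the failure. One way to sketch that with only the JDK is shown below. `TimeoutRetry` and `callWithRetry` are hypothetical names, and `System.err` stands in for the log4j logger mentioned in the text. The sketch relies on the fact that both `java.net.SocketTimeoutException` and HttpClient's `ConnectTimeoutException` extend `java.io.InterruptedIOException`.

```java
import java.io.InterruptedIOException;
import java.util.concurrent.Callable;

/** Illustrative helper: retries a task that fails with a connect or read timeout. */
final class TimeoutRetry {

    static <T> T callWithRetry(Callable<T> task, int maxAttempts, long pauseMillis) throws Exception {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        InterruptedIOException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return task.call();
            } catch (InterruptedIOException timeout) {
                // One catch clause covers both connect timeouts and read timeouts
                last = timeout;
                System.err.println("attempt " + attempt + " timed out: " + timeout.getMessage());
                if (attempt < maxAttempts) {
                    Thread.sleep(pauseMillis);   // wait a while before trying again
                }
            }
        }
        throw last;   // every attempt timed out
    }
}
```

A caller would wrap its own fetch method, for example `TimeoutRetry.callWithRetry(() -> fetchPage(url), 3, 2000)`, where `fetchPage` is whatever method performs the request and returns the body.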

How to set a proxy server (HTTP proxy) for HttpClient requests

https://www.iteye.com/blog/rd-030-2357128

Two important HttpClient parameters: maxPerRoute and MaxTotal

https://blog.csdn.net/u013905744/article/details/94714696

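Sending large numbers of requests with the async HttpClient

Our project uses HttpClient to send a large number of HTTP requests asynchronously, so I am recording the approach here.

Approach: use an HttpClient connection pool together with multiple threads.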

import java.nio.charset.CodingErrorAction;

import javax.net.ssl.SSLContext;

import org.apache.http.Consts;
import org.apache.http.HttpHost;
import org.apache.http.auth.AuthSchemeProvider;
import org.apache.http.auth.AuthScope;
import org.apache.http.auth.UsernamePasswordCredentials;
import org.apache.http.client.CredentialsProvider;
import org.apache.http.client.config.AuthSchemes;
import org.apache.http.client.config.RequestConfig;
import org.apache.http.config.ConnectionConfig;
import org.apache.http.config.Lookup;
import org.apache.http.config.Registry;
import org.apache.http.config.RegistryBuilder;
import org.apache.http.impl.auth.BasicSchemeFactory;
import org.apache.http.impl.auth.DigestSchemeFactory;
import org.apache.http.impl.auth.KerberosSchemeFactory;
import org.apache.http.impl.auth.NTLMSchemeFactory;
import org.apache.http.impl.auth.SPNegoSchemeFactory;
import org.apache.http.impl.client.BasicCookieStore;
import org.apache.http.impl.client.BasicCredentialsProvider;
import org.apache.http.impl.nio.client.CloseableHttpAsyncClient;
import org.apache.http.impl.nio.client.HttpAsyncClientBuilder;
import org.apache.http.impl.nio.client.HttpAsyncClients;
import org.apache.http.impl.nio.conn.PoolingNHttpClientConnectionManager;
import org.apache.http.impl.nio.reactor.DefaultConnectingIOReactor;
import org.apache.http.impl.nio.reactor.IOReactorConfig;
import org.apache.http.nio.conn.NoopIOSessionStrategy;
import org.apache.http.nio.conn.SchemeIOSessionStrategy;
import org.apache.http.nio.conn.ssl.SSLIOSessionStrategy;
import org.apache.http.nio.reactor.ConnectingIOReactor;
import org.apache.http.nio.reactor.IOReactorException;
import org.apache.http.ssl.SSLContexts;

public class HttpAsyncClient {

    private static int socketTimeout = 500;             // read timeout: 0.5 s, adjust to your workload
    private static int connectTimeout = 2000;           // connect timeout
    private static int poolSize = 100;                  // maximum connections in the pool
    private static int maxPerRoute = 100;               // maximum concurrent connections per route (host)
    private static int connectionRequestTimeout = 3000; // timeout for obtaining a connection from the pool

    // HTTP proxy parameters
    private String host = "58.60.255.82";
    private int port = 8118;
    private String username = "";
    private String password = "";

    // Async HttpClient
    private CloseableHttpAsyncClient asyncHttpClient;

    // Async HttpClient that goes through the proxy
    private CloseableHttpAsyncClient proxyAsyncHttpClient;

    public HttpAsyncClient() {
        try {
            this.asyncHttpClient = createAsyncClient(false);
            this.proxyAsyncHttpClient = createAsyncClient(true);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    public CloseableHttpAsyncClient createAsyncClient(boolean proxy) throws IOReactorException {
        RequestConfig requestConfig = RequestConfig.custom()
                .setConnectionRequestTimeout(connectionRequestTimeout)
                .setConnectTimeout(connectTimeout)
                .setSocketTimeout(socketTimeout).build();

        SSLContext sslcontext = SSLContexts.createDefault();

        UsernamePasswordCredentials credentials = new UsernamePasswordCredentials(username, password);
        CredentialsProvider credentialsProvider = new BasicCredentialsProvider();
        credentialsProvider.setCredentials(AuthScope.ANY, credentials);

        // Register the session strategies that handle the http and https schemes
        Registry<SchemeIOSessionStrategy> sessionStrategyRegistry = RegistryBuilder
                .<SchemeIOSessionStrategy> create()
                .register("http", NoopIOSessionStrategy.INSTANCE)
                .register("https", new SSLIOSessionStrategy(sslcontext))
                .build();

        // Configure the I/O threads
        IOReactorConfig ioReactorConfig = IOReactorConfig.custom().setSoKeepAlive(false).setTcpNoDelay(true)
                .setIoThreadCount(Runtime.getRuntime().availableProcessors())
                .build();

        // Set the connection pool size
        ConnectingIOReactor ioReactor = new DefaultConnectingIOReactor(ioReactorConfig);
        PoolingNHttpClientConnectionManager conMgr = new PoolingNHttpClientConnectionManager(
                ioReactor, null, sessionStrategyRegistry, null);
        if (poolSize > 0) {
            conMgr.setMaxTotal(poolSize);
        }
        conMgr.setDefaultMaxPerRoute(maxPerRoute > 0 ? maxPerRoute : 10);

        ConnectionConfig connectionConfig = ConnectionConfig.custom()
                .setMalformedInputAction(CodingErrorAction.IGNORE)
                .setUnmappableInputAction(CodingErrorAction.IGNORE)
                .setCharset(Consts.UTF_8).build();
        conMgr.setDefaultConnectionConfig(connectionConfig);

        Lookup<AuthSchemeProvider> authSchemeRegistry = RegistryBuilder
                .<AuthSchemeProvider> create()
                .register(AuthSchemes.BASIC, new BasicSchemeFactory())
                .register(AuthSchemes.DIGEST, new DigestSchemeFactory())
                .register(AuthSchemes.NTLM, new NTLMSchemeFactory())
                .register(AuthSchemes.SPNEGO, new SPNegoSchemeFactory())
                .register(AuthSchemes.KERBEROS, new KerberosSchemeFactory())
                .build();

        // The original applied requestConfig only to the proxy client, which left the
        // plain client without the timeouts above; it is applied to both here.
        HttpAsyncClientBuilder builder = HttpAsyncClients.custom()
                .setConnectionManager(conMgr)
                .setDefaultCredentialsProvider(credentialsProvider)
                .setDefaultAuthSchemeRegistry(authSchemeRegistry)
                .setDefaultCookieStore(new BasicCookieStore())
                .setDefaultRequestConfig(requestConfig);
        if (proxy) {
            builder.setProxy(new HttpHost(host, port));
        }
        return builder.build();
    }

    public CloseableHttpAsyncClient getAsyncHttpClient() {
        return asyncHttpClient;
    }

    public CloseableHttpAsyncClient getProxyAsyncHttpClient() {
        return proxyAsyncHttpClient;
    }
}

public class HttpClientFactory {

    private static HttpAsyncClient httpAsyncClient = new HttpAsyncClient();

    private static HttpClientFactory httpClientFactory = new HttpClientFactory();

    private HttpClientFactory() {
    }

    public static HttpClientFactory getInstance() {
        return httpClientFactory;
    }

    public HttpAsyncClient getHttpAsyncClientPool() {
        return httpAsyncClient;
    }
}

public void sendThredPost(List<FaceBookUserQuitEntity> list, String title, String subTitle, String imgUrl) {
    if (list == null || list.isEmpty()) {
        // The original created this exception without throwing it.
        // BusinessException is assumed to be an unchecked exception.
        throw new BusinessException("Asia: queried user data is empty");
    }
    CloseableHttpAsyncClient client =
            HttpClientFactory.getInstance().getHttpAsyncClientPool().getAsyncHttpClient();
    int number = list.size();
    int num = number / 10;
    PostThread[] threads;
    if (num > 0) {
        // Split the list across 10 threads; the last thread also takes the remainder,
        // which the original split silently dropped
        threads = new PostThread[10];
        for (int i = 0; i < threads.length; i++) {
            int to = (i == threads.length - 1) ? number : (i + 1) * num;
            List<FaceBookUserQuitEntity> threadList = list.subList(i * num, to);
            threads[i] = new PostThread(client, threadList, title, subTitle, imgUrl);
        }
    } else {
        // Fewer than 10 users: one thread is enough
        threads = new PostThread[] { new PostThread(client, list, title, subTitle, imgUrl) };
    }
    for (int k = 0; k < threads.length; k++) {
        threads[k].start();
        logger.info("Asia thread: {} started", k);
    }
    for (PostThread thread : threads) {
        try {
            thread.join();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
}
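Splitting a list across a fixed number of worker threads is easy to get subtly wrong when the size is not a multiple of the thread count. A small helper, sketched here with the hypothetical name `ListPartitioner`, produces chunks whose sizes differ by at most one and never loses the remainder.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative helper: splits a list into at most `parts` contiguous, near-equal chunks. */
final class ListPartitioner {

    static <T> List<List<T>> partition(List<T> list, int parts) {
        if (parts < 1) {
            throw new IllegalArgumentException("parts must be >= 1");
        }
        List<List<T>> chunks = new ArrayList<>();
        int size = list.size();
        int base = size / parts;     // minimum chunk size
        int extra = size % parts;    // the first `extra` chunks get one more element
        int from = 0;
        for (int i = 0; i < parts && from < size; i++) {
            int to = from + base + (i < extra ? 1 : 0);
            chunks.add(list.subList(from, to));   // a view, not a copy
            from = to;
        }
        return chunks;
    }
}
```

With 25 users and 10 parts this yields five chunks of 3 followed by five chunks of 2. With only 3 users it yields 3 chunks of 1 rather than 10 threads with mostly empty work.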

// Worker thread. `logger`, `URL` and `getPostbody(...)` are defined elsewhere in the
// project and are not shown in the original post.
public class PostThread extends Thread {

    private final CloseableHttpAsyncClient httpClient;
    private final List<FaceBookUserQuitEntity> list;
    private final String title;
    private final String subTitle;
    private final String imgUrl;

    public PostThread(CloseableHttpAsyncClient httpClient, List<FaceBookUserQuitEntity> list, String title, String subTitle, String imgUrl) {
        this.httpClient = httpClient;
        this.list = list;
        this.title = title;
        this.subTitle = subTitle;
        this.imgUrl = imgUrl;
    }

    @Override
    public void run() {
        try {
            // Start the client before executing any request
            httpClient.start();
            int size = list.size();
            // Send in batches of 100
            for (int k = 0; k < size; k += 100) {
                List<FaceBookUserQuitEntity> subList = list.subList(k, Math.min(k + 100, size));
                final long startTime = System.currentTimeMillis();
                final CountDownLatch latch = new CountDownLatch(subList.size());
                for (FaceBookUserQuitEntity faceBookEntity : subList) {
                    String senderId = faceBookEntity.getSenderId();
                    String player_id = faceBookEntity.getPlayer_id();
                    logger.info("Start sending message: playerid=" + player_id);
                    final String bodyStr = getPostbody(senderId, player_id, title, subTitle,
                            imgUrl, "Play Game", "");
                    if (bodyStr.isEmpty()) {
                        // Nothing to send; count down anyway, otherwise latch.await() below never returns
                        latch.countDown();
                        continue;
                    }
                    final HttpPost httpPost = new HttpPost(URL);
                    // org.apache.http.entity.ContentType.APPLICATION_JSON sends
                    // Content-Type: application/json; charset=UTF-8. The original also set
                    // Content-Encoding to "UTF-8", which is not a valid content encoding.
                    httpPost.setEntity(new StringEntity(bodyStr, ContentType.APPLICATION_JSON));
                    httpClient.execute(httpPost, new FutureCallback<HttpResponse>() {
                        @Override
                        public void completed(HttpResponse result) {
                            try {
                                int statusCode = result.getStatusLine().getStatusCode();
                                if (200 == statusCode) {
                                    logger.info("Message sent successfully=" + bodyStr);
                                } else {
                                    logger.info("Response status=" + statusCode);
                                    logger.info("Failed to send message=" + bodyStr);
                                }
                                logger.info(EntityUtils.toString(result.getEntity(), "UTF-8"));
                            } catch (IOException e) {
                                e.printStackTrace();
                            } finally {
                                latch.countDown();
                            }
                        }

                        @Override
                        public void failed(Exception ex) {
                            latch.countDown();
                            logger.info("Failed to send message, e=" + ex);
                        }

                        @Override
                        public void cancelled() {
                            latch.countDown();
                        }
                    });
                }
                // Wait until every request in this batch has completed, failed, or been cancelled
                try {
                    latch.await();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                // Throttle: each batch of 100 occupies at least 10 seconds
                long leftTime = 10000 - (System.currentTimeMillis() - startTime);
                if (leftTime > 0) {
                    try {
                        Thread.sleep(leftTime);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
            }
        } catch (UnsupportedCharsetException e) {
            e.printStackTrace();
        }
    }
}

The utility code above can be used as is. The sending logic needs to be adapted to your own use case.
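The batching pattern used by the sender (fire a batch of asynchronous requests, wait on a CountDownLatch until every callback has run, then pause so that each batch takes a minimum amount of time) can be isolated from HttpClient. The sketch below uses only the JDK. `ThrottledBatchSender` and its `sender` function are hypothetical stand-ins, with `CompletableFuture` playing the role of the async client's callback.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

/** Illustrative sketch of "send a batch, wait for all callbacks, then throttle". */
final class ThrottledBatchSender {

    /** Returns the number of sends that finished, whether they succeeded or failed. */
    static <T> int sendAll(List<T> items, int batchSize, long minBatchMillis,
                           Function<T, CompletableFuture<?>> sender) throws InterruptedException {
        AtomicInteger finished = new AtomicInteger();
        for (int from = 0; from < items.size(); from += batchSize) {
            List<T> batch = items.subList(from, Math.min(from + batchSize, items.size()));
            long start = System.currentTimeMillis();
            CountDownLatch latch = new CountDownLatch(batch.size());
            for (T item : batch) {
                // whenComplete runs on success and on failure, so the latch always reaches zero
                sender.apply(item).whenComplete((result, error) -> {
                    finished.incrementAndGet();
                    latch.countDown();
                });
            }
            latch.await();
            // Pause so that every batch occupies at least minBatchMillis
            long remaining = minBatchMillis - (System.currentTimeMillis() - start);
            if (remaining > 0) {
                Thread.sleep(remaining);
            }
        }
        return finished.get();
    }
}
```

The important detail is that the latch is counted down on every path. If an item is skipped or a callback path forgets to count down, `latch.await()` blocks the worker thread indefinitely.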

HttpClient timeout settings in detail

Key HttpClient parameters and key connection-pool parameters

Two important HttpClient parameters: maxPerRoute and MaxTotal

The three HttpClient connection-pool parameters

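The meaning of the three HTTP request timeouts: connectionRequestTimeout, connectTimeout, and socketTimeout

1. connectionRequestTimeout: the timeout for obtaining a connection from the connection pool. When it is exceeded, a ConnectionPoolTimeoutException is thrown.

2. connectTimeout: the timeout for establishing the connection between the client and the server. An HTTP request has three phases: (1) establishing the connection, (2) transferring data, (3) closing the connection. This timeout applies to the first phase. When it is exceeded, a ConnectTimeoutException is thrown.

3. socketTimeout: the timeout for the client reading data from the server once the connection has been established. When it is exceeded, a SocketTimeoutException is thrown.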
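The difference between the connect timeout and the socket (read) timeout can be observed with plain JDK sockets, without HttpClient. In this sketch, `SocketTimeoutDemo` is a hypothetical name, and the "server" is a local `ServerSocket` that lets the connection be established but never sends a byte, so the connect phase succeeds and the read phase times out.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.net.SocketTimeoutException;

/** Illustrative demo: the connect phase succeeds, the read phase hits the socket timeout. */
final class SocketTimeoutDemo {

    static boolean readTimesOut(int connectTimeoutMs, int readTimeoutMs) throws IOException {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        try (ServerSocket silentServer = new ServerSocket(0, 1, loopback);
             Socket client = new Socket()) {
            // Phase 1: establishing the connection is bounded by the connect timeout
            client.connect(new InetSocketAddress(loopback, silentServer.getLocalPort()), connectTimeoutMs);
            // Phase 2: each blocking read is bounded by the socket (read) timeout
            client.setSoTimeout(readTimeoutMs);
            try {
                client.getInputStream().read();
                return false;                     // data or end of stream arrived in time
            } catch (SocketTimeoutException e) {
                return true;                      // no data within readTimeoutMs
            }
        }
    }
}
```

Note that the socket timeout limits how long a single read may wait for data, so a slow response that keeps trickling bytes can take longer overall than the configured value.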

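HttpClient wraps the low-level machinery Java needs for HTTP requests and is a very widely used component.

HttpClient supports connection pooling, and the two parameters maxPerRoute and MaxTotal are the pool settings.

Example 2: the Executor class of Apache's Fluent API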

/**
 * An Executor for fluent requests
 * <p/>
 * A {@link PoolingHttpClientConnectionManager} with maximum 100 connections per route and
 * a total maximum of 200 connections is used internally.
 */
// At most 100 connections per route and at most 200 connections in total
CONNMGR = new PoolingHttpClientConnectionManager(sfr);
CONNMGR.setDefaultMaxPerRoute(100);
CONNMGR.setMaxTotal(200);
CLIENT = HttpClientBuilder.create().setConnectionManager(CONNMGR).build();

What maxPerRoute and MaxTotal mean

What exactly do these two parameters mean?

The test code below illustrates it.

Client side

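import java.io.IOException;
import java.security.KeyManagementException;
import java.security.NoSuchAlgorithmException;

import javax.net.ssl.SSLContext;

import org.apache.http.client.HttpClient;
import org.apache.http.client.fluent.Executor;
import org.apache.http.client.fluent.Request;
import org.apache.http.config.Registry;
import org.apache.http.config.RegistryBuilder;
import org.apache.http.conn.socket.ConnectionSocketFactory;
import org.apache.http.conn.socket.LayeredConnectionSocketFactory;
import org.apache.http.conn.socket.PlainConnectionSocketFactory;
import org.apache.http.conn.ssl.SSLConnectionSocketFactory;
import org.apache.http.entity.StringEntity;
import org.apache.http.impl.client.HttpClientBuilder;
import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
// HttpClient 4.4+; in 4.3 this class lives in org.apache.http.conn.ssl
import org.apache.http.ssl.SSLInitializationException;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

public class HttpFluentUtil {

    private Logger logger = LoggerFactory.getLogger(HttpFluentUtil.class);

    private final static int MaxPerRoute = 2;
    private final static int MaxTotal = 4;

    final static PoolingHttpClientConnectionManager CONNMGR;
    final static HttpClient CLIENT;
    final static Executor EXECUTOR;

    static {
        LayeredConnectionSocketFactory ssl = null;
        try {
            ssl = SSLConnectionSocketFactory.getSystemSocketFactory();
        } catch (final SSLInitializationException ex) {
            final SSLContext sslcontext;
            try {
                sslcontext = SSLContext.getInstance(SSLConnectionSocketFactory.TLS);
                sslcontext.init(null, null, null);
                ssl = new SSLConnectionSocketFactory(sslcontext);
            } catch (final SecurityException ignore) {
            } catch (final KeyManagementException ignore) {
            } catch (final NoSuchAlgorithmException ignore) {
            }
        }
        final Registry<ConnectionSocketFactory> sfr = RegistryBuilder.<ConnectionSocketFactory>create()
                .register("http", PlainConnectionSocketFactory.getSocketFactory())
                .register("https", ssl != null ? ssl : SSLConnectionSocketFactory.getSocketFactory()).build();

        CONNMGR = new PoolingHttpClientConnectionManager(sfr);
        CONNMGR.setDefaultMaxPerRoute(MaxPerRoute);
        CONNMGR.setMaxTotal(MaxTotal);
        CLIENT = HttpClientBuilder.create().setConnectionManager(CONNMGR).build();
        EXECUTOR = Executor.newInstance(CLIENT);
    }

    public static String Get(String uri, int connectTimeout, int socketTimeout) throws IOException {
        return EXECUTOR.execute(Request.Get(uri).connectTimeout(connectTimeout).socketTimeout(socketTimeout))
                .returnContent().asString();
    }

    public static String Post(String uri, StringEntity stringEntity, int connectTimeout, int socketTimeout)
            throws IOException {
        // The original ignored the connectTimeout argument; it is applied here
        return EXECUTOR.execute(Request.Post(uri).connectTimeout(connectTimeout).socketTimeout(socketTimeout)
                .addHeader("Content-Type", "application/json").body(stringEntity)).returnContent().asString();
    }

    public static void main(String[] args) {
        // The server sleeps before returning (1 second in the server code below)
        final String url = "http://localhost:9064/app/test";
        // With MaxPerRoute = 2, the 5 threads return in 3 groups (2, 2, 1),
        // so with a 1-second server delay the run takes about 3 seconds
        for (int i = 0; i < 5; i++) {
            new Thread(new Runnable() {
                @Override
                public void run() {
                    try {
                        String result = HttpFluentUtil.Get(url, 2000, 2000);
                        System.out.println(result);
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
            }).start();
        }
    }
}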

Server side

A very simple Spring MVC endpoint:

@GetMapping(value="test")
public String test() throws InterruptedException {
    Thread.sleep(1000);
    return "1";
}

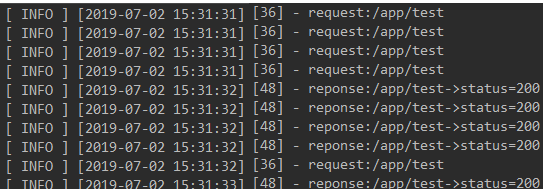

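Test 1: client with MaxPerRoute=5, MaxTotal=4

Server-side result

The server first receives 4 requests and, once they have been processed, receives the remaining 1. In other words, at most MaxTotal requests are in flight at any one time.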

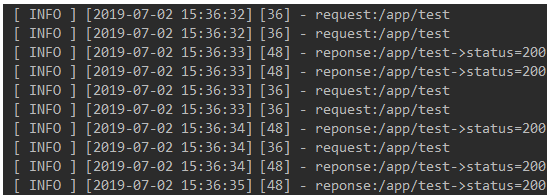

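Test 2: client with MaxPerRoute=2, MaxTotal=5

Server-side result

The server receives 2 requests, then 2 more, then 1. This shows that maxPerRoute is the maximum number of requests that can be sent in parallel to one service (one route, that is, one target host).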
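The behaviour seen in the two tests can be imitated without a server by modelling the pool as two semaphores: one for the total limit and one for the single route. This is only a simulation under that assumption, not how the connection manager is implemented, and `PoolLimitSimulation` is a hypothetical name.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;

/** Illustrative simulation: two semaphores stand in for MaxTotal and MaxPerRoute. */
final class PoolLimitSimulation {

    /** Runs `requests` threads against one route and returns the peak number running at once. */
    static int peakConcurrency(int requests, int maxPerRoute, int maxTotal, long workMillis)
            throws InterruptedException {
        Semaphore total = new Semaphore(maxTotal);       // stands in for MaxTotal
        Semaphore route = new Semaphore(maxPerRoute);    // stands in for MaxPerRoute of a single host
        AtomicInteger active = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(requests);
        for (int i = 0; i < requests; i++) {
            new Thread(() -> {
                try {
                    route.acquire();
                    try {
                        total.acquire();
                        try {
                            peak.accumulateAndGet(active.incrementAndGet(), Math::max);
                            Thread.sleep(workMillis);    // pretend the server is working
                        } finally {
                            active.decrementAndGet();
                            total.release();
                        }
                    } finally {
                        route.release();
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    done.countDown();
                }
            }).start();
        }
        done.await();
        return peak.get();
    }
}
```

For 5 requests, the peak never exceeds 4 with maxPerRoute=5 and maxTotal=4, and never exceeds 2 with maxPerRoute=2 and maxTotal=5, which matches the grouping observed on the server.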

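When should they be set?

Now that we know what the two parameters mean, when do they need to be changed?

Consider the following scenario.

Service 1 calls an interface of service 2 through Fluent. Service 1 sends 400 requests, but Fluent's defaults are maxPerRoute=100 and MaxTotal=200. If each call takes 500 ms, then with maxPerRoute=100 the requests run in four batches of 100, and the whole run takes 2000 ms. If maxPerRoute is raised to 400 (with MaxTotal raised to at least 400 as well), everything finishes in 500 ms. In situations like this, where you need more concurrency, these two parameters have to be set.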
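The arithmetic in this scenario is a ceiling division over the effective concurrency limit. A minimal sketch, assuming every request goes to the same route and takes the same time (`PoolThroughput` and `estimateMillis` are hypothetical names):

```java
/** Illustrative estimate of total time for requests that all target one route. */
final class PoolThroughput {

    static long estimateMillis(int requests, int maxPerRoute, int maxTotal, long latencyMillis) {
        int concurrent = Math.min(maxPerRoute, maxTotal);         // effective limit for a single route
        int batches = (requests + concurrent - 1) / concurrent;   // ceiling division
        return batches * latencyMillis;
    }
}
```

`estimateMillis(400, 100, 200, 500)` gives 2000 and `estimateMillis(400, 400, 400, 500)` gives 500, matching the figures in the text. Raising maxPerRoute to 400 while leaving MaxTotal at 200 gives 1000, because the total limit then becomes the bottleneck.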

How to set them

1. For Apache Fluent, send requests through the HttpFluentUtil utility class used in the test above.

2. For RestTemplate, use something like the following:

@Bean
public HttpClient httpClient() {
    Registry<ConnectionSocketFactory> registry = RegistryBuilder.<ConnectionSocketFactory>create()
            .register("http", PlainConnectionSocketFactory.getSocketFactory())
            .register("https", SSLConnectionSocketFactory.getSocketFactory())
            .build();
    PoolingHttpClientConnectionManager connectionManager = new PoolingHttpClientConnectionManager(registry);
    connectionManager.setMaxTotal(restTemplateProperties.getMaxTotal());
    connectionManager.setDefaultMaxPerRoute(restTemplateProperties.getDefaultMaxPerRoute());
    connectionManager.setValidateAfterInactivity(restTemplateProperties.getValidateAfterInactivity());
    RequestConfig requestConfig = RequestConfig.custom()
            .setSocketTimeout(restTemplateProperties.getSocketTimeout())
            .setConnectTimeout(restTemplateProperties.getConnectTimeout())
            .setConnectionRequestTimeout(restTemplateProperties.getConnectionRequestTimeout())
            .build();
    return HttpClientBuilder.create()
            .setDefaultRequestConfig(requestConfig)
            .setConnectionManager(connectionManager)
            .build();
}

@Bean
public ClientHttpRequestFactory httpRequestFactory() {
    return new HttpComponentsClientHttpRequestFactory(httpClient());
}

@Bean
public RestTemplate restTemplate() {
    return new RestTemplate(httpRequestFactory());
}

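RestTemplateProperties is populated from the configuration file, using these keys:

max-total
default-max-per-route
connect-timeout: connect timeout
connection-request-timeout: timeout for obtaining a connection from the pool
socket-timeout: read timeout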
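The original post does not show the RestTemplateProperties class itself. A plain-Java sketch of what it might look like, matching the getters used in the bean definition, is given below. The default values are assumptions chosen for illustration, and in a Spring Boot project the class would additionally be bound to the configuration file (for example with @ConfigurationProperties), which is omitted here to keep the sketch self-contained.

```java
/** Hypothetical holder for the pool and timeout settings; all default values are assumptions. */
final class RestTemplateProperties {
    private int maxTotal = 200;                   // max-total
    private int defaultMaxPerRoute = 100;         // default-max-per-route
    private int validateAfterInactivity = 2000;   // ms idle before a pooled connection is re-validated
    private int connectTimeout = 2000;            // connect-timeout, ms
    private int connectionRequestTimeout = 3000;  // connection-request-timeout, ms
    private int socketTimeout = 5000;             // socket-timeout, ms

    public int getMaxTotal() { return maxTotal; }
    public void setMaxTotal(int maxTotal) { this.maxTotal = maxTotal; }

    public int getDefaultMaxPerRoute() { return defaultMaxPerRoute; }
    public void setDefaultMaxPerRoute(int defaultMaxPerRoute) { this.defaultMaxPerRoute = defaultMaxPerRoute; }

    public int getValidateAfterInactivity() { return validateAfterInactivity; }
    public void setValidateAfterInactivity(int millis) { this.validateAfterInactivity = millis; }

    public int getConnectTimeout() { return connectTimeout; }
    public void setConnectTimeout(int millis) { this.connectTimeout = millis; }

    public int getConnectionRequestTimeout() { return connectionRequestTimeout; }
    public void setConnectionRequestTimeout(int millis) { this.connectionRequestTimeout = millis; }

    public int getSocketTimeout() { return socketTimeout; }
    public void setSocketTimeout(int millis) { this.socketTimeout = millis; }
}
```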

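Summary:

max-total: the maximum number of connections in the pool
default-max-per-route: the maximum number of parallel requests to any single service (route)
connect-timeout: the timeout for establishing the connection to the server
connection-request-timeout: the timeout for obtaining a connection from the pool
socket-timeout: the read timeout

Reference: https://blog.csdn.net/u013905744/java/article/details/94714696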