Using CephFS as Backend Storage for Kubernetes

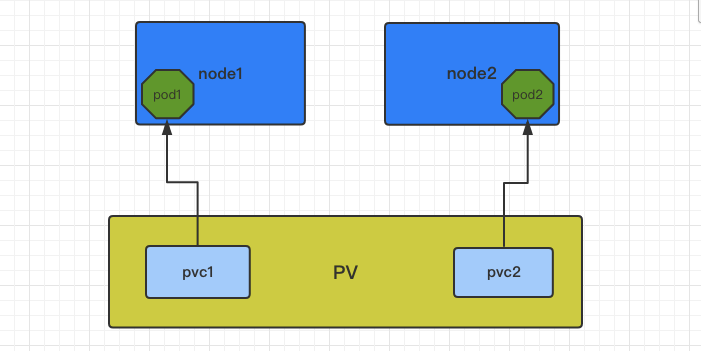

这里使用了k8s自身的持久化卷存储机制:PV和PVC。各组件之间的关系参考下图:

PV Access Modes

The access modes are:

ReadWriteOnce – the volume can be mounted as read-write by a single node

ReadOnlyMany – the volume can be mounted read-only by many nodes

ReadWriteMany – the volume can be mounted as read-write by many nodes

In the CLI, the access modes are abbreviated to:

RWO - ReadWriteOnce

ROX - ReadOnlyMany

RWX - ReadWriteMany

Reclaim Policy

Current reclaim policies are:

Retain – manual reclamation

Recycle – basic scrub (“rm -rf /thevolume/*”)

Delete – associated storage asset such as AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume is deleted

Currently, only NFS and HostPath support recycling. AWS EBS, GCE PD, Azure Disk, and Cinder volumes support deletion.
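If a volume's data should survive deletion of its claim, the reclaim policy of an existing PV can be changed with `kubectl patch`. The sketch below only builds and prints the patch document, so it runs without a cluster; `reclaim_patch` and the PV name `my-pv` are illustrative names, not part of the original setup.

```shell
# reclaim_patch is a hypothetical helper: it prints the patch document
# that sets spec.persistentVolumeReclaimPolicy to the given policy.
reclaim_patch() {
  printf '{"spec":{"persistentVolumeReclaimPolicy":"%s"}}' "$1"
}

patch=$(reclaim_patch Retain)
echo "$patch"

# Against a live cluster the patch would be applied like this
# (my-pv is a placeholder PV name):
# kubectl patch pv my-pv -p "$patch"
```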

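A PV is a stateful resource object and is always in one of the following states:

1. Available: free, not yet bound to any claim

2. Bound: bound to a PVC

3. Released: the bound PVC has been deleted, but the resource has not been reclaimed yet

4. Failed: automatic reclamation of the PV failed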
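To see which state a PV is in, the phase can be read from the object's status. A minimal sketch, assuming `kubectl` is already configured for the cluster; `pv_summary` is a hypothetical helper name, and defining it does not contact the cluster.

```shell
# pv_summary NAME
# Hypothetical helper: prints name, phase, access modes and reclaim policy
# of one PV on a single tab-separated line, using a JSONPath template.
pv_summary() {
  kubectl get pv "$1" \
    -o jsonpath='{.metadata.name}{"\t"}{.status.phase}{"\t"}{.spec.accessModes}{"\t"}{.spec.persistentVolumeReclaimPolicy}{"\n"}'
}

# Usage against a live cluster (my-pv is a placeholder PV name):
# pv_summary my-pv
```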

1. Create the secret. Every value under the Secret's data field must be base64-encoded. Encode the Ceph key with echo -n so that no trailing newline ends up in the encoded value.

    # echo -n "AQDchXhYTtjwHBAAk2/H1Ypa23WxKv4jA1NFWw==" | base64
    QVFEY2hYaFlUdGp3SEJBQWsyL0gxWXBhMjNXeEt2NGpBMU5GV3c9PQ==

    # vim ceph-secret.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      name: ceph-secret
    data:
      key: QVFEY2hYaFlUdGp3SEJBQWsyL0gxWXBhMjNXeEt2NGpBMU5GV3c9PQ==
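Before applying the Secret, it can help to confirm that the encoded value decodes back to exactly the Ceph key, with no stray newline. A small sketch, assuming a `base64` command that accepts `--decode` (GNU coreutils and macOS both do); the variable names are illustrative.

```shell
# Ceph key used in this post.
key='AQDchXhYTtjwHBAAk2/H1Ypa23WxKv4jA1NFWw=='

# printf '%s' writes the key without a trailing newline,
# so only the key itself is encoded.
encoded=$(printf '%s' "$key" | base64)
echo "$encoded"

# Decode the value again and compare it with the input.
decoded=$(printf '%s' "$encoded" | base64 --decode)
if [ "$decoded" = "$key" ]; then
  echo "round trip ok"
else
  echo "mismatch: encoded value does not decode to the key" >&2
fi
```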

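2. Create the PV. A PV is typically network storage: it does not belong to any node, but it can be accessed from every node. A PV is not defined on a pod; it is defined independently of pods. Currently, storage capacity is the only resource a PV can declare.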

    # vim ceph-pv.yml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: cephfs
    spec:
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      cephfs:
        monitors:
          - 172.16.100.5:6789
          - 172.16.100.6:6789
          - 172.16.100.7:6789
        path: /opt/eshop_dir/eshop
        user: admin
        secretRef:
          name: ceph-secret

3. Create the PVC.

    # vim ceph-pvc.yml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: cephfs
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 8Gi

4. Check the PV and the PVC.

    # kubectl get pv
    cephfs    10Gi    RWX    Retain    Bound    default/cephfs    2d

    # kubectl get pvc
    cephfs    Bound    cephfs    10Gi    RWX    2d
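The claim asked for 8Gi yet reports 10Gi, because a PVC binds to a whole PV whose capacity is at least the request and whose access modes include the requested mode. The following is a deliberately simplified sketch of that rule, not the controller's actual code; `can_bind` is a hypothetical function, and it ignores storage classes, selectors, and volume modes.

```shell
# can_bind PV_GI PV_MODES CLAIM_GI CLAIM_MODE
# Hypothetical, simplified matching rule:
#   - the PV capacity (in Gi) must be at least the requested size
#   - the space-separated PV access modes must contain the requested mode
can_bind() {
  [ "$1" -ge "$3" ] || return 1
  case " $2 " in
    *" $4 "*) return 0 ;;
    *) return 1 ;;
  esac
}

# The 10Gi RWX volume and the 8Gi RWX claim from this post.
if can_bind 10 "ReadWriteMany" 8 ReadWriteMany; then
  echo "claim can bind to the volume"
fi
```

Under the same rule, a 5Gi volume or a volume offering only ReadWriteOnce would not satisfy this claim.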

5. Create the RC. This is only a test example.

    # vim ceph-rc.yml

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: cephfstest
      labels:
        name: cephfstest
    spec:
      replicas: 4
      selector:
        name: cephfstest
      template:
        metadata:
          labels:
            name: cephfstest
        spec:
          containers:
            - name: cephfstest
              image: 172.60.0.107/pingpw/nginx-php:v4
              env:
                - name: GET_HOSTS_FROM
                  value: env
              ports:
                - containerPort: 81
              volumeMounts:
                - name: cephfs
                  mountPath: "/opt/cephfstest"
          volumes:
            - name: cephfs
              persistentVolumeClaim:
                claimName: cephfs

6. Check the pods.

    # kubectl get pod -o wide
    cephfstest-09j37    1/1    Running    0    2d    10.244.5.16    kuber-node03
    cephfstest-216r6    1/1    Running    0    2d    10.244.3.25    kuber-node01
    cephfstest-4sjgr    1/1    Running    0    2d    10.244.4.26    kuber-node02
    cephfstest-p2x7c    1/1    Running    0    2d    10.244.6.22    kuber-node04
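Since the four pods run on different nodes and mount the same ReadWriteMany volume, a file written by one pod should be visible to the others. A rough way to check this, assuming `kubectl` is configured and the container image provides `sh` and `cat`; `check_shared_mount` and `probe.txt` are made-up names.

```shell
# check_shared_mount WRITER_POD READER_POD
# Hypothetical helper: writes a probe file through one pod and reads it
# back through another. /opt/cephfstest is the mountPath from the RC.
check_shared_mount() {
  dir=/opt/cephfstest
  kubectl exec "$1" -- sh -c "echo shared > $dir/probe.txt" &&
    kubectl exec "$2" -- cat "$dir/probe.txt"
}

# Usage against a live cluster, with two pod names from the listing:
# check_shared_mount cephfstest-09j37 cephfstest-216r6
```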
