Docker network modes

Docker networking comes in single-host and multi-host forms. This post covers single-host Docker networking.

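Single-host Docker networking has the following modes:

1) Bridge network: specified with --net=bridge. This is the default.

2) Host network: specified with --net=host.

3) None network: specified with --net=none.

4) Container network: specified with --net=container:NAME_or_ID.

5) User-defined network: create it with docker network create my_net, then specify it with --net=my_net.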
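As a quick reference for the list above, the hypothetical Python helper below (`docker_run_args` is not part of any Docker SDK) only builds the `docker run` argument list for each mode and never contacts Docker. The busybox default image and the `"custom"` mode label are assumptions for illustration.

```python
from typing import List, Optional

# Modes whose --net value is just the mode name itself.
BUILTIN_MODES = {"bridge", "host", "none"}


def docker_run_args(name: str, mode: str, target: Optional[str] = None,
                    image: str = "busybox") -> List[str]:
    """Build (but do not run) a `docker run -itd` command for a network mode.

    mode is "bridge", "host", "none", "container" or "custom"; target is the
    container name/ID for container mode, or the network name for custom mode.
    """
    if mode in BUILTIN_MODES:
        net = mode
    elif mode == "container":
        if not target:
            raise ValueError("container mode needs a container name or ID")
        net = f"container:{target}"
    elif mode == "custom":
        if not target:
            raise ValueError("custom mode needs a network from `docker network create`")
        net = target
    else:
        raise ValueError(f"unknown network mode: {mode}")
    return ["docker", "run", "-itd", "--name", name, f"--net={net}", image]


if __name__ == "__main__":
    # Mirrors the test5 example in the container-mode section.
    print(" ".join(docker_run_args("test5", "container", "test1")))
```

On a host with Docker installed, the returned list could be passed to `subprocess.run`.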

1、First, an important concept: namespaces, and in particular what a network namespace actually is.

Let's demonstrate network namespaces hands-on.

Create two busybox containers:

    [root@docker01 ~]# docker run -itd --name test1 busybox
    f862152b6631cf28cf041b454ab85f5d190b03029c088a331a64b164900ef331
    [root@docker01 ~]# docker run -itd --name test2 busybox
    f78690e1e0b820c4fea8af6e4d062f4f6460f68697274e70b0189b5c2ff3386d

Two containers, test1 and test2, are now running. Enter each one and check its IP address:

    [root@docker01 ~]# docker exec -it test1 sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    30: eth0@if31: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever
    / # exit
    [root@docker01 ~]# docker exec -it test2 sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    32: eth0@if33: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

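test1's IP is 172.17.0.2 and test2's is 172.17.0.3. Each container sees only its own interfaces (lo and eth0); that separate view of the network is its network namespace.

From test2, test1 (172.17.0.2) can be pinged successfully: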

    [root@docker01 ~]# docker exec -it test2 sh
    / # ping 172.17.0.2
    PING 172.17.0.2 (172.17.0.2): 56 data bytes
    64 bytes from 172.17.0.2: seq=0 ttl=64 time=18.389 ms
    64 bytes from 172.17.0.2: seq=1 ttl=64 time=0.084 ms

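So network namespaces are independent: a container's network namespace is separate from the host's, and each container has its own namespace. Containers attached to the same bridge can nevertheless reach each other.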

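2、How exactly is Docker networking configured?

1. Bridge network mode

From inside a container, pinging www.baidu.com succeeds. How does that work? The packets have to be relayed hop by hop, roughly: container ---> VM (the Docker host) ---> physical host ---> Baidu.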

    / # ping www.baidu.com
    PING www.baidu.com (115.239.210.27): 56 data bytes
    64 bytes from 115.239.210.27: seq=0 ttl=127 time=15.410 ms
    64 bytes from 115.239.210.27: seq=1 ttl=127 time=7.586 ms
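Whether a packet crosses the bridge directly or is handed to the gateway depends on the container's routing table. The sketch below models that decision with Python's `ipaddress` module, assuming test1's settings from this post (172.17.0.2/16, gateway 172.17.0.1). It is a simplified model of one connected route plus a default route, not a reading of the real routing table, and `next_hop` is a hypothetical name.

```python
import ipaddress

# Assumed container settings, taken from test1 in this post.
CONTAINER_IFACE = ipaddress.ip_interface("172.17.0.2/16")
GATEWAY = ipaddress.ip_address("172.17.0.1")  # docker0's address on the host


def next_hop(destination: str) -> str:
    """Describe where a packet to `destination` is sent first.

    Destinations inside the container's subnet are delivered directly over
    eth0 through the docker0 bridge; everything else goes to the gateway.
    """
    dst = ipaddress.ip_address(destination)
    if dst in CONTAINER_IFACE.network:
        return f"direct via eth0 to {dst}"
    return f"via gateway {GATEWAY}"


if __name__ == "__main__":
    print(next_hop("172.17.0.3"))      # test2, same /16
    print(next_hop("115.239.210.27"))  # the www.baidu.com address above
```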

List the Docker networks:

    [root@docker01 ~]# docker network ls
    NETWORK ID     NAME     DRIVER   SCOPE
    99c36d692cac   bridge   bridge   local
    ec237fbb8837   host     host     local
    94e84f3d8354   none     null     local

This shows the default bridge network, alongside host and none.

test1 and test2 are attached to this bridge network.

Let's look at test1's network settings:

    [root@docker01 ~]# docker inspect test1
    ......
    "NetworkSettings": {
        "Bridge": "",
        "SandboxID": "6f8f2f996e4d5bfeddd179832cc5ffb3cd3f9ee830ce6acfff16fd249e9e0130",
        "HairpinMode": false,
        "LinkLocalIPv6Address": "",
        "LinkLocalIPv6PrefixLen": 0,
        "Ports": {},
        "SandboxKey": "/var/run/docker/netns/6f8f2f996e4d",
        "SecondaryIPAddresses": null,
        "SecondaryIPv6Addresses": null,
        "EndpointID": "d0a01da2cd1b487c6829518c7bdc01215b66a11b1e79e80862ab81c49e5cb0f7",
        "Gateway": "172.17.0.1",
        "GlobalIPv6Address": "",
        "GlobalIPv6PrefixLen": 0,
        "IPAddress": "172.17.0.2",
        "IPPrefixLen": 16,
        "IPv6Gateway": "",
        "MacAddress": "02:42:ac:11:00:02",
        "Networks": {
            "bridge": {
                "IPAMConfig": null,
                "Links": null,
                "Aliases": null,
                "NetworkID": "99c36d692cac8d6be351681e590e0048f6a746fd9e1c88b2e3b7769dfd57fccb",
                "EndpointID": "d0a01da2cd1b487c6829518c7bdc01215b66a11b1e79e80862ab81c49e5cb0f7",
                "Gateway": "172.17.0.1",
                "IPAddress": "172.17.0.2",
                "IPPrefixLen": 16,
                "IPv6Gateway": "",
                "GlobalIPv6Address": "",
                "GlobalIPv6PrefixLen": 0,
                "MacAddress": "02:42:ac:11:00:02",
                "DriverOpts": null
            }
        }
    ......
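`docker inspect` prints a JSON array, so fields like these can be extracted programmatically. The sketch assumes the output has already been captured as text (for example from `docker inspect test1`); the helper name `network_addresses` and the trimmed `SAMPLE` document, reduced to keys shown above, are illustrative.

```python
import json
from typing import Dict, Tuple


def network_addresses(inspect_output: str) -> Dict[str, Tuple[str, str]]:
    """Map each network of the first inspected container to (IP, gateway)."""
    containers = json.loads(inspect_output)  # docker inspect returns a list
    networks = containers[0]["NetworkSettings"]["Networks"]
    return {name: (cfg["IPAddress"], cfg["Gateway"])
            for name, cfg in networks.items()}


# Trimmed sample shaped like the test1 output above.
SAMPLE = """
[{"NetworkSettings": {"Networks": {"bridge": {
    "Gateway": "172.17.0.1",
    "IPAddress": "172.17.0.2",
    "IPPrefixLen": 16,
    "MacAddress": "02:42:ac:11:00:02"}}}}]
"""

if __name__ == "__main__":
    print(network_addresses(SAMPLE))
```

For a one-off lookup from the shell, `docker inspect --format '{{.NetworkSettings.IPAddress}}' test1` reads the same field with a Go template.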

Now check the host's interfaces:

    [root@docker01 ~]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:cf:3d:4b brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.99/24 brd 10.0.0.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fecf:3d4b/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:1f:f4:29:1a brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:1fff:fef4:291a/64 scope link
           valid_lft forever preferred_lft forever
    4: veth3b111e2@if32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default
        link/ether 62:a9:c9:0b:be:d6 brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet6 fe80::60a9:c9ff:fe0b:bed6/64 scope link
           valid_lft forever preferred_lft forever
    5: vethdc6d1fa@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether a2:17:9c:af:d1:d9 brd ff:ff:ff:ff:ff:ff link-netnsid 2
        inet6 fe80::a017:9cff:feaf:d1d9/64 scope link
           valid_lft forever preferred_lft forever

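The host has five interfaces: lo, ens33, docker0, veth3b111e2@if32 and vethdc6d1fa@if30. A veth device is one end of a virtual Ethernet pair that connects two network namespaces. The host-side ends, veth3b111e2@if32 and vethdc6d1fa@if30, connect to docker0, so test1 and test2 should each contain the matching other end.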

    [root@docker01 ~]# docker exec test1 ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    30: eth0@if31: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

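The container's eth0 and one host-side veth are the two ends of a pair, and the @ifN suffix gives the interface index of the peer. test1's eth0 is interface 30, and the host-side vethdc6d1fa@if30 points at interface 30, so those two form a pair. Likewise, test2's eth0 (interface 32) pairs with veth3b111e2@if32.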
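The pairing can be checked mechanically: in `ip a` output an interface shown as `NAME@ifN` has its peer at interface index N in the other namespace. The sketch below parses header lines quoted in this post and matches each container interface with the host veth whose `@ifN` equals the container interface's own index. The helper names are hypothetical, and since it only parses text it works on output copied from any host.

```python
import re
from typing import Dict, List, Tuple

# Matches header lines such as "30: eth0@if31: <BROADCAST,...>".
LINK_RE = re.compile(r"^(\d+):\s+([\w.-]+)@if(\d+):", re.MULTILINE)


def parse_links(ip_a_output: str) -> List[Tuple[int, str, int]]:
    """Return (index, name, peer_index) for every NAME@ifN interface."""
    return [(int(i), name, int(peer))
            for i, name, peer in LINK_RE.findall(ip_a_output)]


def veth_peers(container_out: str, host_out: str) -> Dict[str, str]:
    """Map container interface names to the host veth paired with them."""
    host_by_peer = {peer: name for _, name, peer in parse_links(host_out)}
    return {name: host_by_peer[index]
            for index, name, _ in parse_links(container_out)
            if index in host_by_peer}


# Header lines copied from the outputs above.
CONTAINER = "30: eth0@if31: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500"
HOST = """4: veth3b111e2@if32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500
5: vethdc6d1fa@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500"""

if __name__ == "__main__":
    print(veth_peers(CONTAINER, HOST))
```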

Their relationship can be inspected with the brctl command, which comes from the bridge-utils package:

    yum install -y bridge-utils    # provides the brctl command

    [root@docker01 ~]# brctl show docker0
    8000.02421ff4291a    no    veth3b111e2
                               vethdc6d1fa

Two interfaces are listed because both test1 and test2 are currently attached to docker0.
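Without bridge-utils, the Linux kernel exposes the same membership through sysfs: each port attached to a bridge appears as an entry under `/sys/class/net/<bridge>/brif/` (iproute2's `ip link show master docker0` lists it too). The sketch below reads that directory. The `sysfs_root` parameter and the `demo` helper are assumptions added so the function can run against a throwaway directory tree; on a real Docker host it would be called as `bridge_ports("docker0")`.

```python
import os
import tempfile
from typing import List


def bridge_ports(bridge: str, sysfs_root: str = "/sys/class/net") -> List[str]:
    """Return the sorted names of interfaces attached to `bridge`.

    Raises FileNotFoundError if `bridge` does not exist or is not a bridge.
    """
    return sorted(os.listdir(os.path.join(sysfs_root, bridge, "brif")))


def demo() -> List[str]:
    """Run bridge_ports on a fake sysfs tree mirroring the brctl output above."""
    with tempfile.TemporaryDirectory() as root:
        for port in ("vethdc6d1fa", "veth3b111e2"):
            os.makedirs(os.path.join(root, "docker0", "brif", port))
        return bridge_ports("docker0", sysfs_root=root)


if __name__ == "__main__":
    print(demo())
```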

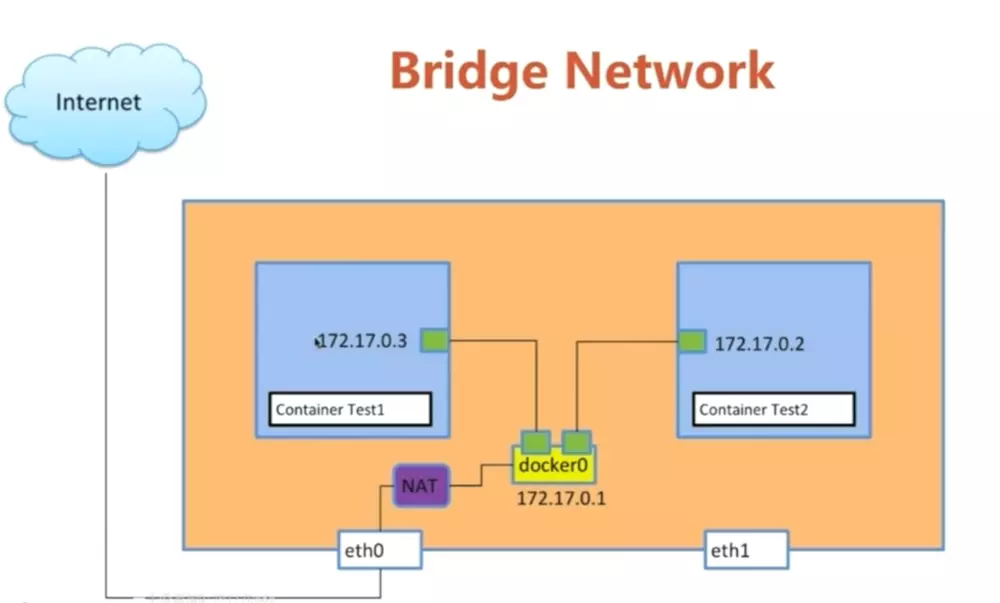

test1 和test2 之前通过docker0,docker0 类似test1和test2之前的路由器,docker0 在通过nat的eth0连接互联网。

为了形象理解docker bridge network可以参考下图:

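2. Host network mode

In host mode the container does not get its own network namespace; it shares the host's. The container therefore has no network interfaces of its own and uses the host's directly. Apart from networking, the container is still isolated in every other respect.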

    [root@docker01 ~]# docker run -itd --name test3 --network host busybox
    2268c2f8eb8ff74b72e521148dc1fe82a9a822857f251abab2368b24924453d6
    [root@docker01 ~]# docker exec -it test3 /bin/sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:cf:3d:4b brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.99/24 brd 10.0.0.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fecf:3d4b/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:1f:f4:29:1a brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:1fff:fef4:291a/64 scope link
           valid_lft forever preferred_lft forever
    4: veth3b111e2@if32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default
        link/ether 62:a9:c9:0b:be:d6 brd ff:ff:ff:ff:ff:ff link-netnsid 1
        inet6 fe80::60a9:c9ff:fe0b:bed6/64 scope link
           valid_lft forever preferred_lft forever
    5: vethdc6d1fa@if30: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
        link/ether a2:17:9c:af:d1:d9 brd ff:ff:ff:ff:ff:ff link-netnsid 2
        inet6 fe80::a017:9cff:feaf:d1d9/64 scope link
           valid_lft forever preferred_lft forever

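This container has no interfaces of its own; its interfaces and IP addresses are exactly the host's. Because it also shares the host's ports, only one container can listen on a given port: for example, only one nginx on port 80 can run this way.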
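The one-nginx limit follows from sharing a single network namespace: two processes in the same namespace cannot both bind the same address and port. The sketch below reproduces that with two ordinary sockets in one Python process, standing in for two host-mode containers. It binds 127.0.0.1 on an OS-chosen port instead of port 80 so it runs without root, and it assumes Linux, where the failure surfaces as `EADDRINUSE`.

```python
import errno
import socket


def second_bind_conflicts() -> bool:
    """Bind two TCP sockets to the same port; True if the second bind fails."""
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind(("127.0.0.1", 0))  # let the OS pick a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            # Like a second nginx trying to take the port already in use.
            second.bind(("127.0.0.1", port))
        except OSError as exc:
            return exc.errno == errno.EADDRINUSE
        return False
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    print(second_bind_conflicts())
```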

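3. None network mode:

The container gets its own network namespace, but Docker performs no network configuration in it; any networking has to be set up manually.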

    [root@docker01 ~]# docker run -itd --name test4 --network none busybox
    7222ec17cd70c1094af5710445bec25bd2a7bd09c86f97348857ad5f70ed21b3
    [root@docker01 ~]# docker exec -it test4 /bin/sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever

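This container has only the loopback interface and no externally reachable IP, so it cannot communicate with the outside world. That suits workloads with high security requirements; networking can be added manually if needed.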
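One way to confirm from inside a process that it was started with `--network none` is to list the interfaces it can see: only loopback should remain. The sketch uses Python's `socket.if_nameindex()` (available on Unix). It assumes an image that ships Python (busybox does not), the function names are hypothetical, and treating "only `lo`" as the signature of none mode is an assumption that matches the test4 output above.

```python
import socket
from typing import List


def non_loopback_interfaces() -> List[str]:
    """Names of all interfaces in this network namespace except `lo`."""
    return [name for _, name in socket.if_nameindex() if name != "lo"]


def looks_like_none_mode() -> bool:
    """True when the namespace has no interface other than loopback."""
    return not non_loopback_interfaces()


if __name__ == "__main__":
    print(non_loopback_interfaces())
    print(looks_like_none_mode())
```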

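4. Container network mode:

The new container joins the network namespace of a specified existing container and therefore has the same network configuration. It creates no network interface and configures no IP of its own; instead it shares the other container's IP address, port range, and so on. Apart from networking, the two containers remain isolated (filesystem, process list, etc.). Processes in the two containers can communicate through the lo device.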

    [root@docker01 ~]# docker run -itd --name test5 --net=container:test1 busybox
    af3a29e3171f82ef1a2e827d9307fbd2de6484ec225c6227de5de2fe6e557a90
    [root@docker01 ~]# docker exec -it test5 /bin/sh
    / # ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    30: eth0@if31: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

test5's network configuration is identical to test1's: the same eth0 (interface 30), IP 172.17.0.2 and MAC address.
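Because test5 and test1 share one network namespace, a service listening on 127.0.0.1 in one is reachable from the other over `lo`. The sketch below imitates that inside a single Python process, with a server thread standing in for test1 and the calling code standing in for test5; the echo protocol and the function name are illustrative assumptions.

```python
import socket
import threading


def echo_over_loopback(message: bytes) -> bytes:
    """Send `message` to a one-shot echo server on 127.0.0.1 and return the reply."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # let the OS choose a free port
    server.listen(1)
    server.settimeout(5)           # avoid hanging if no client ever connects
    port = server.getsockname()[1]

    def serve_once() -> None:
        # Stands in for test1: echo everything until the client closes its side.
        conn, _ = server.accept()
        with conn:
            received = []
            while True:
                data = conn.recv(1024)
                if not data:
                    break
                received.append(data)
            conn.sendall(b"".join(received))

    worker = threading.Thread(target=serve_once)
    worker.start()
    try:
        # Stands in for test5: connect over the shared loopback interface.
        with socket.create_connection(("127.0.0.1", port), timeout=5) as client:
            client.sendall(message)
            client.shutdown(socket.SHUT_WR)  # signal the end of the request
            chunks = []
            while True:
                data = client.recv(1024)
                if not data:
                    break
                chunks.append(data)
    finally:
        worker.join()
        server.close()
    return b"".join(chunks)


if __name__ == "__main__":
    print(echo_over_loopback(b"hello from test5"))
```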

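5. User-defined network mode:

This works on the same principle as the default bridge, but a user-defined network has built-in DNS-based discovery, so containers can reach each other by container name or hostname.

Create a user-defined network with docker network create: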

    [root@docker01 ~]# docker network create test
    [root@docker01 ~]# docker network ls
    NETWORK ID     NAME     DRIVER   SCOPE
    99c36d692cac   bridge   bridge   local
    ec237fbb8837   host     host     local
    94e84f3d8354   none     null     local
    ea714a707d9d   test     bridge   local

Start containers attached to the custom bridge:

    [root@docker01 ~]# docker run -itd --name test6 --net=test busybox
    6b4add91370de5e9ac727c5d8992e516c7a5e8f75846f55f4c314bc433083998
    [root@docker01 ~]# docker run -itd --name test7 --net=test busybox
    eb55f38600e725f050dea02d5227516858a4a3c7c6f85fec8bc9932864c811f8

Unlike on the default bridge network, containers on a user-defined network can reach each other by container name or hostname:

    [root@docker01 ~]# docker exec -it test6 /bin/sh
    / # ping test7
    PING test7 (172.21.0.3): 56 data bytes
    64 bytes from 172.21.0.3: seq=0 ttl=64 time=0.151 ms
    64 bytes from 172.21.0.3: seq=1 ttl=64 time=0.132 ms
    ^C
    --- test7 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.083/0.122/0.151 ms
    / # exit
    [root@docker01 ~]# docker exec -it test7 /bin/sh
    / # ping test6
    PING test6 (172.21.0.2): 56 data bytes
    64 bytes from 172.21.0.2: seq=0 ttl=64 time=0.139 ms
    64 bytes from 172.21.0.2: seq=1 ttl=64 time=0.087 ms
    ^C
    --- test6 ping statistics ---
    3 packets transmitted, 3 packets received, 0% packet loss
    round-trip min/avg/max = 0.087/0.104/0.139 ms

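This works because, on user-defined networks, Docker runs an embedded DNS server (reachable at 127.0.0.11 inside each container, as listed in its /etc/resolv.conf) that resolves container names to their IPs. The /etc/hosts file is not what resolves the other container: inside test7 it maps only the container's own IP to its own hostname (eb55f38600e7, the short container ID):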

    / # cat /etc/hosts
    127.0.0.1    localhost
    ::1          localhost ip6-localhost ip6-loopback
    fe00::0      ip6-localnet
    ff00::0      ip6-mcastprefix
    ff02::1      ip6-allnodes
    ff02::2      ip6-allrouters
    172.21.0.3   eb55f38600e7
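Parsing that hosts file makes the point explicit: it contains an entry for test7's own hostname (172.21.0.3 eb55f38600e7) but no line for test6, so a lookup of test6 must fall through to DNS. The sketch below is a minimal `/etc/hosts` parser (the function name is hypothetical) run on the text captured above; it keeps the first address seen for each name.

```python
from typing import Dict

# The /etc/hosts content shown above, captured inside test7.
HOSTS_TEXT = """127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.21.0.3 eb55f38600e7
"""


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every hostname or alias in a hosts file to its address (first wins)."""
    table: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            table.setdefault(name, address)
    return table


if __name__ == "__main__":
    hosts = parse_hosts(HOSTS_TEXT)
    print(hosts["eb55f38600e7"])  # test7's own hostname
    print("test6" in hosts)       # not listed, so DNS must answer
```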

浙公网安备 33010602011771号
