Basic usage of sklearn

1. Simple usage

from sklearn.linear_model import LinearRegression

from sklearn import datasets

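# Load the data: Boston house-price prediction (load_boston was removed in scikit-learn 1.2, so this requires an older version)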

loaded_data = datasets.load_boston()

data_X = loaded_data.data

data_Y = loaded_data.target

# Linear regression model

model = LinearRegression()

model.fit(data_X, data_Y)

print(model.predict(data_X[:4, :]))

print(data_Y[:4])

[30.00384338 25.02556238 30.56759672 28.60703649]

[24. 21.6 34.7 33.4]

print(model.coef_) # coefficients

print(model.intercept_) # intercept

[-1.08011358e-01 4.64204584e-02 2.05586264e-02 2.68673382e+00

-1.77666112e+01 3.80986521e+00 6.92224640e-04 -1.47556685e+00

3.06049479e-01 -1.23345939e-02 -9.52747232e-01 9.31168327e-03

-5.24758378e-01]

36.45948838509001

print(model.get_params()) # get the model's parameter settings

{'copy_X': True, 'fit_intercept': True, 'n_jobs': None, 'normalize': False, 'positive': False}

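# Model evaluation: score() returns R^2 = 1 - SSE/SST, which equals SSR/SST here because SST = SSE + SSR for a least-squares fit with an intercept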

print(model.score(data_X, data_Y))

0.7406426641094094
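To make the R^2 score concrete, the following is a minimal sketch that computes the three sums of squares by hand and compares them with `model.score`. It uses synthetic data from `make_regression` as a stand-in (an assumption for illustration, not the housing data above), and the variable names are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression

# Synthetic regression data (an assumption; not the dataset used above).
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)

model = LinearRegression().fit(X, y)
y_hat = model.predict(X)

sse = np.sum((y - y_hat) ** 2)         # residual sum of squares
ssr = np.sum((y_hat - y.mean()) ** 2)  # explained sum of squares
sst = np.sum((y - y.mean()) ** 2)      # total sum of squares

r2_definition = 1 - sse / sst   # the definition score() uses
r2_ratio = ssr / sst            # the SSR/SST form
r2_sklearn = model.score(X, y)

print(r2_definition, r2_ratio, r2_sklearn)
```

The two forms agree only when the score is computed on the data the model was fitted to and the model includes an intercept; on held-out data, 1 - SSE/SST is the one to rely on.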

2. Preprocessing

(1) preprocessing.scale()

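For each feature (each column), subtract the column mean and divide by the column standard deviation (not the variance)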

from sklearn import preprocessing

import numpy as np

a = np.array([[10, 2.7, 3.6],

[-100, 5, -2],

[120, 20, 40]], dtype=np.float64)

print(a)

[[ 10. 2.7 3.6]

[-100. 5. -2. ]

[ 120. 20. 40. ]]

print(preprocessing.scale(a))

[[ 0. -0.85170713 -0.55138018]

[-1.22474487 -0.55187146 -0.852133 ]

[ 1.22474487 1.40357859 1.40351318]]

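In general, each column is a feature and each row is a sample,

so scale standardizes column by column by default (subtract the mean, divide by the standard deviation)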

print(np.mean(a, axis=0))

print(np.std(a, axis=0))

print((a - np.mean(a, axis=0)) / np.std(a, axis=0))

[10. 9.23333333 13.86666667]

[89.8146239 7.67086841 18.61994152]

[[ 0. -0.85170713 -0.55138018]

[-1.22474487 -0.55187146 -0.852133 ]

[ 1.22474487 1.40357859 1.40351318]]
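When the same scaling has to be reused on new samples, one common option is the `StandardScaler` class, which stores the column means and standard deviations it learned. The sketch below is an illustration under that assumption; `new_sample` is a made-up row, not data from this post.

```python
import numpy as np
from sklearn import preprocessing
from sklearn.preprocessing import StandardScaler

a = np.array([[10, 2.7, 3.6],
              [-100, 5, -2],
              [120, 20, 40]], dtype=np.float64)

# fit() learns the per-column mean and standard deviation.
scaler = StandardScaler().fit(a)
scaled = scaler.transform(a)

# A hypothetical new sample, scaled with the statistics learned from `a`.
new_sample = np.array([[10.0, 5.0, 0.0]])
new_scaled = scaler.transform(new_sample)

print(scaler.mean_)   # per-column means
print(scaler.scale_)  # per-column standard deviations
print(new_scaled)
```

Fitting the scaler on the training data only and then applying `transform` to the test data keeps test-set statistics from leaking into the model.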

(2) preprocessing.minmax_scale()

from sklearn import preprocessing

from sklearn.model_selection import train_test_split

from sklearn.datasets import make_classification

from sklearn.svm import SVC

import matplotlib.pyplot as plt

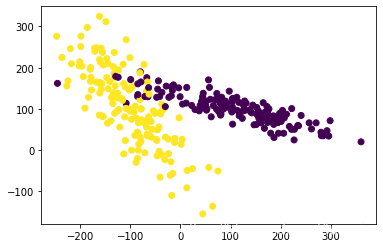

X, y = make_classification(n_samples=300, n_features=2, n_redundant=0, scale=100,

n_informative=2, random_state=22, n_clusters_per_class=1)

plt.scatter(X[:, 0], X[:, 1], c=y)

plt.show()

Min-max scaling

$$(max-min)\frac{x_{ij}-X.min}{X.max-X.min}+min$$

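First subtract the column minimum from each element and divide by the column's range (maximum minus minimum), which maps every element into [0, 1]. Then map [0, 1] onto [a, b] by multiplying by (b - a) and adding a.

Here max and min are the bounds of the requested range (so a = min and b = max), while X.max and X.min are the maximum and minimum of that column.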
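The formula can be checked with a few lines of NumPy. This is a minimal sketch: `minmax_manual` is a hypothetical helper written for this illustration, and it assumes no column is constant (otherwise the denominator would be zero).

```python
import numpy as np
from sklearn import preprocessing


def minmax_manual(X, feature_range=(0.0, 1.0)):
    """Column-wise min-max scaling; assumes no column is constant."""
    lo, hi = feature_range                 # the requested min and max
    col_min = X.min(axis=0)                # X.min for each column
    col_max = X.max(axis=0)                # X.max for each column
    unit = (X - col_min) / (col_max - col_min)  # values in [0, 1]
    return unit * (hi - lo) + lo           # stretch and shift to [lo, hi]


a = np.array([[10, 2.7, 3.6],
              [-100, 5, -2],
              [120, 20, 40]], dtype=np.float64)

manual = minmax_manual(a, feature_range=(-1.0, 1.0))
library = preprocessing.minmax_scale(a, feature_range=(-1.0, 1.0))

print(manual)
print(np.allclose(manual, library))
```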

# Min-max scaling

X = preprocessing.minmax_scale(X) # the default feature_range is 0 to 1

# preprocessing.minmax_scale(X, copy=False) # in-place operation, equivalent to the line above

print(X[:4,:])

[[0.85250502 0.46068155]

[0.21852168 0.75693678]

[0.63473781 0.52695779]

[0.5421389 0.5902955 ]]

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

clf = SVC()

clf.fit(X_train, y_train)

print(clf.score(X_test, y_test))

0.9333333333333333

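3. Cross-validation

The K-nearest-neighbors algorithm is used for classification: find the K training samples closest to the query point and take the most common class (the mode) among those K samples as the prediction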

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()

X = iris.data

y = iris.target

# random_state makes the random split reproducible, so it is the same on every run

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=4)

knn = KNeighborsClassifier(n_neighbors=7)

knn.fit(X_train, y_train)

y_pred = knn.predict(X_test)

print(y_pred)

print(y_test)

print(knn.score(X_test, y_test))

[2 0 2 2 2 1 2 0 0 2 0 0 0 1 2 0 1 0 0 2 0 2 1 0 0 0 0 0 0 2 1 0 2 0 1 2 2

1]

[2 0 2 2 2 1 1 0 0 2 0 0 0 1 2 0 1 0 0 2 0 2 1 0 0 0 0 0 0 2 1 0 2 0 1 2 2

1]

0.9736842105263158
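The neighbor-voting rule described at the start of this section can be sketched directly in NumPy. This is an illustration only: `knn_predict_one` is a hypothetical helper, it uses plain Euclidean distance, and its tie-breaking may differ from `KNeighborsClassifier`.

```python
from collections import Counter

import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split


def knn_predict_one(X_train, y_train, x, k):
    """Predict the class of one sample by majority vote among its k nearest neighbors."""
    distances = np.linalg.norm(X_train - x, axis=1)  # Euclidean distance to every training sample
    nearest = np.argsort(distances)[:k]              # indices of the k closest samples
    votes = Counter(y_train[nearest].tolist())       # class -> number of votes
    return votes.most_common(1)[0][0]                # the mode of the k labels


X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=4)

manual_pred = np.array([knn_predict_one(X_train, y_train, x, k=7) for x in X_test])
manual_acc = np.mean(manual_pred == y_test)
print(manual_acc)
```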

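(1) cross_val_score

Note: cross-validation makes the model's score more reliable; it does not improve the model itself

This is a classification task, so scoring uses accuracy.

For regression you can use neg_mean_squared_error, the negated mean squared error (MSE)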

from sklearn.model_selection import cross_val_score

knn = KNeighborsClassifier(n_neighbors=5)

# Split the data into 5 folds; each fold serves as the test set once, with the other 4 as the training set

# Then average the 5 test scores

scores = cross_val_score(knn, X, y, cv=5, scoring='accuracy')

print(scores)

print(scores.mean())

[0.96666667 1. 0.93333333 0.96666667 1. ]

0.9733333333333334
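What `cross_val_score` does can be spelled out as an explicit loop over folds. The sketch below assumes that, for a classifier and an integer `cv`, the folds come from an unshuffled `StratifiedKFold`; the loop variable names are hypothetical.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)
knn = KNeighborsClassifier(n_neighbors=5)

manual_scores = []
for train_idx, test_idx in StratifiedKFold(n_splits=5).split(X, y):
    fold_model = clone(knn)                     # fresh, unfitted copy for each fold
    fold_model.fit(X[train_idx], y[train_idx])  # train on 4 folds
    manual_scores.append(fold_model.score(X[test_idx], y[test_idx]))  # accuracy on the held-out fold
manual_scores = np.array(manual_scores)

library_scores = cross_val_score(knn, X, y, cv=5, scoring='accuracy')

print(manual_scores)
print(manual_scores.mean())
```

Each sample is used for testing exactly once, which is why the averaged score is a steadier estimate than a single train/test split.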

Available values for scoring

from sklearn import metrics

print(metrics.SCORERS.keys()) # SCORERS was removed in scikit-learn 1.3; newer versions provide metrics.get_scorer_names()

dict_keys(['explained_variance', 'r2', 'max_error', 'neg_median_absolute_error', 'neg_mean_absolute_error', 'neg_mean_absolute_percentage_error', 'neg_mean_squared_error', 'neg_mean_squared_log_error', 'neg_root_mean_squared_error', 'neg_mean_poisson_deviance', 'neg_mean_gamma_deviance', 'accuracy', 'top_k_accuracy', 'roc_auc', 'roc_auc_ovr', 'roc_auc_ovo', 'roc_auc_ovr_weighted', 'roc_auc_ovo_weighted', 'balanced_accuracy', 'average_precision', 'neg_log_loss', 'neg_brier_score', 'adjusted_rand_score', 'rand_score', 'homogeneity_score', 'completeness_score', 'v_measure_score', 'mutual_info_score', 'adjusted_mutual_info_score', 'normalized_mutual_info_score', 'fowlkes_mallows_score', 'precision', 'precision_macro', 'precision_micro', 'precision_samples', 'precision_weighted', 'recall', 'recall_macro', 'recall_micro', 'recall_samples', 'recall_weighted', 'f1', 'f1_macro', 'f1_micro', 'f1_samples', 'f1_weighted', 'jaccard', 'jaccard_macro', 'jaccard_micro', 'jaccard_samples', 'jaccard_weighted'])

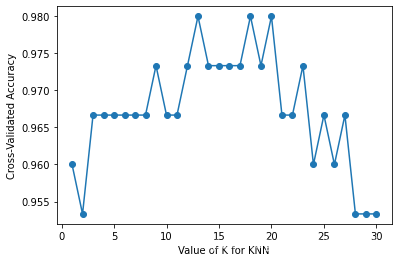

Finding a suitable k

from sklearn.model_selection import cross_val_score

import matplotlib.pyplot as plt

k_range = range(1, 31)

k_scores = []

for k in k_range:

    knn = KNeighborsClassifier(n_neighbors=k)

    scores = cross_val_score(knn, X, y, cv=10, scoring='accuracy')

    # loss = -cross_val_score(knn, X, y, cv=10, scoring='neg_mean_squared_error')

    k_scores.append(scores.mean())

plt.plot(k_range, k_scores, 'o-')

plt.xlabel('Value of K for KNN')

plt.ylabel('Cross-Validated Accuracy')

plt.show()

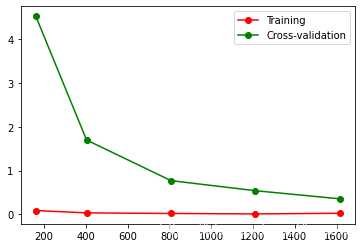
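Instead of reading the best k off the plot, it can be picked programmatically. The following is a small sketch under the same setup (iris, 10-fold cross-validation); `best_k` and the other names are hypothetical, and `np.argmax` returns the smallest k when several values tie for the top score.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

X, y = load_iris(return_X_y=True)

k_values = list(range(1, 31))
mean_scores = [
    cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y,
                    cv=10, scoring='accuracy').mean()
    for k in k_values
]

best_index = int(np.argmax(mean_scores))  # first position of the highest mean accuracy
best_k = k_values[best_index]
print(best_k, mean_scores[best_index])
```

Several neighboring values of k often score almost the same, so the single best value should be read as a rough guide rather than a sharp optimum.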

(2) learning_curve

Learning curve: shows how the trained model performs as the number of training samples grows. It makes overfitting and underfitting easy to see

from sklearn.model_selection import learning_curve

from sklearn.datasets import load_digits

from sklearn.svm import SVC

import matplotlib.pyplot as plt

import numpy as np

digits = load_digits()

X = digits.data

y = digits.target

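The training-set size takes the fractions 0.1, 0.25, ... of the data, increasing step by step

At each size, 10-fold cross-validation is run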

train_sizes, train_loss, test_loss = learning_curve(

SVC(gamma=0.001), X, y, cv=10, scoring='neg_mean_squared_error',

train_sizes=[0.1, 0.25, 0.5, 0.75, 1]

)

train_loss_mean = -np.mean(train_loss, axis=1)

test_loss_mean = -np.mean(test_loss, axis=1)

plt.plot(train_sizes, train_loss_mean, 'ro-', label='Training')

plt.plot(train_sizes, test_loss_mean, 'go-', label='Cross-validation')

plt.legend()

plt.show()

As the amount of data grows, both the training loss and the cross-validation loss gradually improve

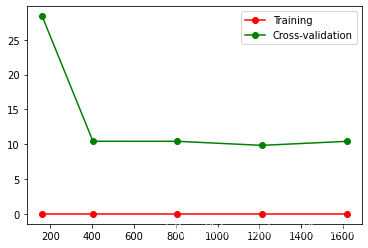

Now change the model's parameter and see the effect

train_sizes, train_loss, test_loss = learning_curve(

SVC(gamma=0.1), X, y, cv=10, scoring='neg_mean_squared_error',

train_sizes=[0.1, 0.25, 0.5, 0.75, 1]

)

train_loss_mean = -np.mean(train_loss, axis=1)

test_loss_mean = -np.mean(test_loss, axis=1)

plt.plot(train_sizes, train_loss_mean, 'ro-', label='Training')

plt.plot(train_sizes, test_loss_mean, 'go-', label='Cross-validation')

plt.legend()

plt.show()

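With gamma changed to 0.1, the cross-validation loss no longer falls as the data grows and instead rises, which indicates that the model is overfitting

This shows that the choice of model parameters is critical.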
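Overfitting can also be read as a number: the gap between the training score and the cross-validation score at each training-set size. The sketch below is one way to do that, using accuracy instead of the squared-error loss and a smaller `cv=3` with three sizes to keep it quick; those choices are assumptions for illustration, not the settings used above.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import learning_curve
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)

# train_sizes comes back as absolute sample counts, not fractions.
train_sizes, train_scores, test_scores = learning_curve(
    SVC(gamma=0.001), X, y, cv=3, scoring='accuracy',
    train_sizes=[0.1, 0.5, 1.0]
)

train_mean = train_scores.mean(axis=1)  # average over the 3 folds
test_mean = test_scores.mean(axis=1)
gap = train_mean - test_mean            # a large gap suggests overfitting

for n, tr, te, g in zip(train_sizes, train_mean, test_mean, gap):
    print(n, round(tr, 3), round(te, 3), round(g, 3))
```

Rerunning the sketch with a different gamma and comparing the gaps gives a numeric counterpart to the two plots.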

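(3) validation_curve

A validation curve shows the model's score for different values of a parameter

The learning curve above shows the score for different training-set sizes

A validation curve makes it easy to pick a suitable parameter value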

from sklearn.model_selection import validation_curve

from sklearn.datasets import load_digits

from sklearn.svm import SVC

import matplotlib.pyplot as plt

import numpy as np

digits = load_digits()

X = digits.data

y = digits.target

# param_range = np.logspace(-6, -2.3, 5)

param_range = [0.0001, 0.0005, 0.001, 0.002, 0.004]

# param_name is the name of the parameter to tune; param_range is the list of values to try

train_loss, test_loss = validation_curve(

SVC(), X, y, param_name='gamma', param_range=param_range, cv=10,

scoring='neg_mean_squared_error')

train_loss_mean = -np.mean(train_loss, axis=1)

test_loss_mean = -np.mean(test_loss, axis=1)

plt.plot(param_range, train_loss_mean, 'ro-', label='Training')

plt.plot(param_range, test_loss_mean, 'go-', label='Cross-validation')

plt.xlabel('gamma')

plt.ylabel('loss')

plt.legend()

plt.show()

gamma around 0.001 gives the best results
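The value can also be picked from the cross-validation results rather than by eye. This is a minimal sketch under assumed settings (accuracy scoring and `cv=3` to keep the run short), so the selected gamma may not match the plot exactly; `best_gamma` is a hypothetical name.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.model_selection import validation_curve
from sklearn.svm import SVC

X, y = load_digits(return_X_y=True)
param_range = [0.0001, 0.0005, 0.001, 0.002, 0.004]

train_scores, test_scores = validation_curve(
    SVC(), X, y, param_name='gamma', param_range=param_range,
    cv=3, scoring='accuracy'
)

test_mean = test_scores.mean(axis=1)  # one mean score per gamma value
best_gamma = param_range[int(np.argmax(test_mean))]
print(best_gamma, test_mean.max())
```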

4. Saving models

from sklearn import svm

from sklearn import datasets

clf = svm.SVC()

iris = datasets.load_iris()

X, y = iris.data, iris.target

clf.fit(X, y)

method1: pickle

import pickle

# save

with open('data/clf.pickle', 'wb') as f:

    pickle.dump(clf, f)

# restore

with open('data/clf.pickle', 'rb') as f:

    clf2 = pickle.load(f)

print(clf2.predict(X[0:1]))

[0]

method2: joblib (according to the official docs, this is somewhat faster)

import joblib

# save

joblib.dump(clf, 'data/clf.pkl')

# restore

clf3 = joblib.load('data/clf.pkl')

print(clf3.predict(X[0:1]))

[0]
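A quick way to check that a saved model survived the round trip is to compare its predictions with the original's on the full dataset. The sketch below does this for both methods; it writes to a temporary directory (an assumption, so it does not depend on a `data/` folder existing), and the file names are hypothetical.

```python
import os
import pickle
import tempfile

import joblib
import numpy as np
from sklearn import datasets, svm

X, y = datasets.load_iris(return_X_y=True)
clf = svm.SVC().fit(X, y)

with tempfile.TemporaryDirectory() as tmp_dir:
    pickle_path = os.path.join(tmp_dir, 'clf.pickle')
    joblib_path = os.path.join(tmp_dir, 'clf.joblib')

    # pickle round trip
    with open(pickle_path, 'wb') as f:
        pickle.dump(clf, f)
    with open(pickle_path, 'rb') as f:
        clf_pickle = pickle.load(f)

    # joblib round trip
    joblib.dump(clf, joblib_path)
    clf_joblib = joblib.load(joblib_path)

same_pickle = np.array_equal(clf.predict(X), clf_pickle.predict(X))
same_joblib = np.array_equal(clf.predict(X), clf_joblib.predict(X))
print(same_pickle, same_joblib)
```

Both formats rely on pickle underneath, so a saved model should only be loaded from a trusted source and, ideally, with the same scikit-learn version that wrote it.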