1. Notes on my first Python crawler: downloading a video from a web page

This is the first time I have published notes on this platform. I want to improve myself by recording something I learn every day.

To be honest, I don't know much about web crawlers yet, so my understanding only scratches the surface.

Still, after three days of learning, I found a way to crawl videos from the web.

I am very excited that I now know how to download something from the internet to my hard disk. It is only a start, the first step, and I have to keep moving.

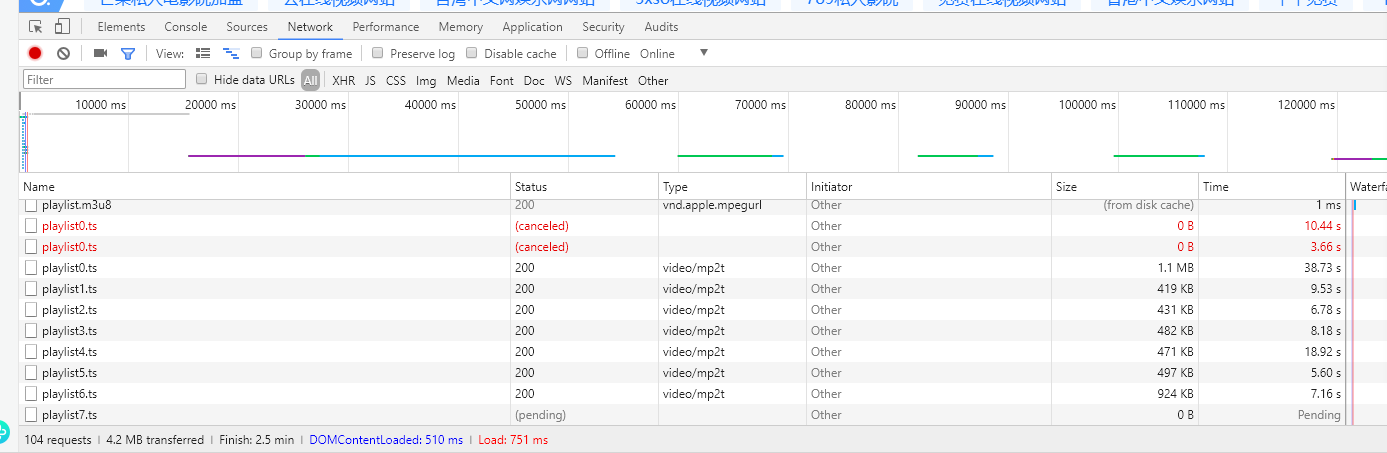

Step 1: Find a video on a web page and start playing it online. Then press F12 to open the browser's developer tools (the element inspector), as shown in the screenshots.

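In the Network tab, click one of the .ts files and you will see its request URL. That URL is the key: the video is delivered as many small numbered .ts fragments, and only the number in the file name changes from one fragment to the next.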
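Once you have one fragment URL, the others can usually be generated by changing its number. The sketch below assumes that the digits right before the trailing `.ts` are the fragment index and that the URL has no query string; `fragment_urls` is a hypothetical helper, and the fragment count (193 in the script of Step 2) still has to be worked out from the page yourself.

```python
import re


def fragment_urls(sample_url, count):
    """Hypothetical helper: build the URLs of fragments 0..count-1 from one sample URL.

    Assumes the digits right before the trailing ".ts" are the fragment index
    and that the URL has no query string.
    """
    match = re.search(r'(\d+)\.ts$', sample_url)
    if match is None:
        raise ValueError('no fragment number found in ' + sample_url)
    digits = match.group(1)
    prefix = sample_url[:match.start(1)]
    # keep zero padding if the site uses it (e.g. seg007.ts)
    width = len(digits) if digits.startswith('0') else 1
    return ['{}{}.ts'.format(prefix, str(i).zfill(width)) for i in range(count)]


urls = fragment_urls('https://vip.holyshitdo.com/2019/5/8/c2417/playlist5.ts', 193)
print(urls[0])   # → https://vip.holyshitdo.com/2019/5/8/c2417/playlist0.ts
print(urls[-1])  # → https://vip.holyshitdo.com/2019/5/8/c2417/playlist192.ts
```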

Step 2: Write the Python code as follows:

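```python
import os
from multiprocessing import Pool

import requests

SAVE_DIR = './mp4'  # folder that receives the downloaded .ts fragments


def demo(i):
    """Download the i-th .ts fragment and save it in binary format."""
    url = "https://vip.holyshitdo.com/2019/5/8/c2417/playlist%0d.ts" % i
    print(url)
    # simulate a browser so the server accepts the request
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36',
        'Referer': 'http://91.com',
        'Content-Type': 'multipart/form-data; session_language=cn_CN',
    }
    try:
        r = requests.get(url, headers=headers, timeout=30)
        r.raise_for_status()  # treat 403/404 pages as failures, not as video data
        # zero-padded names (000.ts, 001.ts, ...) keep the fragments in
        # playback order when they are merged with *.ts in Step 4
        path = os.path.join(SAVE_DIR, '{:03d}.ts'.format(i))
        with open(path, 'wb') as f:
            f.write(r.content)
    except (requests.RequestException, OSError) as e:
        print('failed:', url, e)


if __name__ == '__main__':  # program entry
    os.makedirs(SAVE_DIR, exist_ok=True)  # make sure the save folder exists
    pool = Pool(10)  # create a pool of 10 worker processes
    for i in range(193):  # fragments playlist0.ts ... playlist192.ts
        pool.apply_async(demo, (i,))  # download the fragments in parallel
    pool.close()
    pool.join()
```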

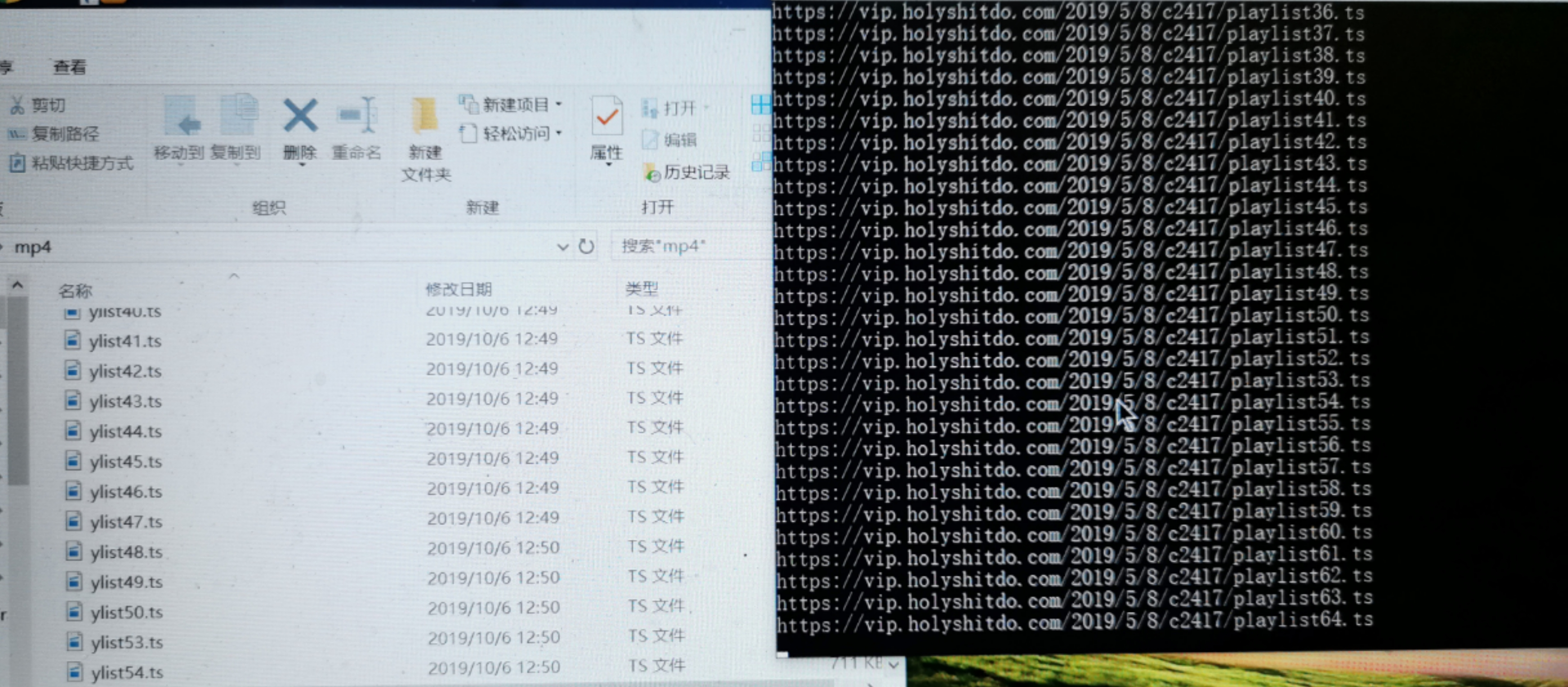
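Why the saved file names matter: Step 4 merges the fragments with a `*.ts` wildcard, which (on NTFS, at least) picks files up in name order, so the names must sort in playback order. This quick check, assuming fragment i is served as `playlist{i}.ts` as in the script above, compares naming each file after the last 10 characters of its URL with naming it after a zero-padded index.

```python
URL = "https://vip.holyshitdo.com/2019/5/8/c2417/playlist%0d.ts"
COUNT = 193  # fragments 0..192, as in the download loop

sliced = [(URL % i)[-10:] for i in range(COUNT)]        # name = last 10 characters of the URL
padded = ['{:03d}.ts'.format(i) for i in range(COUNT)]  # name = zero-padded index

print(sorted(sliced)[9:12])      # → ['aylist9.ts', 'list100.ts', 'list101.ts']
print(sorted(padded) == padded)  # → True
```

With the sliced names, fragment 9 is followed by fragment 100 in name order, so a merge would play fragments 100 to 192 before 10 to 99. Zero-padded names avoid this.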

Step 3: Run the code. The fragments are downloaded in parallel by 10 worker processes and saved in the ./mp4 folder.
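Because the download function catches errors and moves on, some fragments can go missing without notice, and the merged video would then skip. A minimal check to run before merging, assuming the fragments are saved as `000.ts`, `001.ts`, … (the `name_fmt` parameter is hypothetical; set it to whatever names your script actually writes):

```python
import os


def missing_fragments(save_dir, count, name_fmt='{:03d}.ts'):
    """Return the indices of fragments that are absent or empty in save_dir."""
    missing = []
    for i in range(count):
        path = os.path.join(save_dir, name_fmt.format(i))
        # a zero-byte file usually means the download was interrupted
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            missing.append(i)
    return missing


if __name__ == '__main__':
    print(missing_fragments('./mp4', 193))  # indices to download again, [] if complete
```

Any index it prints can be passed to `demo(i)` again before moving on to Step 4.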

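Step 4: Last but not least, merge the .ts fragments into an MP4 file.

Open a terminal in the directory where the fragments were saved and run `copy /b *.ts newfile.mp4`. This is a Windows Command Prompt command: `/b` copies in binary mode, and with a wildcard source it joins all matching files into the single destination file.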
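`copy /b` exists only on Windows. A minimal cross-platform sketch of the same byte-for-byte concatenation in Python, which also sorts the file names explicitly instead of relying on the order the wildcard returns them. `merge_ts` is a hypothetical helper and assumes the fragment names sort in playback order (for example `000.ts`, `001.ts`, …).

```python
import glob
import os
import shutil


def merge_ts(save_dir, output_name='newfile.mp4'):
    """Concatenate every .ts file in save_dir, in sorted name order, into one file."""
    parts = sorted(glob.glob(os.path.join(save_dir, '*.ts')))
    output_path = os.path.join(save_dir, output_name)
    with open(output_path, 'wb') as out:
        for part in parts:
            with open(part, 'rb') as src:
                shutil.copyfileobj(src, out)  # append the raw bytes, like copy /b
    return output_path
```

Calling `merge_ts('./mp4')` writes `./mp4/newfile.mp4`. Like `copy /b`, this only joins the raw MPEG-TS fragments, so the result is really a transport stream with an .mp4 extension; most players open it anyway, and turning it into a true MP4 container would need a remuxing tool such as ffmpeg.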

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

THAT IS ALL FOR NOW, TO BE CONTINUED~( ̄▽ ̄~)~