Implementing a website resource discovery tool with Python 3

Usage example: python xxx.py -u http://www.xxxx.net -f 字典.txt -n 10

-u: the target website to scan;

-f: the wordlist (dictionary) file of paths to probe;

-n: the number of scanning threads.
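A minimal sketch of parsing these three options with the standard library's `getopt`, returning them as a tuple instead of storing them in globals. The function name `parse_scan_args` is hypothetical, and treating all three options as required (with `-n` a positive integer) is an assumption:

```python
import getopt


def parse_scan_args(argv):
    """Parse -u <url> -f <wordlist> -n <threads> into (url, wordlist, threads).

    Raises ValueError if an option is missing or -n is not a positive integer.
    """
    try:
        options, _ = getopt.getopt(argv, "u:f:n:")
    except getopt.GetoptError as e:
        raise ValueError(f"invalid arguments: {e}") from e
    opts = dict(options)  # e.g. {'-u': 'http://...', '-f': 'dict.txt', '-n': '10'}
    missing = [flag for flag in ("-u", "-f", "-n") if flag not in opts]
    if missing:
        raise ValueError(f"missing options: {', '.join(missing)}")
    threads = int(opts["-n"])  # int() itself raises ValueError on non-numbers
    if threads < 1:
        raise ValueError("-n must be a positive integer")
    return opts["-u"], opts["-f"], threads


if __name__ == "__main__":
    print(parse_scan_args(["-u", "http://www.xxxx.net", "-f", "dict.txt", "-n", "10"]))
    # → ('http://www.xxxx.net', 'dict.txt', 10)
```

Raising an exception rather than calling `sys.exit()` keeps the function testable; the caller decides how to report the error.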

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# by 默不知然 2018-03-15
import getopt
import sys
import threading

import requests

real_url_list = []            # URLs that answered HTTP 200
list_lock = threading.Lock()  # guards real_url_list, which several threads append to


# Thread class: send one request to the target site
class ScanThread(threading.Thread):
    def __init__(self, url, slots):
        # daemon=True so Ctrl+C is not blocked by still-running requests
        super().__init__(daemon=True)
        self.url = url
        self.slots = slots

    def run(self):
        try:
            r = requests.get(self.url, timeout=10)
            print(self.url, '------->>', r.status_code)
            if r.status_code == 200:
                with list_lock:
                    real_url_list.append(self.url)
        except requests.RequestException as e:
            print(self.url, e)
        finally:
            # Free the slot whether the request succeeded or failed
            self.slots.release()


# Read the wordlist, build URLs and start the scan threads
def url_makeup(dicts, url, threshold):
    slots = threading.BoundedSemaphore(threshold)  # at most `threshold` threads at once
    threads = []
    with open(dicts, 'r', encoding='utf-8') as f:
        paths = [line.strip() for line in f if line.strip()]
    try:
        for path in paths:
            slots.acquire()  # blocks until a running thread finishes
            thread = ScanThread(url + path, slots)
            thread.start()
            threads.append(thread)
        for thread in threads:
            thread.join()  # wait for every request before reporting
    except KeyboardInterrupt:
        print('Stopped by user; directory scan ended.')
        sys.exit(1)


# Parse command-line arguments
def get_args():
    try:
        options, _ = getopt.getopt(sys.argv[1:], "u:f:n:")
    except getopt.GetoptError:
        print("Invalid arguments")
        sys.exit(1)
    opts = dict(options)
    if not all(flag in opts for flag in ('-u', '-f', '-n')):
        print('Usage: python xxx.py -u <url> -f <wordlist> -n <threads>')
        sys.exit(1)
    return opts['-u'], opts['-f'], int(opts['-n'])


# Main: run the scanner
if __name__ == '__main__':
    url, dicts, threshold = get_args()
    url_makeup(dicts, url, threshold)
    print('Directories found on the target site:\n', real_url_list)
```
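Instead of managing `threading.Thread` objects directly, the standard library's `concurrent.futures.ThreadPoolExecutor` can cap concurrency at `-n` workers. This is a minimal alternative sketch, not the author's code: `build_urls` and `scan` are hypothetical names, the slash normalization in `build_urls` is an assumption (the original simply concatenates the base URL and each wordlist line), and the HTTP call is passed in as a `fetch` function assumed to return a status code, so the logic can be tried without touching a real site:

```python
from concurrent.futures import ThreadPoolExecutor


def build_urls(base_url, lines):
    """Join each non-empty wordlist entry onto base_url with exactly one '/'."""
    base = base_url.rstrip("/")
    return [base + "/" + line.strip().lstrip("/") for line in lines if line.strip()]


def scan(base_url, lines, workers, fetch):
    """Request every candidate URL using at most `workers` threads.

    `fetch(url)` must return an HTTP status code; any exception counts as a miss.
    Returns the URLs that answered 200, in wordlist order.
    """
    urls = build_urls(base_url, lines)

    def probe(url):
        try:
            return url, fetch(url)
        except Exception as e:  # connection errors, timeouts, ...
            return url, e

    found = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() runs up to `workers` probes at once but yields results in input order
        for url, result in pool.map(probe, urls):
            print(url, "------->>", result)
            if result == 200:
                found.append(url)
    return found
```

With Requests, a call might look like `scan("http://www.xxxx.net", open("dict.txt"), 10, lambda u: requests.get(u, timeout=10).status_code)`. The executor's `with` block waits for all submitted work before returning, which replaces both the manual thread counter and the final `time.sleep()`.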

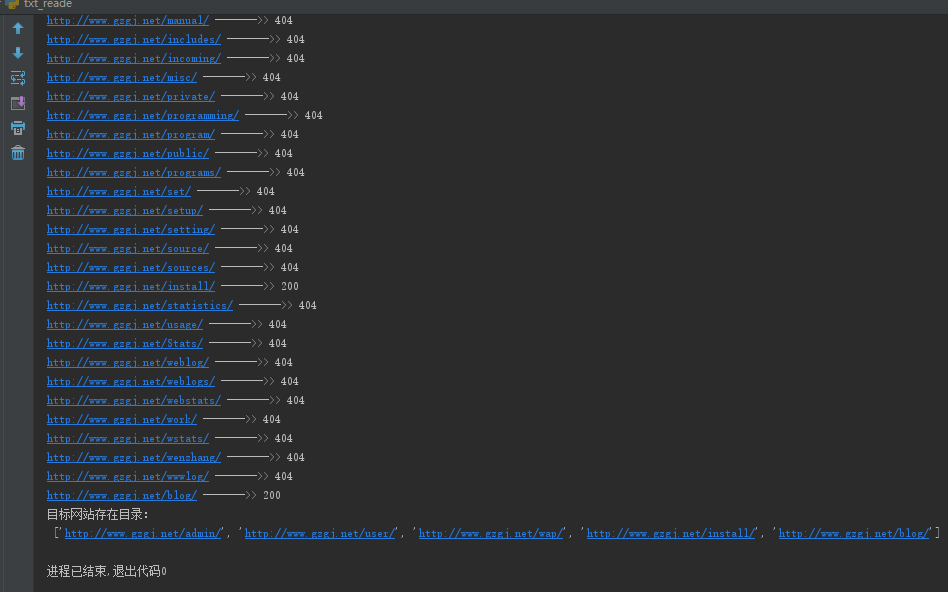

Example: a directory scan of http://www.gzgi.net.

References:

州的先生 blog: http://zmister.com/archives/180.html

浙公网安备 33010602011771号