Cloud Native Study Notes - DAY5

K8s Hands-On Cases

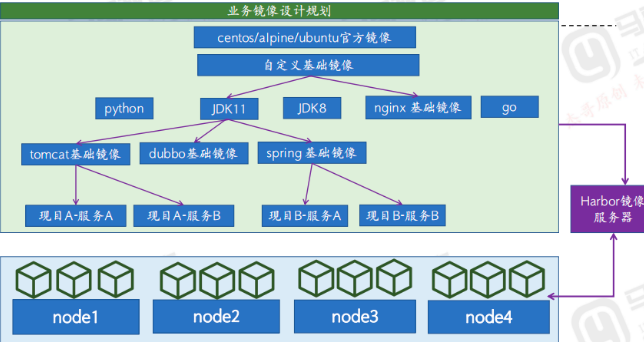

1 Application Containerization Case 1: Service Planning and Image Builds

1.1 Building the CentOS base image

1.1.1 Prepare the files needed to build the image

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009# cat Dockerfile

FROM centos:7.9.2009

LABEL maintainer="jack <465647818@qq.com>"

#MAINTAINER jack <465647818@qq.com> #The MAINTAINER instruction is deprecated; use LABEL instead

ADD filebeat-7.12.1-x86_64.rpm /tmp

#Install some basic packages on top of the official CentOS image

RUN yum -y install epel-release && yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop traceroute tcpdump iftop dos2unix telnet bind-utils libtool pcre2 pcre2-devel && rm -rf /tmp/filebeat-7.12.1-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2088

#RUN yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && rm -rf /etc/localtime /tmp/filebeat-7.12.1-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2088

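#Users are usually added when building the application image; if one is needed in the base system image, the following instructions can be used

#RUN groupadd -g 2088 nginx && useradd -u 2088 -g 2088 nginx

#RUN useradd -u 2088 nginx #Roughly equivalent to the previous command: useradd also creates a matching nginx group, with an automatically assigned GID

#Change the image's default time zone; the default is UTC

#RUN ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime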

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009# cat build-command.sh

#!/bin/bash

#docker build -t harbor.magedu.net/baseimages/magedu-centos-base:7.9.2009 .

#docker push harbor.magedu.net/baseimages/magedu-centos-base:7.9.2009

TAG=`date +%Y-%m-%d_%H-%M-%S`

/usr/local/bin/nerdctl build -t harbor.idockerinaction.info/baseimages/centos-base:7.9.2009_${TAG} .

/usr/local/bin/nerdctl tag harbor.idockerinaction.info/baseimages/centos-base:7.9.2009_${TAG} harbor.idockerinaction.info/baseimages/centos-base:7.9.2009

/usr/local/bin/nerdctl push harbor.idockerinaction.info/baseimages/centos-base:7.9.2009
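The script above runs its three commands unconditionally, so a failed build is still followed by a tag and push attempt, and only the floating `7.9.2009` tag is pushed. A minimal sketch of a stricter variant, assuming the timestamped tag should also reach the registry. The `NERDCTL` and `TAG` overrides are additions for illustration (not in the original script), and by default the sketch only prints the commands it would run:

```shell
#!/bin/bash
# Sketch of a stricter build-command.sh; stops at the first failing command.
set -euo pipefail

# Assumption: NERDCTL defaults to a dry run that prints each command.
# Set NERDCTL=/usr/local/bin/nerdctl to really build and push.
NERDCTL="${NERDCTL:-echo nerdctl}"
REPO="harbor.idockerinaction.info/baseimages/centos-base"
VERSION="7.9.2009"
# Timestamp suffix as in the original script; overridable for reproducible runs.
TAG="${TAG:-$(date +%Y-%m-%d_%H-%M-%S)}"

build_and_push() {
    $NERDCTL build -t "${REPO}:${VERSION}_${TAG}" .
    $NERDCTL tag "${REPO}:${VERSION}_${TAG}" "${REPO}:${VERSION}"
    # Push both tags so the timestamped build can be pulled again later.
    $NERDCTL push "${REPO}:${VERSION}_${TAG}"
    $NERDCTL push "${REPO}:${VERSION}"
}

build_and_push
```

Keeping the timestamped tag in the registry makes it possible to go back to a known build after the floating tag has moved on.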

1.1.2 Build and push the image

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009# bash build-command.sh

[+] Building 11.4s (8/8) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.36kB 0.0s

=> [internal] load metadata for docker.io/library/centos:7.9.2009 1.6s

=> [internal] load build context 0.0s

=> => transferring context: 50B 0.0s

=> [1/3] FROM docker.io/library/centos:7.9.2009@sha256:be65f488b7764ad3638f236b7b515b3678369a5124c47b8d32916d6487418ea4 0.0s

=> => resolve docker.io/library/centos:7.9.2009@sha256:be65f488b7764ad3638f236b7b515b3678369a5124c47b8d32916d6487418ea4 0.0s

=> CACHED [2/3] ADD filebeat-7.12.1-x86_64.rpm /tmp 0.0s

=> CACHED [3/3] RUN yum -y install epel-release && yum install -y /tmp/filebeat-7.12.1-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake p 0.0s

=> exporting to docker image format 9.6s

=> => exporting layers 0.0s

=> => exporting manifest sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff1740e8a 0.0s

=> => exporting config sha256:f0e8d994b4585a4c7844c08949b69358d2b95589e33dc8299bbfb5525af4100e 0.0s

=> => sending tarball 9.6s

unpacking harbor.idockerinaction.info/baseimages/centos-base:7.9.2009_2023-06-03_07-58-41 (sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff1740e8a)...

Loaded image: harbor.idockerinaction.info/baseimages/centos-base:7.9.2009_2023-06-03_07-58-41

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff1740e8a)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff1740e8a: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:f0e8d994b4585a4c7844c08949b69358d2b95589e33dc8299bbfb5525af4100e: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 3.4 s total: 3.7 Ki (1.1 KiB/s)

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009#

1.1.3 Start a container from the image to test it

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009# nerdctl run --name=centostest -it --rm harbor.idockerinaction.info/baseimages/centos-base:7.9.2009 bash

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

harbor.idockerinaction.info/baseimages/centos-base:7.9.2009: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff1740e8a: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:f0e8d994b4585a4c7844c08949b69358d2b95589e33dc8299bbfb5525af4100e: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:8cae81f05235f7fc9b37bf3c1155973e87d5958c291dbfb5dd484dc4c4d5a4db: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:c694f3a2d0b825036e6bb1305def20655cbb3c8051d7afea5cfc72a5ef65a5ff: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 14.6s total: 218.2 (14.9 MiB/s)

[root@da5ad257bb29 /]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.4.0.79 netmask 255.255.255.0 broadcast 10.4.0.255

inet6 fe80::a824:ffff:fe1a:59ef prefixlen 64 scopeid 0x20<link>

ether aa:24:ff:1a:59:ef txqueuelen 0 (Ethernet)

RX packets 12 bytes 1096 (1.0 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6 bytes 472 (472.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
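The interactive session above only shows that the container starts and gets a network interface. A sketch of a non-interactive check for two things the Dockerfile sets up, the `nginx` user with UID 2088 and the Asia/Shanghai time zone link. `verify_base` is a hypothetical helper that reads the two values from standard input, so the checking logic can be exercised without a container runtime:

```shell
#!/bin/bash
# verify_base: reads two lines (UID of nginx, target of /etc/localtime)
# and succeeds only if both match what the Dockerfile configures.
verify_base() {
    local uid tz
    read -r uid
    read -r tz
    [ "$uid" = "2088" ] || { echo "unexpected nginx uid: $uid" >&2; return 1; }
    case "$tz" in
        */Asia/Shanghai) ;;
        *) echo "unexpected time zone link: $tz" >&2; return 1 ;;
    esac
    echo "base image OK"
}

# Intended use against the image built above (not run here):
#   nerdctl run --rm harbor.idockerinaction.info/baseimages/centos-base:7.9.2009 \
#       sh -c 'id -u nginx; readlink /etc/localtime' | verify_base
```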

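1.2 Building the JDK base image

1.2.1 Prepare the files needed to build the image

#The profile file needs the following settings appended to its existing content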

root@image-build:/opt/dockerfile/web/jdk/centos-7.9.2009-jdk-8u212# tail -5 profile

export JAVA_HOME=/usr/local/jdk

export TOMCAT_HOME=/apps/tomcat

export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$TOMCAT_HOME/bin:$PATH

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tools.jar

#Prepare the Dockerfile

root@image-build:/opt/dockerfile/web/jdk/centos-7.9.2009-jdk-8u212# cat Dockerfile

#Before running the build, centos-base:7.9.2009 must already be pushed to harbor; otherwise the build cannot proceed and fails with an error

FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009

LABEL maintainer="jack <465647818@qq.com>"

#Add the JDK tarball (a binary distribution; ADD unpacks it automatically) to the image and create a symlink

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

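#Replace the profile file to add the JAVA_HOME-related environment variables

ADD profile /etc/profile

#Inject environment variables into the image; add whatever variables your setup needs

#ENV name idockerinaction

#Regular users are usually added when building the application image; if a regular user is needed for testing in the base system image, the following instruction can be added

#RUN groupadd -g 2023 www && useradd -u 2023 -g 2023 www

#Without the ENV instructions below, root cannot run java in a container started from this image, because the non-login shell started by "nerdctl run ... bash" does not read /etc/profile. Switching to a regular user with a login shell, e.g. su - www, does read /etc/profile, so java works there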

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

#Change the image time zone; this step can be skipped if the base image has already done it

#RUN rm -f /etc/localtime && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
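The Dockerfile comment about root not finding `java` without the `ENV` lines comes down to which shells read `/etc/profile`. A small illustration of that difference, assuming bash is available; a temporary file stands in for `/etc/profile`, and the two shells are simulated rather than started with `su` or a container:

```shell
#!/bin/bash
# Illustration only: a temporary file stands in for /etc/profile.
profile=$(mktemp)
echo 'export JAVA_HOME=/usr/local/jdk' > "$profile"

# Non-login shell (like "nerdctl run ... bash"): the profile is never read.
non_login=$(unset JAVA_HOME; bash --noprofile --norc -c 'echo "${JAVA_HOME:-unset}"')

# Login shell (like "su - www"): the profile is sourced before the command runs.
login=$(unset JAVA_HOME; bash --noprofile --norc -c '. "$1"; echo "${JAVA_HOME:-unset}"' _ "$profile")

echo "non-login shell: JAVA_HOME=$non_login"
echo "login shell:     JAVA_HOME=$login"
rm -f "$profile"
```

`ENV` values are part of the image configuration and are set for every process in the container, which is why they work regardless of how the shell is started.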

#Prepare build-command.sh

root@image-build:/opt/dockerfile/web/jdk/centos-7.9.2009-jdk-8u212# cat build-command.sh

#!/bin/bash

TAG=`date +%Y-%m-%d_%H-%M-%S`

nerdctl build -t harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base-${TAG} .

nerdctl image tag harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base-${TAG} harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base

nerdctl push harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base

1.2.2 Build and push the image

root@image-build:/opt/dockerfile/web/jdk/centos-7.9.2009-jdk-8u212# bash build-command.sh

[+] Building 44.1s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.26kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-base:7.9.2009 0.1s

=> [auth] baseimages/centos-base:pull token for harbor.idockerinaction.info 0.0s

=> [1/4] FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff17 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36fff17 0.0s

=> [internal] load build context 3.6s

=> => transferring context: 195.05MB 3.5s

=> [2/4] ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/ 5.6s

=> [3/4] RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk 0.1s

=> [4/4] ADD profile /etc/profile 0.0s

=> exporting to docker image format 34.4s

=> => exporting layers 18.9s

=> => exporting manifest sha256:fa3ddbea5ddc1d8a056c32814e10e8576e82f317fc6908b1c8e9c5a202098569 0.0s

=> => exporting config sha256:a55ac556fcae3a841f2b07645ea0c6165c9c4e55b55d7121ea93e5dddc318a87 0.0s

=> => sending tarball 15.5s

unpacking harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base-2023-06-03_09-33-29 (sha256:fa3ddbea5ddc1d8a056c32814e10e8576e82f317fc6908b1c8e9c5a202098569)...

Loaded image: harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base-2023-06-03_09-33-29

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:fa3ddbea5ddc1d8a056c32814e10e8576e82f317fc6908b1c8e9c5a202098569)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:fa3ddbea5ddc1d8a056c32814e10e8576e82f317fc6908b1c8e9c5a202098569: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:a55ac556fcae3a841f2b07645ea0c6165c9c4e55b55d7121ea93e5dddc318a87: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 2.9 s total: 5.9 Ki (2.0 KiB/s)

root@image-build:/opt/dockerfile/web/jdk/centos-7.9.2009-jdk-8u212#

1.2.3 Start a container from the image to test it

root@image-build:/opt/dockerfile/system/centos/centos-7.9.2009# nerdctl run --name=centostest -it --rm harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base bash

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base: resolved |++++++++++++++++++++++++++++++++++++++|

manifest-sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a0c56ee564dc477ddb87ba0e3479ab16: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:10f5012947ae761de2822e1054d47ee538ed7283882ce5c95a5fb3233dd02d88: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:dc88f4166dd9fb9eb4f60999b48a17ec53426589881a5043dee405431b91ae79: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:7c5e31a494dac30e1ea9420b2cce96e7749135d0ab8e4fa8d0bcfc0b7de2b80c: done |++++++++++++++++++++++++++++++++++++++|

layer-sha256:fe83e24520bce0b187777d74bedd578080eb80d40dfedb0eeaae9d62e50feb9a: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 11.0s total: 187.3 (17.0 MiB/s)

[root@f86d2612f782 /]# java -version

java version "1.8.0_212"

Java(TM) SE Runtime Environment (build 1.8.0_212-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)

[root@f86d2612f782 /]# su - nginx

[nginx@f86d2612f782 ~]$ java -version

java version "1.8.0_212"

Java(TM) SE Runtime Environment (build 1.8.0_212-b10)

Java HotSpot(TM) 64-Bit Server VM (build 25.212-b10, mixed mode)

1.3 Building the Tomcat base image

1.3.1 Prepare the files needed to build the image

root@image-build:/opt/dockerfile/web/tomcat/centos-7.9.2009-tomcat-8.5.43# cat Dockerfile

FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base

LABEL maintainer="jack <465647818@qq.com>"

RUN mkdir /data/tomcat/{webapps,logs} -pv

ADD apache-tomcat-8.5.43.tar.gz /apps

RUN ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat

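#To run tomcat as a regular user, add the following instructions; adjust the user name www as needed. This is better done in the application image

#RUN groupadd -g 2023 www && useradd -u 2023 -g 2023 www

#RUN chown -R www:www /apps/apache-tomcat-8.5.43

RUN useradd tomcat -u 2050 && chown -R tomcat:tomcat /{apps,data}

#K8s volumes are normally defined in the resource YAML files; to declare a volume at image build time instead, add the following instruction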

#VOLUME /data
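The next step runs `build-command.sh`, which is not listed for this image. A sketch of what it presumably looks like, modeled on the JDK script in 1.2.1 and using the image name that appears in the build output. This is a reconstruction, not the original file; the `NERDCTL` and `TAG` overrides are assumptions added so that the sketch prints its commands by default instead of running them:

```shell
#!/bin/bash
# Assumed reconstruction; the original script for this image is not shown.
NERDCTL="${NERDCTL:-echo nerdctl}"   # dry run by default; set NERDCTL=nerdctl to execute
TAG="${TAG:-$(date +%Y-%m-%d_%H-%M-%S)}"
IMAGE="harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43"

tomcat_base_build() {
    # Build with a timestamped tag, then move the floating "base" tag and push it.
    $NERDCTL build -t "${IMAGE}:base-${TAG}" . &&
    $NERDCTL tag "${IMAGE}:base-${TAG}" "${IMAGE}:base" &&
    $NERDCTL push "${IMAGE}:base"
}

tomcat_base_build
```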

1.3.2 Build and push the image

root@image-build:/opt/dockerfile/web/tomcat/centos-7.9.2009-tomcat-8.5.43# bash build-command.sh

[+] Building 19.0s (11/11) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 738B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base 0.1s

=> [auth] baseimages/centos-7.9.2009-jdk-8u212:pull token for harbor.idockerinaction.info 0.0s

=> [1/5] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a0c56ee564dc477dd 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a0c56ee564dc477dd 0.0s

=> [internal] load build context 0.3s

=> => transferring context: 9.72MB 0.3s

=> [2/5] RUN mkdir /data/tomcat/{webapps,logs} -pv 0.1s

=> [3/5] ADD apache-tomcat-8.5.43.tar.gz /apps 0.3s

=> [4/5] RUN ln -sv /apps/apache-tomcat-8.5.43 /apps/tomcat 0.1s

=> [5/5] RUN useradd tomcat -u 2050 && chown -R tomcat:tomcat /{apps,data} 0.7s

=> exporting to docker image format 17.1s

=> => exporting layers 0.9s

=> => exporting manifest sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a5467a64be773af3b56fb65 0.0s

=> => exporting config sha256:e5c529188b0a27e4167ee25d2e4875893325858e2101a99e38006c89bc9fe2f3 0.0s

=> => sending tarball 16.2s

unpacking harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base-2023-06-03_10-27-27 (sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a5467a64be773af3b56fb65)...

Loaded image: harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base-2023-06-03_10-27-27

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a5467a64be773af3b56fb65)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a5467a64be773af3b56fb65: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:e5c529188b0a27e4167ee25d2e4875893325858e2101a99e38006c89bc9fe2f3: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.5 s total: 7.8 Ki (5.2 KiB/s)

root@image-build:/opt/dockerfile/web/tomcat/centos-7.9.2009-tomcat-8.5.43#

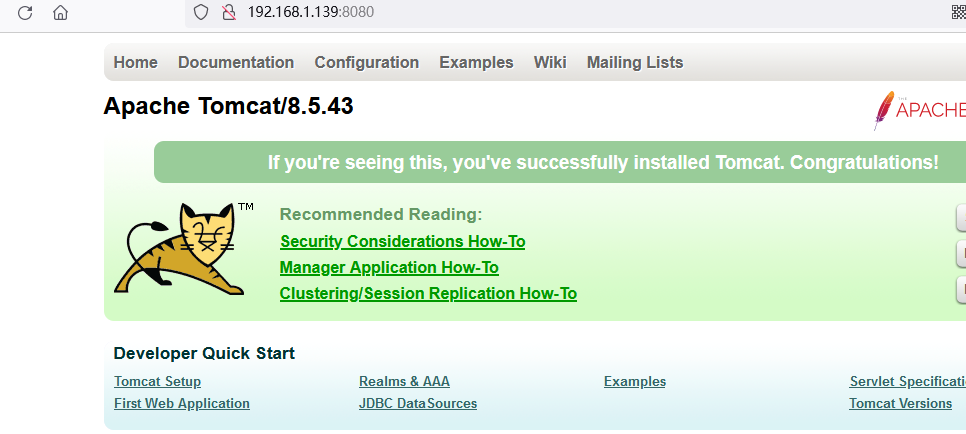

1.3.3 Start a container from the image to test it

root@image-build:/opt/dockerfile/web/tomcat/centos-7.9.2009-tomcat-8.5.43# nerdctl run -it --rm --name=tomcattest -p 8080:8080 harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base bash

[root@d98c2218b47a /]# cd /apps/tomcat/

[root@d98c2218b47a tomcat]# bin/catalina.sh run

Verify that the Tomcat page can be reached on port 8080.
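Tomcat takes a few seconds to start, so an immediate request can fail even when the image is fine. A sketch of a retrying check from the build host. `wait_for_http` is a hypothetical helper, the URL in the usage comment assumes the `-p 8080:8080` mapping used above, and the probe command is overridable through `PROBE` (an assumption added for testing) and defaults to `curl`:

```shell
#!/bin/bash
# wait_for_http URL [TRIES] [DELAY_SECONDS]
# Retries the probe until it succeeds or the attempts run out.
wait_for_http() {
    local url=$1 tries=${2:-10} delay=${3:-2} i
    for ((i = 1; i <= tries; i++)); do
        # PROBE defaults to curl: -f fails on HTTP errors, -s is quiet,
        # -o /dev/null discards the body.
        if ${PROBE:-curl -fs -o /dev/null} "$url"; then
            echo "reachable after $i attempt(s): $url"
            return 0
        fi
        sleep "$delay"
    done
    echo "not reachable after $tries attempt(s): $url" >&2
    return 1
}

# Intended use while catalina.sh is running in the test container:
#   wait_for_http http://127.0.0.1:8080/ 15 2
```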

1.4 Building the Nginx base image

1.4.1 Prepare the files needed to build the image

#Dockerfile

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0# cat Dockerfile

#Nginx Base Image

FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009

LABEL maintainer='jack <465647818@qq.com>'

#ADD nginx-1.22.0.tar.gz /usr/local/src/

#RUN cd /usr/local/src/nginx-1.22.0 && ./configure && make && make install && ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx &&rm -rf /usr/local/src/nginx-1.22.0.tar.gz

ADD nginx-1.22.0.tar.gz /opt

RUN cd /opt/nginx-1.22.0 && ./configure --prefix=/apps/nginx --user=nginx --group=nginx --with-http_ssl_module --with-http_v2_module --with-http_realip_module --with-http_flv_module --with-http_stub_status_module --with-http_gzip_static_module --with-pcre --with-stream --with-stream_ssl_module --http-client-body-temp-path=/var/tmp/nginx/client/ --http-proxy-temp-path=/var/tmp/nginx/proxy/ --http-fastcgi-temp-path=/var/tmp/nginx/fcgi/ --http-uwsgi-temp-path=/var/tmp/nginx/uwsgi --http-scgi-temp-path=/var/tmp/nginx/scgi --error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log --pid-path=/var/run/nginx/nginx.pid --lock-path=/var/lock/nginx.lock && make && make install && cd /opt && rm -rf /opt/nginx-1.22.0* && mkdir -pv /var/tmp/nginx && ln -s /apps/nginx/sbin/nginx /usr/sbin/nginx

#build-command.sh

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0# cat build-command.sh

#!/bin/bash

TAG=`date +%Y-%m-%d_%H-%M-%S`

nerdctl build -t harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base-${TAG} .

nerdctl tag harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base-${TAG} harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base

nerdctl push harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base

1.4.2 Build and push the image

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0# bash build-command.sh

[+] Building 59.9s (9/9) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.23kB 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-base:7.9.2009 0.1s

=> [auth] baseimages/centos-base:pull token for harbor.idockerinaction.info 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 1.07MB 0.0s

=> CACHED [1/3] FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9e7b7a36ff 0.0s

=> [2/3] ADD nginx-1.22.0.tar.gz /opt 0.1s

=> [3/3] RUN cd /opt/nginx-1.22.0 && ./configure --prefix=/apps/nginx --user=nginx --group=nginx --with-http_ssl_module --with-http_v2_m 48.8s

=> exporting to docker image format 10.7s

=> => exporting layers 0.6s

=> => exporting manifest sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5b191eb23415b03b9412a2 0.0s

=> => exporting config sha256:f59ac413c3db415ede52789bdcffa835603e807039fd9b92a3031cd265cefc54 0.0s

=> => sending tarball 10.1s

unpacking harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base-2023-06-03_14-52-29 (sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5b191eb23415b03b9412a2)...

Loaded image: harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base-2023-06-03_14-52-29

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5b191eb23415b03b9412a2)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5b191eb23415b03b9412a2: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:f59ac413c3db415ede52789bdcffa835603e807039fd9b92a3031cd265cefc54: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.3 s total: 5.5 Ki (4.2 KiB/s)

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0#

1.4.3 Start a container from the image to test it

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0# nerdctl run --name=nginxtest -it --rm -p 80:80 harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base bash

[root@95954e5e0589 /]# nginx

[root@95954e5e0589 /]# netstat -an|grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

#Verify that nginx is reachable from the host

root@image-build:~# curl -I 192.168.1.139

HTTP/1.1 200 OK

Server: nginx/1.22.0

Date: Sat, 03 Jun 2023 07:02:30 GMT

Content-Type: text/html

Content-Length: 615

Last-Modified: Sat, 03 Jun 2023 06:53:18 GMT

Connection: keep-alive

ETag: "647ae35e-267"

Accept-Ranges: bytes

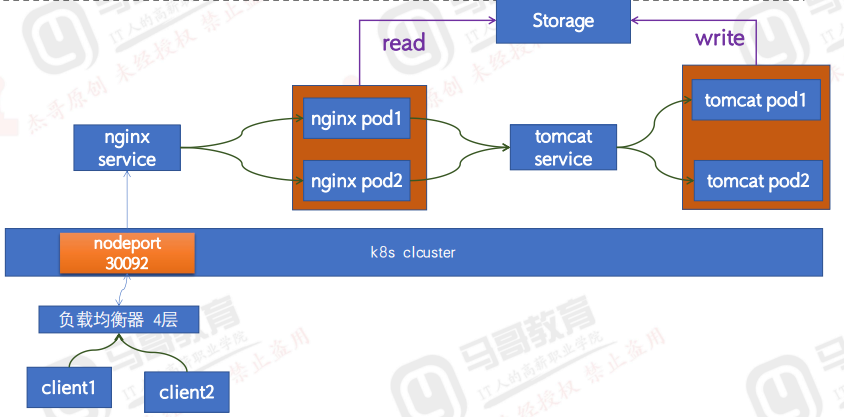

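2 Application Containerization Case 2: Separating Dynamic and Static Content with Nginx + Tomcat + NFS

2.1 Building the Tomcat application image

2.1.1 Prepare the files needed to build the image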

#build-command.sh

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-tomcat-8.5.43-app1# cat build-command.sh

#!/bin/bash

#TAG=`date +%Y-%m-%d_%H-%M-%S`

TAG=$1

#nerdctl build -t harbor.idockerinaction.info/p1/centos-tomcat-p1:${TAG} .

#nerdctl image tag harbor.idockerinaction.info/p1/centos-tomcat-p1:${TAG} harbor.idockerinaction.info/p1/centos-tomcat-p1:app1

nerdctl build -t harbor.idockerinaction.info/p1/centos-7.9.2009-tomcat-8.5.43-app1:${TAG} .

nerdctl push harbor.idockerinaction.info/p1/centos-7.9.2009-tomcat-8.5.43-app1:${TAG}
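Because this script takes the tag from `$1`, running it without an argument would hand nerdctl an image reference ending in a bare `:`. A sketch of an up-front guard; `require_tag` and its character check are illustrative additions, not part of the original script:

```shell
#!/bin/bash
# require_tag TAG: prints the tag if it is usable, otherwise explains usage and fails.
require_tag() {
    local tag=${1:-}
    if [ -z "$tag" ]; then
        echo "usage: bash build-command.sh <tag>   (for example: v1)" >&2
        return 1
    fi
    # Conservative check: letters, digits, '_', '.', '-' only,
    # and the tag must not start with '.' or '-'.
    case "$tag" in
        [.-]*|*[!A-Za-z0-9_.-]*) echo "invalid tag: $tag" >&2; return 1 ;;
    esac
    printf '%s\n' "$tag"
}

# In build-command.sh this would replace the plain TAG=$1 line:
#   TAG=$(require_tag "${1:-}") || exit 1
```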

2.1.2 Build and push the image

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-tomcat-8.5.43-app1# bash build-command.sh v1

[+] Building 17.0s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.20kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base 0.0s

=> [1/5] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base@sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-7.9.2009-tomcat-8.5.43:base@sha256:52e2b8e83afbb481622fe3b3f41d41a499fd02f49a 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 95B 0.0s

=> CACHED [2/5] ADD server.xml /apps/tomcat/conf/ 0.0s

=> CACHED [3/5] ADD run_tomcat.sh /apps/tomcat/bin/ 0.0s

=> CACHED [4/5] ADD app1.tar.gz /data/tomcat/webapps/app1/ 0.0s

=> CACHED [5/5] RUN chown -R nginx:nginx /data/ /apps/ 0.0s

=> exporting to docker image format 16.6s

=> => exporting layers 0.0s

=> => exporting manifest sha256:4b67b2954b74656002d28b9057613a19589d1984d16ba633666f72fb331e9e87 0.0s

=> => exporting config sha256:bab422fb05dae594a4b1a37b0fe067882bfa11fcce60314cdd7a01ff559a03e3 0.0s

=> => sending tarball 16.6s

unpacking harbor.idockerinaction.info/p1/centos-7.9.2009-tomcat-8.5.43-app1:v1 (sha256:4b67b2954b74656002d28b9057613a19589d1984d16ba633666f72fb331e9e87)...

Loaded image: harbor.idockerinaction.info/p1/centos-7.9.2009-tomcat-8.5.43-app1:v1

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:4b67b2954b74656002d28b9057613a19589d1984d16ba633666f72fb331e9e87)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:4b67b2954b74656002d28b9057613a19589d1984d16ba633666f72fb331e9e87: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:bab422fb05dae594a4b1a37b0fe067882bfa11fcce60314cdd7a01ff559a03e3: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.7 s total: 10.0 K (5.9 KiB/s)

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-tomcat-8.5.43-app1#

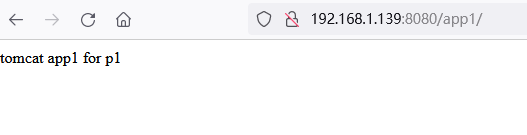

2.1.3 Start a container from the image to test it

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-tomcat-8.5.43-app1# nerdctl run --name=tomcattest --rm -it -p 8080:8080 harbor.idockerinaction.info/p1/centos-7.9.2009-tomcat-8.5.43-app1:v1

Using CATALINA_BASE: /apps/tomcat

Using CATALINA_HOME: /apps/tomcat

Using CATALINA_TMPDIR: /apps/tomcat/temp

Using JRE_HOME: /usr/local/jdk

Using CLASSPATH: /apps/tomcat/bin/bootstrap.jar:/apps/tomcat/bin/tomcat-juli.jar

Tomcat started.

# <nerdctl>

127.0.0.1 localhost localhost.localdomain

::1 localhost localhost.localdomain

10.4.0.90 0a218668cfa9 tomcattest

# </nerdctl>

Verify that the test page can be reached.

2.2 Building the Nginx application image

2.2.1 Prepare the files needed to build the image

#build-command.sh

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-nginx-1.22.0-web1# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.idockerinaction.info/p1/nginx-web1:${TAG} .

#echo "Image build finished; pushing to harbor next"

#sleep 1

#docker push harbor.idockerinaction.info/p1/nginx-web1:${TAG}

#echo "Image pushed to harbor"

#nerdctl build -t harbor.idockerinaction.info/p1/nginx-web1:${TAG} .

#nerdctl push harbor.idockerinaction.info/p1/nginx-web1:${TAG}

nerdctl build -t harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:${TAG} .

nerdctl push harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:${TAG}

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-nginx-1.22.0-web1#

2.2.2 Build and push the image

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-nginx-1.22.0-web1# bash build-command.sh v1

[+] Building 10.4s (11/11) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 395B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base 0.1s

=> [auth] baseimages/centos-7.9.2009-nginx-1.22.0:pull token for harbor.idockerinaction.info 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 3.52kB 0.0s

=> [1/5] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base@sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base@sha256:1503590a40fe836a495151e72b7a5b0961942b7beb5 0.0s

=> [2/5] ADD nginx.conf /apps/nginx/conf/nginx.conf 0.0s

=> [3/5] ADD app1.tar.gz /apps/nginx/html/webapp/ 0.0s

=> [4/5] ADD index.html /apps/nginx/html/index.html 0.0s

=> [5/5] RUN mkdir -p /apps/nginx/html/webapp/static /apps/nginx/html/webapp/images 0.1s

=> exporting to docker image format 10.0s

=> => exporting layers 0.1s

=> => exporting manifest sha256:9d218810bf8cfd49f4b9407ffda6688b7b63e1caf85221ca3b7bb0389a5bcc10 0.0s

=> => exporting config sha256:ba3667da6dbba2a281ba6c537931f4c58058996524745dae6fde5c796895862b 0.0s

=> => sending tarball 9.9s

unpacking harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:v1 (sha256:9d218810bf8cfd49f4b9407ffda6688b7b63e1caf85221ca3b7bb0389a5bcc10)...

Loaded image: harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:v1

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:9d218810bf8cfd49f4b9407ffda6688b7b63e1caf85221ca3b7bb0389a5bcc10)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:9d218810bf8cfd49f4b9407ffda6688b7b63e1caf85221ca3b7bb0389a5bcc10: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:ba3667da6dbba2a281ba6c537931f4c58058996524745dae6fde5c796895862b: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.3 s total: 7.6 Ki (5.8 KiB/s)

root@image-build:/opt/dockerfile/web/p1/centos-7.9.2009-nginx-1.22.0-web1#

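2.2.3 Start a container from the image to test it

Starting this container on its own fails, because a standalone container cannot resolve the Tomcat Service name used in the nginx configuration file. The image can only be started inside K8s, where that name resolves.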

root@image-build:/opt/dockerfile/web/nginx/centos-7.9.2009-nginx-1.22.0# nerdctl run --name nginxtest -it --rm -p 80:80 harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:v1

nginx: [emerg] host not found in upstream "p1-tomcat-app1-service.p1:80" in /apps/nginx/conf/nginx.conf:35
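If a local smoke test of this image is still wanted, one option is to give the container a static hosts entry for the upstream name so that nginx gets past configuration loading. A sketch, assuming your nerdctl version supports `--add-host` (as `docker run` does). The IP address is a placeholder you would replace, proxied requests would still fail unless something listens on port 80 at that address, and the `NERDCTL` override is an assumption so the sketch prints the command by default:

```shell
#!/bin/bash
NERDCTL="${NERDCTL:-echo nerdctl}"   # dry run by default; set NERDCTL=nerdctl to execute
UPSTREAM_HOST="p1-tomcat-app1-service.p1"   # name from the nginx error message above
IMAGE="harbor.idockerinaction.info/p1/centos-7.9.2009-nginx-1.22.0-web1:v1"

# run_nginx_local UPSTREAM_IP: maps the upstream service name to the given IP.
run_nginx_local() {
    local ip=$1
    $NERDCTL run --name nginxtest -it --rm -p 80:80 \
        --add-host "${UPSTREAM_HOST}:${ip}" "$IMAGE"
}

# 192.0.2.10 is a documentation-only placeholder address (RFC 5737).
run_nginx_local 192.0.2.10
```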

2.3 Deploy the tomcat app1 service

root@image-build:/opt/k8s-data/yaml/namespaces# kubectl apply -f p1-ns.yaml

namespace/p1 created

root@image-build:/opt/k8s-data/yaml/namespaces# cd ../p1/tomcat-app1

root@image-build:/opt/k8s-data/yaml/p1/tomcat-app1# kubectl apply -f tomcat-app1.yaml

deployment.apps/p1-tomcat-app1-deployment created

service/p1-tomcat-app1-service created

root@image-build:/opt/k8s-data/yaml/p1/tomcat-app1# kubectl get svc -n p1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

p1-tomcat-app1-service ClusterIP 10.100.32.185 <none> 80/TCP 71s
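`kubectl get svc` only shows that the Service object exists. A sketch of two follow-up checks: waiting for the Deployment to finish rolling out, and listing the Service endpoints. The resource names come from the `kubectl apply` output above, the 120-second timeout is an arbitrary choice, and the `KUBECTL` override is an assumption so the sketch prints its commands by default:

```shell
#!/bin/bash
KUBECTL="${KUBECTL:-echo kubectl}"   # dry run by default; set KUBECTL=kubectl to execute
NS="p1"

check_tomcat_app1() {
    # Blocks until all replicas are available or the timeout expires.
    $KUBECTL -n "$NS" rollout status deployment/p1-tomcat-app1-deployment --timeout=120s &&
    # An empty ENDPOINTS column would mean the Service selector matches no ready Pod.
    $KUBECTL -n "$NS" get endpoints p1-tomcat-app1-service
}

check_tomcat_app1
```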

2.4 Deploy the nginx service

root@image-build:/opt/k8s-data/yaml/p1/nginx# kubectl apply -f nginx.yaml

deployment.apps/p1-nginx-deployment created

service/p1-nginx-service created

root@image-build:/opt/k8s-data/yaml/p1/nginx# kubectl get svc -n p1

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

p1-nginx-service NodePort 10.100.62.129 <none> 80:30090/TCP,443:30091/TCP 27s

p1-tomcat-app1-service ClusterIP 10.100.32.185 <none> 80/TCP 11m
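In the `PORT(S)` column, each `port:nodePort/protocol` pair maps a Service port to the port opened on every node, so `80:30090/TCP` means HTTP is reachable on node port 30090. A sketch of a helper that extracts the node port for a given Service port from that column; `node_port_for` is a hypothetical name:

```shell
#!/bin/bash
# node_port_for SERVICE_PORT PORTS_COLUMN
# Example column value: 80:30090/TCP,443:30091/TCP
node_port_for() {
    local want=$1 ports=$2 entry
    local IFS=,
    for entry in $ports; do
        # entry looks like 80:30090/TCP; ClusterIP entries (80/TCP) have no colon.
        case "$entry" in
            "$want":*)
                entry=${entry#*:}              # drop the "80:" prefix
                printf '%s\n' "${entry%%/*}"   # drop the "/TCP" suffix
                return 0 ;;
        esac
    done
    return 1
}

node_port_for 80 "80:30090/TCP,443:30091/TCP"
```

The resulting port is what goes into the browser or `curl` URL together with the address of any cluster node.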

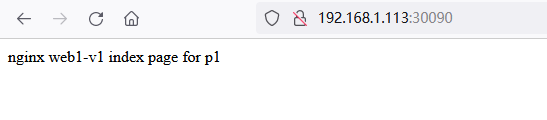

2.5 Verify access; note the trailing / in the URL

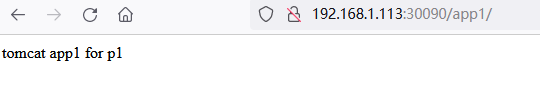

3 Application Containerization Case 3: PV/PVC and ZooKeeper

3.1 Building the ZooKeeper image

3.1.1 Prepare the files needed to build the image

#Dockerfile

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper# cat Dockerfile

FROM harbor.idockerinaction.info/baseimages/slim_java:8

ENV ZK_VERSION 3.4.14

ADD repositories /etc/apk/repositories

# Copy the ZooKeeper tarball, its signature, and the KEYS file from the build context

COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz

COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc

COPY KEYS /tmp/KEYS

RUN apk add --no-cache --virtual .build-deps \

ca-certificates \

gnupg \

tar \

wget && \

#

# Install dependencies

apk add --no-cache \

bash && \

#

#

# Verify the signature

export GNUPGHOME="$(mktemp -d)" && \

gpg -q --batch --import /tmp/KEYS && \

gpg -q --batch --no-auto-key-retrieve --verify /tmp/zk.tgz.asc /tmp/zk.tgz && \

#

# Set up directories

#

mkdir -p /zookeeper/data /zookeeper/wal /zookeeper/log && \

#

# Install

tar -x -C /zookeeper --strip-components=1 --no-same-owner -f /tmp/zk.tgz && \

#

# Slim down

cd /zookeeper && \

cp dist-maven/zookeeper-${ZK_VERSION}.jar . && \

rm -rf \

*.txt \

*.xml \

bin/README.txt \

bin/*.cmd \

conf/* \

contrib \

dist-maven \

docs \

lib/*.txt \

lib/cobertura \

lib/jdiff \

recipes \

src \

zookeeper-*.asc \

zookeeper-*.md5 \

zookeeper-*.sha1 && \

#

# Clean up

apk del .build-deps && \

rm -rf /tmp/* "$GNUPGHOME"

COPY conf /zookeeper/conf/

COPY bin/zkReady.sh /zookeeper/bin/

COPY entrypoint.sh /

ENV PATH=/zookeeper/bin:${PATH} \

ZOO_LOG_DIR=/zookeeper/log \

ZOO_LOG4J_PROP="INFO, CONSOLE, ROLLINGFILE" \

JMXPORT=9010

ENTRYPOINT [ "/entrypoint.sh" ]

CMD [ "zkServer.sh", "start-foreground" ]

EXPOSE 2181 2888 3888 9010
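The Dockerfile copies `bin/zkReady.sh`, which is not listed here. As an illustration of what a readiness check for this image could look like (not necessarily what that script does), here is a sketch using ZooKeeper's `ruok` four-letter command, which answers `imok` when the server is running. It assumes `nc` is available where the check runs and that four-letter commands are enabled, which is understood to be the default in 3.4.x; check `4lw.commands.whitelist` if the probe gets no reply:

```shell
#!/bin/bash
# zk_ruok [HOST] [PORT]: succeeds if the server answers "imok".
zk_ruok() {
    local host=${1:-127.0.0.1} port=${2:-2181} reply
    # Send the four-letter command and read the one-word reply.
    reply=$(printf 'ruok' | nc "$host" "$port")
    if [ "$reply" = "imok" ]; then
        echo "zookeeper at $host:$port is up"
    else
        echo "no imok from $host:$port (got: '${reply}')" >&2
        return 1
    fi
}

# Intended use against a running server with client port 2181 reachable:
#   zk_ruok 127.0.0.1 2181
```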

#build-command.sh

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper# cat build-command.sh

#!/bin/bash

TAG=$1

#nerdctl build -t harbor.idockerinaction.info/p1/zookeeper:${TAG} .

#nerdctl push harbor.idockerinaction.info/p1/zookeeper:${TAG}

nerdctl build -t harbor.idockerinaction.info/p1/alpine-3.6.2-jdk-8u144-zookeeper:${TAG} .

sleep 1

nerdctl push harbor.idockerinaction.info/p1/alpine-3.6.2-jdk-8u144-zookeeper:${TAG}

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper#

3.1.2 Build and push the image

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper# bash build-command.sh v3.4.14

[+] Building 31.7s (15/15) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 1.75kB 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/slim_java:8 0.2s

=> [auth] baseimages/slim_java:pull token for harbor.idockerinaction.info 0.0s

=> [1/9] FROM harbor.idockerinaction.info/baseimages/slim_java:8@sha256:044e42fb89cda51e83701349a9b79e8117300f4841511ed853f73caf 2.1s

=> => resolve harbor.idockerinaction.info/baseimages/slim_java:8@sha256:044e42fb89cda51e83701349a9b79e8117300f4841511ed853f73caf 0.0s

=> => sha256:88286f41530e93dffd4b964e1db22ce4939fffa4a4c665dab8591fbab03d4926 1.99MB / 1.99MB 0.1s

=> => sha256:fd529fe251b34db45de24e46ae4d8f57c5b8bbcfb1b8d8c6fb7fa9fcdca8905e 27.33MB / 27.33MB 0.6s

=> => extracting sha256:88286f41530e93dffd4b964e1db22ce4939fffa4a4c665dab8591fbab03d4926 0.2s

=> => sha256:7141511c4dad1bb64345a3cd38e009b1bcd876bba3e92be040ab2602e9de7d1e 3.19MB / 3.19MB 0.2s

=> => extracting sha256:7141511c4dad1bb64345a3cd38e009b1bcd876bba3e92be040ab2602e9de7d1e 0.2s

=> => extracting sha256:fd529fe251b34db45de24e46ae4d8f57c5b8bbcfb1b8d8c6fb7fa9fcdca8905e 1.4s

=> [internal] load build context 1.4s

=> => transferring context: 37.75MB 1.4s

=> [2/9] ADD repositories /etc/apk/repositories 0.2s

=> [3/9] COPY zookeeper-3.4.14.tar.gz /tmp/zk.tgz 0.1s

=> [4/9] COPY zookeeper-3.4.14.tar.gz.asc /tmp/zk.tgz.asc 0.0s

=> [5/9] COPY KEYS /tmp/KEYS 0.0s

=> [6/9] RUN apk add --no-cache --virtual .build-deps ca-certificates gnupg tar 21.8s

=> [7/9] COPY conf /zookeeper/conf/ 0.1s

=> [8/9] COPY bin/zkReady.sh /zookeeper/bin/ 0.0s

=> [9/9] COPY entrypoint.sh / 0.0s

=> exporting to docker image format 7.0s

=> => exporting layers 3.1s

=> => exporting manifest sha256:8598abec7bf68af5ddf44a3fa091044468829de0f106dd1271f50cfc1ff3a7ff 0.0s

=> => exporting config sha256:ce3aefaa5b7ede9859098bce12ed05ff9dfd6b2368cba8fc19da21357d904017 0.0s

=> => sending tarball 3.8s

Loaded image: harbor.idockerinaction.info/p1/alpine-3.6.2-jdk-8u144-zookeeper:v3.4.14

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:8598abec7bf68af5ddf44a3fa091044468829de0f106dd1271f50cfc1ff3a7ff)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:8598abec7bf68af5ddf44a3fa091044468829de0f106dd1271f50cfc1ff3a7ff: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:ce3aefaa5b7ede9859098bce12ed05ff9dfd6b2368cba8fc19da21357d904017: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.8 s total: 16.1 K (8.9 KiB/s)

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper#

3.1.3 Start a container from the image to test

root@image-build:/opt/k8s-data/dockerfile/web/p1/alpine-3.6.2-jdk-8u144-zookeeper# nerdctl run --name=zktest --rm -it -p 2181:2181 harbor.idockerinaction.info/p1/alpine-3.6.2-jdk-8u144-zookeeper:v3.4.14

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 07:57:49,531 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 07:57:49,542 [myid:] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2023-06-04 07:57:49,542 [myid:] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 1

2023-06-04 07:57:49,544 [myid:] - WARN [main:QuorumPeerMain@116] - Either no config or no quorum defined in config, running in standalone mode

2023-06-04 07:57:49,544 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@138] - Purge task started.

2023-06-04 07:57:49,547 [myid:] - INFO [main:QuorumPeerConfig@136] - Reading configuration from: /zookeeper/bin/../conf/zoo.cfg

2023-06-04 07:57:49,552 [myid:] - INFO [main:ZooKeeperServerMain@98] - Starting server

2023-06-04 07:57:49,561 [myid:] - INFO [PurgeTask:DatadirCleanupManager$PurgeTask@144] - Purge task completed.

2023-06-04 07:57:49,566 [myid:] - INFO [main:Environment@100] - Server environment:zookeeper.version=3.4.14-4c25d480e66aadd371de8bd2fd8da255ac140bcf, built on 03/06/2019 16:18 GMT

2023-06-04 07:57:49,566 [myid:] - INFO [main:Environment@100] - Server environment:host.name=e62347948bec

2023-06-04 07:57:49,567 [myid:] - INFO [main:Environment@100] - Server environment:java.version=1.8.0_144

2023-06-04 07:57:49,567 [myid:] - INFO [main:Environment@100] - Server environment:java.vendor=Oracle Corporation

2023-06-04 07:57:49,568 [myid:] - INFO [main:Environment@100] - Server environment:java.home=/usr/lib/jvm/java-8-oracle

2023-06-04 07:57:49,568 [myid:] - INFO [main:Environment@100] - Server environment:java.class.path=/zookeeper/bin/../zookeeper-server/target/classes:/zookeeper/bin/../build/classes:/zookeeper/bin/../zookeeper-server/target/lib/*.jar:/zookeeper/bin/../build/lib/*.jar:/zookeeper/bin/../lib/slf4j-log4j12-1.7.25.jar:/zookeeper/bin/../lib/slf4j-api-1.7.25.jar:/zookeeper/bin/../lib/netty-3.10.6.Final.jar:/zookeeper/bin/../lib/log4j-1.2.17.jar:/zookeeper/bin/../lib/jline-0.9.94.jar:/zookeeper/bin/../lib/audience-annotations-0.5.0.jar:/zookeeper/bin/../zookeeper-3.4.14.jar:/zookeeper/bin/../zookeeper-server/src/main/resources/lib/*.jar:/zookeeper/bin/../conf:

2023-06-04 07:57:49,569 [myid:] - INFO [main:Environment@100] - Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2023-06-04 07:57:49,570 [myid:] - INFO [main:Environment@100] - Server environment:java.io.tmpdir=/tmp

2023-06-04 07:57:49,572 [myid:] - INFO [main:Environment@100] - Server environment:java.compiler=<NA>

2023-06-04 07:57:49,572 [myid:] - INFO [main:Environment@100] - Server environment:os.name=Linux

2023-06-04 07:57:49,573 [myid:] - INFO [main:Environment@100] - Server environment:os.arch=amd64

2023-06-04 07:57:49,574 [myid:] - INFO [main:Environment@100] - Server environment:os.version=5.15.0-73-generic

2023-06-04 07:57:49,574 [myid:] - INFO [main:Environment@100] - Server environment:user.name=root

2023-06-04 07:57:49,575 [myid:] - INFO [main:Environment@100] - Server environment:user.home=/root

2023-06-04 07:57:49,576 [myid:] - INFO [main:Environment@100] - Server environment:user.dir=/zookeeper

2023-06-04 07:57:49,581 [myid:] - INFO [main:ZooKeeperServer@836] - tickTime set to 2000

2023-06-04 07:57:49,582 [myid:] - INFO [main:ZooKeeperServer@845] - minSessionTimeout set to -1

2023-06-04 07:57:49,582 [myid:] - INFO [main:ZooKeeperServer@854] - maxSessionTimeout set to -1

2023-06-04 07:57:49,598 [myid:] - INFO [main:ServerCnxnFactory@117] - Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory

2023-06-04 07:57:49,609 [myid:] - INFO [main:NIOServerCnxnFactory@89] - binding to port 0.0.0.0/0.0.0.0:2181

root@image-build:~# nerdctl ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e62347948bec harbor.idockerinaction.info/p1/alpine-3.6.2-jdk-8u144-zookeeper:v3.4.14 "/entrypoint.sh zkSe…" About a minute ago Up 0.0.0.0:2181->2181/tcp zktest

root@image-build:~# nerdctl exec -it zktest bash

bash-4.3# netstat -an |grep 2181

tcp 0 0 :::2181 :::* LISTEN
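The manual `netstat` check above can be scripted. The following is a minimal sketch, not part of the original notes: `is_listening` is a hypothetical helper that reads `netstat -an` output on stdin and succeeds when a socket is in LISTEN state on the given port.

```shell
# Hypothetical helper (assumption, not from the original notes).
# Reads `netstat -an` output on stdin; exit status 0 means some socket
# is in LISTEN state on the given port.
is_listening() {
  local port="$1"
  awk -v port="$port" '
    # Field 4 is the local address, the last field is the socket state.
    $NF == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
    END { exit !found }
  '
}

# Possible usage against the test container started above:
#   nerdctl exec zktest netstat -an | is_listening 2181 && echo "2181 is listening"
```

Matching on the trailing `:PORT` keeps the check independent of whether the listener is bound on IPv4 or IPv6.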

3.2 Create the PV/PVC

root@image-build:/opt/k8s-data/yaml/p1/zookeeper/pv# kubectl apply -f zookeeper-persistentvolume.yaml

persistentvolume/zookeeper-datadir-pv-1 created

persistentvolume/zookeeper-datadir-pv-2 created

persistentvolume/zookeeper-datadir-pv-3 created

root@image-build:/opt/k8s-data/yaml/p1/zookeeper/pv# kubectl apply -f zookeeper-persistentvolumeclaim.yaml

persistentvolumeclaim/zookeeper-datadir-pvc-1 created

persistentvolumeclaim/zookeeper-datadir-pvc-2 created

persistentvolumeclaim/zookeeper-datadir-pvc-3 created

3.3 Create the pods for testing

root@image-build:/opt/k8s-data/yaml/p1/zookeeper# kubectl apply -f zookeeper.yaml

3.4 Verify the ZooKeeper role status

root@image-build:/opt/k8s-data/yaml/p1/zookeeper# kubectl exec -it zookeeper1-7fccd69c54-7jxgg -n p1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-4.3# zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower

root@image-build:/opt/k8s-data/yaml/p1/zookeeper# kubectl exec -it zookeeper2-784448bdcf-zcm5x -n p1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-4.3# zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: leader

bash-4.3# exit

exit

root@image-build:/opt/k8s-data/yaml/p1/zookeeper# kubectl exec -it zookeeper3-cff5f4c48-jbfc2 -n p1 bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

bash-4.3# zkServer.sh status

ZooKeeper JMX enabled by default

ZooKeeper remote JMX Port set to 9010

ZooKeeper remote JMX authenticate set to false

ZooKeeper remote JMX ssl set to false

ZooKeeper remote JMX log4j set to true

Using config: /zookeeper/bin/../conf/zoo.cfg

Mode: follower
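A healthy three-node ensemble should report exactly one leader, as the three sessions above show. The sketch below is one way to automate that check; `zk_mode` and `has_single_leader` are hypothetical helper names, and the pod names in the usage comment are the ones from this transcript (they change whenever the pods are recreated).

```shell
# Hypothetical helpers (assumption, not from the original notes).

# zk_mode: read `zkServer.sh status` output on stdin, print the role.
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# has_single_leader MODE...: succeed if exactly one argument is "leader".
has_single_leader() {
  local leaders=0 mode
  for mode in "$@"; do
    if [ "$mode" = "leader" ]; then
      leaders=$((leaders + 1))
    fi
  done
  [ "$leaders" -eq 1 ]
}

# Possible usage:
#   modes=()
#   for pod in zookeeper1-7fccd69c54-7jxgg zookeeper2-784448bdcf-zcm5x zookeeper3-cff5f4c48-jbfc2; do
#     modes+=("$(kubectl exec "$pod" -n p1 -- zkServer.sh status | zk_mode)")
#   done
#   has_single_leader "${modes[@]}" && echo "ensemble looks healthy"
```

The usage comment passes the command after `--`, which is the form the deprecation warning in the transcript asks for.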

4 Business containerization case 4: PV/PVC and standalone Redis

4.1 Build the standalone Redis image

4.1.1 Prepare the files for building the image

#build-command.sh

root@image-build:/opt/k8s-data/dockerfile/web/p1/redis# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.idockerinaction.info/p1/redis:${TAG} .

#sleep 3

#docker push harbor.idockerinaction.info/p1/redis:${TAG}

nerdctl build -t harbor.idockerinaction.info/p1/centos-7.9.2009-redis-4.0.14:${TAG} .

sleep 1

nerdctl push harbor.idockerinaction.info/p1/centos-7.9.2009-redis-4.0.14:${TAG}
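The script above takes the tag from `$1` without checking it, so calling it with no argument would produce an image reference with an empty tag. A slightly more defensive variant might look like the sketch below. The function names `image_ref` and `build_and_push` are made up for illustration; the registry and repository are the ones used in the script above.

```shell
# Sketch of a more defensive build script (function names are assumptions).
REGISTRY="harbor.idockerinaction.info"
REPO="p1/centos-7.9.2009-redis-4.0.14"

# image_ref TAG: print the full image reference, or fail if TAG is missing.
image_ref() {
  local tag="${1:-}"
  if [ -z "$tag" ]; then
    echo "usage: build-command.sh <tag>" >&2
    return 1
  fi
  printf '%s/%s:%s\n' "$REGISTRY" "$REPO" "$tag"
}

# build_and_push TAG: build the image and push it only if the build succeeded.
build_and_push() {
  local ref
  ref="$(image_ref "${1:-}")" || return 1
  nerdctl build -t "$ref" . && nerdctl push "$ref"
}

# Possible usage:
#   build_and_push v4.0.14
```

Chaining the push with `&&` replaces the `sleep 1` in the original script: the push simply does not run when the build fails.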

4.1.2 Build and push the image

root@image-build:/opt/k8s-data/dockerfile/web/p1/centos-7.9.2009-redis-4.0.14# bash build-command.sh v4.0.14

[+] Building 11.6s (10/10) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 626B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-base:7.9.2009 0.1s

=> [1/5] FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-base:7.9.2009@sha256:fd68f672b0b4ce140222e2df9a8194e5e713f6b7277b9b9 0.0s

=> [internal] load build context 0.0s

=> => transferring context: 104B 0.0s

=> CACHED [2/5] ADD redis-4.0.14.tar.gz /usr/local/src 0.0s

=> CACHED [3/5] RUN ln -sv /usr/local/src/redis-4.0.14 /usr/local/redis && cd /usr/local/redis && make && cp src/redis-cli /usr/ 0.0s

=> CACHED [4/5] ADD redis.conf /usr/local/redis/redis.conf 0.0s

=> CACHED [5/5] ADD run_redis.sh /usr/local/redis/entrypoint.sh 0.0s

=> exporting to docker image format 11.4s

=> => exporting layers 0.0s

=> => exporting manifest sha256:6755bf1dbf819c131817c8c68271668122603671b9b0a405ed92073c1ba44cfb 0.0s

=> => exporting config sha256:929c5366d4cc9de9d9c20d725dcaf14527e49740275f4449f8972e8ed88c21ae 0.0s

=> => sending tarball 11.4s

unpacking harbor.idockerinaction.info/p1/centos-7.9.2009-redis:v4.0.14 (sha256:6755bf1dbf819c131817c8c68271668122603671b9b0a405ed92073c1ba44cfb)...

Loaded image: harbor.idockerinaction.info/p1/centos-7.9.2009-redis:v4.0.14

INFO[0000] pushing as a reduced-platform image (application/vnd.docker.distribution.manifest.v2+json, sha256:6755bf1dbf819c131817c8c68271668122603671b9b0a405ed92073c1ba44cfb)

WARN[0000] skipping verifying HTTPS certs for "harbor.idockerinaction.info"

manifest-sha256:6755bf1dbf819c131817c8c68271668122603671b9b0a405ed92073c1ba44cfb: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:929c5366d4cc9de9d9c20d725dcaf14527e49740275f4449f8972e8ed88c21ae: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.6 s total: 6.1 Ki (3.8 KiB/s)

root@image-build:/opt/k8s-data/dockerfile/web/p1/centos-7.9.2009-redis-4.0.14#

4.1.3 Start a container from the image to test

root@image-build:/opt/k8s-data/dockerfile/web/p1/centos-7.9.2009-redis-4.0.14# nerdctl run --name=redistest -it --rm harbor.idockerinaction.info/p1/centos-7.9.2009-redis:v4.0.14

7:C 04 Jun 19:05:40.750 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo

7:C 04 Jun 19:05:40.751 # Redis version=4.0.14, bits=64, commit=00000000, modified=0, pid=7, just started

7:C 04 Jun 19:05:40.751 # Configuration loaded

# <nerdctl>

127.0.0.1 localhost localhost.localdomain

::1 localhost localhost.localdomain

10.4.0.104 691b084eb0e3 redistest

# </nerdctl>

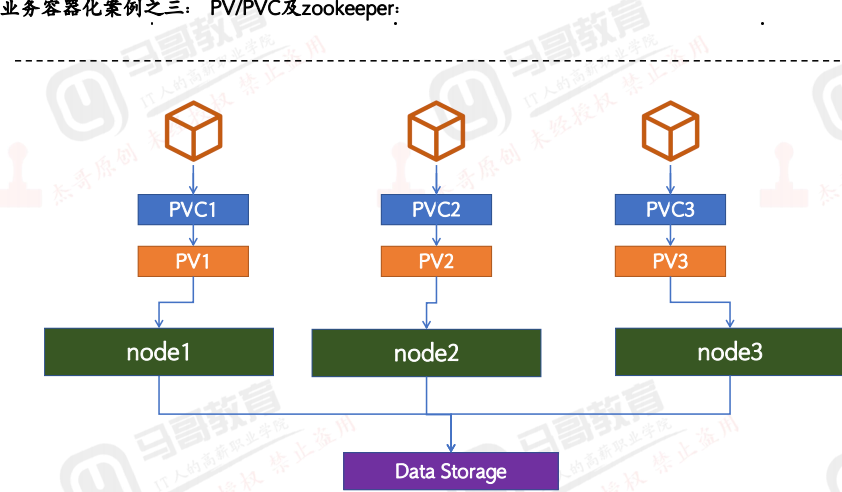

4.2 Create the PV/PVC used by the Redis pod

root@image-build:/opt/k8s-data/yaml/p1/redis/pv# kubectl apply -f redis-persistentvolume.yaml

persistentvolume/redis-datadir-pv-1 created

root@image-build:/opt/k8s-data/yaml/p1/redis/pv# kubectl apply -f redis-persistentvolumeclaim.yaml

persistentvolumeclaim/redis-datadir-pvc-1 created

root@image-build:/opt/k8s-data/yaml/p1/redis/pv#

4.3 Create the Redis pod for testing

root@image-build:/opt/k8s-data/yaml/p1/redis# kubectl apply -f redis.yaml

deployment.apps/deploy-devops-redis created

service/srv-devops-redis created

root@image-build:/opt/k8s-data/yaml/p1/redis#

4.4 Test writing data

#Write data

root@ubuntu:~# redis-cli -h 192.168.1.111 -p 36379 -a 123456

192.168.1.111:36379> keys *

(empty list or set)

192.168.1.111:36379> set key1 value1

OK

192.168.1.111:36379> set key2 value2

OK

192.168.1.111:36379> keys *

1) "key1"

2) "key2"

#Check that the RDB file has been saved

root@k8s-ha1:/data/k8sdata/p1/redis-datadir-1# ll

total 4

drwxr-xr-x 2 root root 22 Jun 4 19:29 ./

drwxr-xr-x 8 root root 138 Jun 4 19:22 ../

-rw-r--r-- 1 root root 124 Jun 4 19:29 dump.rdb

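4.5 Test deleting the Redis pod

As shown below, after the pod is deleted and recreated, the Redis data still exists because it is saved on persistent storage.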

root@image-build:/opt/k8s-data/dockerfile/web/p1# kubectl get pods -n p1

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-659bf87b4-wr7w5 1/1 Running 0 9m23s

p1-nginx-deployment-88b498c8f-f82h5 1/1 Running 2 (5h22m ago) 26h

p1-tomcat-app1-deployment-f5bdb7f9b-29ct2 1/1 Running 1 (5h22m ago) 26h

zookeeper1-7fccd69c54-7jxgg 1/1 Running 0 146m

zookeeper2-784448bdcf-dvzp6 1/1 Running 0 113m

zookeeper3-cff5f4c48-jbfc2 1/1 Running 0 146m

root@image-build:/opt/k8s-data/dockerfile/web/p1# kubectl delete pod deploy-devops-redis-659bf87b4-wr7w5 -n p1

pod "deploy-devops-redis-659bf87b4-wr7w5" deleted

root@image-build:/opt/k8s-data/dockerfile/web/p1# kubectl get pods -n p1

NAME READY STATUS RESTARTS AGE

deploy-devops-redis-659bf87b4-pvvvx 1/1 Running 0 39s

p1-nginx-deployment-88b498c8f-f82h5 1/1 Running 2 (5h23m ago) 26h

p1-tomcat-app1-deployment-f5bdb7f9b-29ct2 1/1 Running 1 (5h23m ago) 26h

zookeeper1-7fccd69c54-7jxgg 1/1 Running 0 147m

zookeeper2-784448bdcf-dvzp6 1/1 Running 0 114m

zookeeper3-cff5f4c48-jbfc2 1/1 Running 0 147m

root@image-build:/opt/k8s-data/dockerfile/web/p1#

root@ubuntu:~# redis-cli -h 192.168.1.111 -p 36379 -a 123456

192.168.1.111:36379> keys *

1) "key1"

2) "key2"

192.168.1.111:36379>

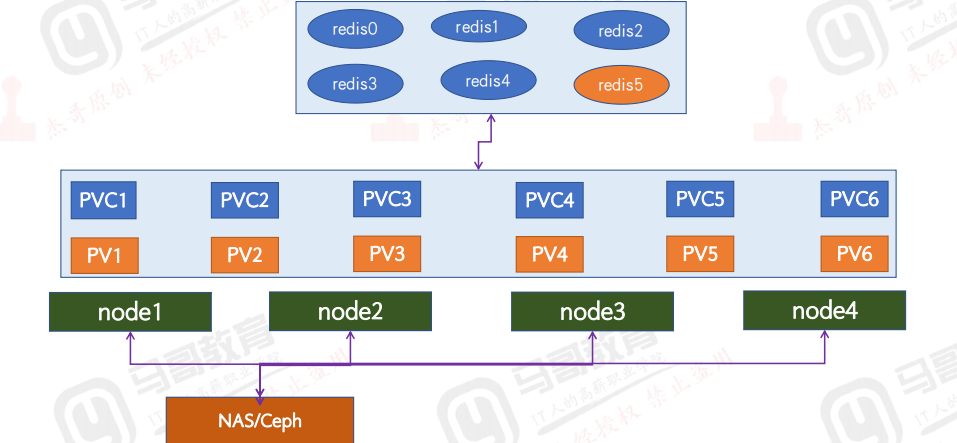

5 Business containerization case 5: PV/PVC and Redis Cluster

5.1 Create the PVs

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster/pv# kubectl apply -f redis-cluster-pv.yaml

persistentvolume/redis-cluster-pv0 created

persistentvolume/redis-cluster-pv1 created

persistentvolume/redis-cluster-pv2 created

persistentvolume/redis-cluster-pv3 created

persistentvolume/redis-cluster-pv4 created

persistentvolume/redis-cluster-pv5 created

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster/pv#

5.2 Create the ConfigMap

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# cat redis.conf

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl create configmap redis-conf --from-file=redis.conf -n p1

configmap/redis-conf created

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl get configmap -n p1

NAME DATA AGE

kube-root-ca.crt 1 2d22h

redis-conf 1 14s

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl describe configmap redis-conf -n p1

Name: redis-conf

Namespace: p1

Labels: <none>

Annotations: <none>

Data

====

redis.conf:

----

appendonly yes

cluster-enabled yes

cluster-config-file /var/lib/redis/nodes.conf

cluster-node-timeout 5000

dir /var/lib/redis

port 6379

BinaryData

====

Events: <none>
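Because the ConfigMap is built directly from `redis.conf`, a typo in that file only surfaces once the pods start. One option is a quick sanity check before running `kubectl create configmap`. The helper below is a hypothetical sketch; the list of required directives is an assumption based on the file shown above, not an official minimum.

```shell
# Hypothetical pre-flight check (assumption, not from the original notes).
# check_cluster_conf FILE: fail if a directive that this cluster setup
# relies on is missing from FILE.
check_cluster_conf() {
  local conf="$1" key
  for key in "cluster-enabled yes" "cluster-config-file" "appendonly yes"; do
    if ! grep -Eq "^${key}( |\$)" "$conf"; then
      echo "missing: ${key}" >&2
      return 1
    fi
  done
  return 0
}

# Possible usage:
#   check_cluster_conf redis.conf && \
#     kubectl create configmap redis-conf --from-file=redis.conf -n p1
```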

5.3 Create the Redis cluster

The PVCs are created together with the pods.

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl apply -f redis.yaml

service/redis created

service/redis-access created

statefulset.apps/redis created

5.4 Initialize the cluster

Redis 4 and earlier are initialized with redis-trib; Redis 5 and later are initialized with redis-cli.
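For the Redis 5 path mentioned above, the built-in equivalent is `redis-cli --cluster create` with `--cluster-replicas`. The sketch below only assembles that command line from a list of addresses so it can be inspected before being run; `build_create_cmd` is a hypothetical helper, and port 6379 is taken from the `redis.conf` in this section.

```shell
# Hypothetical helper (assumption, not from the original notes).
# build_create_cmd REPLICAS ADDR...: print a `redis-cli --cluster create`
# command line for the given node addresses, all on port 6379.
build_create_cmd() {
  local replicas="$1"
  shift
  local cmd="redis-cli --cluster create" addr
  for addr in "$@"; do
    cmd+=" ${addr}:6379"
  done
  printf '%s --cluster-replicas %s\n' "$cmd" "$replicas"
}

# Possible usage with the six pods of this StatefulSet:
#   ips=()
#   for i in 0 1 2 3 4 5; do
#     ips+=("$(dig +short redis-$i.redis.p1.svc.cluster.local)")
#   done
#   build_create_cmd 1 "${ips[@]}"
```

With `--cluster-replicas 1`, redis-cli decides by itself which nodes become replicas of which masters, so the pairing may differ from the manual redis-0/redis-3, redis-1/redis-4, redis-2/redis-5 layout used in these notes.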

5.4.1 Start an Ubuntu pod to initialize the cluster

#If apt update is too slow, switch the apt sources to a mirror in China

root@ubuntu1804:/# apt update

root@ubuntu1804:/# apt install python2.7 python-pip redis-tools dnsutils iputils-ping net-tools

root@ubuntu1804:/# pip install --upgrade pip

#Initialize the cluster; note that redis-0.redis.p1.svc.cluster.local is the pod's fully qualified DNS name

root@ubuntu1804:/# redis-trib.py create \

`dig +short redis-0.redis.p1.svc.cluster.local`:6379 \

`dig +short redis-1.redis.p1.svc.cluster.local`:6379 \

`dig +short redis-2.redis.p1.svc.cluster.local`:6379

> Redis-trib 0.5.1 Copyright (c) HunanTV Platform developers

> INFO:root:Instance at 10.200.117.55:6379 checked

> INFO:root:Instance at 10.200.182.147:6379 checked

> INFO:root:Instance at 10.200.81.6:6379 checked

> INFO:root:Add 5462 slots to 10.200.117.55:6379

> INFO:root:Add 5461 slots to 10.200.182.147:6379

> INFO:root:Add 5461 slots to 10.200.81.6:6379

#Join redis-3 to redis-0 to form a master/replica pair

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-0.redis.p1.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-3.redis.p1.svc.cluster.local`:6379

Redis-trib 0.5.1 Copyright (c) HunanTV Platform developers

INFO:root:Instance at 10.200.81.21:6379 has joined 10.200.81.6:6379; now set replica

INFO:root:Instance at 10.200.81.21:6379 set as replica to 33628b24f2d531720bfba8c5a32e88e345ddf941

#Join redis-4 to redis-1 to form a master/replica pair

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-1.redis.p1.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-4.redis.p1.svc.cluster.local`:6379

Redis-trib 0.5.1 Copyright (c) HunanTV Platform developers

INFO:root:Instance at 10.200.182.146:6379 has joined 10.200.182.147:6379; now set replica

INFO:root:Instance at 10.200.182.146:6379 set as replica to fbfac7c3b794079c00c49b5305e3ca1356774038

#Join redis-5 to redis-2 to form a master/replica pair

root@ubuntu1804:/# redis-trib.py replicate \

--master-addr `dig +short redis-2.redis.p1.svc.cluster.local`:6379 \

--slave-addr `dig +short redis-5.redis.p1.svc.cluster.local`:6379

Redis-trib 0.5.1 Copyright (c) HunanTV Platform developers

INFO:root:Instance at 10.200.117.61:6379 has joined 10.200.117.55:6379; now set replica

INFO:root:Instance at 10.200.117.61:6379 set as replica to a17cac2bbf7289cefbc67c0c363ef4dced50eebd
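The "Add 5462 slots" / "Add 5461 slots" lines in the create output follow from dividing the 16384 hash slots of a Redis cluster across three masters: 16384 = 3 × 5461 + 1, so one master receives one extra slot. The sketch below reproduces only that arithmetic; it is not redis-trib.py's actual code, and `split_slots` is a made-up name.

```shell
# Illustrative arithmetic only (assumption, not redis-trib.py's implementation).
# split_slots N: print one contiguous slot range per master when 16384 slots
# are divided as evenly as possible among N masters.
split_slots() {
  local masters="$1" total=16384
  local base=$((total / masters)) extra=$((total % masters))
  local start=0 i count
  for ((i = 0; i < masters; i++)); do
    count=$base
    # The first `extra` masters each take one additional slot.
    if ((i < extra)); then
      count=$((base + 1))
    fi
    echo "${start}-$((start + count - 1))"
    start=$((start + count))
  done
}

split_slots 3
# 0-5461
# 5462-10922
# 10923-16383
```

These are the same three ranges that `CLUSTER NODES` reports for the masters in section 5.5.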

5.5 Verify the cluster status

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl exec -it redis-0 -n p1 -- bash

root@redis-0:/data# redis-cli

127.0.0.1:6379> cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:5

cluster_my_epoch:1

cluster_stats_messages_ping_sent:1131

cluster_stats_messages_pong_sent:1184

cluster_stats_messages_meet_sent:4

cluster_stats_messages_sent:2319

cluster_stats_messages_ping_received:1183

cluster_stats_messages_pong_received:1135

cluster_stats_messages_meet_received:1

cluster_stats_messages_received:2319

127.0.0.1:6379> CLUSTER NODES

a17cac2bbf7289cefbc67c0c363ef4dced50eebd 10.200.117.55:6379@16379 master - 0 1686044411000 2 connected 0-5461

45a2b7ec833fdb9bfeb3b8562458a84c81951599 10.200.117.61:6379@16379 slave a17cac2bbf7289cefbc67c0c363ef4dced50eebd 0 1686044411000 5 connected

fbfac7c3b794079c00c49b5305e3ca1356774038 10.200.182.147:6379@16379 master - 0 1686044412005 0 connected 5462-10922

a76541e79c5c0316f6eddc7ae102a8bb4e066d7c 10.200.182.146:6379@16379 slave fbfac7c3b794079c00c49b5305e3ca1356774038 0 1686044411003 4 connected

be7305c3a40955ef9632c27dd9dc2fc4384c2499 10.200.81.21:6379@16379 slave 33628b24f2d531720bfba8c5a32e88e345ddf941 0 1686044411503 3 connected

33628b24f2d531720bfba8c5a32e88e345ddf941 10.200.81.6:6379@16379 myself,master - 0 1686044410000 1 connected 10923-16383

127.0.0.1:6379>
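The `CLUSTER NODES` output above should contain three masters and three replicas. A small parser makes that easy to assert in a script. `count_roles` is a hypothetical helper; it relies on the third column holding the node flags, as in the output above.

```shell
# Hypothetical helper (assumption, not from the original notes).
# count_roles: read `CLUSTER NODES` output on stdin and print how many
# nodes carry the master flag and how many carry the slave flag.
count_roles() {
  awk '
    $3 ~ /master/ { m++ }
    $3 ~ /slave/  { s++ }
    END { printf "masters=%d replicas=%d\n", m, s }
  '
}

# Possible usage:
#   kubectl exec redis-0 -n p1 -- redis-cli cluster nodes | count_roles
```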

5.6 Test writing data

Write data on a master node

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl exec -it redis-1 -n p1 -- sh

# redis-cli

127.0.0.1:6379> set key1 value1

OK

Verify the data on the corresponding slave node

root@image-build:/opt/k8s-data/yaml/p1/redis-cluster# kubectl exec -it redis-4 -n p1 -- sh

# redis-cli

127.0.0.1:6379> keys *

1) "key1"

127.0.0.1:6379>

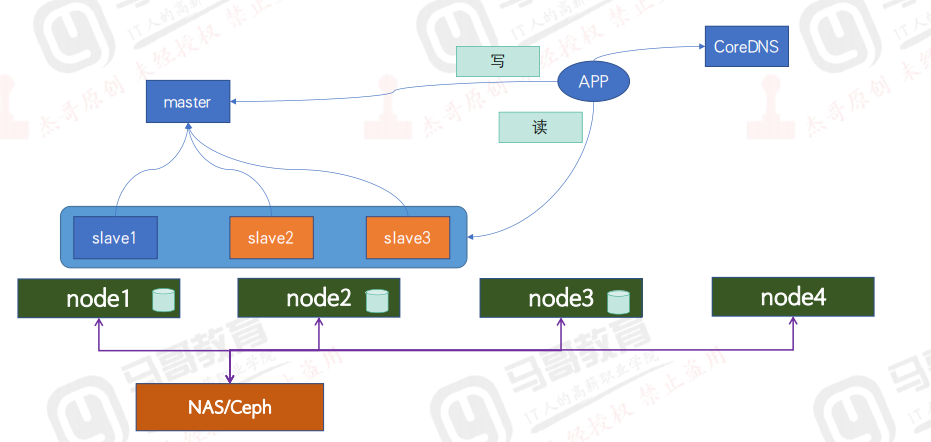

6 Business containerization case 6: MySQL with one master and multiple slaves

6.1 Create the PVs

root@image-build:/opt/k8s-data/yaml/p1/mysql/pv# kubectl apply -f mysql-persistentvolume.yaml

persistentvolume/mysql-datadir-1 created

persistentvolume/mysql-datadir-2 created

persistentvolume/mysql-datadir-3 created

persistentvolume/mysql-datadir-4 created

persistentvolume/mysql-datadir-5 created

persistentvolume/mysql-datadir-6 created

root@image-build:/opt/k8s-data/yaml/p1/mysql/pv#

6.2 Create the ConfigMap

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl apply -f mysql-configmap.yaml

configmap/mysql created

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl describe configmap mysql -n p1

Name: mysql

Namespace: p1

Labels: app=mysql

Annotations: <none>

Data

====

master.cnf:

----

# Apply this config only on the master.

[mysqld]

log-bin

log_bin_trust_function_creators=1

lower_case_table_names=1

slave.cnf:

----

# Apply this config only on slaves.

[mysqld]

super-read-only

log_bin_trust_function_creators=1

BinaryData

====

Events: <none>

root@image-build:/opt/k8s-data/yaml/p1/mysql#

6.3 Create the Services

root@image-build:/opt/k8s-data/yaml/p1/mysql# cat mysql-services.yaml

# Headless service for stable DNS entries of StatefulSet members.

apiVersion: v1

kind: Service

metadata:

  namespace: p1

  name: mysql

  labels:

    app: mysql

spec:

  ports:

  - name: mysql

    port: 3306

  clusterIP: None

  selector:

    app: mysql

---

# Client service for connecting to any MySQL instance for reads.

# For writes, you must instead connect to the master: mysql-0.mysql.

apiVersion: v1

kind: Service

metadata:

  name: mysql-read

  namespace: p1

  labels:

    app: mysql

spec:

  ports:

  - name: mysql

    port: 3306

  selector:

    app: mysql

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl apply -f mysql-services.yaml

service/mysql created

service/mysql-read created
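The headless `mysql` Service gives every StatefulSet pod a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster domain>`, which is why replicas can address the master as `mysql-0.mysql`. The helper below spells the full name out; `pod_fqdn` is a made-up name, and the default domain `cluster.local` is assumed from the Redis section of these notes.

```shell
# Hypothetical helper (assumption, not from the original notes).
# pod_fqdn STATEFULSET ORDINAL SERVICE NAMESPACE [DOMAIN]
# Prints the stable DNS name of one StatefulSet pod behind a headless Service.
pod_fqdn() {
  local sts="$1" ordinal="$2" svc="$3" ns="$4" domain="${5:-cluster.local}"
  printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$ordinal" "$svc" "$ns" "$domain"
}

pod_fqdn mysql 0 mysql p1
# mysql-0.mysql.p1.svc.cluster.local
```

Writes should go to that name, while `mysql-read` spreads read connections over all instances, as the comments in the manifest state.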

6.4 Create the MySQL pods with a StatefulSet

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl apply -f mysql-statefulset.yaml

statefulset.apps/mysql created

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl get pods -n p1

NAME READY STATUS RESTARTS AGE

mysql-0 2/2 Running 0 56s

mysql-1 1/2 Running 0 20s
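In the listing above, mysql-1 is still at 1/2 because one of its two containers is not ready yet, presumably while the replica finishes cloning data and starting up. A script that waits for the StatefulSet can filter on the READY column. `not_ready_pods` is a hypothetical helper that assumes the default `kubectl get pods` column layout.

```shell
# Hypothetical helper (assumption, not from the original notes).
# not_ready_pods: read `kubectl get pods` output on stdin and print the
# names of pods whose READY column is not N/N.
not_ready_pods() {
  awk 'NR > 1 { split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Possible usage:
#   kubectl get pods -n p1 | not_ready_pods
```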

6.5 Verify the MySQL service status

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl exec -it mysql-1 -n p1 -- sh

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 110

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: mysql-0.mysql

Master_User: root

Master_Port: 3306

Connect_Retry: 10

Master_Log_File: mysql-0-bin.000003

Read_Master_Log_Pos: 154

Relay_Log_File: mysql-1-relay-bin.000002

Relay_Log_Pos: 322

Relay_Master_Log_File: mysql-0-bin.000003

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 154

Relay_Log_Space: 531

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 100

Master_UUID: 647964b6-04d3-11ee-b87f-62c09e9e630c

Master_Info_File: /var/lib/mysql/master.info

SQL_Delay: 0

SQL_Remaining_Delay: NULL

Slave_SQL_Running_State: Slave has read all relay log; waiting for more updates

Master_Retry_Count: 86400

Master_Bind:

Last_IO_Error_Timestamp:

Last_SQL_Error_Timestamp:

Master_SSL_Crl:

Master_SSL_Crlpath:

Retrieved_Gtid_Set:

Executed_Gtid_Set:

Auto_Position: 0

Replicate_Rewrite_DB:

Channel_Name:

Master_TLS_Version:

1 row in set (0.00 sec)

ERROR:

No query specified

mysql>
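The two fields that matter most in the output above are `Slave_IO_Running` and `Slave_SQL_Running`; both must be `Yes`. The `ERROR: No query specified` at the end is harmless: `\G` already terminates the statement, so the trailing `;` is sent as an empty query. The sketch below checks the two fields non-interactively; `replication_ok` is a hypothetical helper name.

```shell
# Hypothetical helper (assumption, not from the original notes).
# replication_ok: read `SHOW SLAVE STATUS\G` output on stdin and succeed
# only if both replication threads report "Yes".
replication_ok() {
  awk -F': *' '
    {
      key = $1; val = $2
      gsub(/^[ \t]+/, "", key)      # drop the right-alignment padding
      gsub(/[ \t\r]+$/, "", val)    # drop trailing whitespace or CR
    }
    key == "Slave_IO_Running"  { io  = val }
    key == "Slave_SQL_Running" { sql = val }
    END { exit !(io == "Yes" && sql == "Yes") }
  '
}

# Possible usage (no trailing ";" after \G):
#   kubectl exec mysql-1 -n p1 -c mysql -- mysql -e 'SHOW SLAVE STATUS\G' | replication_ok \
#     && echo "replication healthy"
```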

6.6 Test writing data

#Write data on the master node

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl exec -it mysql-0 -n p1 -- sh

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 440

Server version: 5.7.36-log MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show master status\G;

*************************** 1. row ***************************

File: mysql-0-bin.000003

Position: 154

Binlog_Do_DB:

Binlog_Ignore_DB:

Executed_Gtid_Set:

1 row in set (0.00 sec)

ERROR:

No query specified

mysql> create database mytestdb;

Query OK, 1 row affected (0.01 sec)

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| mysql |

| mytestdb |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.00 sec)

mysql> exit

Bye

# exit

#The slave node can read the data that was written

root@image-build:/opt/k8s-data/yaml/p1/mysql# kubectl exec -it mysql-2 -n p1 -- sh

Defaulted container "mysql" out of: mysql, xtrabackup, init-mysql (init), clone-mysql (init)

# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 215

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+------------------------+

| Database |

+------------------------+

| information_schema |

| mysql |

| mytestdb |

| performance_schema |

| sys |

| xtrabackup_backupfiles |

+------------------------+

6 rows in set (0.02 sec)

mysql>

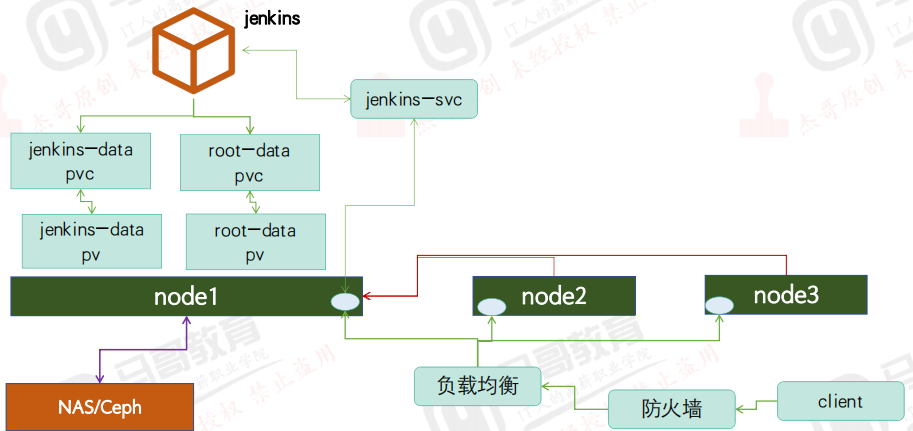

7 Business containerization case 7: Java application (Jenkins)

7.1 Build the Jenkins image

7.1.1 Prepare the files for building the image

7.1.2 Build and push the image

root@image-build:/opt/k8s-data/dockerfile/web/jdk/jenkins# bash build-command.sh

Image build is about to start, please wait!

3

2

1

[+] Building 26.1s (9/9) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 310B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base 0.1s

=> [auth] baseimages/centos-7.9.2009-jdk-8u212:pull token for harbor.idockerinaction.info 0.0s

=> CACHED [1/3] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a0c56ee5 0.0s

=> [internal] load build context 1.5s

manifest-sha256:eba622914f0a17ec0f98aa42d654ba9dcf4f2e3e47aa4ba0d4c392ea2bf492d1: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:039481ec64decf3c43cd49cae1ccfec2f1b7f13e91290ec9bb954d75d9712113: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.9 s total: 7.3 Ki (3.8 KiB/s)

Image pushed successfully!

root@image-build:/opt/k8s-data/dockerfile/web/jdk/jenkins#

7.1.3 Create a container from the image to test

root@image-build:/opt/k8s-data/dockerfile/web/jdk/jenkins# nerdctl run -it --rm -p 8080:8080 harbor.idockerinaction.info/p1/centos-7.9.2009-jdk-8u212-jenkins:v2.319.2

Running from: /apps/jenkins/jenkins.war

2023-06-07 04:55:39.852+0000 [id=1] INFO org.eclipse.jetty.util.log.Log#initialized: Logging initialized @846ms to org.eclipse.jetty.util.log.JavaUtilLog

2023-06-07 04:55:40.014+0000 [id=1] INFO winstone.Logger#logInternal: Beginning extraction from war file

2023-06-07 04:55:42.155+0000 [id=1] WARNING o.e.j.s.handler.ContextHandler#setContextPath: Empty contextPath

2023-06-07 04:55:42.275+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: jetty-9.4.43.v20210629; built: 2021-06-30T11:07:22.254Z; git: 526006ecfa3af7f1a27ef3a288e2bef7ea9dd7e8; jvm 1.8.0_212-b10

2023-06-07 04:55:42.732+0000 [id=1] INFO o.e.j.w.StandardDescriptorProcessor#visitServlet: NO JSP Support for /, did not find org.eclipse.jetty.jsp.JettyJspServlet

2023-06-07 04:55:42.799+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: DefaultSessionIdManager workerName=node0

2023-06-07 04:55:42.800+0000 [id=1] INFO o.e.j.s.s.DefaultSessionIdManager#doStart: No SessionScavenger set, using defaults

2023-06-07 04:55:42.801+0000 [id=1] INFO o.e.j.server.session.HouseKeeper#startScavenging: node0 Scavenging every 600000ms

2023-06-07 04:55:43.531+0000 [id=1] INFO hudson.WebAppMain#contextInitialized: Jenkins home directory: /root/.jenkins found at: $user.home/.jenkins

2023-06-07 04:55:43.709+0000 [id=1] INFO o.e.j.s.handler.ContextHandler#doStart: Started w.@6c451c9c{Jenkins v2.319.2,/,file:///apps/jenkins/jenkins-data/,AVAILABLE}{/apps/jenkins/jenkins-data}

2023-06-07 04:55:43.740+0000 [id=1] INFO o.e.j.server.AbstractConnector#doStart: Started ServerConnector@78452606{HTTP/1.1, (http/1.1)}{0.0.0.0:8080}

2023-06-07 04:55:43.741+0000 [id=1] INFO org.eclipse.jetty.server.Server#doStart: Started @4735ms

2023-06-07 04:55:43.742+0000 [id=21] INFO winstone.Logger#logInternal: Winstone Servlet Engine running: controlPort=disabled

2023-06-07 04:55:45.355+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Started initialization

2023-06-07 04:55:45.395+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: Listed all plugins

2023-06-07 04:55:47.102+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: Prepared all plugins

2023-06-07 04:55:47.110+0000 [id=28] INFO jenkins.InitReactorRunner$1#onAttained: Started all plugins

2023-06-07 04:55:47.140+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: Augmented all extensions

2023-06-07 04:55:48.473+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: System config loaded

2023-06-07 04:55:48.475+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: System config adapted

2023-06-07 04:55:48.476+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: Loaded all jobs

2023-06-07 04:55:48.477+0000 [id=27] INFO jenkins.InitReactorRunner$1#onAttained: Configuration for all jobs updated

2023-06-07 04:55:48.525+0000 [id=42] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Started Download metadata

2023-06-07 04:55:48.552+0000 [id=42] INFO hudson.util.Retrier#start: Attempt #1 to do the action check updates server

2023-06-07 04:55:49.058+0000 [id=26] INFO jenkins.install.SetupWizard#init:

*************************************************************

*************************************************************

*************************************************************

Jenkins initial setup is required. An admin user has been created and a password generated.

Please use the following password to proceed to installation:

adcc1c1677504f1da63a78ddd4d02ad8

This may also be found at: /root/.jenkins/secrets/initialAdminPassword

*************************************************************

*************************************************************

*************************************************************

2023-06-07 04:56:05.471+0000 [id=29] INFO jenkins.InitReactorRunner$1#onAttained: Completed initialization

2023-06-07 04:56:05.512+0000 [id=20] INFO hudson.WebAppMain$3#run: Jenkins is fully up and running

2023-06-07 04:56:06.520+0000 [id=42] INFO h.m.DownloadService$Downloadable#load: Obtained the updated data file for hudson.tasks.Maven.MavenInstaller

2023-06-07 04:56:06.522+0000 [id=42] INFO hudson.util.Retrier#start: Performed the action check updates server successfully at the attempt #1

2023-06-07 04:56:06.525+0000 [id=42] INFO hudson.model.AsyncPeriodicWork#lambda$doRun$1: Finished Download metadata. 17,988 ms

The web page loads and login works normally.
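The first login needs the generated admin password printed in the startup log above. When the log is long, it can be picked out mechanically. `initial_admin_password` is a hypothetical helper that assumes the password is the only line consisting of exactly 32 lowercase hex characters, as in the log shown here.

```shell
# Hypothetical helper (assumption, not from the original notes).
# initial_admin_password: read Jenkins startup log on stdin and print the
# first line that consists of exactly 32 lowercase hex characters.
initial_admin_password() {
  grep -Eo '^[0-9a-f]{32}$' | head -n 1
}

# Possible usage (the container name is a placeholder):
#   nerdctl logs <container> 2>&1 | initial_admin_password
# The log also names the file that holds the same value:
#   /root/.jenkins/secrets/initialAdminPassword
```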

7.2 Create the PV/PVC

root@image-build:/opt/k8s-data/yaml/p1/jenkins/pv# kubectl apply -f jenkins-persistentvolume.yaml

persistentvolume/jenkins-datadir-pv created

persistentvolume/jenkins-root-datadir-pv created

root@image-build:/opt/k8s-data/yaml/p1/jenkins/pv# kubectl apply -f jenkins-persistentvolumeclaim.yaml

persistentvolumeclaim/jenkins-datadir-pvc created

persistentvolumeclaim/jenkins-root-data-pvc created

7.3 Start the service from the Jenkins image

root@image-build:/opt/k8s-data/yaml/p1/jenkins# cat jenkins.yaml

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

  labels:

    app: p1-jenkins

  name: p1-jenkins-deployment

  namespace: p1

spec:

  replicas: 1

  selector:

    matchLabels:

      app: p1-jenkins

  template:

    metadata:

      labels:

        app: p1-jenkins

    spec:

      containers:

      - name: p1-jenkins-container

        image: harbor.idockerinaction.info/p1/centos-7.9.2009-jdk-8u212-jenkins:v2.319.2

        #imagePullPolicy: IfNotPresent

        imagePullPolicy: Always

        ports:

        - containerPort: 8080

          protocol: TCP

          name: http

        volumeMounts:

        - mountPath: "/apps/jenkins/jenkins-data/"

          name: jenkins-datadir-p1

        - mountPath: "/root/.jenkins"

          name: jenkins-root-datadir

      volumes:

      - name: jenkins-datadir-p1

        persistentVolumeClaim:

          claimName: jenkins-datadir-pvc

      - name: jenkins-root-datadir

        persistentVolumeClaim:

          claimName: jenkins-root-data-pvc

---

kind: Service

apiVersion: v1

metadata:

  labels:

    app: p1-jenkins

  name: p1-jenkins-service

  namespace: p1

spec:

  type: NodePort

  ports:

  - name: http

    port: 80

    protocol: TCP

    targetPort: 8080

    nodePort: 38080

  selector:

    app: p1-jenkins

root@image-build:/opt/k8s-data/yaml/p1/jenkins# kubectl apply -f jenkins.yaml

deployment.apps/p1-jenkins-deployment created

service/p1-jenkins-service created
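The Service above pins nodePort 38080. The kube-apiserver default for `--service-node-port-range` is 30000-32767, so the manifest is accepted only where that range has been widened; the `created` output implies this cluster is configured that way. The following sketch checks a port before applying a manifest elsewhere; `nodeport_in_range` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: succeeds when PORT lies inside the inclusive range MIN-MAX.
# The defaults mirror kube-apiserver's default --service-node-port-range.
# Usage: nodeport_in_range PORT [MIN] [MAX]
nodeport_in_range() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```

Called as `nodeport_in_range 38080`, it fails against the default range. Pass the cluster's real bounds as the second and third arguments to check against a widened range.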

7.4 Log in to Jenkins from the web

192.168.1.189 is the load balancer address.

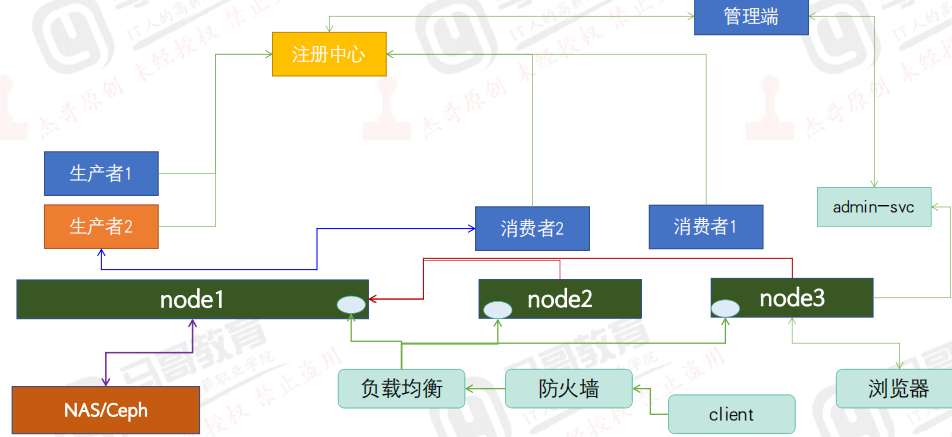

8 Business containerization case 8: Dubbo microservices

8.1 Build the images

8.1.1 Build the dubbo-provider image

#Note: update the ZooKeeper address in dubbo-demo-provider-2.1.5/conf/dubbo.properties

root@image-build:/opt/k8s-data/dockerfile/web/p1/dubbo/provider# bash build-command.sh

[+] Building 38.2s (14/14) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 533B 0.0s

manifest-sha256:b33327ff0658791fc42730c439644d50a35e91957c31a7afb2d208edac235c9e: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:5566f92ca4bf2e4011faffbe502c6fbb414e45625e9a1547be48d2d5ccea9d8e: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 2.2 s total: 9.3 Ki (4.2 KiB/s)

8.1.2 Build the dubbo-consumer image

#Note: update the ZooKeeper address in dubbo-demo-consumer-2.1.5/conf/dubbo.properties

root@image-build:/opt/k8s-data/dockerfile/web/p1/dubbo/consumer# bash build-command.sh

[+] Building 35.2s (13/13) FINISHED

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 531B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base 0.1s

=> CACHED [1/8] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a 0.0s

=> => resolve harbor.idockerinaction.info/baseimages/centos-7.9.2009-jdk-8u212:base@sha256:0946f4f75bdf90d8e0fab33e3f6aefb2a0c56ee5 0.0s

manifest-sha256:0a7d810cf79830b26f8baf17fbe91c1e043d727963b3091e168b9f9ce142e364: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:1ab09c660da242f78f2cbf7f165d7058b845cbb88350b2c8ae9cc624cbe8b758: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.9 s total: 9.3 Ki (4.9 KiB/s)

8.1.3 Build the dubboadmin image

#Note: update the ZooKeeper address in dubbo.properties

root@image-build:/opt/k8s-data/dockerfile/web/p1/dubbo/dubboadmin# bash build-command.sh v1

manifest-sha256:6d5275a744c297dee08a51ad53e4e5c0d0c3506b00783a72bd194b0552104aa3: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:8e48ec3795e48e9278b4e220feea7ad43597a19470b216d2de7a8df02fd78db0: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 2.5 s total: 11.9 K (4.8 KiB/s)

root@image-build:/opt/k8s-data/dockerfile/web/p1/dubbo/dubboadmin#
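All three images above need the ZooKeeper address in their dubbo.properties updated before the build. The following sketch scripts that edit. It assumes each file carries a line starting with `dubbo.registry.address=`, which is the usual Dubbo registry key; the notes do not show the files themselves. `set_registry_address` is a hypothetical helper name, and the address you pass must be your own ZooKeeper endpoint.

```shell
#!/bin/bash
# Hypothetical helper: rewrite the dubbo.registry.address line of a
# dubbo.properties file, leaving every other line untouched.
# Usage: set_registry_address <properties-file> <registry-address>
set_registry_address() {
  local file=$1 addr=$2 tmp
  tmp=$(mktemp) || return 1
  awk -v addr="$addr" '
    /^dubbo\.registry\.address=/ { print "dubbo.registry.address=" addr; next }
    { print }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Write back through the original file so its permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

awk is used instead of `sed -i` so that slashes and `|` characters in a registry address need no escaping.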

8.2 Start the microservices

8.2.1 Start the dubbo provider service

root@image-build:/opt/k8s-data/yaml/p1/dubbo/provider# kubectl apply -f provider.yaml

deployment.apps/p1-provider-deployment created

service/p1-provider-spec created

8.2.2 Start the dubbo consumer service

root@image-build:/opt/k8s-data/yaml/p1/dubbo/consumer# kubectl apply -f consumer.yaml

deployment.apps/p1-consumer-deployment created

service/p1-consumer-server created

8.2.3 Start dubboadmin

root@image-build:/opt/k8s-data/yaml/p1/dubbo/dubboadmin# kubectl apply -f dubboadmin.yaml

deployment.apps/p1-dubboadmin-deployment created

service/p1-dubboadmin-service created
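`kubectl apply` returns as soon as the objects are stored, before the Pods are ready. The following sketch waits for the three Deployments created above. The 120-second timeout is an arbitrary assumption, and `wait_for_deployments` is a hypothetical helper name.

```shell
#!/bin/bash
# Waits for each named Deployment in namespace p1 to finish rolling out.
# Stops at the first Deployment that does not become ready in time.
wait_for_deployments() {
  local name
  for name in "$@"; do
    kubectl rollout status "deployment/${name}" -n p1 --timeout=120s || return 1
  done
}
```

For this section the call would be `wait_for_deployments p1-provider-deployment p1-consumer-deployment p1-dubboadmin-deployment`.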

8.3 Verify that the services are running

8.3.1 Check the provider log

root@image-build:/opt/k8s-data/yaml/p1/dubbo# kubectl exec -it p1-provider-deployment-bd8954776-pfdd9 -n p1 -- bash

[root@p1-provider-deployment-bd8954776-pfdd9 /]# tail -f /apps/dubbo/provider/logs/stdout.log

[15:58:08] Hello world238, request from consumer: /10.200.182.169:36432

[15:58:10] Hello world239, request from consumer: /10.200.182.169:36432

[15:58:12] Hello world240, request from consumer: /10.200.182.169:36432

[15:58:14] Hello world241, request from consumer: /10.200.182.169:36432

[15:58:16] Hello world242, request from consumer: /10.200.182.169:36432

[15:58:18] Hello world243, request from consumer: /10.200.182.169:36432
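Each provider log line names the consumer that sent the request, so the log can be reduced to the set of distinct callers. The following sketch assumes the line format shown above; `consumer_ips` is a hypothetical helper name.

```shell
#!/bin/bash
# Reads provider log lines on stdin and prints each distinct consumer IP once.
consumer_ips() {
  awk -F'consumer: /' '/request from consumer/ { split($2, a, ":"); print a[1] }' | sort -u
}
```

A non-interactive way to feed it, instead of opening a shell in the Pod, is `kubectl exec deploy/p1-provider-deployment -n p1 -- tail -n 20 /apps/dubbo/provider/logs/stdout.log | consumer_ips`.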

8.3.2 Check the consumer log

root@image-build:/opt/k8s-data/yaml/p1/dubbo# kubectl exec -it p1-consumer-deployment-5d55cdc8b9-7v9mz -n p1 -- bash

[root@p1-consumer-deployment-5d55cdc8b9-7v9mz /]# cat /apps/dubbo/consumer/logs/stdout.log

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: UseCMSCompactAtFullCollection is deprecated and will likely be removed in a future release.

log4j:WARN No appenders could be found for logger (com.alibaba.dubbo.common.logger.LoggerFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

[15:50:11] Hello world0, response form provider: 10.200.81.29:20880

[15:50:13] Hello world1, response form provider: 10.200.81.29:20880

[15:50:15] Hello world2, response form provider: 10.200.81.29:20880

[15:50:17] Hello world3, response form provider: 10.200.81.29:20880

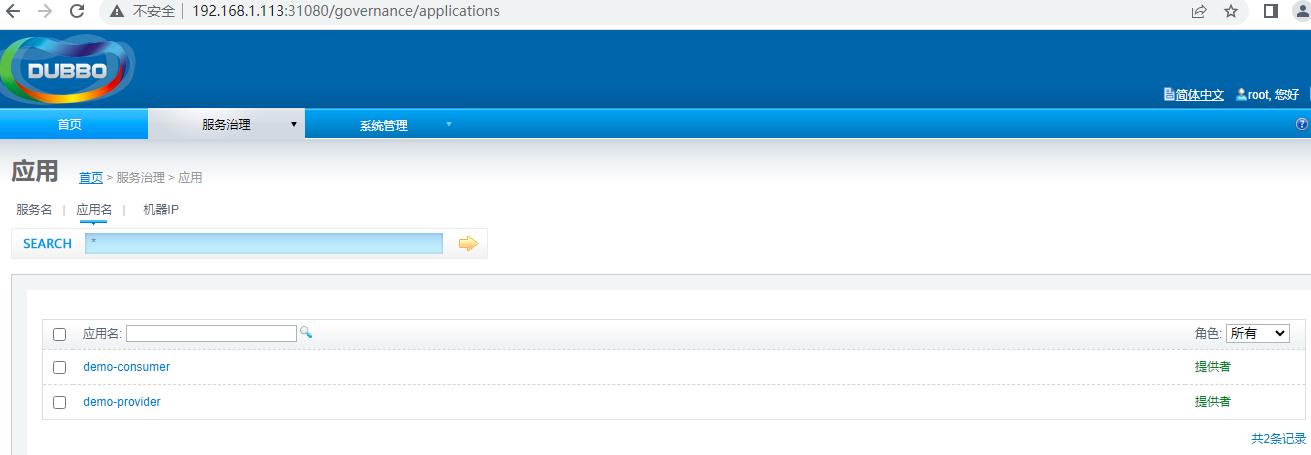

8.3.3 Access dubboadmin from the web

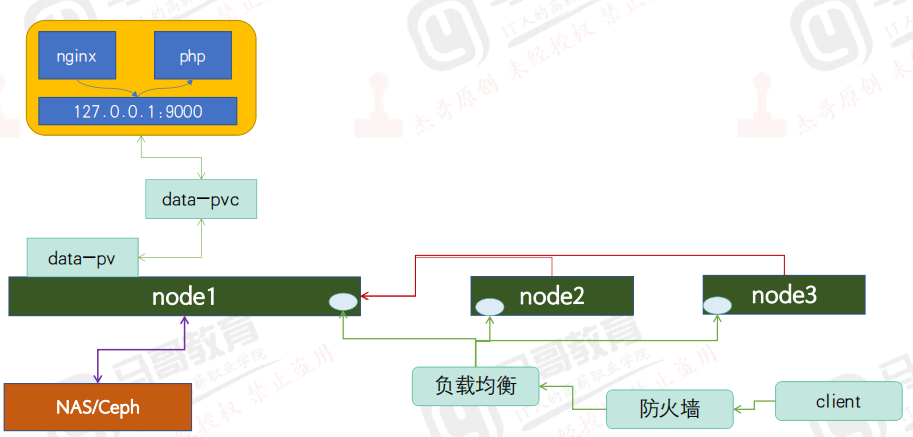

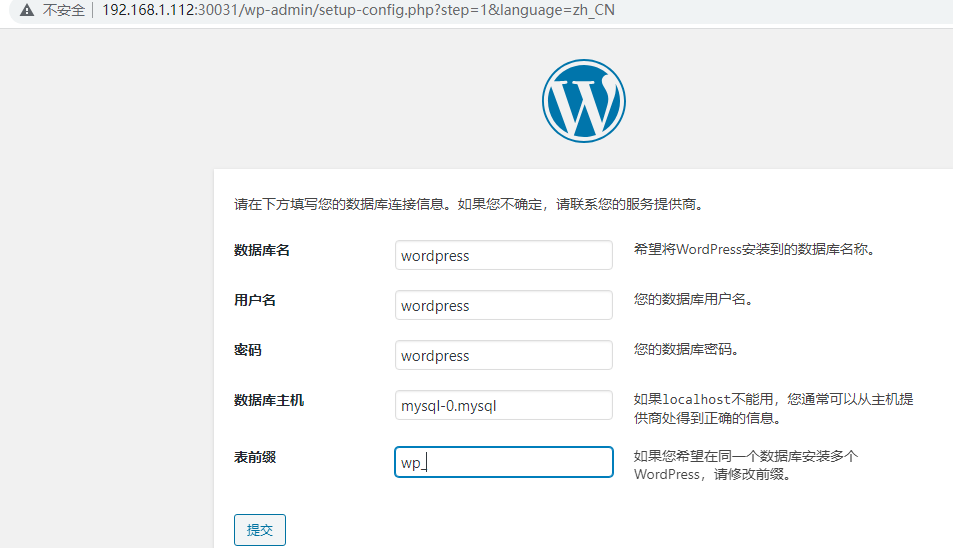

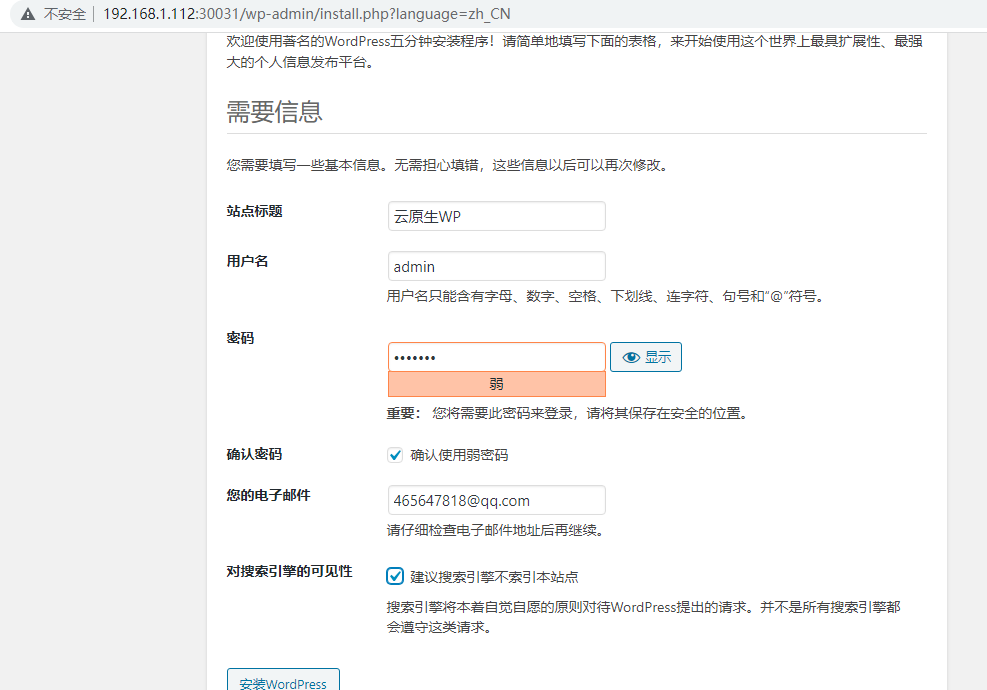

9 Business containerization case 9: WordPress

9.1 Build the business images

9.1.1 Build the wordpress-nginx business image

#Dockerfile

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/nginx# cat Dockerfile

FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base

ADD nginx.conf /apps/nginx/conf/nginx.conf

ADD run_nginx.sh /apps/nginx/sbin/run_nginx.sh

RUN mkdir -pv /home/nginx/wordpress

RUN chown -R nginx:nginx /home/nginx/wordpress/

EXPOSE 80 443

CMD ["/apps/nginx/sbin/run_nginx.sh"]

#build-command.sh

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/nginx# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.idockerinaction.info/p1/wordpress-nginx:${TAG} .

nerdctl build -t harbor.idockerinaction.info/p1/wordpress-nginx:${TAG} .

echo "Image build finished; pushing to the Harbor server"

sleep 1

nerdctl push harbor.idockerinaction.info/p1/wordpress-nginx:${TAG}

echo "Image push complete"

#Build and push

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/nginx# bash build-command.sh v1

[+] Building 11.3s (11/11) FINISHED

=> [internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 347B 0.0s

=> [internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [internal] load metadata for harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base 0.1s

=> [auth] baseimages/centos-7.9.2009-nginx-1.22.0:pull token for harbor.idockerinaction.info 0.0s

=> CACHED [1/5] FROM harbor.idockerinaction.info/baseimages/centos-7.9.2009-nginx-1.22.0:base@sha256:1503590a40fe836a495151e72b7a5b 0.0s

manifest-sha256:a95edb1d1f9eb4676235a34ced8f6d4b6f4fcdaef8faf841cf4d49e6c4110dbf: done |++++++++++++++++++++++++++++++++++++++|

config-sha256:3e389b136042b0baecabb7f5d1625124c8bd48b5e54471096f157fbbfb378d38: done |++++++++++++++++++++++++++++++++++++++|

elapsed: 1.6 s total: 7.6 Ki (4.7 KiB/s)

Image push complete

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/nginx#
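The build-command.sh above pushes and reports success even when the tag argument is missing or the build fails. The following sketch is a stricter variant for the wordpress-nginx image. It is an illustration rather than the script used in these notes, and `build_and_push` is a hypothetical function name.

```shell
#!/bin/bash
# Sketch of a stricter build-command.sh: refuse an empty tag and stop
# before the push if the build fails.
IMAGE=harbor.idockerinaction.info/p1/wordpress-nginx

build_and_push() {
  local tag=$1
  if [ -z "$tag" ]; then
    echo "usage: build-command.sh <tag>" >&2
    return 2
  fi
  nerdctl build -t "${IMAGE}:${tag}" . || return 1
  echo "Image build finished; pushing to the Harbor server"
  nerdctl push "${IMAGE}:${tag}" || return 1
  echo "Image push complete"
}
```

To use it as a script, end the file with `build_and_push "$1"`. Changing `IMAGE` adapts the same pattern to the wordpress-php image.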

9.1.2 Build the wordpress-php business image

#Dockerfile

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/php# cat Dockerfile

#PHP Base Image

FROM harbor.idockerinaction.info/baseimages/centos-base:7.9.2009

LABEL maintainer='jack <465647818@qq.com>'

RUN yum install -y https://mirrors.tuna.tsinghua.edu.cn/remi/enterprise/remi-release-7.rpm && yum install php56-php-fpm php56-php-mysql -y

ADD www.conf /opt/remi/php56/root/etc/php-fpm.d/www.conf

#RUN useradd nginx -u 2019

ADD run_php.sh /usr/local/bin/run_php.sh

EXPOSE 9000

CMD ["/usr/local/bin/run_php.sh"]

#build-command.sh

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/php# cat build-command.sh

#!/bin/bash

TAG=$1

#docker build -t harbor.idockerinaction.info/p1/wordpress-php-5.6:${TAG} .

nerdctl build -t harbor.idockerinaction.info/p1/wordpress-php-5.6:${TAG} .

echo "Image build finished; pushing to the Harbor server"

sleep 1

nerdctl push harbor.idockerinaction.info/p1/wordpress-php-5.6:${TAG}

echo "Image push complete"

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/php#

#Build and push

root@image-build:/opt/k8s-data/dockerfile/web/p1/wordpress/php# bash build-command.sh v1

9.2 Run the wordpress-app Deployment

root@image-build:/opt/k8s-data/yaml/p1/wordpress# cat wordpress.yaml

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-deployment
  namespace: p1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-app
  template:
    metadata:
      labels:
        app: wordpress-app
    spec:
      containers:
      - name: wordpress-app-nginx
        image: harbor.idockerinaction.info/p1/wordpress-nginx:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        volumeMounts:
        - name: wordpress
          mountPath: /home/nginx/wordpress
          readOnly: false